Using the Hadoop HDFS Java API

Preface

In the previous chapter, Hadoop Shell Commands and WordCount, we introduced the commonly used HDFS shell commands. This chapter covers the Hadoop HDFS Java API.

The code for this article is in my GitHub project https://github.com/SeanYanxml/bigdata/ . PS: if you find the project useful, feel free to give it a Star.

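Main Text - Simple Example (Upload/Download)

- pom.xml (importing the required JARs)

Before you can work with HDFS from Java, you need to import the corresponding JARs. With Maven this is very simple. Without Maven, download the JARs that hadoop-client depends on and add them to your project manually. Note: the JAR version should ideally not be lower than the Hadoop version you installed.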

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>com.yanxml</groupId>
        <artifactId>bigdata</artifactId>
        <version>0.0.1-SNAPSHOT</version>
    </parent>
    <artifactId>hadoop</artifactId>
    <dependencies>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.7.5</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/junit/junit -->
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
            <scope>test</scope>
        </dependency>
    </dependencies>
</project>
```

HdfsClientDemo.java

The example below has three parts: (1) obtaining the FileSystem, (2) uploading, and (3) downloading.

```java
package com.yanxml.bigdata.hadoop.hdfs.simple;

import java.io.IOException;
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Before;
import org.junit.Test;

/**
 * Hadoop HDFS Java API.
 *
 * - A client operating on HDFS always acts under a user identity.
 * - By default, the HDFS client API takes its identity from a JVM parameter: -DHADOOP_USER_NAME=hadoop
 * - The identity can also be passed in as an argument when constructing the client fs object.
 */
public class HdfsClientDemo {

    FileSystem fs = null;

    @Before
    public void init() {
        Configuration conf = new Configuration();
        // conf.set("fs.defaultFS", "hdfs://localhost:9000");

        // Obtain a client instance of the file system
        try {
            // fs = FileSystem.get(conf);
            // Pass the URI and the user name directly
            fs = FileSystem.get(new URI("hdfs://localhost:9000"), conf, "Sean");
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    // Test upload
    @Test
    public void testUpload() {
        try {
            // Thread.sleep(1000000);
            fs.copyFromLocalFile(new Path("/Users/Sean/Desktop/hello.sh"), new Path("/" + System.currentTimeMillis()));
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                fs.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    // Test download
    @Test
    public void testDownLoad() {
        try {
            fs.copyToLocalFile(new Path("/hello2019.sh"), new Path("/Users/Sean/Desktop/"));
        } catch (IllegalArgumentException | IOException e) {
            e.printStackTrace();
        } finally {
            // Close in finally so the client is released even if the download fails
            try {
                fs.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
}
```

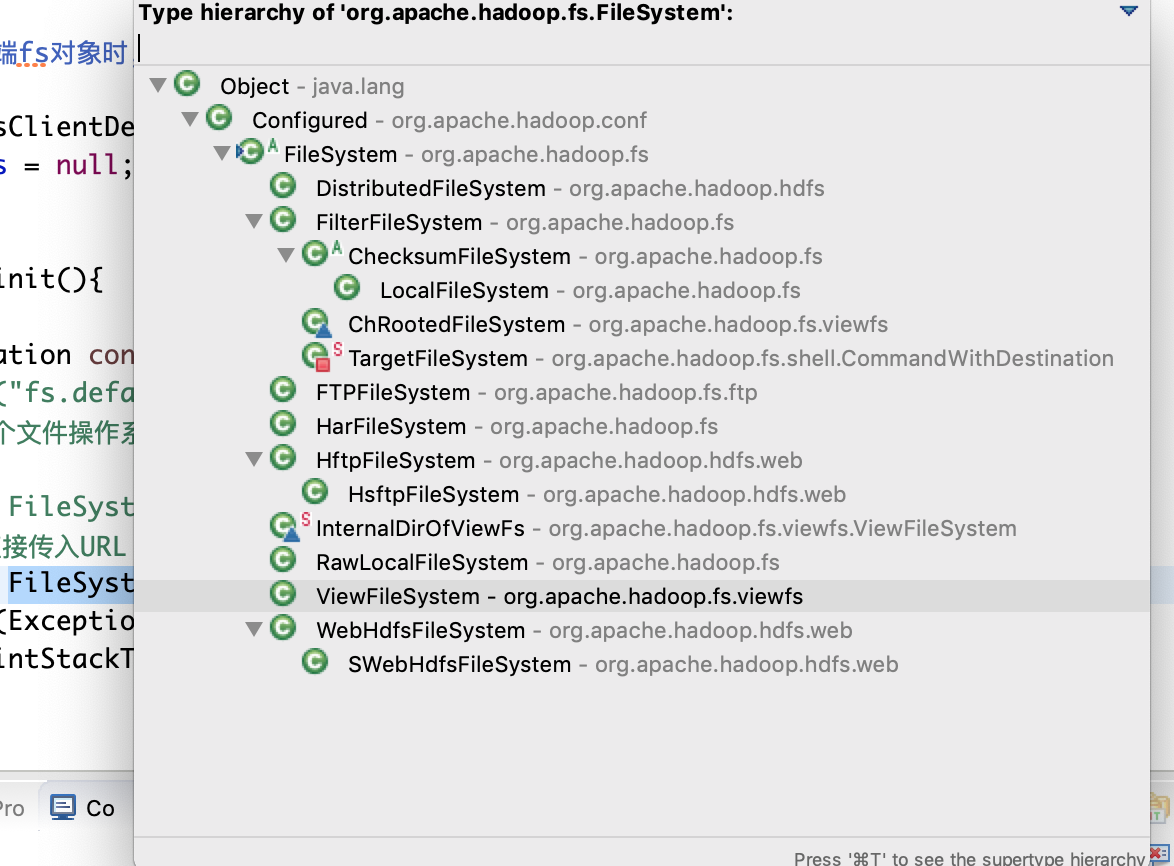

The type of file system in use.

Configuring the user

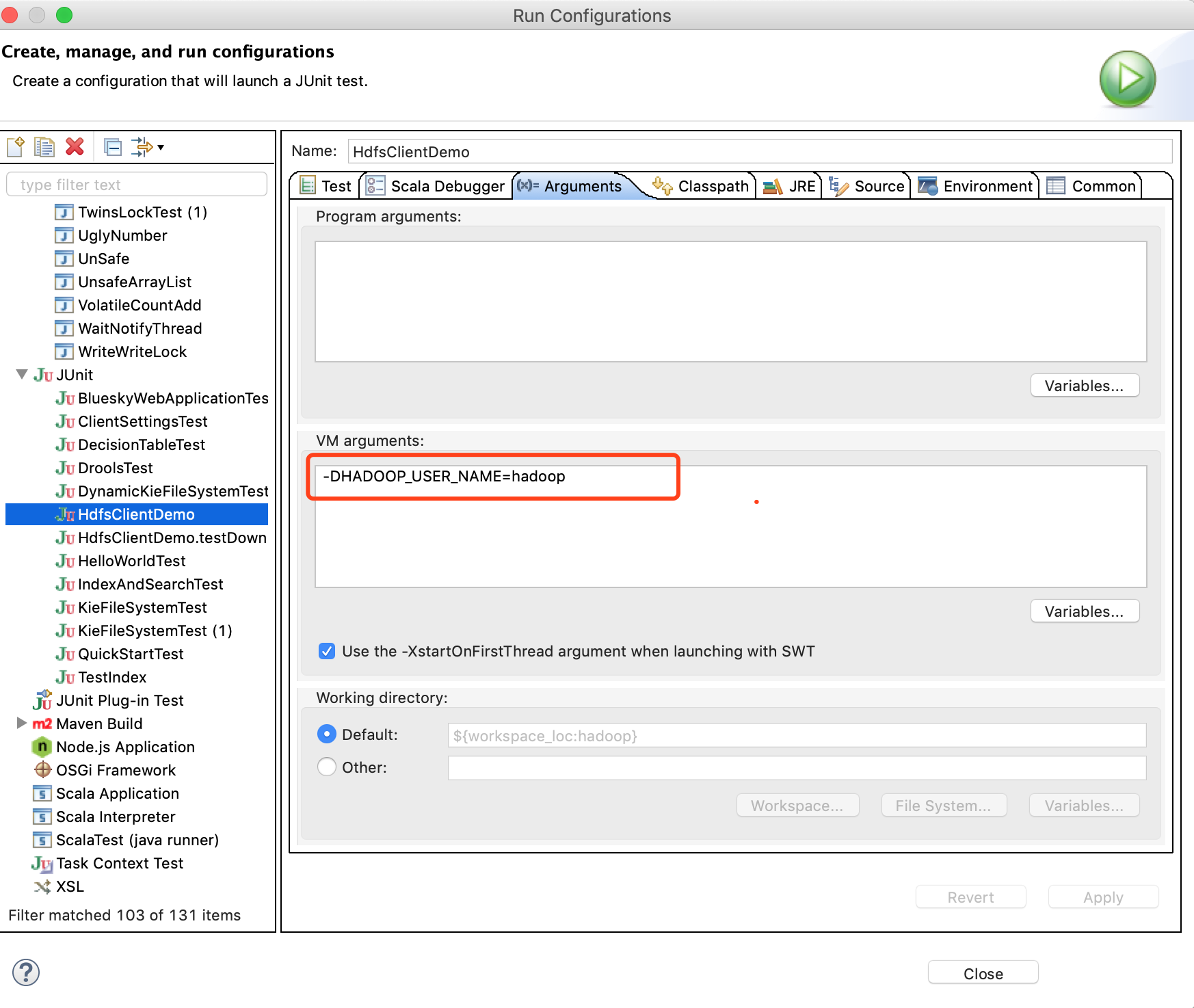

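Configuration method 1: pass it to the JVM at runtime, for example with the VM argument `-DHADOOP_USER_NAME=hadoop`.

Configuration method 2: pass it when constructing the client: `fs = FileSystem.get(new URI("hdfs://localhost:9000"), conf, "Sean");`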
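The two methods differ in where the identity comes from. The sketch below models the lookup order behind method 1, based on our reading of Hadoop 2.x's `UserGroupInformation` under simple (non-Kerberos) authentication. The `HADOOP_USER_NAME` environment variable is checked first, then the JVM system property of the same name, then the OS login user. `HadoopUserResolver` is a hypothetical helper for illustration, not a Hadoop class. Standing in for the OS user with the `user.name` property is a simplification. Method 2 skips this lookup, because `FileSystem.get(uri, conf, user)` runs the call as the user you pass in.

```java
import java.util.Map;
import java.util.Properties;

/**
 * Hypothetical helper, NOT a Hadoop class: models how the Hadoop 2.x client
 * picks its user under simple (non-Kerberos) authentication when no user is
 * passed to FileSystem.get(uri, conf, user).
 */
final class HadoopUserResolver {
    static final String KEY = "HADOOP_USER_NAME";

    private HadoopUserResolver() {}

    static String resolve(Map<String, String> env, Properties sysProps) {
        // 1. Environment variable HADOOP_USER_NAME
        String user = env.get(KEY);
        // 2. JVM system property, e.g. -DHADOOP_USER_NAME=hadoop
        if (user == null) {
            user = sysProps.getProperty(KEY);
        }
        // 3. Fall back to the OS user (simplified here to the user.name property)
        if (user == null) {
            user = sysProps.getProperty("user.name");
        }
        return user;
    }
}
```

Call it as `HadoopUserResolver.resolve(System.getenv(), System.getProperties())`. Under this model, `-DHADOOP_USER_NAME=hadoop` only takes effect when no `HADOOP_USER_NAME` environment variable is set.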

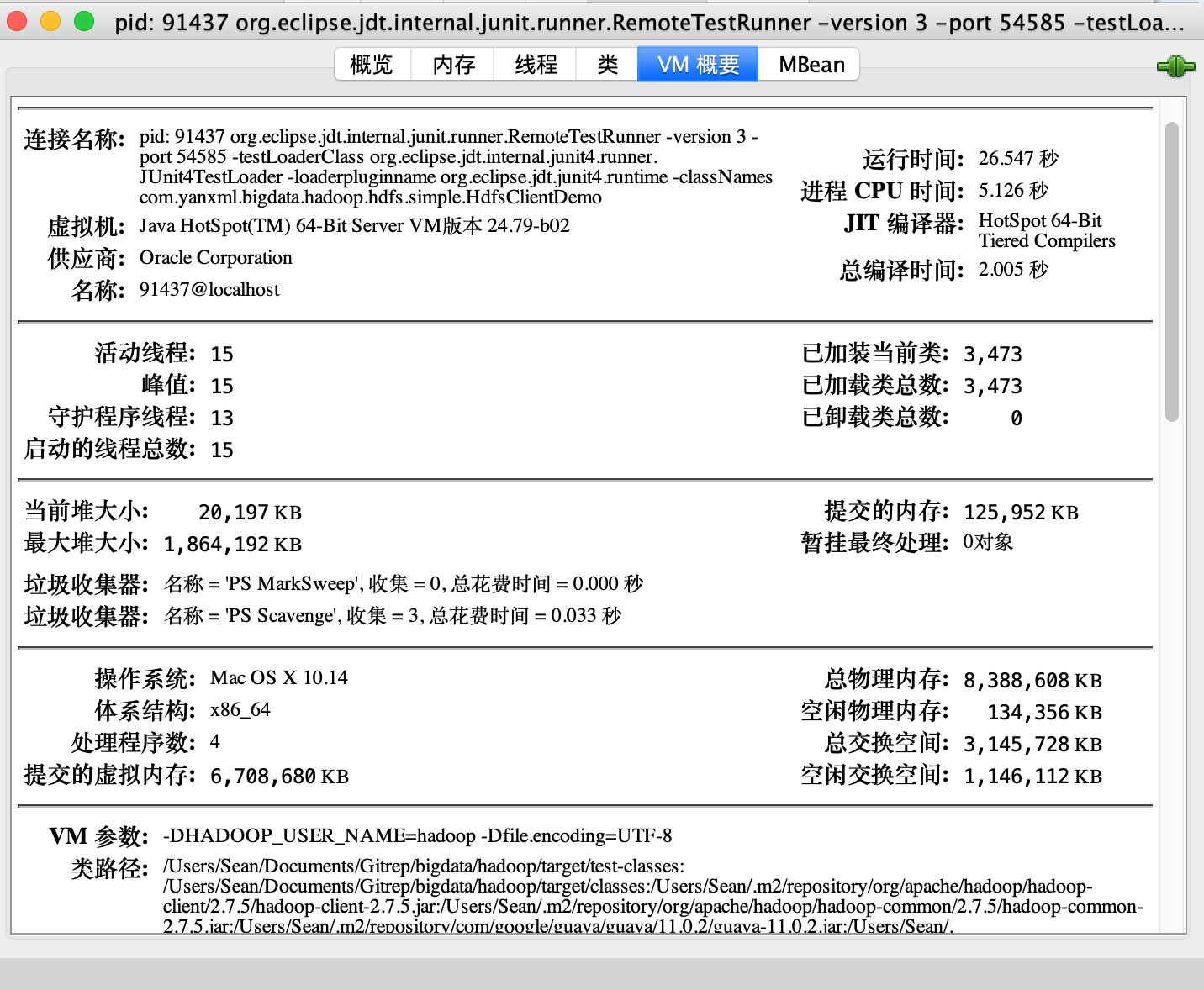

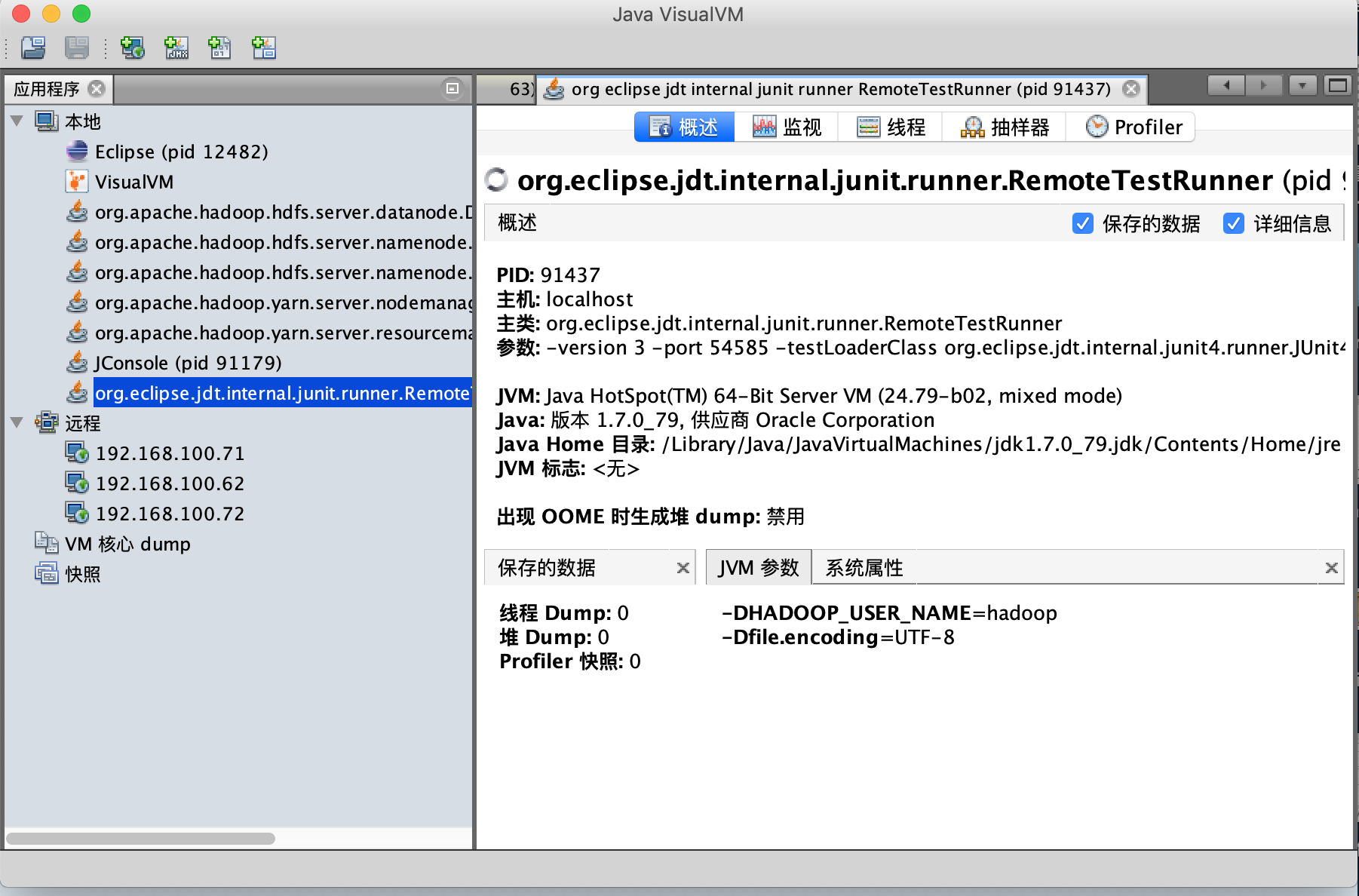

Tips

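Tip 1: After configuring the user, connect with jconsole to inspect the related parameters.

Tip 2: After configuring the user, connect with jvisualvm to inspect the related parameters.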

Tip 3: Permission denied exception. The upload succeeded after changing the configuration as described above.

```
org.apache.hadoop.security.AccessControlException: Permission denied: user=hadoop, access=WRITE, inode="/"
supergroup:drwxr-xr-x
	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:308)
	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:214)
	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)
	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1752)
	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1736)
	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:1719)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2536)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2471)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2355)
	at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:624)
	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:398)
	at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)
	at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
	at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)
	at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1754)
	at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2213)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
	at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:106)
	at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:73)
	at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:1841)
	at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1698)
	at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1633)
	at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:448)
	at org.apache.hadoop.hdfs.DistributedFileSystem$7.doCall(DistributedFileSystem.java:444)
	at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
	at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:459)
	at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:387)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:910)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:891)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:788)
	at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:365)
	at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:338)
	at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:1968)
	at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:1936)
	at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:1901)
	at com.yanxml.bigdata.hadoop.hdfs.simple.HdfsClientDemo.testUpload(HdfsClientDemo.java:43)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:50)
	at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)
	at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:47)
	at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)
	at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)
	at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:325)
	at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:78)
	at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:57)
	at org.junit.runners.ParentRunner$3.run(ParentRunner.java:290)
	at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:71)
	at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:288)
	at org.junit.runners.ParentRunner.access$000(ParentRunner.java:58)
	at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:268)
	at org.junit.runners.ParentRunner.run(ParentRunner.java:363)
	at org.eclipse.jdt.internal.junit4.runner.JUnit4TestReference.run(JUnit4TestReference.java:86)
	at org.eclipse.jdt.internal.junit.runner.TestExecution.run(TestExecution.java:38)
	at org.eclipse.jdt.internal.junit.runner.RemoteTestRunner.runTests(RemoteTestRunner.java:459)
	at org.eclipse.jdt.internal.junit.runner.RemoteTestRunner.runTests(RemoteTestRunner.java:678)
	at org.eclipse.jdt.internal.junit.runner.RemoteTestRunner.run(RemoteTestRunner.java:382)
	at org.eclipse.jdt.internal.junit.runner.RemoteTestRunner.main(RemoteTestRunner.java:192)
Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.AccessControlException): Permission denied: user=hadoop, access=WRITE, inode="/"
supergroup:drwxr-xr-x
	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:308)
	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:214)
	at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)
	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1752)
	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1736)
	at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkAncestorAccess(FSDirectory.java:1719)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInternal(FSNamesystem.java:2536)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2471)
	at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2355)
	at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:624)
	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:398)
	at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)
	at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)
	at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)
	at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1754)
	at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2213)
	at org.apache.hadoop.ipc.Client.call(Client.java:1476)
	at org.apache.hadoop.ipc.Client.call(Client.java:1413)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)
	at com.sun.proxy.$Proxy14.create(Unknown Source)
	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.create(ClientNamenodeProtocolTranslatorPB.java:296)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:191)
	at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)
	at com.sun.proxy.$Proxy15.create(Unknown Source)
	at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:1836)
	... 40 more
```
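Why is the write refused? Creating a file under `/` requires WRITE access on `/` itself, which is why `checkAncestorAccess` appears in the trace. HDFS applies POSIX-style checks. If you are the owner, the owner bits apply. Otherwise, if you are in the file's group, the group bits apply. Otherwise, the "other" bits apply. In `drwxr-xr-x`, only the owner has `w`, so any user other than the owner, such as `user=hadoop` here, is rejected.

The helper below sketches that decision. `PermissionCheck` is hypothetical and not a Hadoop API. It ignores ACLs and the HDFS superuser, which bypasses permission checks entirely.

```java
/**
 * Hypothetical helper, NOT part of the Hadoop API: decides whether a POSIX-style
 * permission string such as "drwxr-xr-x" grants WRITE to a user, using the
 * owner -> group -> other precedence of HDFS permission checks.
 */
final class PermissionCheck {
    // Position of the 'w' flag for owner, group and other in "drwxr-xr-x"
    private static final int OWNER_W = 2;
    private static final int GROUP_W = 5;
    private static final int OTHER_W = 8;

    private PermissionCheck() {}

    static boolean canWrite(String perm, boolean isOwner, boolean inGroup) {
        if (perm.length() != 10) {
            throw new IllegalArgumentException("expected 10 chars like drwxr-xr-x: " + perm);
        }
        // Only the most specific class applies: owner bits, else group bits, else other bits
        int idx = isOwner ? OWNER_W : (inGroup ? GROUP_W : OTHER_W);
        return perm.charAt(idx) == 'w';
    }
}
```

For example, `PermissionCheck.canWrite("drwxr-xr-x", false, false)` is `false`, which matches the `user=hadoop, access=WRITE` denial above. Connecting as the owner of `/`, as in configuration method 2, is one way around it.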

Tip 4: On Windows, you may need to configure the corresponding Path variable before this runs successfully.

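Main Text - Basic API (Common APIs)

The previous section showed basic usage of the HDFS Java API.

Create, delete, query, and update

Getting the configuration (initialization)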

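```java
// Assumes the test class declares the fields: Configuration conf; FileSystem fs;
@Before
public void init() {
    conf = new Configuration();
    // Obtain a client instance of the file system
    try {
        // Pass the URI and the user name directly
        fs = FileSystem.get(new URI("hdfs://localhost:9000"), conf, "Sean");
    } catch (Exception e) {
        e.printStackTrace();
    }
}
```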

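Creating a directory

```java
// Create directories recursively: missing parent directories are created too
@Test
public void testMkdir() throws IllegalArgumentException, IOException {
    boolean flag = fs.mkdirs(new Path("/sean2019/aaa/bbb"));
}
```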

Creating a file (uploading a file)

```java
// Upload a file
@Test
public void testUpload() {
    try {
        fs.copyFromLocalFile(new Path("/Users/Sean/Desktop/hello.sh"), new Path("/" + System.currentTimeMillis()));
    } catch (Exception e) {
        e.printStackTrace();
    } finally {
        try {
            fs.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

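Deleting (directories/files)

```java
// Recursive delete
@Test
public void testDelete() throws IllegalArgumentException, IOException {
    // recursive = true: delete the directory and everything under it
    boolean flag = fs.delete(new Path("/sean2019/aaa"), true);
    System.out.println(flag);
}
```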

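Downloading a file

```java
// Download a file
@Test
public void testDownLoad() {
    try {
        fs.copyToLocalFile(new Path("/hello2019.sh"), new Path("/Users/Sean/Desktop/"));
    } catch (IllegalArgumentException | IOException e) {
        e.printStackTrace();
    } finally {
        // Close in finally so the client is released even if the download fails
        try {
            fs.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```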

Listing a directory - iterator (recursive)

Note that the iterator also gives you each file's block information: the offset, length, and hosts of every block.

```java
// Recursively list all files under the given directory (directories themselves are not returned)
// Returns an iterator rather than an array
@Test
public void testLs() throws FileNotFoundException, IllegalArgumentException, IOException {
    RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);
    while (listFiles.hasNext()) {
        LocatedFileStatus file = listFiles.next();
        System.out.println("Name: " + file.getPath().getName());
        System.out.println("Permission: " + file.getPermission());
        System.out.println("BlockSize: " + file.getBlockSize());
        System.out.println("Owner: " + file.getOwner());
        System.out.println("Replication: " + file.getReplication());
        BlockLocation[] blockLocations = file.getBlockLocations();
        for (BlockLocation blockLocation : blockLocations) {
            // Offset of this block within the file
            System.out.println("Block Offset: " + blockLocation.getOffset());
            System.out.println("Block Length: " + blockLocation.getLength());
            // DataNodes holding a replica of this block
            for (String data : blockLocation.getHosts()) {
                System.out.println("Block Host:" + data);
            }
        }
    }
}
```
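The `getOffset()` and `getLength()` values printed above follow from how HDFS splits a file into fixed-size blocks. Every block is `getBlockSize()` bytes long except possibly the last one. The sketch below computes the expected (offset, length) pairs. `BlockLayout` is a hypothetical helper, not a Hadoop API. The example assumes a 128 MB block size, which is the Hadoop 2.x default for `dfs.blocksize`. Your cluster may be configured differently.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper, NOT a Hadoop API: computes the (offset, length) pairs
 * that a file of fileLength bytes occupies when split into blocks of blockSize.
 */
final class BlockLayout {
    private BlockLayout() {}

    static List<long[]> blocks(long fileLength, long blockSize) {
        if (blockSize <= 0) {
            throw new IllegalArgumentException("blockSize must be > 0");
        }
        List<long[]> result = new ArrayList<>();
        for (long offset = 0; offset < fileLength; offset += blockSize) {
            // Every block is full-sized except possibly the last one
            result.add(new long[] {offset, Math.min(blockSize, fileLength - offset)});
        }
        return result;
    }
}
```

For a 300 MB file and a 128 MB block size, this gives blocks at offsets 0, 128 MB, and 256 MB, and the last block is 44 MB long. HDFS then stores each block `getReplication()` times, which is why `getHosts()` can list several DataNodes per block.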

Listing a directory - array (single level)

```java
@Test
public void testLs2() throws FileNotFoundException, IllegalArgumentException, IOException {
    // listStatus returns only the direct children (files and directories) of the path
    FileStatus[] fileStatus = fs.listStatus(new Path("/"));
    for (FileStatus file : fileStatus) {
        System.out.println("Name:" + file.getPath().getName());
        System.out.println(file.isFile() ? "file" : "directory");
    }
}
```

Stream mode (upload/download/specified range)

Stream upload

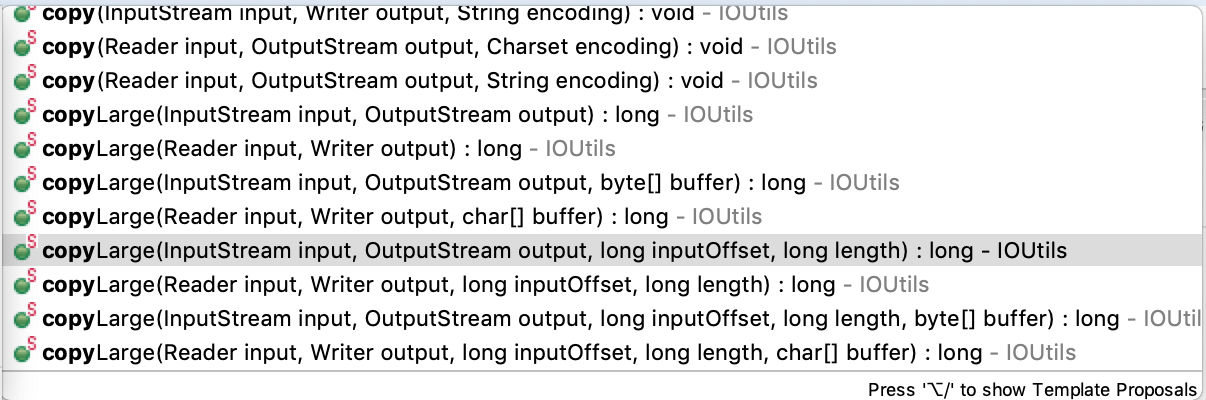

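```java
/**
 * Upload a file to HDFS through streams.
 */
@Test
public void testUpload() throws IllegalArgumentException, IOException {
    // try-with-resources closes both streams; the HDFS file is only finalized once its output stream is closed
    try (FSDataOutputStream outputStream = fs.create(new Path("/sean2019/hellok.sh"), true); // HDFS output stream, overwrite = true
         FileInputStream localInputStream = new FileInputStream("/Users/Sean/Desktop/hello.sh")) { // local input stream
        // org.apache.commons.io.IOUtils (commons-io)
        IOUtils.copy(localInputStream, outputStream);
    }
}
```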

Stream download

```java
/**
 * Download a file through streams.
 * @throws IOException
 * @throws IllegalArgumentException
 */
// @Test
public void testWrite() throws IllegalArgumentException, IOException {
    // try-with-resources closes the HDFS input stream and the local file
    try (FSDataInputStream inputStream = fs.open(new Path("/sean2019/hellok.sh"));
         FileOutputStream localOutPutStream = new FileOutputStream("/Users/Sean/Desktop/helloG.sh")) {
        IOUtils.copy(inputStream, localOutPutStream);
    }
}
```

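Stream mode with a specified range

`inputStream.seek(offset)` sets the position where reading starts. Commons IO's `IOUtils.copyLarge(input, output, inputOffset, length)` lets you specify both a start offset and the maximum number of bytes to copy.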
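To make the offset/length semantics concrete, here is a standard-library sketch of what a bounded copy does. It moves to the start offset, then copies at most `length` bytes, and stops early if the file ends first. `RangeCopy.copyRange` is a hypothetical helper. `FSDataInputStream` is an `InputStream`, so you could pass it in directly. With HDFS, though, you would normally call `seek(offset)` instead of using the `skip()` loop.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

/** Hypothetical helper: copy at most length bytes starting at offset. */
final class RangeCopy {
    private RangeCopy() {}

    static long copyRange(InputStream in, OutputStream out, long offset, long length)
            throws IOException {
        // skip() may skip fewer bytes than requested, so loop until the offset is reached
        long toSkip = offset;
        while (toSkip > 0) {
            long skipped = in.skip(toSkip);
            if (skipped <= 0) {
                if (in.read() == -1) {
                    return 0; // stream ended before the start offset
                }
                skipped = 1; // consumed one byte via read()
            }
            toSkip -= skipped;
        }
        byte[] buf = new byte[4096];
        long copied = 0;
        while (copied < length) {
            int n = in.read(buf, 0, (int) Math.min(buf.length, length - copied));
            if (n == -1) {
                break; // stream ended before length bytes were copied
            }
            out.write(buf, 0, n);
            copied += n;
        }
        return copied;
    }
}
```

Calling `copyRange(in, out, 12, Long.MAX_VALUE)` copies everything after the first 12 bytes. That matches what the `testRandomAccess` example does with `seek(12)`.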

```java
/**
 * Read the file starting from a specified position.
 * @throws IOException
 * @throws IllegalArgumentException
 */
@Test
public void testRandomAccess() throws IllegalArgumentException, IOException {
    try (FSDataInputStream inputStream = fs.open(new Path("/sean2019/hellok.sh"));
         FileOutputStream localOutPutStream = new FileOutputStream("/Users/Sean/Desktop/helloG.sh.part2")) {
        // seek() sets the start position: skip the first 12 bytes
        inputStream.seek(12);
        IOUtils.copy(inputStream, localOutPutStream);
    }
}
```

Printing to the console (System.out stream)

```java
/**
 * Print the file contents to the screen.
 * @throws IOException
 * @throws IllegalArgumentException
 */
@Test
public void testConsole() throws IllegalArgumentException, IOException {
    // Close only the HDFS stream; System.out must stay open
    try (FSDataInputStream inputStream = fs.open(new Path("/sean2019/hellok.sh"))) {
        IOUtils.copy(inputStream, System.out);
    }
}
```

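```java
// Read a fixed-length range: start at 60 MB and copy at most 60 MB
// (inputStream is an FSDataInputStream, localOutPutStream a local output stream)
long start = 60L * 1024 * 1024;
long length = 60L * 1024 * 1024;
inputStream.seek(start);
// inputOffset = 0 because seek() already positioned the stream
IOUtils.copyLarge(inputStream, localOutPutStream, 0, length);
```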
