Building an MNIST handwritten-digit recognition network with tf.keras

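Contents

Building an MNIST handwritten-digit recognition network with tf.keras

1. Building a sequential model with tf.keras.Sequential

1.1 The tf.keras.Sequential model

1.2 Building the MNIST handwritten-digit recognition network (sequential model)

1.3 Complete training code

2. Building advanced models

2.1 The functional API

2.2 Building the MNIST handwritten-digit recognition network (functional API)

2.3 Complete training code

3. Advanced tf.keras: callbacks with tf.keras.callbacks.Callback

4. Advanced tf.keras: custom layers with tf.keras.layers.Layer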

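1. Building a sequential model with tf.keras.Sequential

1.1 The tf.keras.Sequential model

In Keras, you build models by assembling layers. A model is (usually) a graph of layers. The most common type of model is a stack of layers: the tf.keras.Sequential model. To build a simple fully connected network (i.e. a multilayer perceptron), run the following code: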

    from tensorflow import keras

    model = keras.Sequential()
    # Adds a densely-connected layer with 64 units to the model:
    model.add(keras.layers.Dense(64, activation='relu'))
    # Add another:
    model.add(keras.layers.Dense(64, activation='relu'))
    # Add a softmax layer with 10 output units:
    model.add(keras.layers.Dense(10, activation='softmax'))

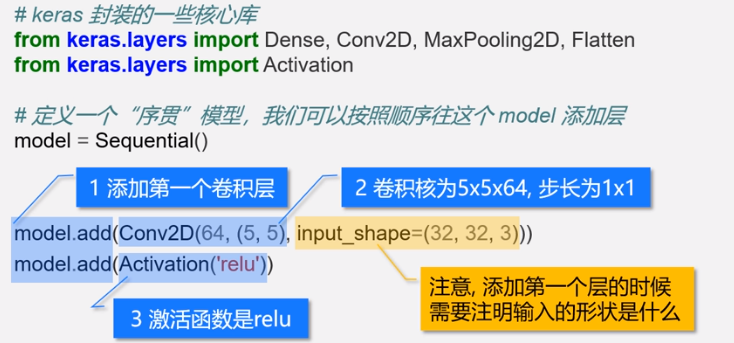

Illustrated example:

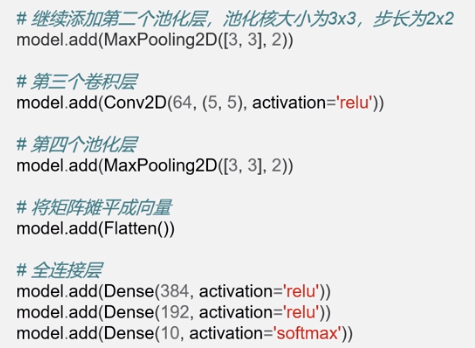

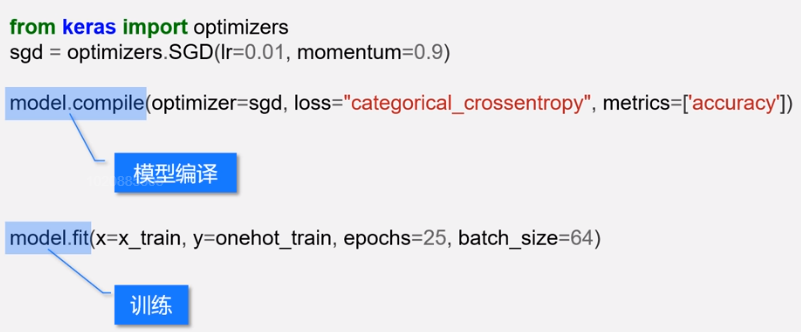
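The last layer of the small network in section 1.1 uses a softmax activation, which turns 10 raw scores into class probabilities that sum to 1. The following is a minimal NumPy sketch of that computation, not Keras's own implementation; the helper name `softmax` and the sample scores are made up for illustration.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax over the last axis (illustrative helper, not a Keras API)."""
    # Subtracting the row maximum avoids overflow in exp() without changing the result.
    shifted = scores - np.max(scores, axis=-1, keepdims=True)
    exp_scores = np.exp(shifted)
    return exp_scores / np.sum(exp_scores, axis=-1, keepdims=True)


# Hypothetical raw outputs of a 10-unit layer for a batch of two samples.
logits = np.array([[2.0, 1.0, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 5.0]])
probs = softmax(logits)
print(probs.sum(axis=-1))     # each row sums to 1 (up to floating-point error)
print(probs.argmax(axis=-1))  # index of the most likely class per row
```

Taking the argmax of each row is how the training scripts in this post turn predictions into a digit.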

1.2 Building the MNIST handwritten-digit recognition network (sequential model)

    def mnist_cnn(input_shape):
        '''
        Build a CNN model.
        :param input_shape: shape of the input
        :return: the model
        '''
        model = keras.Sequential()
        model.add(keras.layers.Conv2D(filters=32, kernel_size=5, strides=(1, 1),
                                      padding='same', activation=tf.nn.relu,
                                      input_shape=input_shape))
        model.add(keras.layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
        model.add(keras.layers.Conv2D(filters=64, kernel_size=3, strides=(1, 1),
                                      padding='same', activation=tf.nn.relu))
        model.add(keras.layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
        model.add(keras.layers.Dropout(0.25))
        model.add(keras.layers.Flatten())
        model.add(keras.layers.Dense(units=128, activation=tf.nn.relu))
        model.add(keras.layers.Dropout(0.5))
        model.add(keras.layers.Dense(units=10, activation=tf.nn.softmax))
        return model
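To see how the spatial size shrinks through the CNN above, the standard output-size rules for 'same' and 'valid' padding can be traced by hand. The sketch below does this in plain Python for a 28x28 input; the helper name `conv_or_pool_output_size` is hypothetical and only mirrors the arithmetic, it does not call Keras.

```python
import math


def conv_or_pool_output_size(size, kernel, stride, padding):
    """Output size of a Conv2D/MaxPool2D layer along one spatial dimension."""
    if padding == 'same':
        # 'same' pads the input so that only the stride reduces the size.
        return math.ceil(size / stride)
    if padding == 'valid':
        # 'valid' uses no padding, so the window must fit entirely inside the input.
        return (size - kernel) // stride + 1
    raise ValueError("padding must be 'same' or 'valid'")


size = 28
size = conv_or_pool_output_size(size, kernel=5, stride=1, padding='same')   # first Conv2D
size = conv_or_pool_output_size(size, kernel=2, stride=2, padding='valid')  # first MaxPool2D
size = conv_or_pool_output_size(size, kernel=3, stride=1, padding='same')   # second Conv2D
size = conv_or_pool_output_size(size, kernel=2, stride=2, padding='valid')  # second MaxPool2D
flattened = size * size * 64  # 64 feature maps come out of the second convolution
print(size, flattened)
```

The flattened length is the number of inputs seen by the first Dense layer.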

1.3 Complete training code

    # -*-coding: utf-8 -*-
    """
        @Project: tensorflow-yolov3
        @File   : keras_mnist.py
        @Author : panjq
        @E-mail : pan_jinquan@163.com
        @Date   : 2019-01-31 09:30:12
    """
    import tensorflow as tf
    from tensorflow import keras
    import matplotlib.pyplot as plt
    import numpy as np

    mnist = keras.datasets.mnist


    def get_train_val(mnist_path):
        # MNIST download URL: https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz
        (train_images, train_labels), (test_images, test_labels) = mnist.load_data(mnist_path)
        print("train_images nums:{}".format(len(train_images)))
        print("test_images nums:{}".format(len(test_images)))
        return train_images, train_labels, test_images, test_labels


    def show_mnist(images, labels):
        # Show the first 25 images in a 5x5 grid, each labelled with its digit.
        for i in range(25):
            plt.subplot(5, 5, i + 1)
            plt.xticks([])
            plt.yticks([])
            plt.grid(False)
            plt.imshow(images[i], cmap=plt.cm.gray)
            plt.xlabel(str(labels[i]))
        plt.show()


    def one_hot(labels):
        # Convert integer labels (0~9) to one-hot vectors of length 10.
        onehot_labels = np.zeros(shape=[len(labels), 10])
        for i in range(len(labels)):
            index = labels[i]
            onehot_labels[i][index] = 1
        return onehot_labels


    def mnist_net(input_shape):
        '''
        Build a simple fully connected network:
        an input layer with 28x28=784 input nodes,
        a hidden layer with 120 nodes,
        an output layer with 10 nodes.
        :param input_shape: shape of the input
        :return: the model
        '''
        model = keras.Sequential()
        model.add(keras.layers.Flatten(input_shape=input_shape))           # input layer
        model.add(keras.layers.Dense(units=120, activation=tf.nn.relu))    # hidden layer
        model.add(keras.layers.Dense(units=10, activation=tf.nn.softmax))  # output layer
        return model


    def mnist_cnn(input_shape):
        '''
        Build a CNN model.
        :param input_shape: shape of the input
        :return: the model
        '''
        model = keras.Sequential()
        model.add(keras.layers.Conv2D(filters=32, kernel_size=5, strides=(1, 1),
                                      padding='same', activation=tf.nn.relu,
                                      input_shape=input_shape))
        model.add(keras.layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
        model.add(keras.layers.Conv2D(filters=64, kernel_size=3, strides=(1, 1),
                                      padding='same', activation=tf.nn.relu))
        model.add(keras.layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
        model.add(keras.layers.Dropout(0.25))
        model.add(keras.layers.Flatten())
        model.add(keras.layers.Dense(units=128, activation=tf.nn.relu))
        model.add(keras.layers.Dropout(0.5))
        model.add(keras.layers.Dense(units=10, activation=tf.nn.softmax))
        return model


    def trian_model(train_images, train_labels, test_images, test_labels):
        # re-scale pixel values to the range 0~1.0
        train_images = train_images / 255.0
        test_images = test_images / 255.0
        # expand the MNIST data to four dimensions: (N, 28, 28, 1)
        train_images = np.expand_dims(train_images, axis=3)
        test_images = np.expand_dims(test_images, axis=3)
        print("train_images :{}".format(train_images.shape))
        print("test_images :{}".format(test_images.shape))

        train_labels = one_hot(train_labels)
        test_labels = one_hot(test_labels)

        # build the model
        # model = mnist_net(input_shape=(28,28))
        model = mnist_cnn(input_shape=(28, 28, 1))
        # tf.train.AdamOptimizer is the TF 1.x API; on TF 2.x use keras.optimizers.Adam() instead.
        model.compile(optimizer=tf.train.AdamOptimizer(),
                      loss="categorical_crossentropy", metrics=['accuracy'])
        model.fit(x=train_images, y=train_labels, epochs=5)

        test_loss, test_acc = model.evaluate(x=test_images, y=test_labels)
        print("Test Accuracy %.2f" % test_acc)

        # run predictions and count the correct ones
        cnt = 0
        predictions = model.predict(test_images)
        for i in range(len(test_images)):
            target = np.argmax(predictions[i])
            label = np.argmax(test_labels[i])
            if target == label:
                cnt += 1
        print("correct prediction of total : %.2f" % (cnt / len(test_images)))

        model.save('mnist-model.h5')


    if __name__ == "__main__":
        mnist_path = 'D:/MyGit/tensorflow-yolov3/data/mnist.npz'
        train_images, train_labels, test_images, test_labels = get_train_val(mnist_path)
        # show_mnist(train_images, train_labels)
        trian_model(train_images, train_labels, test_images, test_labels)
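The `one_hot` helper in the script above fills the label matrix with a Python loop. As a possible alternative, the same encoding can be sketched in one vectorized NumPy step by indexing rows of an identity matrix. The name `one_hot_vectorized` and the sample labels are made up for illustration; `keras.utils.to_categorical` is another option that should give an equivalent result.

```python
import numpy as np


def one_hot_vectorized(labels, num_classes=10):
    """One-hot encode integer labels by picking rows of an identity matrix."""
    return np.eye(num_classes)[np.asarray(labels)]


labels = np.array([5, 0, 4, 1, 9])  # made-up sample labels
encoded = one_hot_vectorized(labels)
print(encoded.shape)           # one row per label, one column per class
print(encoded.argmax(axis=1))  # argmax recovers the original integer labels
```

This is also why the prediction loop can compare `np.argmax(predictions[i])` with `np.argmax(test_labels[i])`: the argmax of a one-hot row is the original digit.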

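2. Building advanced models

2.1 The functional API

The tf.keras.Sequential model is a simple stack of layers and cannot represent arbitrary models. Use the Keras functional API to build complex model topologies, such as:

Multi-input models,

Multi-output models,

Models with shared layers (the same layer called several times),

Models with non-sequential data flows (e.g. residual connections).

A model built with the functional API has the following characteristics:

A layer instance is callable and returns a tensor.

Input tensors and output tensors are used to define a tf.keras.Model instance.

The model is trained just like a Sequential model.

The following example uses the functional API to build a simple fully connected network: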

    import tensorflow as tf
    from tensorflow import keras

    inputs = keras.Input(shape=(32,))  # Returns a placeholder tensor

    # A layer instance is callable on a tensor, and returns a tensor.
    x = keras.layers.Dense(64, activation='relu')(inputs)
    x = keras.layers.Dense(64, activation='relu')(x)
    predictions = keras.layers.Dense(10, activation='softmax')(x)

    # Instantiate the model given inputs and outputs.
    model = keras.Model(inputs=inputs, outputs=predictions)

    # The compile step specifies the training configuration.
    # tf.train.RMSPropOptimizer is the TF 1.x API; on TF 2.x use keras.optimizers.RMSprop(0.001) instead.
    model.compile(optimizer=tf.train.RMSPropOptimizer(0.001),
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])

    # Trains for 5 epochs.
    # `data` and `labels` are not defined in this snippet; they are assumed to be
    # NumPy arrays of shape (N, 32) and (N, 10).
    model.fit(data, labels, batch_size=32, epochs=5)
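The ideas of a callable layer, a shared layer, and a non-sequential data flow can be illustrated without Keras at all. The toy NumPy sketch below is only an analogy under stated assumptions: `TinyDense` is a made-up class, not a Keras layer, and it operates on concrete arrays rather than symbolic tensors.

```python
import numpy as np


class TinyDense:
    """Toy stand-in for a dense layer: calling it on an array returns an array."""

    def __init__(self, in_dim, out_dim, rng):
        self.kernel = rng.normal(scale=0.1, size=(in_dim, out_dim))
        self.bias = np.zeros(out_dim)

    def __call__(self, x):
        # Affine transform followed by a ReLU activation.
        return np.maximum(x @ self.kernel + self.bias, 0.0)


rng = np.random.default_rng(0)
inputs = rng.normal(size=(4, 32))  # a batch of 4 samples with 32 features

shared = TinyDense(32, 32, rng)    # one layer instance ...
h = shared(inputs)
h = shared(h)                      # ... called twice, so both calls share weights
outputs = h + inputs               # skip connection: a non-sequential data flow
print(outputs.shape)
```

A plain stack of layers cannot express the last line, because the input is consumed in two places; this is the kind of topology the functional API is meant for.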

2.2 Building the MNIST handwritten-digit recognition network (functional API)

    # Relies on the imports and constants (SEED, IMAGE_SIZE, NUM_CHANNELS, NUM_LABELS)
    # defined in the complete training code in section 2.3.
    def mnist_cnn():
        """Define the MNIST model with Keras."""
        # define a truncated_normal initializer
        tn_init = keras.initializers.TruncatedNormal(mean=0.0, stddev=0.1, seed=SEED)
        # define a constant initializer
        const_init = keras.initializers.Constant(value=0.1)
        # define a L2 regularizer
        l2_reg = keras.regularizers.l2(5e-4)

        """
        Input placeholder. If an input image has shape (28, 28, 1), a batch of
        16 images has shape (16, 28, 28, 1). When defining Input, the shape
        argument is the shape of a single image, (28, 28, 1), rather than the
        shape of a batch, (16, 28, 28, 1).
        """
        # inputs: shape(None, 28, 28, 1)
        inputs = layers.Input(shape=(IMAGE_SIZE, IMAGE_SIZE, NUM_CHANNELS), dtype=tf.float32)

        """
        Convolution; the output shape is (None, 28, 28, 32). The first argument
        of Conv2D is the number of filters; the second is the kernel size.
        Unlike low-level TensorFlow (tf.nn.conv2d), where the filter tensor has
        shape (height, width, in_channels, out_channels), e.g. (5, 5, 1, 32),
        Keras only needs the window size (5, 5): the input-channel dimension is
        inferred from the input shape (1 for an input of shape (None, 28, 28, 1)).
        The third argument is strides, which likewise lists only the spatial
        strides. See the official Keras documentation for the other arguments.
        """
        # conv1: shape(None, 28, 28, 32)
        conv1 = layers.Conv2D(32, (5, 5), strides=(1, 1), padding='same',
                              activation='relu', use_bias=True,
                              kernel_initializer=tn_init, name='conv1')(inputs)

        # pool1: shape(None, 14, 14, 32)
        pool1 = layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='same', name='pool1')(conv1)

        # conv2: shape(None, 14, 14, 64)
        conv2 = layers.Conv2D(64, (5, 5), strides=(1, 1), padding='same',
                              activation='relu', use_bias=True,
                              kernel_initializer=tn_init,
                              bias_initializer=const_init, name='conv2')(pool1)

        # pool2: shape(None, 7, 7, 64)
        pool2 = layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='same', name='pool2')(conv2)

        # flatten: shape(None, 3136)
        flatten = layers.Flatten(name='flatten')(pool2)

        # fc1: shape(None, 512)
        fc1 = layers.Dense(512, activation='relu', use_bias=True,
                           kernel_initializer=tn_init, bias_initializer=const_init,
                           kernel_regularizer=l2_reg, bias_regularizer=l2_reg,
                           name='fc1')(flatten)

        # dropout
        dropout1 = layers.Dropout(0.5, seed=SEED)(fc1)

        # dense2: shape(None, 10)
        fc2 = layers.Dense(NUM_LABELS, activation=None, use_bias=True,
                           kernel_initializer=tn_init, bias_initializer=const_init, name='fc2',
                           kernel_regularizer=l2_reg, bias_regularizer=l2_reg)(dropout1)

        # softmax: shape(None, 10)
        softmax = layers.Softmax(name='softmax')(fc2)

        # make new model
        model = keras.Model(inputs=inputs, outputs=softmax, name='mnist')
        return model
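The shapes annotated in the model above also determine how many trainable parameters each layer holds. The plain-Python sketch below works the numbers out by hand, assuming every convolution and dense layer uses a bias (as `use_bias=True` suggests); the helper names `conv2d_params` and `dense_params` are hypothetical. The pooling, flatten, dropout, and softmax layers contribute no parameters.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Kernel weights plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Weight matrix plus one bias per output unit."""
    return in_units * out_units + out_units


counts = {
    'conv1': conv2d_params(5, 5, 1, 32),    # input has 1 channel
    'conv2': conv2d_params(5, 5, 32, 64),   # input has 32 channels from conv1
    'fc1': dense_params(7 * 7 * 64, 512),   # flattened pool2 output has 3136 values
    'fc2': dense_params(512, 10),
}
total = sum(counts.values())
for name, count in counts.items():
    print(name, count)
print('total', total)
```

These figures can be compared against the per-layer counts that `model.summary()` prints for the same architecture.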

2.3 Complete training code

    # -*-coding: utf-8 -*-
    """
        @Project: tensorflow-yolov3
        @File   : mnist_cnn2.py
        @Author : panjq
        @E-mail : pan_jinquan@163.com
        @Date   : 2019-01-31 10:59:33
    """
    # import keras and keras.layers from tensorflow
    from tensorflow import keras
    from tensorflow.keras import layers
    import tensorflow as tf
    import matplotlib.pyplot as plt
    import numpy as np

    mnist = keras.datasets.mnist

    # image size
    IMAGE_SIZE = 28
    # number of image channels; 1 means a grayscale image
    NUM_CHANNELS = 1
    # range of pixel values
    PIXEL_DEPTH = 255
    # number of classes; the digits 0~9 give 10 classes
    NUM_LABELS = 10
    # size of the validation set
    VALIDATION_SIZE = 5000
    # random seed; set to None for a random seed
    SEED = 66478
    # batch size
    BATCH_SIZE = 64
    # number of training epochs
    NUM_EPOCHS = 10
    EVAL_BATCH_SIZE = 64


    def mnist_cnn():
        """Define the MNIST model with Keras."""
        # define a truncated_normal initializer
        tn_init = keras.initializers.TruncatedNormal(mean=0.0, stddev=0.1, seed=SEED)
        # define a constant initializer
        const_init = keras.initializers.Constant(value=0.1)
        # define a L2 regularizer
        l2_reg = keras.regularizers.l2(5e-4)

        """
        Input placeholder. If an input image has shape (28, 28, 1), a batch of
        16 images has shape (16, 28, 28, 1). When defining Input, the shape
        argument is the shape of a single image, (28, 28, 1), rather than the
        shape of a batch, (16, 28, 28, 1).
        """
        # inputs: shape(None, 28, 28, 1)
        inputs = layers.Input(shape=(IMAGE_SIZE, IMAGE_SIZE, NUM_CHANNELS), dtype=tf.float32)

        """
        Convolution; the output shape is (None, 28, 28, 32). The first argument
        of Conv2D is the number of filters; the second is the kernel size.
        Unlike low-level TensorFlow (tf.nn.conv2d), where the filter tensor has
        shape (height, width, in_channels, out_channels), e.g. (5, 5, 1, 32),
        Keras only needs the window size (5, 5): the input-channel dimension is
        inferred from the input shape (1 for an input of shape (None, 28, 28, 1)).
        The third argument is strides, which likewise lists only the spatial
        strides. See the official Keras documentation for the other arguments.
        """
        # conv1: shape(None, 28, 28, 32)
        conv1 = layers.Conv2D(32, (5, 5), strides=(1, 1), padding='same',
                              activation='relu', use_bias=True,
                              kernel_initializer=tn_init, name='conv1')(inputs)

        # pool1: shape(None, 14, 14, 32)
        pool1 = layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='same', name='pool1')(conv1)

        # conv2: shape(None, 14, 14, 64)
        conv2 = layers.Conv2D(64, (5, 5), strides=(1, 1), padding='same',
                              activation='relu', use_bias=True,
                              kernel_initializer=tn_init,
                              bias_initializer=const_init, name='conv2')(pool1)

        # pool2: shape(None, 7, 7, 64)
        pool2 = layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2), padding='same', name='pool2')(conv2)

        # flatten: shape(None, 3136)
        flatten = layers.Flatten(name='flatten')(pool2)

        # fc1: shape(None, 512)
        fc1 = layers.Dense(512, activation='relu', use_bias=True,
                           kernel_initializer=tn_init, bias_initializer=const_init,
                           kernel_regularizer=l2_reg, bias_regularizer=l2_reg,
                           name='fc1')(flatten)

        # dropout
        dropout1 = layers.Dropout(0.5, seed=SEED)(fc1)

        # dense2: shape(None, 10)
        fc2 = layers.Dense(NUM_LABELS, activation=None, use_bias=True,
                           kernel_initializer=tn_init, bias_initializer=const_init, name='fc2',
                           kernel_regularizer=l2_reg, bias_regularizer=l2_reg)(dropout1)

        # softmax: shape(None, 10)
        softmax = layers.Softmax(name='softmax')(fc2)

        # make new model
        model = keras.Model(inputs=inputs, outputs=softmax, name='mnist')
        return model


    def get_train_val(mnist_path):
        # MNIST download URL: https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz
        (train_images, train_labels), (test_images, test_labels) = mnist.load_data(mnist_path)
        print("train_images nums:{}".format(len(train_images)))
        print("test_images nums:{}".format(len(test_images)))
        return train_images, train_labels, test_images, test_labels


    def show_mnist(images, labels):
        # Show the first 25 images in a 5x5 grid, each labelled with its digit.
        for i in range(25):
            plt.subplot(5, 5, i + 1)
            plt.xticks([])
            plt.yticks([])
            plt.grid(False)
            plt.imshow(images[i], cmap=plt.cm.gray)
            plt.xlabel(str(labels[i]))
        plt.show()


    def one_hot(labels):
        # Convert integer labels (0~9) to one-hot vectors of length 10.
        onehot_labels = np.zeros(shape=[len(labels), 10])
        for i in range(len(labels)):
            index = labels[i]
            onehot_labels[i][index] = 1
        return onehot_labels


    def trian_model(train_images, train_labels, test_images, test_labels):
        # re-scale pixel values to the range 0~1.0
        train_images = train_images / 255.0
        test_images = test_images / 255.0
        # expand the MNIST data to four dimensions: (N, 28, 28, 1)
        train_images = np.expand_dims(train_images, axis=3)
        test_images = np.expand_dims(test_images, axis=3)
        print("train_images :{}".format(train_images.shape))
        print("test_images :{}".format(test_images.shape))

        train_labels = one_hot(train_labels)
        test_labels = one_hot(test_labels)

        # build the model
        model = mnist_cnn()
        # tf.train.AdamOptimizer is the TF 1.x API; on TF 2.x use keras.optimizers.Adam() instead.
        model.compile(optimizer=tf.train.AdamOptimizer(),
                      loss="categorical_crossentropy", metrics=['accuracy'])
        model.fit(x=train_images, y=train_labels, epochs=5)

        test_loss, test_acc = model.evaluate(x=test_images, y=test_labels)
        print("Test Accuracy %.2f" % test_acc)

        # run predictions and count the correct ones
        cnt = 0
        predictions = model.predict(test_images)
        for i in range(len(test_images)):
            target = np.argmax(predictions[i])
            label = np.argmax(test_labels[i])
            if target == label:
                cnt += 1
        print("correct prediction of total : %.2f" % (cnt / len(test_images)))

        model.save('mnist-model.h5')


    if __name__ == "__main__":
        mnist_path = 'D:/MyGit/tensorflow-yolov3/data/mnist.npz'
        train_images, train_labels, test_images, test_labels = get_train_val(mnist_path)
        # show_mnist(train_images, train_labels)
        trian_model(train_images, train_labels, test_images, test_labels)

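3. Advanced tf.keras: callbacks with tf.keras.callbacks.Callback

A callback is an object passed to a model to customize and extend its behavior during training. You can write your own custom callback or use the built-in tf.keras.callbacks, which include:

tf.keras.callbacks.ModelCheckpoint: saves checkpoints of the model at regular intervals.

tf.keras.callbacks.LearningRateScheduler: dynamically changes the learning rate.

tf.keras.callbacks.EarlyStopping: interrupts training when validation performance stops improving.

tf.keras.callbacks.TensorBoard: monitors the model's behavior with TensorBoard.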
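As an illustration of what "dynamically changing the learning rate" can mean, the sketch below defines a step-decay schedule as a function of the epoch index, which is the kind of function LearningRateScheduler expects. The factory name `make_step_decay` and all numeric values are assumptions chosen for the example, not settings from this post.

```python
def make_step_decay(initial_lr=0.01, drop=0.5, epochs_per_drop=2):
    """Build a schedule that multiplies the rate by `drop` every `epochs_per_drop` epochs."""

    def schedule(epoch):
        # `epoch` is the 0-based epoch index.
        return initial_lr * drop ** (epoch // epochs_per_drop)

    return schedule


schedule = make_step_decay()
for epoch in range(6):
    print(epoch, schedule(epoch))
```

A schedule of this shape could then be wrapped as `keras.callbacks.LearningRateScheduler(schedule)` and added to the callbacks list.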

To use a tf.keras.callbacks.Callback, pass it to the model's fit method:

    callbacks = [
        # Interrupt training if `val_loss` stops improving for over 2 epochs
        keras.callbacks.EarlyStopping(patience=2, monitor='val_loss'),
        # Write TensorBoard logs to `./logs` directory
        keras.callbacks.TensorBoard(log_dir='./logs')
    ]
    model.fit(data, labels, batch_size=32, epochs=5, callbacks=callbacks,
              validation_data=(val_data, val_targets))
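The patience rule used by EarlyStopping can be sketched in a few lines of plain Python. This is a simplified model of the behavior under stated assumptions (a loss that should decrease, no minimum-delta threshold), not the library's code; the function name `early_stopping_epoch` and the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the 0-based epoch at which training would stop, or None if it never stops."""
    best = float('inf')
    wait = 0  # number of consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


history = [1.00, 0.80, 0.70, 0.75, 0.72, 0.60]  # made-up validation losses
print(early_stopping_epoch(history))
```

With these values the loss fails to beat 0.70 for two epochs in a row, so training stops before the later improvement to 0.60 is ever seen; a larger patience trades training time for tolerance to such plateaus.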

The MNIST training code below adds a callback and prints a summary of the model:

    def trian_model(train_images, train_labels, test_images, test_labels):
        # re-scale pixel values to the range 0~1.0
        train_images = train_images / 255.0
        test_images = test_images / 255.0
        # expand the MNIST data to four dimensions: (N, 28, 28, 1)
        train_images = np.expand_dims(train_images, axis=3)
        test_images = np.expand_dims(test_images, axis=3)
        print("train_images :{}".format(train_images.shape))
        print("test_images :{}".format(test_images.shape))

        train_labels = one_hot(train_labels)
        test_labels = one_hot(test_labels)

        # build the model
        model = mnist_cnn()

        # print a summary of the model
        model.summary()

        # compile model: the first argument is the optimizer; the second is the loss,
        # 'categorical_crossentropy' because this is a multi-class classification
        # problem; the third is metrics, the list of metrics to monitor during training.
        # tf.train.AdamOptimizer is the TF 1.x API; on TF 2.x use keras.optimizers.Adam() instead.
        model.compile(optimizer=tf.train.AdamOptimizer(),
                      loss="categorical_crossentropy", metrics=['accuracy'])
        # model.compile(optimizer=keras.optimizers.SGD(lr=0.01, momentum=0.9, decay=1e-5),
        #               loss='categorical_crossentropy', metrics=['accuracy'])

        # setting callbacks
        callbacks = [
            # write TensorBoard logs to the directory './logs'
            keras.callbacks.TensorBoard(log_dir='./logs'),
        ]

        # start training
        model.fit(train_images, train_labels, batch_size=BATCH_SIZE, epochs=5,
                  validation_data=(test_images, test_labels), callbacks=callbacks)

        # evaluate
        print('', 'evaluating on test sets...')
        loss, accuracy = model.evaluate(test_images, test_labels)
        print('test loss:', loss)
        print('test Accuracy:', accuracy)

        # save model
        model.save('mnist-model.h5')
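The training function above uses 'categorical_crossentropy' because the targets are one-hot vectors over several classes. As a rough NumPy sketch of what that loss measures (the mean negative log-probability assigned to the true class), assuming predictions are already softmax outputs; the function below and its sample values are illustrative, not Keras's implementation.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean cross-entropy between one-hot targets and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # keep log() away from zero
    per_sample = -np.sum(y_true * np.log(y_pred), axis=-1)
    return float(np.mean(per_sample))


y_true = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]])    # one-hot labels
y_pred = np.array([[0.1, 0.8, 0.1], [0.5, 0.25, 0.25]])  # made-up softmax outputs
loss = categorical_crossentropy(y_true, y_pred)
print(loss)
```

Only the probability of the correct class enters each sample's term, so a confident correct prediction (0.8) costs less than a hesitant one (0.5).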

4. Advanced tf.keras: custom layers with tf.keras.layers.Layer

Create a custom layer by subclassing tf.keras.layers.Layer and implementing the following methods:

build: creates the weights of the layer. Add weights with the add_weight method.

call: defines the forward pass.

compute_output_shape: specifies how to compute the output shape of the layer given the input shape.

Optionally, a layer can be serialized by implementing the get_config method and the from_config class method.

Here is an example of a custom layer that implements a matmul of its input with a kernel matrix:

    class MyLayer(keras.layers.Layer):

        def __init__(self, output_dim, **kwargs):
            self.output_dim = output_dim
            super(MyLayer, self).__init__(**kwargs)

        def build(self, input_shape):
            shape = tf.TensorShape((input_shape[1], self.output_dim))
            # Create a trainable weight variable for this layer.
            self.kernel = self.add_weight(name='kernel',
                                          shape=shape,
                                          initializer='uniform',
                                          trainable=True)
            # Be sure to call this at the end
            super(MyLayer, self).build(input_shape)

        def call(self, inputs):
            return tf.matmul(inputs, self.kernel)

        def compute_output_shape(self, input_shape):
            shape = tf.TensorShape(input_shape).as_list()
            shape[-1] = self.output_dim
            return tf.TensorShape(shape)

        def get_config(self):
            base_config = super(MyLayer, self).get_config()
            base_config['output_dim'] = self.output_dim
            # Return the config so that from_config can rebuild the layer.
            return base_config

        @classmethod
        def from_config(cls, config):
            return cls(**config)


    # Create a model using the custom layer
    model = keras.Sequential([MyLayer(10),
                              keras.layers.Activation('softmax')])

    # The compile step specifies the training configuration
    # tf.train.RMSPropOptimizer is the TF 1.x API; on TF 2.x use keras.optimizers.RMSprop(0.001) instead.
    model.compile(optimizer=tf.train.RMSPropOptimizer(0.001),
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])

    # Trains for 5 epochs.
    # `data` and `targets` are not defined in this snippet; they are assumed to be
    # NumPy arrays of shape (N, input_dim) and (N, 10).
    model.fit(data, targets, batch_size=32, epochs=5)
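To make the shapes in the custom layer concrete, here is a small NumPy sketch of the same computation outside Keras. The batch size, input dimension, and the range of the random kernel are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, input_dim, output_dim = 4, 32, 10  # made-up sizes

inputs = rng.uniform(size=(batch, input_dim))
# Plays the role of the kernel created in build(): shape (input_shape[1], output_dim).
kernel = rng.uniform(-0.05, 0.05, size=(input_dim, output_dim))

# What call() computes via tf.matmul: one output row per input row.
outputs = inputs @ kernel

# Same rule as compute_output_shape(): copy the input shape, replace the last dimension.
expected_shape = list(inputs.shape)
expected_shape[-1] = output_dim
print(outputs.shape, tuple(expected_shape))
```

Because the kernel's first dimension comes from the input shape, the weights can only be created once that shape is known, which is why they are defined in build rather than in `__init__`.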
