Gradient Backpropagation in Fully Connected Neural Networks

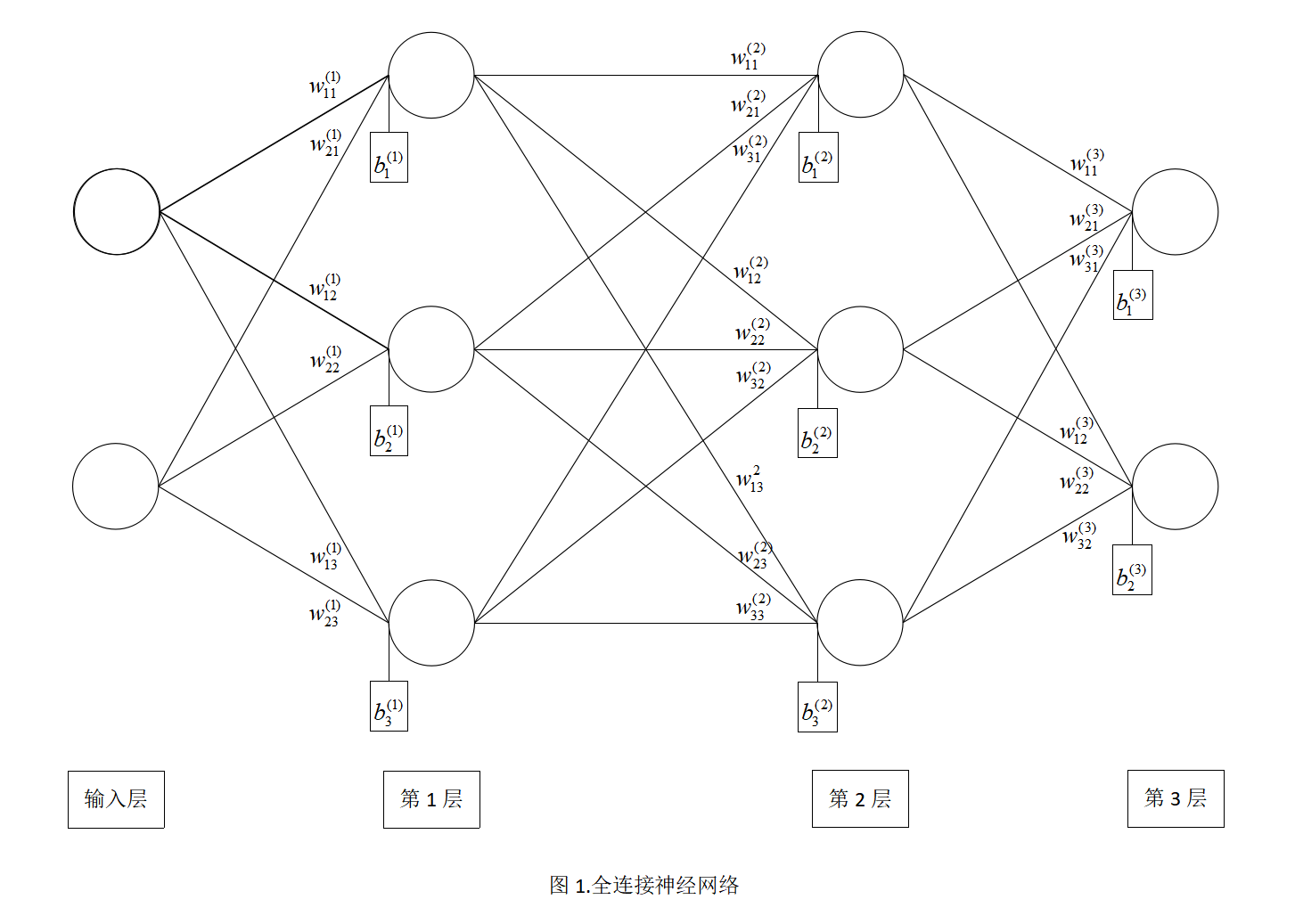

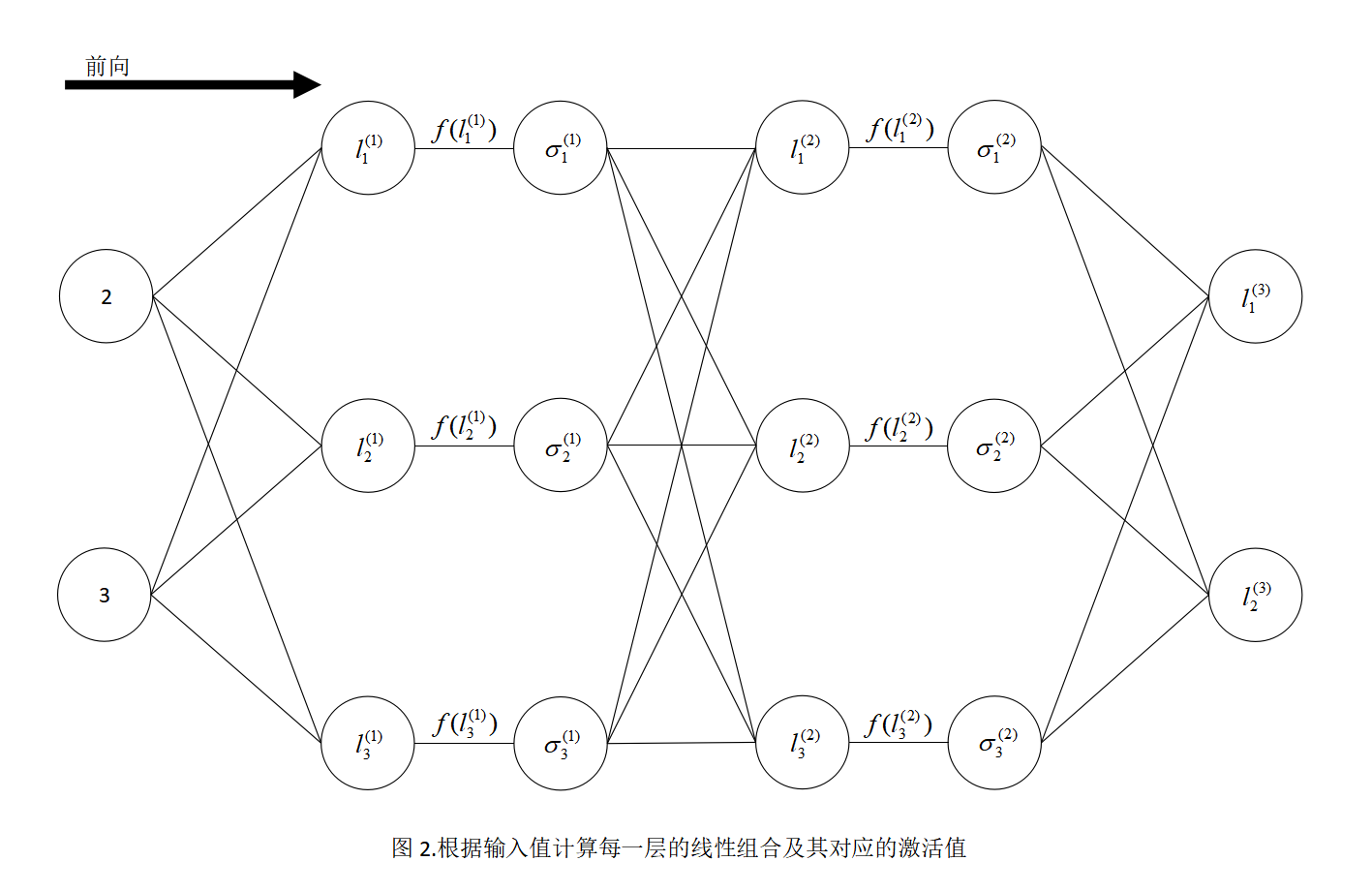

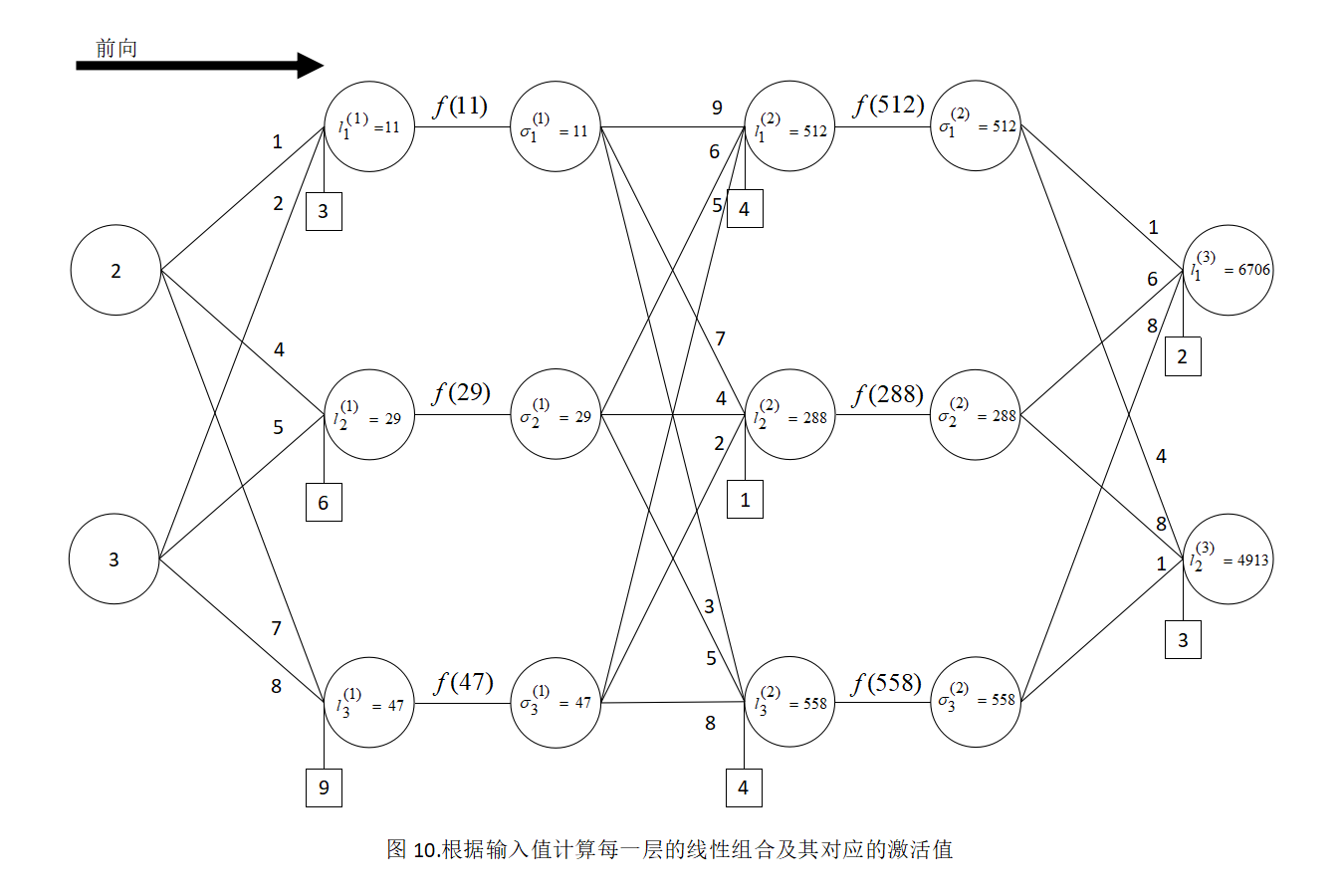

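We use the following example to understand gradient backpropagation. Consider the fully connected neural network shown in Figure 1. It has 2 hidden layers: the input layer has 2 neurons, layer 1 has 3 neurons, layer 2 has 3 neurons, and the output layer has 2 neurons. The activation function is $f(\cdot)$; layers 1 and 2 apply it, the output layer does not, and all weights and biases are unknowns. Suppose the network receives a single input [2, 3]. Following the feedforward computation, we can compute each layer's linear combination and the corresponding activation value, as shown in Figure 2. For ease of discussion, the linear combinations and the activation values of the two hidden layers are shown separately.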

Let $F$ be a function constructed from the output-layer values $l_1^{(3)}$ and $l_2^{(3)}$. Then $F$ is a function of the network's weights and biases, 29 independent variables in total. Suppose it is:

$$
\begin{aligned}
F\big(&w_{11}^{(1)}, w_{21}^{(1)}, b_{1}^{(1)}, w_{12}^{(1)}, w_{22}^{(1)}, b_{2}^{(1)}, w_{13}^{(1)}, w_{23}^{(1)}, b_{3}^{(1)}, \\
&w_{11}^{(2)}, w_{21}^{(2)}, w_{31}^{(2)}, b_{1}^{(2)}, w_{12}^{(2)}, w_{22}^{(2)}, w_{32}^{(2)}, b_{2}^{(2)}, w_{13}^{(2)}, w_{23}^{(2)}, w_{33}^{(2)}, b_{3}^{(2)}, \\
&w_{11}^{(3)}, w_{21}^{(3)}, w_{31}^{(3)}, b_{1}^{(3)}, w_{12}^{(3)}, w_{22}^{(3)}, w_{32}^{(3)}, b_{2}^{(3)}\big) = \big(l_1^{(3)} + l_2^{(3)}\big)^2
\end{aligned}
$$
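The count of 29 independent variables follows directly from the layer sizes. As a quick check, here is a minimal sketch; the helper name `count_parameters` is hypothetical and not part of the original text.

```python
# Layer sizes from the example: input layer, layer 1, layer 2, output layer.
layer_sizes = [2, 3, 3, 2]


def count_parameters(sizes):
    """Count the weights and biases of a fully connected network."""
    total = 0
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        total += fan_in * fan_out  # one weight per connection
        total += fan_out           # one bias per neuron in the receiving layer
    return total


# (2*3 + 3) + (3*3 + 3) + (3*2 + 2) = 9 + 12 + 8
print(count_parameters(layer_sizes))  # 29
```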

$l_1^{(3)}$ and $l_2^{(3)}$ are functions of $\sigma_1^{(2)}$, $\sigma_2^{(2)}$, and $\sigma_3^{(2)}$, so the chain rule of derivatives applies.

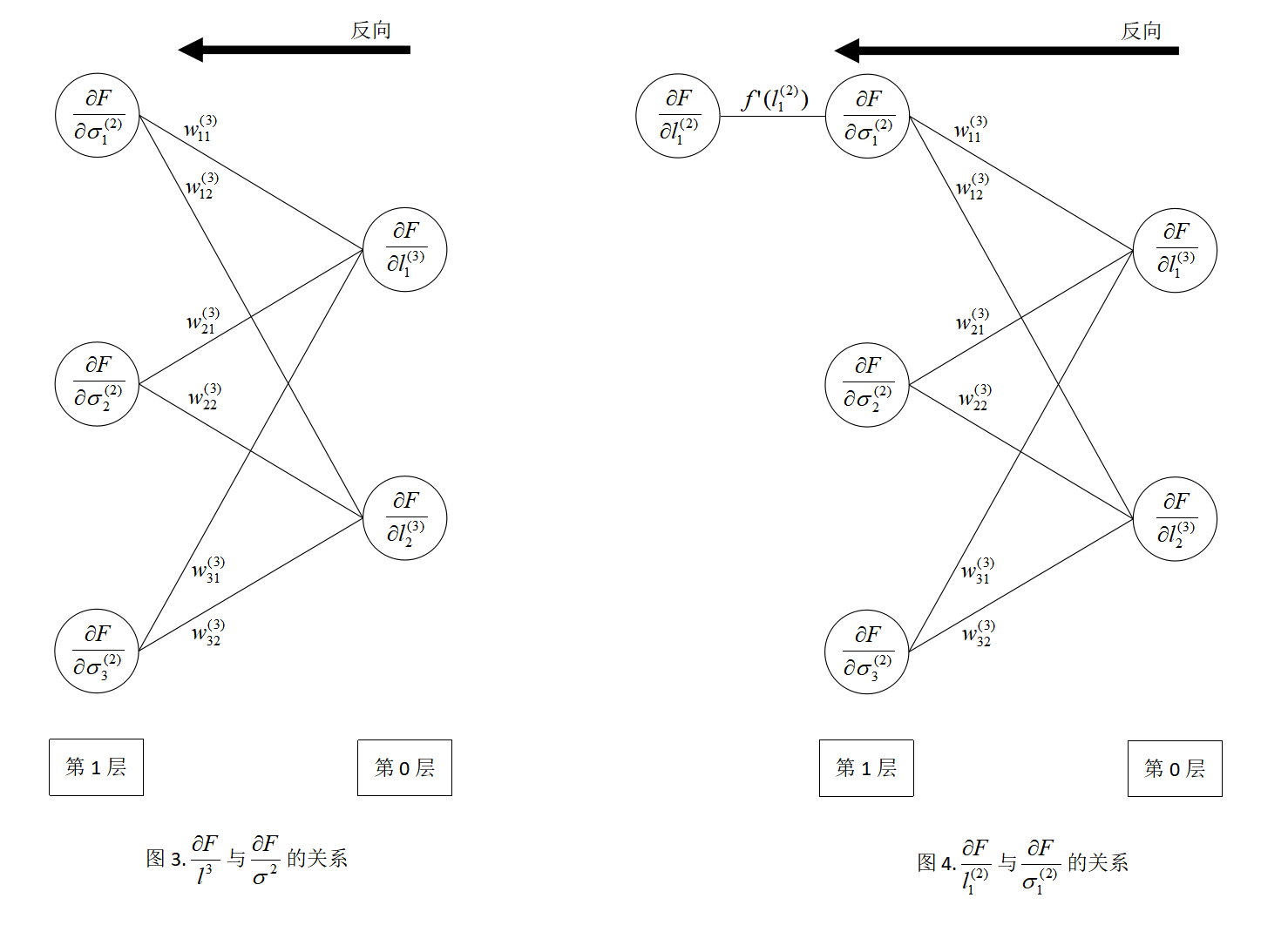

The derivative of $F$ with respect to $\sigma_1^{(2)}$ is:

$$
\frac{\partial F}{\partial \sigma_1^{(2)}} = \frac{\partial F}{\partial l_1^{(3)}}\frac{\partial l_1^{(3)}}{\partial \sigma_1^{(2)}} + \frac{\partial F}{\partial l_2^{(3)}}\frac{\partial l_2^{(3)}}{\partial \sigma_1^{(2)}} = \frac{\partial F}{\partial l_1^{(3)}} w_{11}^{(3)} + \frac{\partial F}{\partial l_2^{(3)}} w_{12}^{(3)}
$$

The derivative of $F$ with respect to $\sigma_2^{(2)}$ is:

$$
\frac{\partial F}{\partial \sigma_2^{(2)}} = \frac{\partial F}{\partial l_1^{(3)}}\frac{\partial l_1^{(3)}}{\partial \sigma_2^{(2)}} + \frac{\partial F}{\partial l_2^{(3)}}\frac{\partial l_2^{(3)}}{\partial \sigma_2^{(2)}} = \frac{\partial F}{\partial l_1^{(3)}} w_{21}^{(3)} + \frac{\partial F}{\partial l_2^{(3)}} w_{22}^{(3)}
$$

The derivative of $F$ with respect to $\sigma_3^{(2)}$ is:

$$
\frac{\partial F}{\partial \sigma_3^{(2)}} = \frac{\partial F}{\partial l_1^{(3)}}\frac{\partial l_1^{(3)}}{\partial \sigma_3^{(2)}} + \frac{\partial F}{\partial l_2^{(3)}}\frac{\partial l_2^{(3)}}{\partial \sigma_3^{(2)}} = \frac{\partial F}{\partial l_1^{(3)}} w_{31}^{(3)} + \frac{\partial F}{\partial l_2^{(3)}} w_{32}^{(3)}
$$
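The three formulas share one pattern: the derivative with respect to $\sigma_i^{(2)}$ is row $i$ of the layer-3 weights multiplied term by term with the derivatives with respect to $l^{(3)}$ and summed. The sketch below illustrates this in plain Python. The function name `backprop_to_inputs` is hypothetical, and the numbers are borrowed from the worked example later in this article (layer-3 weights and the value 23238).

```python
def backprop_to_inputs(weights, grad_l):
    """Return dF/d(sigma) for the layer feeding into `weights`.

    weights[i][j] is w_ij, connecting neuron i of the previous layer
    to neuron j of the current layer; grad_l[j] is dF/dl_j.
    """
    return [sum(w_ij * g_j for w_ij, g_j in zip(row, grad_l)) for row in weights]


# Layer-3 weights and dF/dl^(3) taken from the worked example in this article.
w3 = [[1, 4], [6, 8], [8, 1]]
grad_l3 = [23238, 23238]

grad_sigma2 = backprop_to_inputs(w3, grad_l3)
print(grad_sigma2)  # [116190, 325332, 209142]
```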

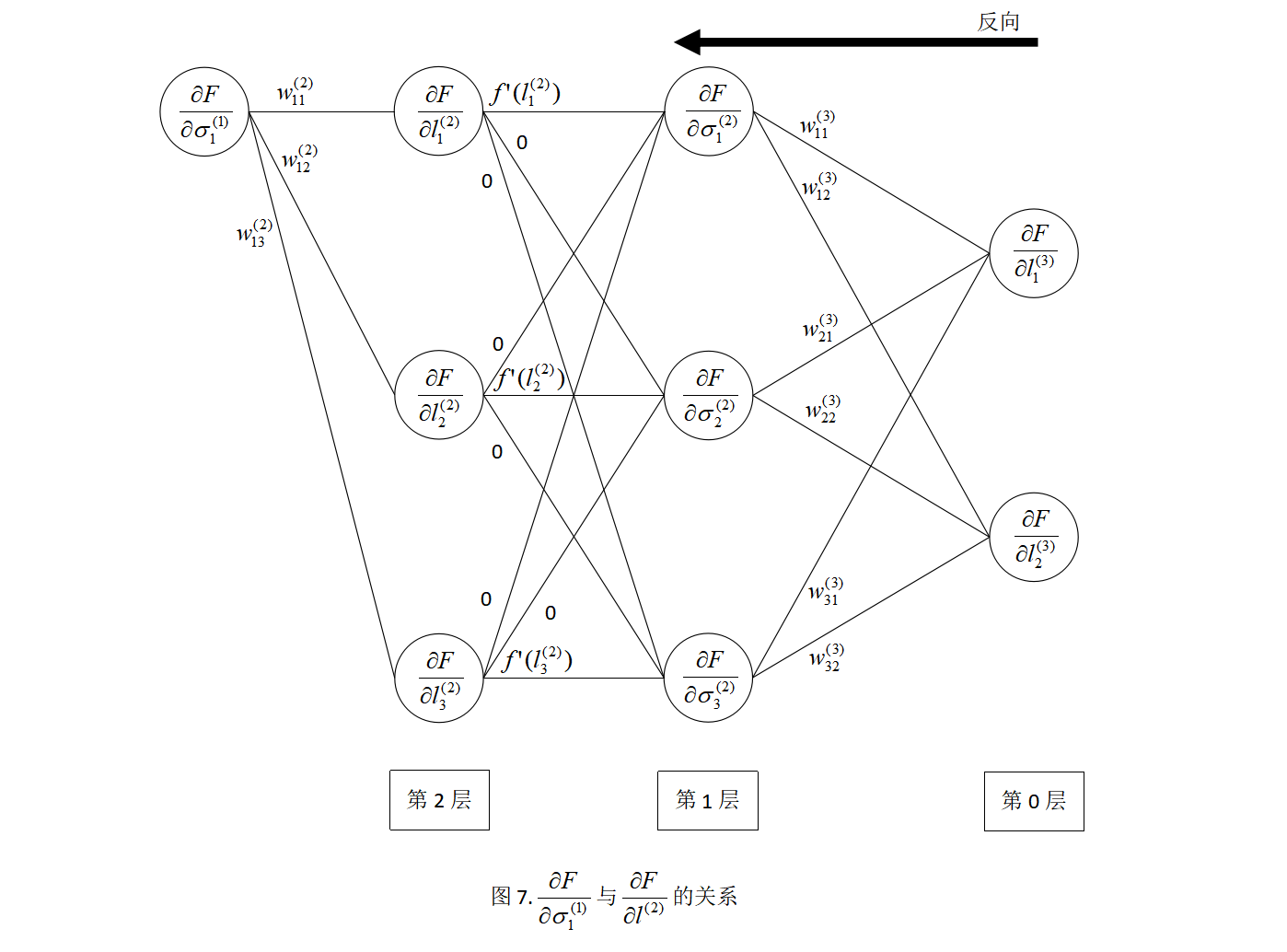

On closer inspection, the process above can itself be represented as a fully connected neural network, as shown in Figure 3.

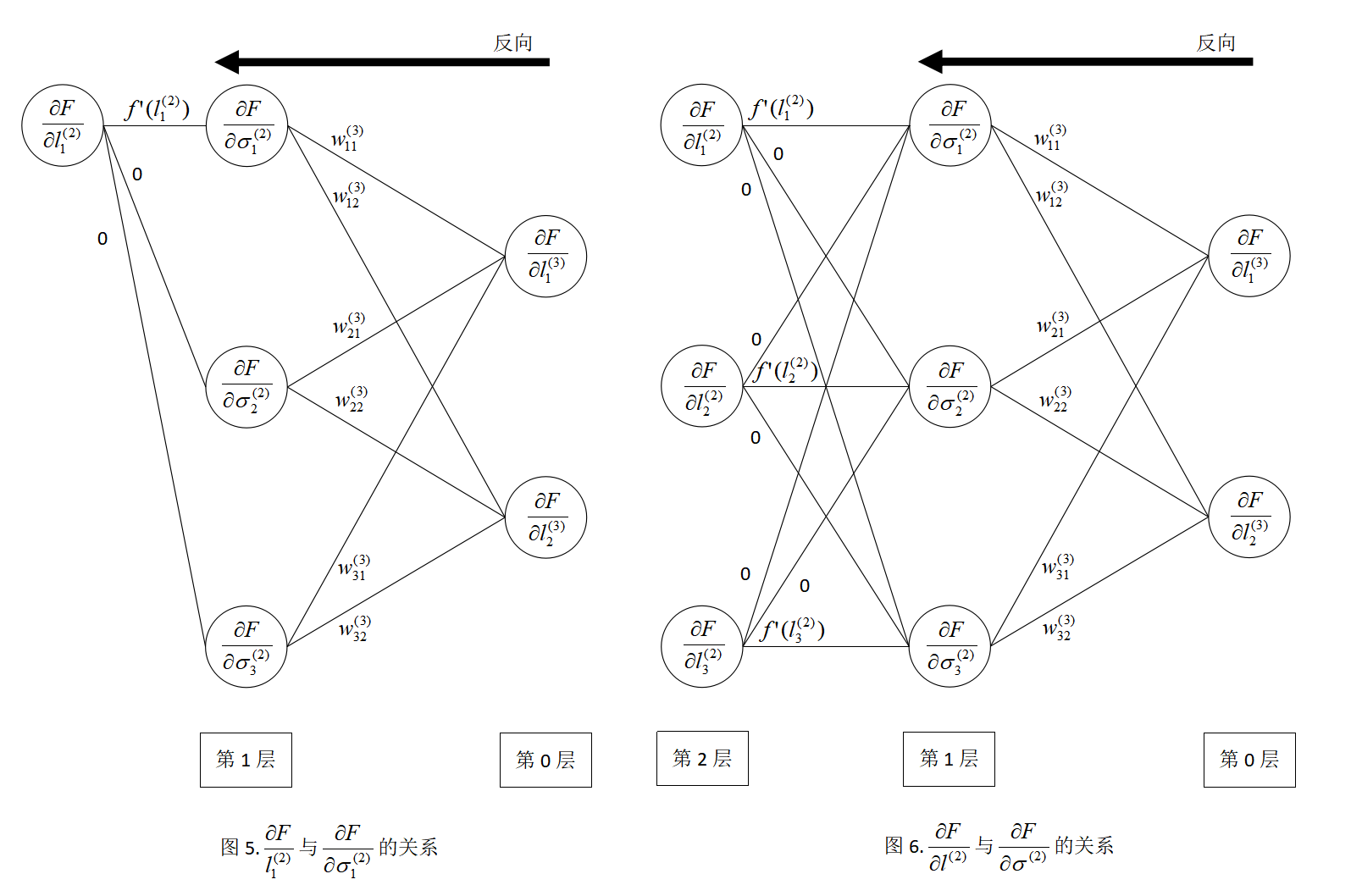

Because $\sigma_1^{(2)} = f(l_1^{(2)})$, we have:

$$
\frac{\partial F}{\partial l_1^{(2)}} = \frac{\partial F}{\partial \sigma_1^{(2)}} f'(l_1^{(2)})
$$

Modifying Figure 2 accordingly gives Figure 4.

Clearly, Figure 4 does not satisfy the definition of a fully connected neural network, in which every neuron of one layer is connected to every neuron of the next layer. We can therefore pad the expression with zero terms:

$$
\frac{\partial F}{\partial l_1^{(2)}} = \frac{\partial F}{\partial \sigma_1^{(2)}} \cdot f'(l_1^{(2)}) + \frac{\partial F}{\partial \sigma_2^{(2)}} \cdot 0 + \frac{\partial F}{\partial \sigma_3^{(2)}} \cdot 0
$$

Figure 4 can then be redrawn as the fully connected neural network shown in Figure 5. Similarly:

$$
\frac{\partial F}{\partial l_2^{(2)}} = \frac{\partial F}{\partial \sigma_1^{(2)}} \cdot 0 + \frac{\partial F}{\partial \sigma_2^{(2)}} \cdot f'(l_2^{(2)}) + \frac{\partial F}{\partial \sigma_3^{(2)}} \cdot 0
$$

$$
\frac{\partial F}{\partial l_3^{(2)}} = \frac{\partial F}{\partial \sigma_1^{(2)}} \cdot 0 + \frac{\partial F}{\partial \sigma_2^{(2)}} \cdot 0 + \frac{\partial F}{\partial \sigma_3^{(2)}} \cdot f'(l_3^{(2)})
$$
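The zero-padded form is a multiplication by a diagonal matrix whose diagonal holds $f'(l_i^{(2)})$, which gives the same result as an element-wise product. The sketch below compares the two; ReLU is used only as an example activation (the text leaves $f$ generic), the input values are made up, and both function names are hypothetical.

```python
def relu_prime(x):
    """Derivative of ReLU, chosen here only as an example activation."""
    return 1 if x > 0 else 0


def grad_l_zero_padded(grad_sigma, l, f_prime):
    """Multiply by the full matrix that has f'(l_i) on the diagonal and 0 elsewhere."""
    n = len(l)
    matrix = [[f_prime(l[i]) if i == j else 0 for j in range(n)] for i in range(n)]
    return [sum(matrix[i][j] * grad_sigma[j] for j in range(n)) for i in range(n)]


def grad_l_elementwise(grad_sigma, l, f_prime):
    """Skip the zero terms: dF/dl_i = dF/dsigma_i * f'(l_i)."""
    return [g * f_prime(v) for g, v in zip(grad_sigma, l)]


l2 = [3, -1, 2]           # made-up linear combinations
grad_sigma2 = [5, 7, -4]  # made-up upstream derivatives

padded = grad_l_zero_padded(grad_sigma2, l2, relu_prime)
direct = grad_l_elementwise(grad_sigma2, l2, relu_prime)
print(padded, direct)  # [5, 0, -4] [5, 0, -4]
```

The zero-padded version exists to make the picture a fully connected network; in practice the element-wise version does the same work with fewer multiplications.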

In the same way, these relations can be added to Figure 5, giving Figure 6.

We have now computed $\frac{\partial F}{\partial l_1^{(2)}}$, $\frac{\partial F}{\partial l_2^{(2)}}$, and $\frac{\partial F}{\partial l_3^{(2)}}$. Because $l_1^{(2)}$, $l_2^{(2)}$, and $l_3^{(2)}$ are all functions of $\sigma_1^{(1)}$, the chain rule gives $\frac{\partial F}{\partial \sigma_1^{(1)}}$:

$$
\frac{\partial F}{\partial \sigma_1^{(1)}} = \frac{\partial F}{\partial l_1^{(2)}}\frac{\partial l_1^{(2)}}{\partial \sigma_1^{(1)}} + \frac{\partial F}{\partial l_2^{(2)}}\frac{\partial l_2^{(2)}}{\partial \sigma_1^{(1)}} + \frac{\partial F}{\partial l_3^{(2)}}\frac{\partial l_3^{(2)}}{\partial \sigma_1^{(1)}} = \frac{\partial F}{\partial l_1^{(2)}} w_{11}^{(2)} + \frac{\partial F}{\partial l_2^{(2)}} w_{12}^{(2)} + \frac{\partial F}{\partial l_3^{(2)}} w_{13}^{(2)}
$$

In the same way, this relation can be added to Figure 6, giving Figure 7.

Similarly, the chain rule gives $\frac{\partial F}{\partial \sigma_2^{(1)}}$ and $\frac{\partial F}{\partial \sigma_3^{(1)}}$:

$$
\frac{\partial F}{\partial \sigma_2^{(1)}} = \frac{\partial F}{\partial l_1^{(2)}}\frac{\partial l_1^{(2)}}{\partial \sigma_2^{(1)}} + \frac{\partial F}{\partial l_2^{(2)}}\frac{\partial l_2^{(2)}}{\partial \sigma_2^{(1)}} + \frac{\partial F}{\partial l_3^{(2)}}\frac{\partial l_3^{(2)}}{\partial \sigma_2^{(1)}} = \frac{\partial F}{\partial l_1^{(2)}} w_{21}^{(2)} + \frac{\partial F}{\partial l_2^{(2)}} w_{22}^{(2)} + \frac{\partial F}{\partial l_3^{(2)}} w_{23}^{(2)}
$$

$$
\frac{\partial F}{\partial \sigma_3^{(1)}} = \frac{\partial F}{\partial l_1^{(2)}}\frac{\partial l_1^{(2)}}{\partial \sigma_3^{(1)}} + \frac{\partial F}{\partial l_2^{(2)}}\frac{\partial l_2^{(2)}}{\partial \sigma_3^{(1)}} + \frac{\partial F}{\partial l_3^{(2)}}\frac{\partial l_3^{(2)}}{\partial \sigma_3^{(1)}} = \frac{\partial F}{\partial l_1^{(2)}} w_{31}^{(2)} + \frac{\partial F}{\partial l_2^{(2)}} w_{32}^{(2)} + \frac{\partial F}{\partial l_3^{(2)}} w_{33}^{(2)}
$$

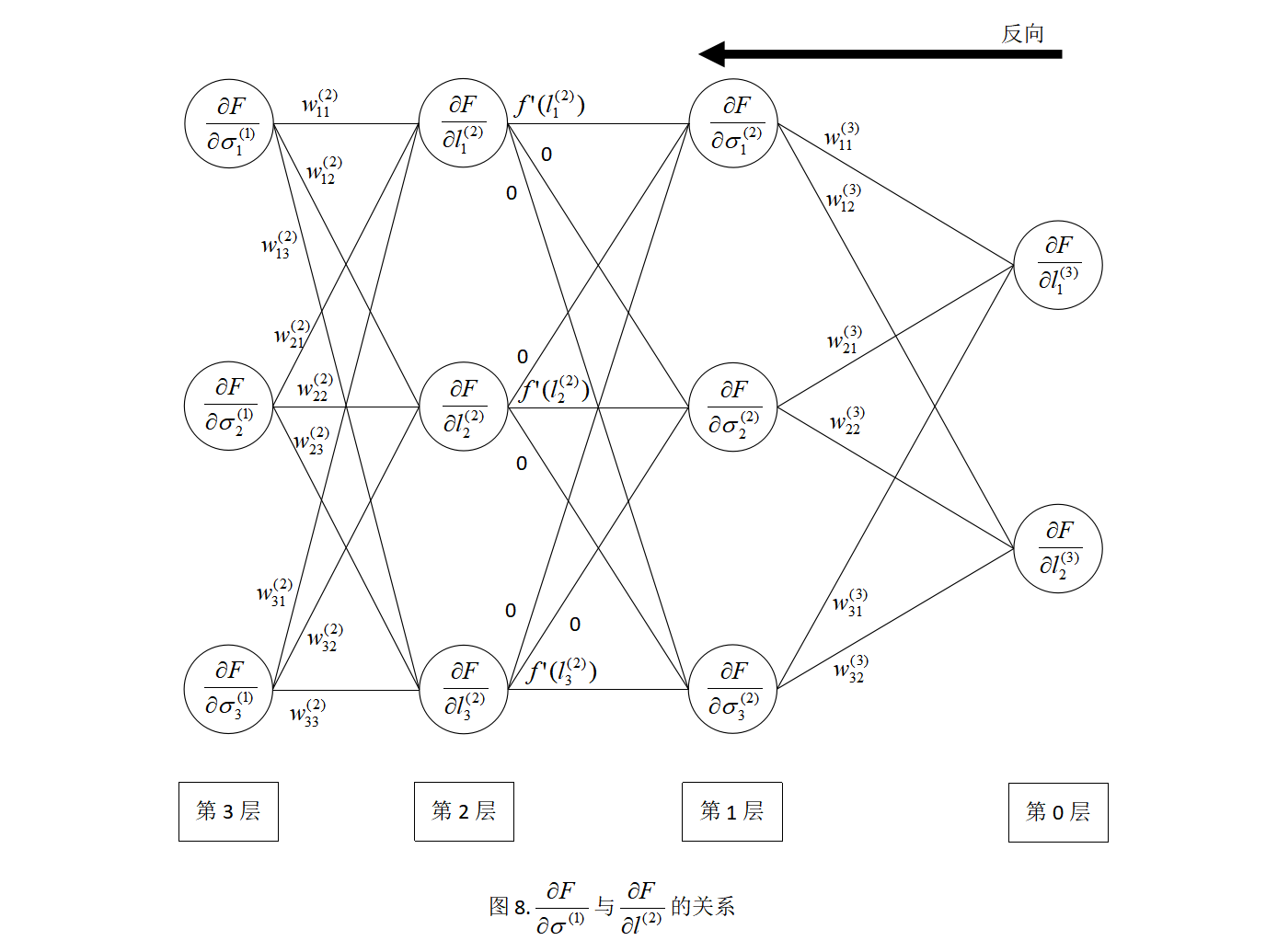

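These relations can be added to the fully connected neural network in Figure 7, giving the new fully connected neural network shown in Figure 8.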

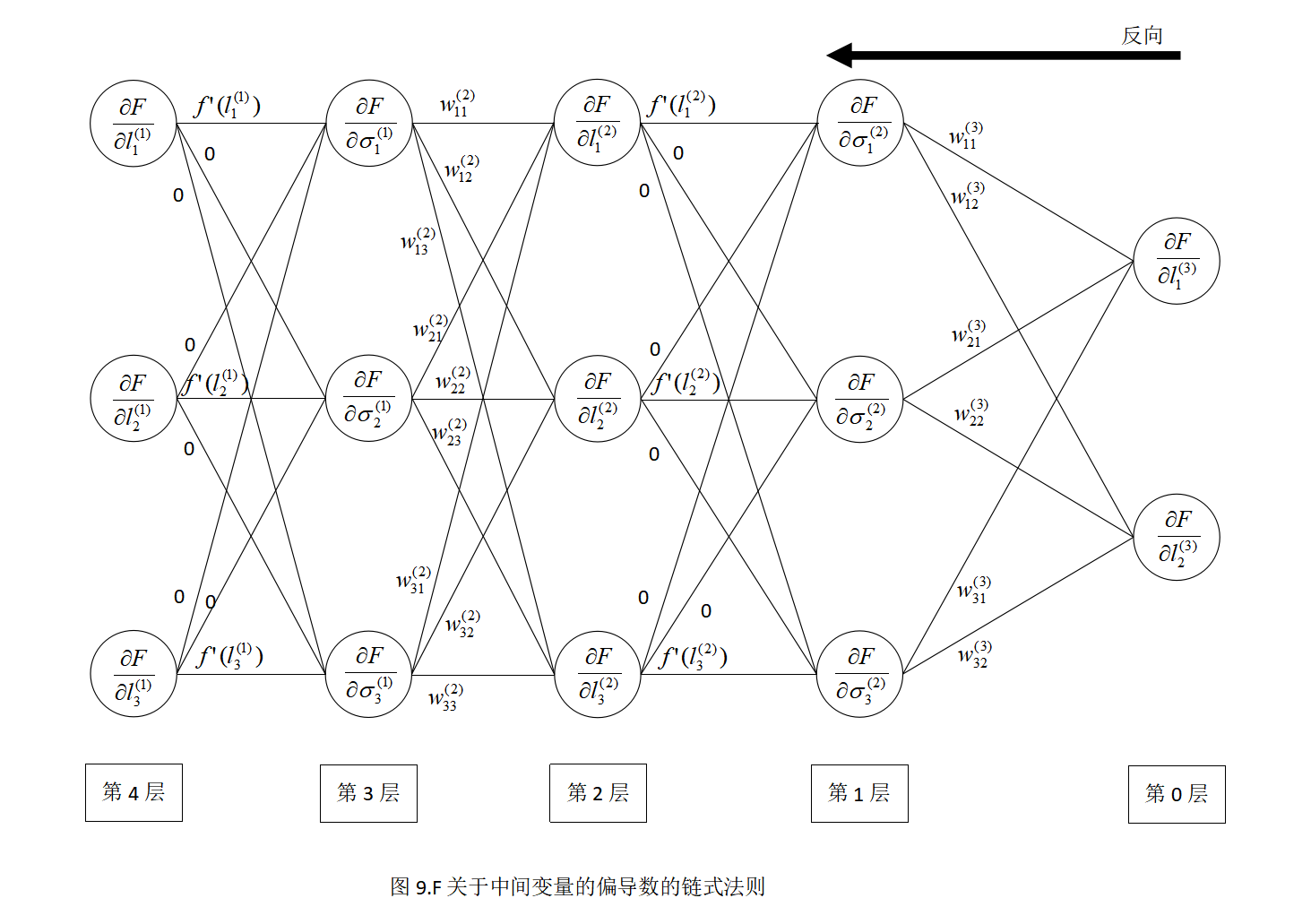

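Continuing in this way, the chain rule gives the relationship between $\frac{\partial F}{\partial l^{(1)}}$ and $\frac{\partial F}{\partial \sigma^{(1)}}$, which can be added as one more layer on top of Figure 8. This yields the partial derivatives of $F$ with respect to the intermediate variables, and the relationships among these partial derivatives can themselves be represented by a fully connected neural network, as shown in Figure 9.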
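The layer-by-layer relations derived so far can be condensed into vector form. The following is a summary sketch rather than notation taken from the source: $W^{(n)}$ is assumed to denote the matrix whose entry in row $i$, column $j$ is $w_{ij}^{(n)}$, $\odot$ is the element-wise product, and $f'$ is applied element-wise.

$$
\begin{aligned}
\frac{\partial F}{\partial \sigma^{(n-1)}} &= W^{(n)} \, \frac{\partial F}{\partial l^{(n)}}, \\
\frac{\partial F}{\partial l^{(n)}} &= f'\big(l^{(n)}\big) \odot \frac{\partial F}{\partial \sigma^{(n)}}.
\end{aligned}
$$

Alternating these two steps from the output layer back to layer 1 traverses the backward network one layer at a time.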

How can we use this result to quickly compute the gradient of $F$ at a specific point? Suppose we want the gradient of $F$ at the point:

$$
\begin{aligned}
\big(&w_{11}^{(1)}, w_{21}^{(1)}, b_{1}^{(1)}, w_{12}^{(1)}, w_{22}^{(1)}, b_{2}^{(1)}, w_{13}^{(1)}, w_{23}^{(1)}, b_{3}^{(1)}, \\
&w_{11}^{(2)}, w_{21}^{(2)}, w_{31}^{(2)}, b_{1}^{(2)}, w_{12}^{(2)}, w_{22}^{(2)}, w_{32}^{(2)}, b_{2}^{(2)}, w_{13}^{(2)}, w_{23}^{(2)}, w_{33}^{(2)}, b_{3}^{(2)}, \\
&w_{11}^{(3)}, w_{21}^{(3)}, w_{31}^{(3)}, b_{1}^{(3)}, w_{12}^{(3)}, w_{22}^{(3)}, w_{32}^{(3)}, b_{2}^{(3)}\big) \\
= {}&(1,2,3,4,5,6,7,8,9,9,6,5,4,7,4,2,1,3,5,8,4,1,6,8,2,4,8,1,3)
\end{aligned}
$$

For convenience, denote this point by $wb$. We only need to compute the partial derivative of $F$ with respect to each independent variable at this point. For example, the derivative of $F$ with respect to $b_1^{(3)}$ at this point is written:

$$
\left.\frac{\partial F}{\partial b_1^{(3)}}\right|_{wb,\, b_1^{(3)}=2}
$$

By the chain rule:

$$
\frac{\partial F}{\partial b_1^{(3)}} = \frac{\partial F}{\partial l_1^{(3)}} \cdot \frac{\partial l_1^{(3)}}{\partial b_1^{(3)}}
$$

Because:

$$
\frac{\partial l_1^{(3)}}{\partial b_1^{(3)}} = 1
$$

we have:

$$
\frac{\partial F}{\partial b_1^{(3)}} = \frac{\partial F}{\partial l_1^{(3)}}
$$

The partial derivatives with respect to the other biases are obtained in the same way. For convenience, we write them in matrix form:

$$
\frac{\partial F}{\partial b^{(3)}} = \begin{bmatrix}\frac{\partial F}{\partial b_1^{(3)}} \\[1ex] \frac{\partial F}{\partial b_2^{(3)}}\end{bmatrix} = \begin{bmatrix}\frac{\partial F}{\partial l_1^{(3)}} \\[1ex] \frac{\partial F}{\partial l_2^{(3)}}\end{bmatrix}
$$

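First, using the feedforward computation, compute the values of the intermediate variables of $F$ when the weights and biases equal $wb$, that is, each layer's linear combination and the corresponding activated value, as shown in Figure 10.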
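The forward pass at $wb$ can be reproduced in a few lines of plain Python. This is a minimal sketch: the helper names `affine` and `f` are hypothetical, and the activation is taken to be the identity, which is what the numerical values in this example imply.

```python
def affine(inputs, weights, bias):
    """Linear combination: l_j = sum_i inputs[i] * weights[i][j] + bias[j]."""
    return [
        sum(x_i * weights[i][j] for i, x_i in enumerate(inputs)) + bias[j]
        for j in range(len(bias))
    ]


def f(values):
    """Activation function; assumed to be the identity in this example."""
    return list(values)


# The point wb, arranged as one weight matrix and one bias vector per layer.
w1, b1 = [[1, 4, 7], [2, 5, 8]], [3, 6, 9]
w2, b2 = [[9, 7, 3], [6, 4, 5], [5, 2, 8]], [4, 1, 4]
w3, b3 = [[1, 4], [6, 8], [8, 1]], [2, 3]

x = [2, 3]
l1 = affine(x, w1, b1)
sigma1 = f(l1)
l2 = affine(sigma1, w2, b2)
sigma2 = f(l2)
l3 = affine(sigma2, w3, b3)  # the output layer has no activation
F = sum(l3) ** 2

print(l1)  # [11, 29, 47]
print(l2)  # [512, 288, 558]
print(l3)  # [6706, 4913]
```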

Because:

$$
\frac{\partial F}{\partial b^{(3)}} = \frac{\partial F}{\partial l^{(3)}}
$$

we have:

$$
\left.\frac{\partial F}{\partial b^{(3)}}\right|_{wb,\, b^{(3)}=\begin{bmatrix}2\\ 3\end{bmatrix}} = \begin{bmatrix}\left.\frac{\partial F}{\partial b_1^{(3)}}\right|_{wb,\, b_1^{(3)}=2}\\[1ex] \left.\frac{\partial F}{\partial b_2^{(3)}}\right|_{wb,\, b_2^{(3)}=3}\end{bmatrix} = \left.\frac{\partial F}{\partial l^{(3)}}\right|_{l^{(3)}=\begin{bmatrix}6706\\ 4913\end{bmatrix}} = \begin{bmatrix}\left.\frac{\partial F}{\partial l_1^{(3)}}\right|_{l_1^{(3)}=6706}\\[1ex] \left.\frac{\partial F}{\partial l_2^{(3)}}\right|_{l_2^{(3)}=4913}\end{bmatrix}
$$

Because $F = (l_1^{(3)} + l_2^{(3)})^2$:

$$
\left.\frac{\partial F}{\partial l_1^{(3)}}\right|_{l_1^{(3)}=6706} = 2 \times (6706 + 4913) = 23238
$$

$$
\left.\frac{\partial F}{\partial l_2^{(3)}}\right|_{l_2^{(3)}=4913} = 2 \times (6706 + 4913) = 23238
$$

Therefore:

$$
\left.\frac{\partial F}{\partial b^{(3)}}\right|_{wb,\, b^{(3)}=\begin{bmatrix}2\\ 3\end{bmatrix}} = \begin{bmatrix}23238\\ 23238\end{bmatrix}
$$

现在已经计算出了 ∂ F ∂ l 1 ( 3 ) ∣ l 1 ( 3 ) = 6706 \frac{\partial F}{\partial l_1^{(3)}}_{|l_1^{(3)}=6706} ∂l1(3)∂F∣l1(3)=6706和 ∂ F ∂ l 2 ( 3 ) ∣ l 2 ( 3 ) = 4913 \frac{\partial F}{\partial l_2{(3)}}_{|l_2^{(3)}=4913} ∂l2(3)∂F∣l2(3)=4913,相当于已知了图9所示的反向的全连接神经网络的输入层,我们结合图10就可以快速计算出 F F F在:

σ ( 2 ) = [ σ 1 ( 2 ) σ 2 ( 2 ) σ 3 ( 2 ) ] = [ 512 288 558 ] \sigma^{(2)}=\begin{bmatrix}\sigma_1^{(2)}\\ \sigma_2^{(2)}\\ \sigma_3^{(2)}\end{bmatrix} = \begin{bmatrix}512\\ 288\\ 558\end{bmatrix} σ(2)=⎣⎢⎡σ1(2)σ2(2)σ3(2)⎦⎥⎤=⎣⎡512288558⎦⎤

处的梯度为 ∂ F ∂ σ ( 2 ) \frac{\partial F}{\partial \sigma^{(2)}} ∂σ(2)∂F,然后计算 F F F在:

l ( 2 ) = [ l 1 ( 2 ) l 2 ( 2 ) l 3 ( 2 ) ] = [ 512 288 558 ] l^{(2)}=\begin{bmatrix}l_1^{(2)}\\ l_2^{(2)}\\ l_3^{(2)}\end{bmatrix} = \begin{bmatrix}512\\ 288\\ 558\end{bmatrix} l(2)=⎣⎢⎡l1(2)l2(2)l3(2)⎦⎥⎤=⎣⎡512288558⎦⎤

处的梯度为 ∂ F ∂ l ( 2 ) \frac{\partial F}{\partial l^{(2)}} ∂l(2)∂F,然后计算 F F F在:

σ ( 1 ) = [ σ 1 ( 1 ) σ 2 ( 1 ) σ 3 ( 1 ) ] = [ 11 29 47 ] \sigma^{(1)}=\begin{bmatrix}\sigma_1^{(1)}\\ \sigma_2^{(1)}\\ \sigma_3^{(1)}\end{bmatrix} = \begin{bmatrix}11\\ 29\\ 47\end{bmatrix} σ(1)=⎣⎢⎡σ1(1)σ2(1)σ3(1)⎦⎥⎤=⎣⎡112947⎦⎤

处的梯度为 ∂ F ∂ σ ( 1 ) \frac{\partial F}{\partial \sigma^{(1)}} ∂σ(1)∂F,然后计算 F F F在:

l ( 1 ) = [ l 1 ( 1 ) l 2 ( 1 ) l 3 ( 1 ) ] = [ 11 29 47 ] l^{(1)}=\begin{bmatrix}l_1^{(1)}\\ l_2^{(1)}\\ l_3^{(1)}\end{bmatrix} = \begin{bmatrix}11\\ 29\\ 47\end{bmatrix} l(1)=⎣⎢⎡l1(1)l2(1)l3(1)⎦⎥⎤=⎣⎡112947⎦⎤

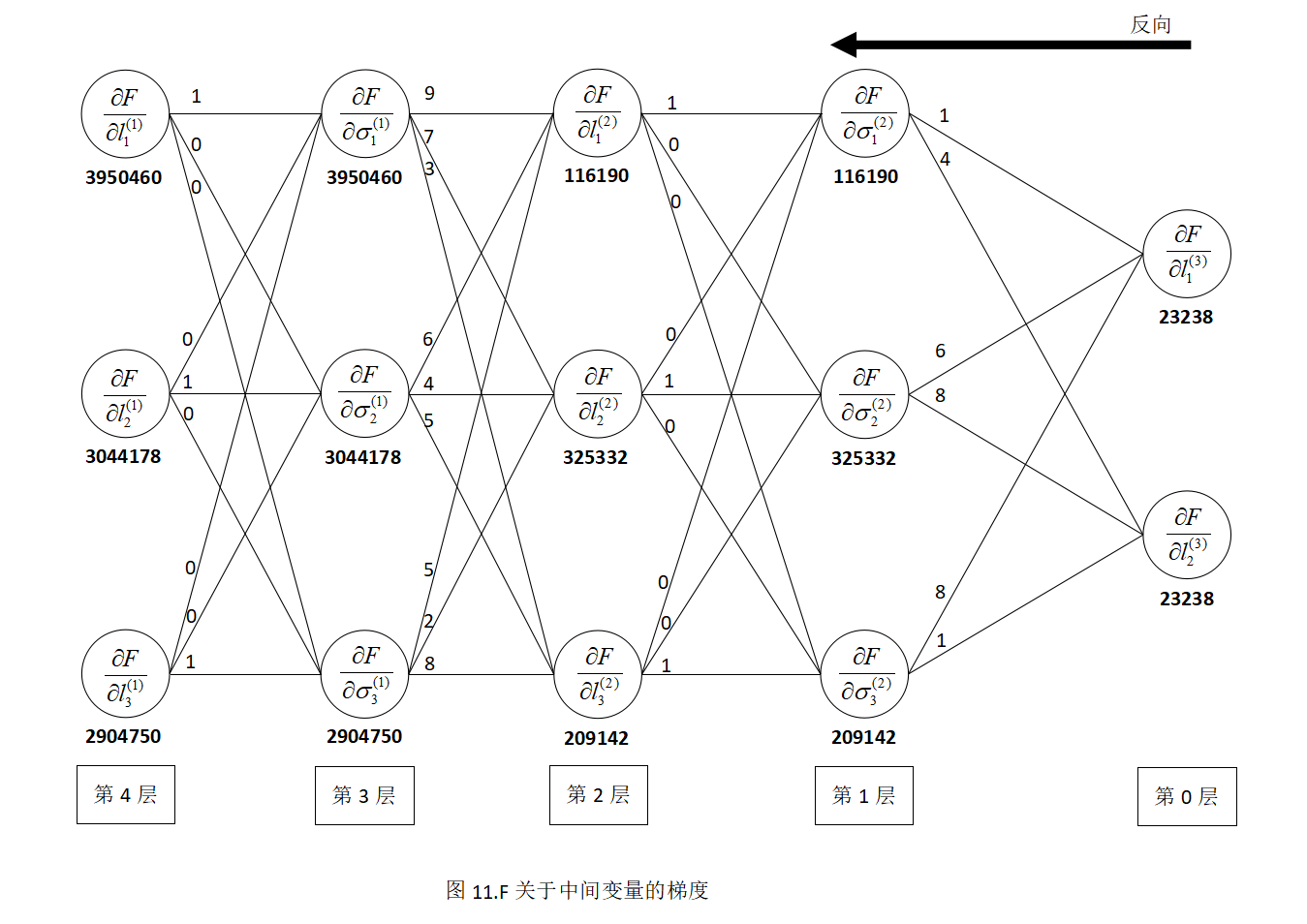

处的梯度为 ∂ F ∂ l ( 1 ) \frac{\partial F}{\partial l^{(1)}} ∂l(1)∂F,结果如图11所示。
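The whole backward sweep can be sketched in plain Python as follows. The helper names are hypothetical, the intermediate values are the ones computed by the forward pass in this example, and the identity activation (derivative 1) is assumed.

```python
def backprop_to_inputs(weights, grad_l):
    """dF/dsigma_i of the previous layer = sum_j w_ij * dF/dl_j."""
    return [sum(w * g for w, g in zip(row, grad_l)) for row in weights]


def through_activation(grad_sigma, l):
    """dF/dl_i = dF/dsigma_i * f'(l_i); f is the identity here, so f' = 1."""
    f_prime = [1 for _ in l]
    return [g * d for g, d in zip(grad_sigma, f_prime)]


w2 = [[9, 7, 3], [6, 4, 5], [5, 2, 8]]
w3 = [[1, 4], [6, 8], [8, 1]]
l1, l2, l3 = [11, 29, 47], [512, 288, 558], [6706, 4913]

# Input of the backward network: dF/dl^(3) for F = (l_1 + l_2)^2.
grad_l3 = [2 * sum(l3) for _ in l3]

grad_sigma2 = backprop_to_inputs(w3, grad_l3)
grad_l2 = through_activation(grad_sigma2, l2)
grad_sigma1 = backprop_to_inputs(w2, grad_l2)
grad_l1 = through_activation(grad_sigma1, l1)

print(grad_l3)  # [23238, 23238]
print(grad_l2)  # [116190, 325332, 209142]
print(grad_l1)  # [3950460, 3044178, 2904750]
```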

By the chain rule, the partial derivatives of $F$ with respect to the biases of layer 2 and layer 1 satisfy:

$$
\frac{\partial F}{\partial b^{(2)}} = \begin{bmatrix}\frac{\partial F}{\partial b_1^{(2)}}\\[1ex] \frac{\partial F}{\partial b_2^{(2)}}\\[1ex] \frac{\partial F}{\partial b_3^{(2)}}\end{bmatrix} = \begin{bmatrix}\frac{\partial F}{\partial l_1^{(2)}}\\[1ex] \frac{\partial F}{\partial l_2^{(2)}}\\[1ex] \frac{\partial F}{\partial l_3^{(2)}}\end{bmatrix}, \qquad
\frac{\partial F}{\partial b^{(1)}} = \begin{bmatrix}\frac{\partial F}{\partial b_1^{(1)}}\\[1ex] \frac{\partial F}{\partial b_2^{(1)}}\\[1ex] \frac{\partial F}{\partial b_3^{(1)}}\end{bmatrix} = \begin{bmatrix}\frac{\partial F}{\partial l_1^{(1)}}\\[1ex] \frac{\partial F}{\partial l_2^{(1)}}\\[1ex] \frac{\partial F}{\partial l_3^{(1)}}\end{bmatrix}
$$

Therefore, as Figure 11 shows, we can directly read off the gradients of $F$ at the point $wb$ with respect to $b^{(2)}$ and $b^{(1)}$:

$$
\left.\frac{\partial F}{\partial b^{(2)}}\right|_{wb,\, b^{(2)}=\begin{bmatrix}4\\ 1\\ 4\end{bmatrix}} = \begin{bmatrix}\left.\frac{\partial F}{\partial b_1^{(2)}}\right|_{wb,\, b_1^{(2)}=4}\\[1ex] \left.\frac{\partial F}{\partial b_2^{(2)}}\right|_{wb,\, b_2^{(2)}=1}\\[1ex] \left.\frac{\partial F}{\partial b_3^{(2)}}\right|_{wb,\, b_3^{(2)}=4}\end{bmatrix} = \begin{bmatrix}\left.\frac{\partial F}{\partial l_1^{(2)}}\right|_{l_1^{(2)}=512}\\[1ex] \left.\frac{\partial F}{\partial l_2^{(2)}}\right|_{l_2^{(2)}=288}\\[1ex] \left.\frac{\partial F}{\partial l_3^{(2)}}\right|_{l_3^{(2)}=558}\end{bmatrix} = \begin{bmatrix}116190\\ 325332\\ 209142\end{bmatrix}
$$

$$
\left.\frac{\partial F}{\partial b^{(1)}}\right|_{wb,\, b^{(1)}=\begin{bmatrix}3\\ 6\\ 9\end{bmatrix}} = \begin{bmatrix}\left.\frac{\partial F}{\partial b_1^{(1)}}\right|_{wb,\, b_1^{(1)}=3}\\[1ex] \left.\frac{\partial F}{\partial b_2^{(1)}}\right|_{wb,\, b_2^{(1)}=6}\\[1ex] \left.\frac{\partial F}{\partial b_3^{(1)}}\right|_{wb,\, b_3^{(1)}=9}\end{bmatrix} = \begin{bmatrix}\left.\frac{\partial F}{\partial l_1^{(1)}}\right|_{l_1^{(1)}=11}\\[1ex] \left.\frac{\partial F}{\partial l_2^{(1)}}\right|_{l_2^{(1)}=29}\\[1ex] \left.\frac{\partial F}{\partial l_3^{(1)}}\right|_{l_3^{(1)}=47}\end{bmatrix} = \begin{bmatrix}3950460\\ 3044178\\ 2904750\end{bmatrix}
$$

Having computed the partial derivatives of $F$ with respect to the biases, we next compute the partial derivatives of $F$ with respect to the weights $w_{ij}^{(n)}$. Because $w_{ij}^{(n)}$ affects $F$ only through $l_j^{(n)}$, the chain rule gives:

$$
\frac{\partial F}{\partial w_{ij}^{(n)}} = \frac{\partial F}{\partial l_{j}^{(n)}} \cdot \frac{\partial l_{j}^{(n)}}{\partial w_{ij}^{(n)}} = \frac{\partial F}{\partial l_{j}^{(n)}} \cdot \sigma_i^{(n-1)}
$$

where $\sigma_i^{(n-1)}$ is the value of the $i$-th neuron of layer $n-1$. For example:

$$
\frac{\partial F}{\partial w_{11}^{(1)}} = \frac{\partial F}{\partial l_{1}^{(1)}} \cdot \frac{\partial l_{1}^{(1)}}{\partial w_{11}^{(1)}} = \frac{\partial F}{\partial l_{1}^{(1)}} \cdot \sigma_1^{(0)}
$$

To explain this formula from another angle: in Figure 1, the weight $w_{11}^{(1)}$ connects the first neuron of the input layer to the first neuron of layer 1. To compute the partial derivative of $F$ with respect to $w_{11}^{(1)}$, find the value of $\sigma_1^{(0)}$ in Figure 1 (or Figure 2), which is the value at the first neuron of the input layer, then find the value of $\frac{\partial F}{\partial l_{1}^{(1)}}$ in Figure 9 (or Figure 11), and multiply the two. The partial derivatives with respect to the other weights are obtained in the same way.
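Because every weight gradient is a product of one forward value and one backward value, the gradient for a whole layer is the outer product of the two vectors. The sketch below illustrates this for the first layer; `weight_gradient` is a hypothetical helper name, and the numbers are the input [2, 3] and the values of $\frac{\partial F}{\partial l^{(1)}}$ from this example.

```python
def weight_gradient(sigma_prev, grad_l):
    """grad[i][j] = sigma_prev[i] * grad_l[j], i.e. dF/dw_ij for one layer."""
    return [[s_i * g_j for g_j in grad_l] for s_i in sigma_prev]


sigma0 = [2, 3]                        # values at the input layer
grad_l1 = [3950460, 3044178, 2904750]  # dF/dl^(1) from the backward pass

grad_w1 = weight_gradient(sigma0, grad_l1)
print(grad_w1[0][0])  # dF/dw_11^(1) = 2 * 3950460 = 7900920
for row in grad_w1:
    print(row)
```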

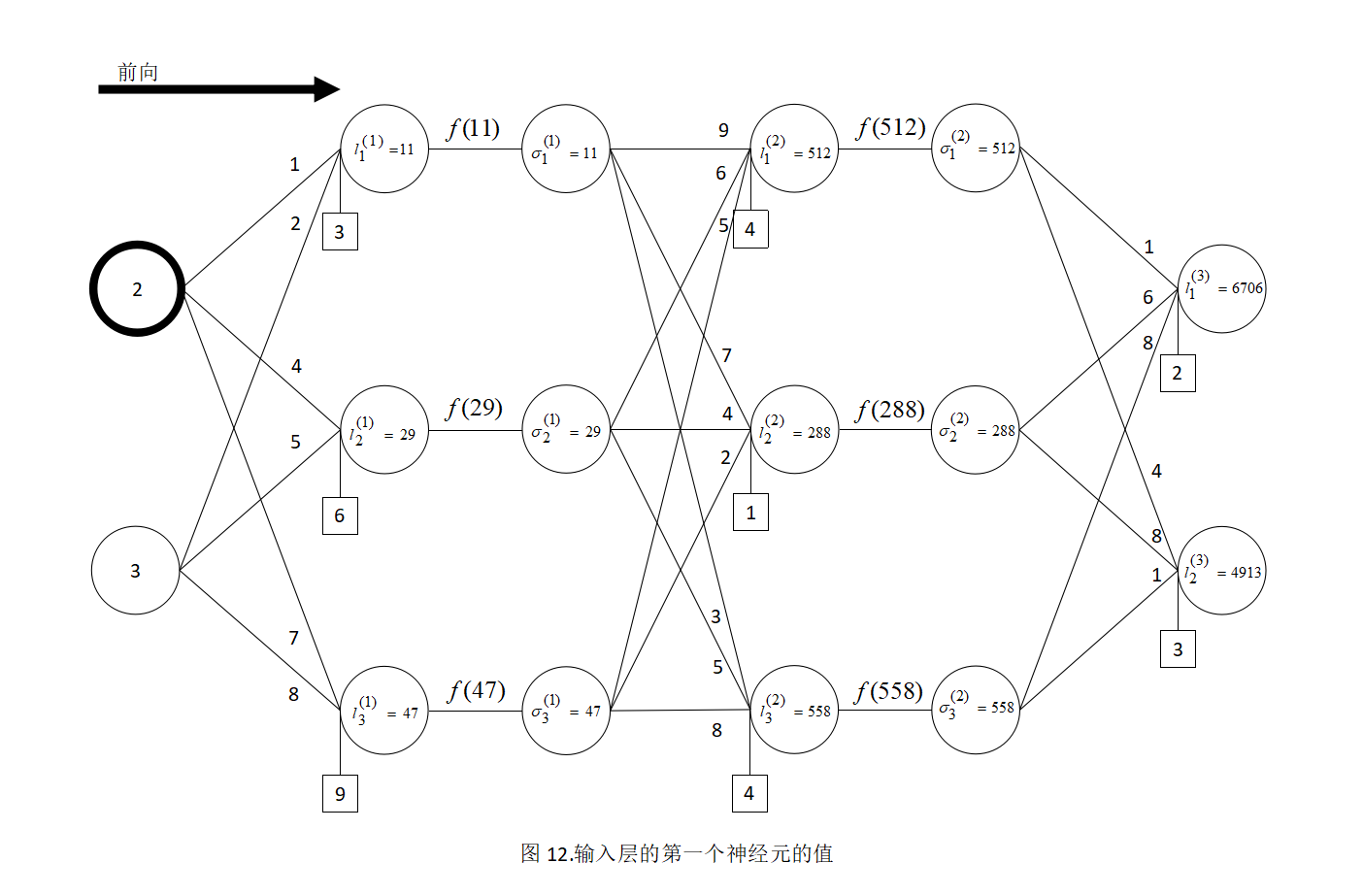

Example 1: compute the partial derivative of $F$ at the point $wb$ with respect to $w_{11}^{(1)}$, written $\left.\frac{\partial F}{\partial w_{11}^{(1)}}\right|_{wb,\, w_{11}^{(1)}=1}$. First, find the value of the first neuron of the input layer in Figure 10, as shown in Figure 12:

Then find the value of $\frac{\partial F}{\partial l_{1}^{(1)}}$ in Figure 11, as shown in Figure 13:

Then:

$$
\left.\frac{\partial F}{\partial w_{11}^{(1)}}\right|_{wb,\, w_{11}^{(1)}=1} = 2 \times 3950460 = 7900920
$$

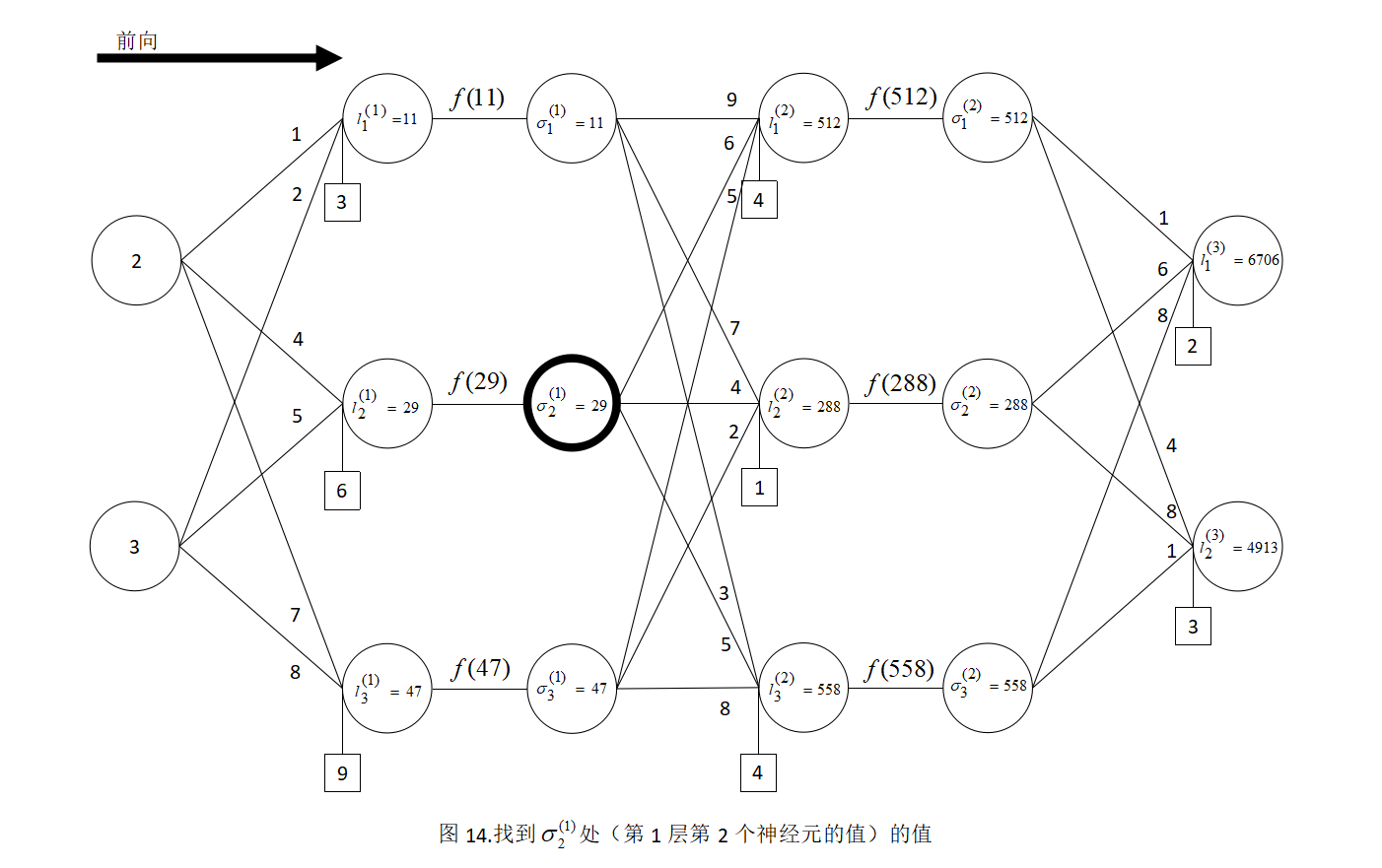

Example 2: compute the partial derivative of $F$ at the point $wb$ with respect to $w_{21}^{(2)}$, written $\left.\frac{\partial F}{\partial w_{21}^{(2)}}\right|_{wb,\, w_{21}^{(2)}=6}$. First, find the value of $\sigma_2^{(1)}$ (the value of the second neuron of layer 1) in Figure 10, as shown in Figure 14:

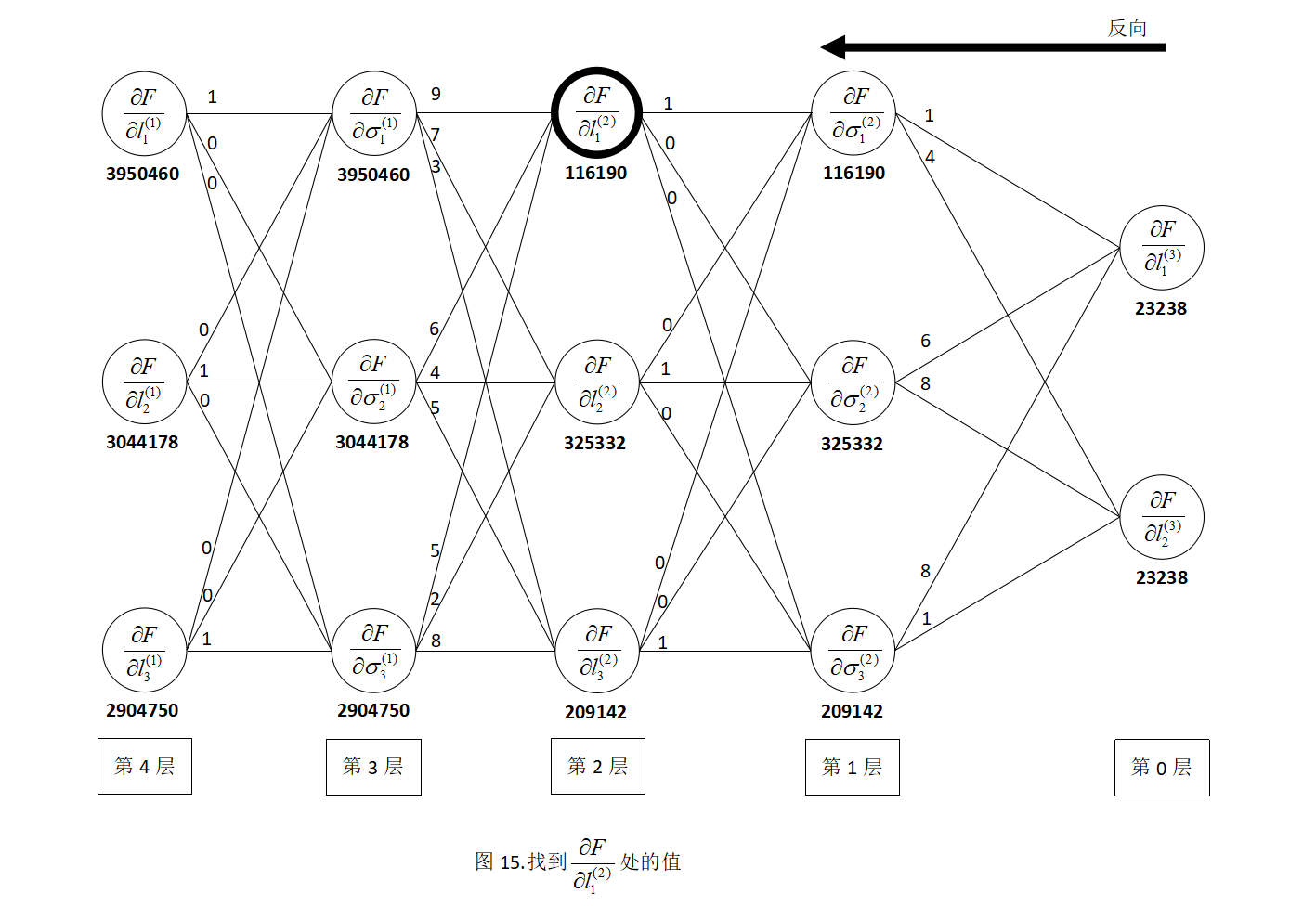

Then find the value of $\frac{\partial F}{\partial l_{1}^{(2)}}$ in Figure 11, as shown in Figure 15:

Then:

$$
\left.\frac{\partial F}{\partial w_{21}^{(2)}}\right|_{wb,\, w_{21}^{(2)}=6} = 29 \times 116190 = 3369510
$$

Following this pattern, we can compute the partial derivatives of $F$ at the point $wb$ with respect to the weights from the input layer to layer 1:

$$
\begin{aligned}
\left.\frac{\partial F}{\partial w^{(1)}}\right|_{w^{(1)}=\begin{bmatrix}1 & 4 & 7\\ 2 & 5 & 8\end{bmatrix}}
&= \begin{bmatrix}2 \times 3950460 & 2 \times 3044178 & 2 \times 2904750 \\ 3 \times 3950460 & 3 \times 3044178 & 3 \times 2904750\end{bmatrix} \\
&= \begin{bmatrix}7900920 & 6088356 & 5809500 \\ 11851380 & 9132534 & 8714250\end{bmatrix}
\end{aligned}
$$

The partial derivatives of $F$ at the point $wb$ with respect to the weights from layer 1 to layer 2:

$$
\begin{aligned}
\left.\frac{\partial F}{\partial w^{(2)}}\right|_{w^{(2)}=\begin{bmatrix}9 & 7 & 3 \\ 6 & 4 & 5 \\ 5 & 2 & 8\end{bmatrix}}
&= \begin{bmatrix}11 \times 116190 & 11 \times 325332 & 11 \times 209142 \\ 29 \times 116190 & 29 \times 325332 & 29 \times 209142 \\ 47 \times 116190 & 47 \times 325332 & 47 \times 209142\end{bmatrix} \\
&= \begin{bmatrix}1278090 & 3578652 & 2300562 \\ 3369510 & 9434628 & 6065118 \\ 5460930 & 15290604 & 9829674\end{bmatrix}
\end{aligned}
$$

The partial derivatives of $F$ at the point $wb$ with respect to the weights from layer 2 to layer 3:

$$
\begin{aligned}
\left.\frac{\partial F}{\partial w^{(3)}}\right|_{w^{(3)}=\begin{bmatrix}1 & 4 \\ 6 & 8 \\ 8 & 1\end{bmatrix}}
&= \begin{bmatrix}512 \times 23238 & 512 \times 23238 \\ 288 \times 23238 & 288 \times 23238 \\ 558 \times 23238 & 558 \times 23238\end{bmatrix} \\
&= \begin{bmatrix}11897856 & 11897856 \\ 6692544 & 6692544 \\ 12966804 & 12966804\end{bmatrix}
\end{aligned}
$$
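The hand-computed values can be cross-checked without any deep learning library by perturbing one weight at a time. The sketch below is one way to do this, not part of the original text: `central_difference` is a hypothetical helper, and identity activations are assumed as in this example. Under that assumption $F$ is quadratic in any single weight, so a central difference with step 1 is exact in integer arithmetic.

```python
import copy


def affine(inputs, weights, bias):
    """Linear combination: l_j = sum_i inputs[i] * weights[i][j] + bias[j]."""
    return [
        sum(x_i * weights[i][j] for i, x_i in enumerate(inputs)) + bias[j]
        for j in range(len(bias))
    ]


def F(params, x):
    """Forward pass with identity activations, then F = (l_1 + l_2)^2."""
    a = x
    for weights, bias in params:
        a = affine(a, weights, bias)
    return sum(a) ** 2


# The point wb as (weights, bias) pairs for layers 1, 2 and 3.
params = [
    ([[1, 4, 7], [2, 5, 8]], [3, 6, 9]),
    ([[9, 7, 3], [6, 4, 5], [5, 2, 8]], [4, 1, 4]),
    ([[1, 4], [6, 8], [8, 1]], [2, 3]),
]
x = [2, 3]


def central_difference(layer, i, j):
    """(F(w + 1) - F(w - 1)) / 2 for weight w_ij of `layer` (all indices 0-based)."""
    plus, minus = copy.deepcopy(params), copy.deepcopy(params)
    plus[layer][0][i][j] += 1
    minus[layer][0][i][j] -= 1
    return (F(plus, x) - F(minus, x)) // 2


print(central_difference(0, 0, 0))  # w_11^(1): 7900920
print(central_difference(1, 1, 0))  # w_21^(2): 3369510
print(central_difference(2, 2, 1))  # w_32^(3): 12966804
```

With a nonlinear activation the difference would only approximate the derivative, and a small floating-point step would be needed instead of 1.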

At this point we have computed the gradient of $F$ at the point $wb$. The same gradient can be computed with the function `gradients` (`tf.gradients` in TensorFlow). The code is as follows:

    # -*- coding: utf-8 -*-
    # Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
    import numpy as np
    import tensorflow as tf

    # ----------------------------------------------------------------------
    # Input layer (layer 0)
    x = tf.placeholder(tf.float32, shape=[None, 2])
    # Weights from layer 0 to layer 1
    w1 = tf.Variable(tf.constant([[1.0, 4.0, 7.0], [2.0, 5.0, 8.0]], tf.float32))
    # Bias of layer 1
    b1 = tf.Variable(tf.constant([3.0, 6.0, 9.0], tf.float32))
    # Linear combination of layer 1
    l1 = tf.matmul(x, w1) + b1
    # Activation of layer 1 (identity in this example)
    sigma1 = l1
    # ----------------------------------------------------------------------
    # Weights from layer 1 to layer 2
    w2 = tf.Variable([[9.0, 7.0, 3.0], [6.0, 4.0, 5.0], [5.0, 2.0, 8.0]], dtype=tf.float32)
    # Bias of layer 2
    b2 = tf.Variable(tf.constant([4.0, 1.0, 4.0], tf.float32))
    # Linear combination of layer 2
    l2 = tf.matmul(sigma1, w2) + b2
    # Activation of layer 2 (identity in this example)
    sigma2 = l2
    # ----------------------------------------------------------------------
    # Weights from layer 2 to layer 3
    w3 = tf.Variable([[1.0, 4.0], [6.0, 8.0], [8.0, 1.0]], dtype=tf.float32)
    # Bias of layer 3
    b3 = tf.Variable(tf.constant([2.0, 3.0], tf.float32))
    # Linear combination of layer 3 (no activation on the output layer)
    l3 = tf.matmul(sigma2, w3) + b3
    # ----------------------------------------------------------------------
    # Construct the function F = (l_1 + l_2)^2
    F = tf.pow(tf.reduce_sum(l3), 2.0)
    # ----------------------------------------------------------------------
    sess = tf.Session()
    sess.run(tf.global_variables_initializer())
    # Compute the gradients
    w1_gra, b1_gra, w2_gra, b2_gra, w3_gra, b3_gra = tf.gradients(
        F, [w1, b1, w2, b2, w3, b3])
    w1_gra_arr, b1_gra_arr, w2_gra_arr, b2_gra_arr, w3_gra_arr, b3_gra_arr = sess.run(
        [w1_gra, b1_gra, w2_gra, b2_gra, w3_gra, b3_gra],
        feed_dict={x: np.array([[2.0, 3.0]], np.float32)})
    # ----------------------------------------------------------------------
    print("Gradient with respect to the layer-1 weights:")
    print(w1_gra_arr)
    print("Gradient with respect to the layer-1 biases:")
    print(b1_gra_arr)
    print("Gradient with respect to the layer-2 weights:")
    print(w2_gra_arr)
    print("Gradient with respect to the layer-2 biases:")
    print(b2_gra_arr)
    print("Gradient with respect to the layer-3 weights:")
    print(w3_gra_arr)
    print("Gradient with respect to the layer-3 biases:")
    print(b3_gra_arr)

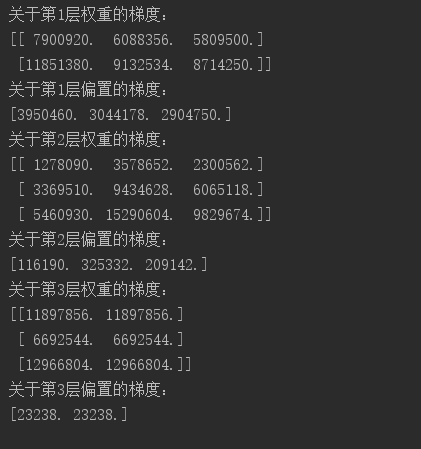

The output is as follows:

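The fully connected neural network in the example above has only one input. With multiple inputs, we can construct a function $F_i$ from the output for each input. If $F = \sum_i F_i$, then the partial derivatives of $F$ with respect to the weights and biases equal the sum of the corresponding partial derivatives of each $F_i$. In other words, we only need to apply gradient backpropagation to each $F_i$ to obtain its partial derivatives with respect to the weights and biases, and then sum the results. On closer inspection, the loss functions constructed for classification problems can be understood as sums of such functions, which is why a network can be trained with gradient backpropagation.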
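The additivity over inputs can be illustrated on the output-layer biases. In the sketch below, the first input and all weights come from this article's example, while the other two inputs are made up and the helper names are hypothetical. Identity activations are assumed, so the summed per-input gradient can be compared exactly against a central difference of the total $F$ with step 1.

```python
W1, B1 = [[1, 4, 7], [2, 5, 8]], [3, 6, 9]
W2, B2 = [[9, 7, 3], [6, 4, 5], [5, 2, 8]], [4, 1, 4]
W3 = [[1, 4], [6, 8], [8, 1]]


def affine(inputs, weights, bias):
    """Linear combination: l_j = sum_i inputs[i] * weights[i][j] + bias[j]."""
    return [
        sum(x_i * weights[i][j] for i, x_i in enumerate(inputs)) + bias[j]
        for j in range(len(bias))
    ]


def output_sum(x, b3):
    """l_1^(3) + l_2^(3) for one input, with identity activations."""
    a = affine(x, W1, B1)
    a = affine(a, W2, B2)
    return sum(affine(a, W3, b3))


def grad_b3_single(x, b3):
    """Gradient of F_i = (l_1 + l_2)^2 with respect to b^(3) for one input."""
    g = 2 * output_sum(x, b3)
    return [g, g]


def F_total(inputs, b3):
    """F = sum_i F_i over all inputs."""
    return sum(output_sum(x, b3) ** 2 for x in inputs)


inputs = [[2, 3], [1, -1], [0, 4]]  # only the first input is from the article
b3 = [2, 3]

# Sum the per-input gradients component by component.
summed = [sum(col) for col in zip(*(grad_b3_single(x, b3) for x in inputs))]

# Independent check on the first component: exact central difference of the total F.
check = (F_total(inputs, [b3[0] + 1, b3[1]]) - F_total(inputs, [b3[0] - 1, b3[1]])) // 2

print(summed[0] == check)  # True
```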

This article is excerpted from 《图解深度学习与神经网络》.
