Netty Source Code Study, Part 1: Pipeline, FrameDecoder, and the Reactor Multithreaded Model

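- An introductory Netty code example

- How messages flow through the pipeline in Netty

- Understanding Netty's codec base class, FrameDecoder

- Understanding Netty's Reactor model through the NIO Reactor multithreaded model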

An introductory Netty code example

Server side: Server

    import java.net.InetSocketAddress;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    import org.jboss.netty.bootstrap.ServerBootstrap;
    import org.jboss.netty.channel.ChannelPipeline;
    import org.jboss.netty.channel.ChannelPipelineFactory;
    import org.jboss.netty.channel.Channels;
    import org.jboss.netty.channel.socket.nio.NioServerSocketChannelFactory;
    import org.jboss.netty.handler.codec.string.StringDecoder;
    import org.jboss.netty.handler.codec.string.StringEncoder;

    public class Server {

        public static void main(String[] args) {
            // Bootstrap (service) class
            ServerBootstrap bootstrap = new ServerBootstrap();
            // Boss threads listen on the port; worker threads read and write data
            ExecutorService boss = Executors.newCachedThreadPool();
            ExecutorService worker = Executors.newCachedThreadPool();
            // Set the NIO socket channel factory
            bootstrap.setFactory(new NioServerSocketChannelFactory(boss, worker));
            // Set the pipeline factory
            bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
                @Override
                public ChannelPipeline getPipeline() throws Exception {
                    ChannelPipeline pipeline = Channels.pipeline();
                    pipeline.addLast("decoder", new StringDecoder());
                    pipeline.addLast("encoder", new StringEncoder());
                    pipeline.addLast("helloHandler", new HelloHandler());
                    return pipeline;
                }
            });
            bootstrap.bind(new InetSocketAddress(10101));
            System.out.println("start!!!");
        }
    }

HelloHandler extends SimpleChannelHandler, the base class for handlers that process both received and written messages. To make the calls to messageReceived, exceptionCaught, channelConnected, channelDisconnected, and channelClosed easy to observe, each method prints a line.

    import org.jboss.netty.channel.ChannelHandlerContext;
    import org.jboss.netty.channel.ChannelStateEvent;
    import org.jboss.netty.channel.ExceptionEvent;
    import org.jboss.netty.channel.MessageEvent;
    import org.jboss.netty.channel.SimpleChannelHandler;

    public class HelloHandler extends SimpleChannelHandler {

        /**
         * Receives a message.
         */
        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
            // The server pipeline contains a decoder and an encoder,
            // so the received message can be cast to String directly
            String s = (String) e.getMessage();
            System.out.println(s);
            // Write a reply
            ctx.getChannel().write("hi");
            super.messageReceived(ctx, e);
        }

        /**
         * Called when an exception is raised, for example by messageReceived.
         */
        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, ExceptionEvent e) throws Exception {
            System.out.println("exceptionCaught");
            super.exceptionCaught(ctx, e);
        }

        /**
         * A new connection.
         */
        @Override
        public void channelConnected(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("channelConnected");
            super.channelConnected(ctx, e);
        }

        /**
         * Fired only when a connection that was already established is closed.
         */
        @Override
        public void channelDisconnected(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("channelDisconnected");
            super.channelDisconnected(ctx, e);
        }

        /**
         * Fired when the channel is closed.
         */
        @Override
        public void channelClosed(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("channelClosed");
            super.channelClosed(ctx, e);
        }
    }

Client side:

    import java.net.InetSocketAddress;
    import java.util.Scanner;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    import org.jboss.netty.bootstrap.ClientBootstrap;
    import org.jboss.netty.channel.Channel;
    import org.jboss.netty.channel.ChannelFuture;
    import org.jboss.netty.channel.ChannelPipeline;
    import org.jboss.netty.channel.ChannelPipelineFactory;
    import org.jboss.netty.channel.Channels;
    import org.jboss.netty.channel.socket.nio.NioClientSocketChannelFactory;
    import org.jboss.netty.handler.codec.string.StringDecoder;
    import org.jboss.netty.handler.codec.string.StringEncoder;

    public class Client {

        public static void main(String[] args) {
            // Bootstrap (service) class
            ClientBootstrap bootstrap = new ClientBootstrap();
            // Thread pools
            ExecutorService boss = Executors.newCachedThreadPool();
            ExecutorService worker = Executors.newCachedThreadPool();
            // Socket channel factory
            bootstrap.setFactory(new NioClientSocketChannelFactory(boss, worker));
            // Pipeline factory
            bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
                @Override
                public ChannelPipeline getPipeline() throws Exception {
                    ChannelPipeline pipeline = Channels.pipeline();
                    pipeline.addLast("decoder", new StringDecoder());
                    pipeline.addLast("encoder", new StringEncoder());
                    pipeline.addLast("hiHandler", new HiHandler());
                    return pipeline;
                }
            });
            // Connect to the server
            ChannelFuture connect = bootstrap.connect(new InetSocketAddress("127.0.0.1", 10101));
            Channel channel = connect.getChannel();
            System.out.println("client start");
            Scanner scanner = new Scanner(System.in);
            while (true) {
                System.out.println("Enter a message:");
                channel.write(scanner.next());
            }
        }
    }

The client also needs a handler that extends SimpleChannelHandler.

    import org.jboss.netty.channel.ChannelHandlerContext;
    import org.jboss.netty.channel.ChannelStateEvent;
    import org.jboss.netty.channel.ExceptionEvent;
    import org.jboss.netty.channel.MessageEvent;
    import org.jboss.netty.channel.SimpleChannelHandler;

    public class HiHandler extends SimpleChannelHandler {

        /**
         * Receives a message.
         */
        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
            String s = (String) e.getMessage();
            System.out.println(s);
            super.messageReceived(ctx, e);
        }

        /**
         * Called when an exception is raised.
         */
        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, ExceptionEvent e) throws Exception {
            System.out.println("exceptionCaught");
            super.exceptionCaught(ctx, e);
        }

        /**
         * A new connection.
         */
        @Override
        public void channelConnected(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("channelConnected");
            super.channelConnected(ctx, e);
        }

        /**
         * Fired only when a connection that was already established is closed.
         */
        @Override
        public void channelDisconnected(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("channelDisconnected");
            super.channelDisconnected(ctx, e);
        }

        /**
         * Fired when the channel is closed.
         */
        @Override
        public void channelClosed(ChannelHandlerContext ctx, ChannelStateEvent e) throws Exception {
            System.out.println("channelClosed");
            super.channelClosed(ctx, e);
        }
    }

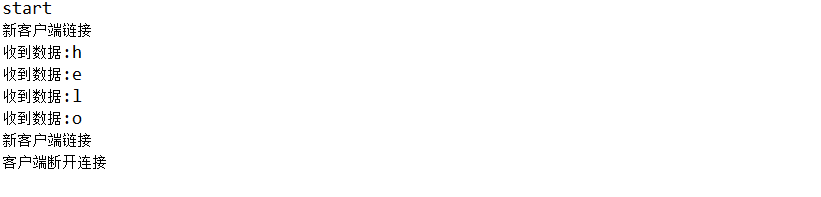

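Output:

After the server and then the client are started:

Both the client's HiHandler and the server's HelloHandler execute channelConnected.

When the client sends hello, the server receives the message, executes messageReceived, and replies with hi.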

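If the client disconnects abruptly, the server's attempt to read from the connection fails with an exception, which Netty delivers to exceptionCaught, so exceptionCaught is printed. channelDisconnected and channelClosed then run in that order. Note the difference between the two: channelDisconnected is called only when a connection that was actually established is broken, whereas channelClosed is called whenever the channel is closed, whether or not the connection ever succeeded, which makes it the place to release resources.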

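After extending SimpleChannelHandler, the following three methods typically serve these purposes:

- messageReceived: receive a message and send a reply.

- channelConnected: a new connection; often used to check whether the remote IP is on a blacklist.

- channelDisconnected: the connection was closed; a good place to clear a user's cached data when the user drops offline.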

How messages flow through the pipeline in Netty

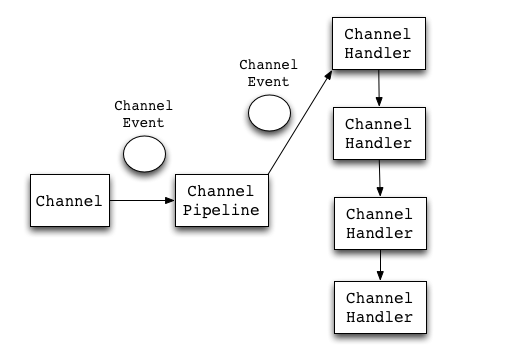

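In Netty, a Channel is the carrier of communication, and ChannelHandlers implement the logic that runs on a Channel. Each Channel has one ChannelPipeline; all ChannelHandlers are registered in the ChannelPipeline and organized in order. A ChannelEvent carries data or state: transmitted data corresponds to a MessageEvent, and a state change corresponds to a ChannelStateEvent. Operating on a Channel produces a ChannelEvent, which is sent to the ChannelPipeline. The ChannelPipeline picks a ChannelHandler to process it. That handler may in turn produce a new ChannelEvent, which flows on to the next ChannelHandler.

A piece of data starts out as a MessageEvent carrying an undecoded raw binary message in a ChannelBuffer. A handler then decodes it into a data object and creates a new MessageEvent, which is passed on to the next step.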

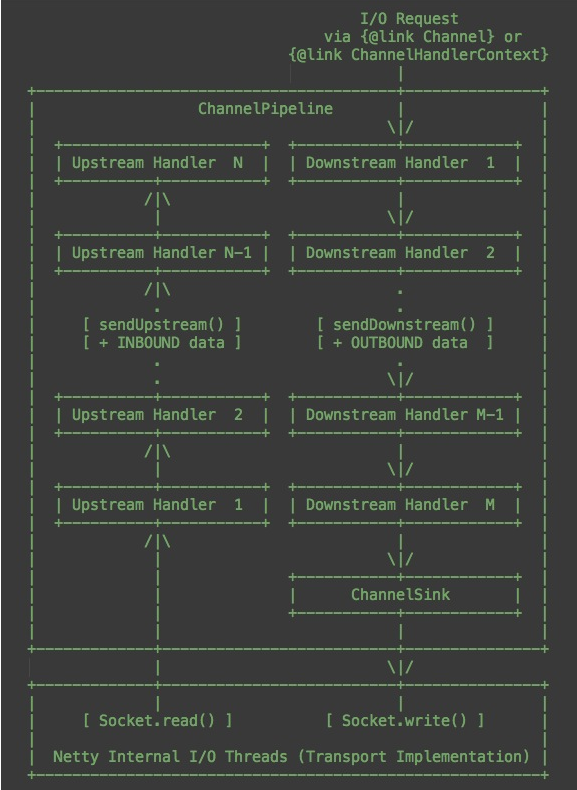

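A Netty ChannelPipeline has two paths: Upstream and Downstream. Upstream is the inbound direction: received messages and passive state changes are upstream events. Downstream is the outbound direction: sent messages and actively requested state changes are downstream events. The ChannelPipeline interface has two important methods, sendUpstream(ChannelEvent e) and sendDownstream(ChannelEvent e), which correspond to the two paths.

Accordingly, the ChannelHandlers in a ChannelPipeline come in two kinds: ChannelUpstreamHandler and ChannelDownstreamHandler. The handlers on each path are independent of those on the other. Each interface is very simple and declares a single method: ChannelUpstreamHandler.handleUpstream and ChannelDownstreamHandler.handleDownstream.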

The official Netty javadoc contains a diagram of this (in the ChannelPipeline interface).

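Downstream has a special component, the ChannelSink. Its key method, ChannelSink.eventSunk, accepts any ChannelEvent. "Sink" suggests the place where things sink to, so a ChannelSink can be read as "where a Channel's events sink to". That is indeed its role; put another way, it is a catch-all handler at the very end of the downstream path.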

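Note that within one path, a ChannelEvent does not automatically flow through every handler. The previous handler must explicitly call sendUpstream or sendDownstream (on its ChannelHandlerContext, as in the example that follows) to hand an event to the next handler. In other words, after a handler receives and processes a ChannelEvent, it must call sendUpstream or sendDownstream itself if processing should continue. If it does not, processing ends there, even if later handlers have not run yet. This mechanism gives maximum flexibility, but it also means the order of the handlers matters.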
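The explicit-forwarding rule can be illustrated without Netty at all. The following is a minimal plain-JDK sketch; `MiniPipelineDemo`, `Handler`, and `Context` are hypothetical names invented for this illustration and are not Netty classes. It only models the idea that an event reaches the next handler when the current handler forwards it.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model of upstream propagation; these types are illustrative, not Netty classes.
class MiniPipelineDemo {

    /** A handler receives an event plus a context it can use to forward the event. */
    interface Handler {
        void handleUpstream(Context ctx, String event);
    }

    /** Points at one position in the handler list. */
    static final class Context {
        private final List<Handler> handlers;
        private final int index;

        Context(List<Handler> handlers, int index) {
            this.handlers = handlers;
            this.index = index;
        }

        /** Hands the event to the next handler, if there is one. */
        void sendUpstream(String event) {
            int next = index + 1;
            if (next < handlers.size()) {
                handlers.get(next).handleUpstream(new Context(handlers, next), event);
            }
        }
    }

    /** Runs one event through three handlers and returns what each handler logged. */
    static List<String> run(boolean secondForwards) {
        List<String> log = new ArrayList<>();
        List<Handler> handlers = new ArrayList<>();
        // h1 always forwards a modified event
        handlers.add((ctx, e) -> { log.add("h1:" + e); ctx.sendUpstream(e + "!"); });
        // h2 forwards only when asked to
        handlers.add((ctx, e) -> { log.add("h2:" + e); if (secondForwards) ctx.sendUpstream(e); });
        // h3 is the last handler and just logs
        handlers.add((ctx, e) -> log.add("h3:" + e));
        // Entering the pipeline means calling the first handler
        handlers.get(0).handleUpstream(new Context(handlers, 0), "hello");
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run(true));   // [h1:hello, h2:hello!, h3:hello!]
        System.out.println(run(false));  // [h1:hello, h2:hello!]
    }
}
```

When the second handler does not forward, the third handler never sees the event, which is the behavior described above.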

Example, server:

    import java.net.InetSocketAddress;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    import org.jboss.netty.bootstrap.ServerBootstrap;
    import org.jboss.netty.channel.ChannelPipeline;
    import org.jboss.netty.channel.ChannelPipelineFactory;
    import org.jboss.netty.channel.Channels;
    import org.jboss.netty.channel.socket.nio.NioServerSocketChannelFactory;

    public class Server {

        public static void main(String[] args) {
            // Bootstrap (service) class
            ServerBootstrap bootstrap = new ServerBootstrap();
            // Boss threads listen on the port; worker threads read and write data
            ExecutorService boss = Executors.newCachedThreadPool();
            ExecutorService worker = Executors.newCachedThreadPool();
            // Set the NIO socket channel factory
            bootstrap.setFactory(new NioServerSocketChannelFactory(boss, worker));
            // Set the pipeline factory
            bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
                @Override
                public ChannelPipeline getPipeline() throws Exception {
                    ChannelPipeline pipeline = Channels.pipeline();
                    pipeline.addLast("handler1", new MyHandler1());
                    pipeline.addLast("handler2", new MyHandler2());
                    return pipeline;
                }
            });
            bootstrap.bind(new InetSocketAddress(30000));
            System.out.println("start!!!");
        }
    }

handler1 receives the message and uses the ChannelHandlerContext to forward messages to the next handler:

    import org.jboss.netty.buffer.ChannelBuffer;
    import org.jboss.netty.channel.ChannelHandlerContext;
    import org.jboss.netty.channel.MessageEvent;
    import org.jboss.netty.channel.SimpleChannelHandler;
    import org.jboss.netty.channel.UpstreamMessageEvent;

    public class MyHandler1 extends SimpleChannelHandler {

        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
            ChannelBuffer buffer = (ChannelBuffer) e.getMessage();
            // Copy only the readable bytes; array() may expose the whole backing
            // array or be unsupported for some buffer types
            byte[] array = new byte[buffer.readableBytes()];
            buffer.readBytes(array);
            String message = new String(array);
            System.out.println("handler1:" + message);
            // Forward two new events to the next upstream handler
            ctx.sendUpstream(new UpstreamMessageEvent(ctx.getChannel(), "abc", e.getRemoteAddress()));
            ctx.sendUpstream(new UpstreamMessageEvent(ctx.getChannel(), "efg", e.getRemoteAddress()));
        }
    }

handler2 reads the messages forwarded by handler1:

    import org.jboss.netty.channel.ChannelHandlerContext;
    import org.jboss.netty.channel.MessageEvent;
    import org.jboss.netty.channel.SimpleChannelHandler;

    public class MyHandler2 extends SimpleChannelHandler {

        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
            String message = (String) e.getMessage();
            System.out.println("handler2:" + message);
        }
    }

Client:

    import java.net.Socket;

    public class Client {

        public static void main(String[] args) throws Exception {
            Socket socket = new Socket("127.0.0.1", 30000);
            socket.getOutputStream().write("hello".getBytes());
            socket.close();
        }
    }

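Output:

Finally, note that upstream handlers run from front to back, while downstream handlers run from back to front, so the order in which handlers are added to the ChannelPipeline in Server matters. If handler1 and handler2 in the Server above are added in the opposite order and handler2 does not call sendUpstream(), the message is received only once, in handler2.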
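To make the ordering rule concrete, here is a small plain-JDK sketch of the traversal order only. `TraversalOrderDemo` and `Entry` are hypothetical names for this illustration, not Netty types. It assumes, as in the first server example of this post, a pipeline registered as decoder (upstream only), encoder (downstream only), helloHandler (both), and it assumes an outbound write issued on the channel enters at the tail of the pipeline.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative model of traversal order only; none of these types are Netty classes.
class TraversalOrderDemo {

    /** A named pipeline entry that handles upstream events, downstream events, or both. */
    static final class Entry {
        final String name;
        final boolean upstream;
        final boolean downstream;

        Entry(String name, boolean upstream, boolean downstream) {
            this.name = name;
            this.upstream = upstream;
            this.downstream = downstream;
        }
    }

    /** Inbound events walk from head to tail, skipping downstream-only entries. */
    static List<String> upstreamOrder(List<Entry> pipeline) {
        List<String> visited = new ArrayList<>();
        for (int i = 0; i < pipeline.size(); i++) {
            if (pipeline.get(i).upstream) {
                visited.add(pipeline.get(i).name);
            }
        }
        return visited;
    }

    /** Outbound events walk from tail to head, skipping upstream-only entries. */
    static List<String> downstreamOrder(List<Entry> pipeline) {
        List<String> visited = new ArrayList<>();
        for (int i = pipeline.size() - 1; i >= 0; i--) {
            if (pipeline.get(i).downstream) {
                visited.add(pipeline.get(i).name);
            }
        }
        return visited;
    }

    /** Same registration order as the first server example in this post. */
    static List<Entry> examplePipeline() {
        return Arrays.asList(
                new Entry("decoder", true, false),
                new Entry("encoder", false, true),
                new Entry("helloHandler", true, true));
    }

    public static void main(String[] args) {
        System.out.println("upstream:   " + upstreamOrder(examplePipeline()));   // [decoder, helloHandler]
        System.out.println("downstream: " + downstreamOrder(examplePipeline())); // [helloHandler, encoder]
    }
}
```

Under these assumptions, a received message is decoded before helloHandler sees it, and the "hi" reply passes through the encoder on its way out, which is why the decoder and encoder are added before the business handler.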

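Understanding Netty's codec base class, FrameDecoder

FrameDecoder extends SimpleChannelUpstreamHandler. What makes it special is that the object returned by the decode method of a FrameDecoder subclass is the object that gets passed on to the next handler, through an indirect call to sendUpstream made while messageReceived runs. Here is FrameDecoder's messageReceived: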

    @Override
    public void messageReceived(ChannelHandlerContext ctx, MessageEvent e) throws Exception {
        Object m = e.getMessage();
        if (!(m instanceof ChannelBuffer)) {
            ctx.sendUpstream(e);
            return;
        }

        ChannelBuffer input = (ChannelBuffer) m;
        if (!input.readable()) {
            return;
        }

        ChannelBuffer cumulation = cumulation(ctx);
        if (cumulation.readable()) {
            cumulation.discardReadBytes();
            cumulation.writeBytes(input);
            callDecode(ctx, e.getChannel(), cumulation, e.getRemoteAddress());
        } else {
            callDecode(ctx, e.getChannel(), input, e.getRemoteAddress());
            if (input.readable()) {
                cumulation.writeBytes(input);
            }
        }
    }

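What happens when the data in the buffer has not been fully consumed? FrameDecoder's messageReceived keeps a cumulation buffer, which is itself a ChannelBuffer. If cumulation is readable (it still holds bytes left over from earlier reads), cumulation.discardReadBytes() frees the space between index 0 and readerIndex and adjusts readerIndex and writerIndex accordingly; the newly received data is then appended to cumulation and callDecode runs on it. If cumulation is not readable, callDecode runs directly on the incoming buffer, and any bytes still unread afterwards are written into cumulation for the next round.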
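The discard-then-append step has a close analogue in the JDK: `java.nio.ByteBuffer.compact()`. The sketch below is an analogy only and does not use Netty's ChannelBuffer; `CumulationDemo` and `consumeThenAppend` are hypothetical names, and the fixed 16-byte capacity is an assumption chosen to keep the example short.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// JDK analogy for "discard read bytes, then append new data"; not Netty code.
class CumulationDemo {

    /** Consumes `consume` bytes of `initial`, compacts, appends `more`, and returns what is left unread. */
    static String consumeThenAppend(String initial, int consume, String more) {
        ByteBuffer cumulation = ByteBuffer.allocate(16);
        cumulation.put(initial.getBytes(StandardCharsets.US_ASCII));
        cumulation.flip();                       // switch to reading
        for (int i = 0; i < consume; i++) {
            cumulation.get();                    // pretend a decoder consumed these bytes
        }
        cumulation.compact();                    // drop consumed bytes, move the rest to index 0, switch to writing
        cumulation.put(more.getBytes(StandardCharsets.US_ASCII));
        cumulation.flip();                       // switch back to reading
        byte[] rest = new byte[cumulation.remaining()];
        cumulation.get(rest);
        return new String(rest, StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) {
        // "abcd" was consumed, "ef" was left over, "ghi" arrived later
        System.out.println(consumeThenAppend("abcdef", 4, "ghi")); // efghi
    }
}
```

The leftover bytes stay in front of the newly arrived bytes, so the next decode attempt sees one contiguous stream.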

Implementation of cumulation:

    private ChannelBuffer cumulation(ChannelHandlerContext ctx) {
        ChannelBuffer c = cumulation;
        if (c == null) {
            c = ChannelBuffers.dynamicBuffer(
                    ctx.getChannel().getConfig().getBufferFactory());
            cumulation = c;
        }
        return c;
    }

Implementation of callDecode:

    private void callDecode(
            ChannelHandlerContext context, Channel channel,
            ChannelBuffer cumulation, SocketAddress remoteAddress) throws Exception {

        while (cumulation.readable()) {
            int oldReaderIndex = cumulation.readerIndex();
            Object frame = decode(context, channel, cumulation);
            if (frame == null) {
                if (oldReaderIndex == cumulation.readerIndex()) {
                    // Seems like more data is required.
                    // Let us wait for the next notification.
                    break;
                } else {
                    // Previous data has been discarded.
                    // Probably it is reading on.
                    continue;
                }
            } else if (oldReaderIndex == cumulation.readerIndex()) {
                throw new IllegalStateException(
                        "decode() method must read at least one byte " +
                        "if it returned a frame (caused by: " + getClass() + ")");
            }

            unfoldAndFireMessageReceived(context, remoteAddress, frame);
        }

        if (!cumulation.readable()) {
            this.cumulation = null;
        }
    }

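The method is a loop. It first saves the reader index. decode is an abstract method left to subclasses, which implement the actual framing algorithm there. If the subclass could not read a complete frame it returns null, which is why the next check distinguishes two cases: if no bytes were consumed at all, there is nothing more to do until the next messageReceived brings more data; if some bytes were consumed, the loop tries to decode the next frame. When a frame is decoded, it is fired to the next handler through unfoldAndFireMessageReceived; unfold controls whether a decoded result that is a collection of messages is unpacked and fired one message at a time. Finally, if cumulation is no longer readable, the cumulation field is set to null.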
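The same loop can be sketched with plain JDK buffers. The example below is not FrameDecoder itself: `LengthFrameDemo`, `decode`, `feed`, and `encode` are hypothetical names, and the framing format (a 4-byte length followed by a UTF-8 body) is an assumption chosen for illustration. It shows the two key behaviors described above: `decode` returns null without consuming anything when a frame is incomplete, and the loop stops as soon as no progress is made.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-JDK sketch of cumulative frame decoding; not Netty's FrameDecoder.
class LengthFrameDemo {

    // Bytes received but not yet decoded, kept in write mode between calls.
    // Fixed capacity keeps the sketch short; a real decoder would grow the buffer.
    private final ByteBuffer cumulation = ByteBuffer.allocate(1024);

    /** Tries to decode one frame (4-byte length + UTF-8 body); returns null if more bytes are needed. */
    static String decode(ByteBuffer in) {
        if (in.remaining() < 4) {
            return null;                   // length prefix incomplete, nothing consumed
        }
        in.mark();
        int length = in.getInt();
        if (in.remaining() < length) {
            in.reset();                    // body incomplete: rewind so nothing is consumed
            return null;
        }
        byte[] body = new byte[length];
        in.get(body);
        return new String(body, StandardCharsets.UTF_8);
    }

    /** Appends a received chunk and returns every frame that is now complete. */
    List<String> feed(byte[] chunk) {
        cumulation.put(chunk);
        cumulation.flip();                 // switch to reading
        List<String> frames = new ArrayList<>();
        while (cumulation.hasRemaining()) {
            int oldPosition = cumulation.position();
            String frame = decode(cumulation);
            if (frame == null) {
                if (oldPosition == cumulation.position()) {
                    break;                 // no progress: wait for the next chunk
                }
                continue;                  // bytes were consumed without a frame: try again
            }
            frames.add(frame);
        }
        cumulation.compact();              // keep the unread tail for the next call
        return frames;
    }

    /** Encodes messages as consecutive length-prefixed frames. */
    static byte[] encode(String... messages) {
        ByteBuffer out = ByteBuffer.allocate(1024);
        for (String m : messages) {
            byte[] body = m.getBytes(StandardCharsets.UTF_8);
            out.putInt(body.length).put(body);
        }
        byte[] bytes = new byte[out.position()];
        out.flip();
        out.get(bytes);
        return bytes;
    }

    public static void main(String[] args) {
        byte[] wire = encode("hi", "netty");
        LengthFrameDemo decoder = new LengthFrameDemo();
        // Split the stream in the middle of the second frame's length prefix
        System.out.println(decoder.feed(Arrays.copyOfRange(wire, 0, 8)));           // [hi]
        System.out.println(decoder.feed(Arrays.copyOfRange(wire, 8, wire.length))); // [netty]
    }
}
```

Splitting the byte stream at an arbitrary point does not change which frames come out, only when they come out.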

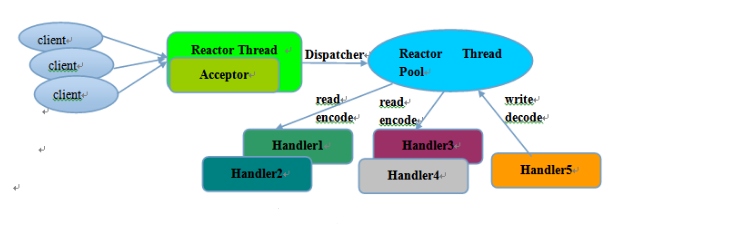

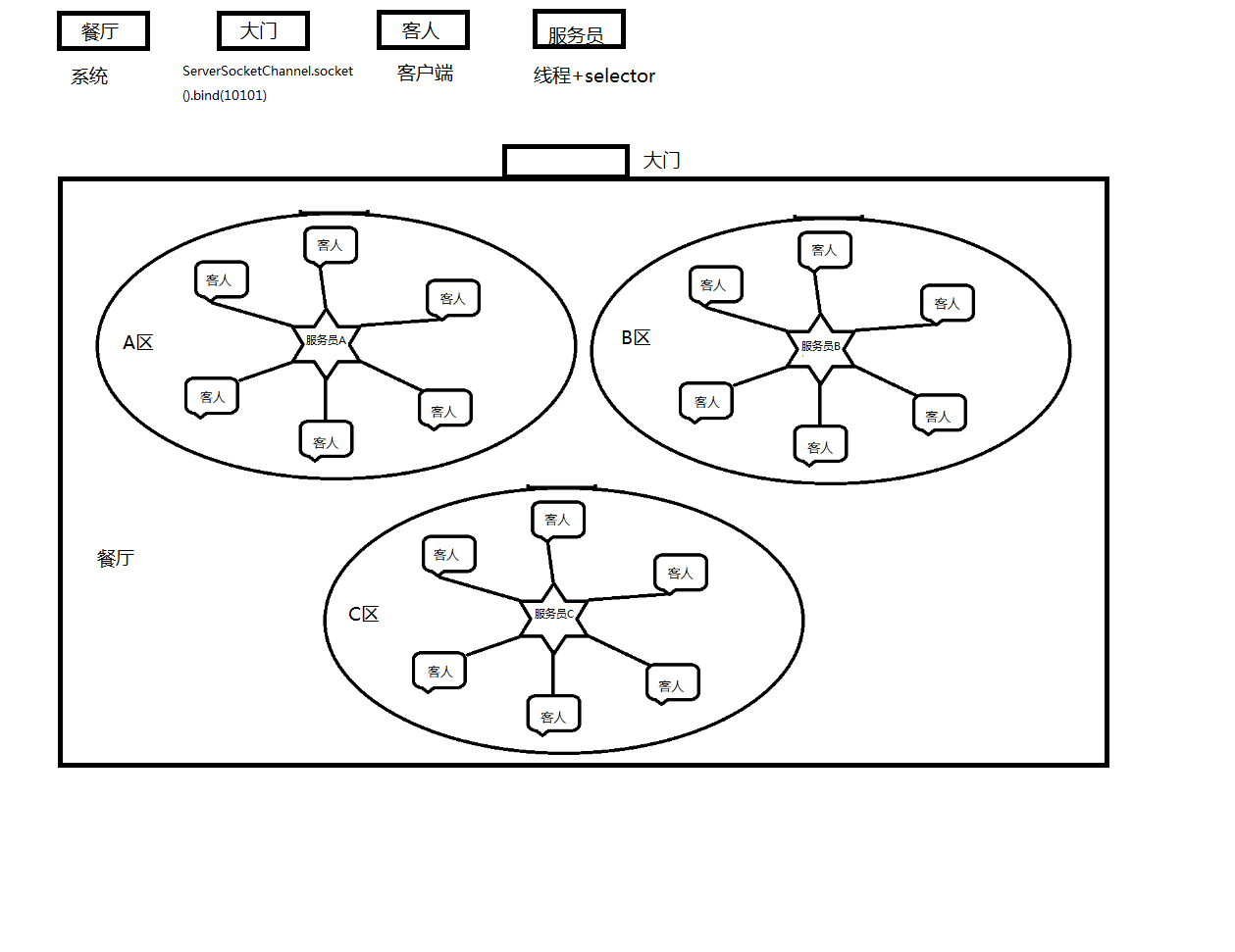

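Understanding Netty's Reactor model through the NIO Reactor multithreaded model

The NIO Reactor multithreaded model is similar in spirit to Netty's implementation. A ServerSocketChannel is registered for OP_ACCEPT with one Selector. The thread owning that Selector takes every SocketChannel accepted through the ServerSocketChannel and registers it for OP_READ on another Selector, wrapping each SocketChannel in a task that runs on a thread pool.

Think of a restaurant as the server and the customers as clients. The front door is the ServerSocketChannel, and each waiter is a thread that owns a Selector. The NIO multithreaded model is a restaurant with several waiters (several threads, each with a Selector). First, one Selector-owning thread acts as the greeter at the door: a ServerSocketChannel is registered with this Selector for OP_ACCEPT (the greeter is told which door to watch). When the greeter receives a customer coming through that door, it hands the customer's SocketChannel to one of the floor waiters in the hall, registering it for OP_READ on that waiter's Selector. The client likewise registers its own SocketChannel for OP_READ. When a client has a request (a customer in some section needs something), the select() call of the waiter responsible for that customer returns, and the waiter finds the data the customer sent through the SelectionKey and processes it.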

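Several greeters might try to register SocketChannels for OP_READ with the same floor waiter at the same time. To make this safe, each floor waiter has a thread-safe task queue, and a greeter registers a SocketChannel's OP_READ interest by submitting a Runnable task to that queue.

How does a floor waiter work? Many SocketChannels are registered with it for OP_READ. The floor waiter is itself a thread: in its run method it loops, calling select(500) (which waits at most 500 ms) to see whether any of these SocketChannels has I/O ready, and then checking whether its own task queue holds any pending registration tasks.

In this example, the greeter registers a SocketChannel with a floor waiter by submitting a Runnable task. Each floor waiter maintains a task queue holding the OP_READ registrations that have not been performed yet, and it keeps looping over two questions: does the Selector have I/O to process, and has a greeter submitted a task that needs to register OP_READ?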
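The hand-off through a task queue can be sketched in a few lines of JDK code. The example below is a single-threaded illustration, not the server from this post: `TaskQueueDemo`, `registerTask`, `runOneIteration`, and `demo` are hypothetical names, and an unbound ServerSocketChannel stands in for a channel to be registered. It assumes the documented behavior of `Selector.wakeup()`, namely that a wakeup issued while no select is in progress makes the next select return immediately.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.ClosedChannelException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch of "submit a registration task, then wake the selector"; names are illustrative.
class TaskQueueDemo {
    private final Selector selector;
    private final Queue<Runnable> taskQueue = new ConcurrentLinkedQueue<>();
    private final AtomicBoolean wakenUp = new AtomicBoolean();
    private final AtomicInteger wakeupCalls = new AtomicInteger();

    TaskQueueDemo() throws IOException {
        this.selector = Selector.open();
    }

    /** Called by other threads: queue the task and wake the selector at most once. */
    void registerTask(Runnable task) {
        taskQueue.add(task);
        if (wakenUp.compareAndSet(false, true)) {
            wakeupCalls.incrementAndGet();
            selector.wakeup();
        }
    }

    /** One pass of the selector loop: select, then drain the task queue. Returns the number of tasks run. */
    int runOneIteration() throws IOException {
        wakenUp.set(false);
        selector.select(500);              // returns early because of the pending wakeup
        int ran = 0;
        for (Runnable task = taskQueue.poll(); task != null; task = taskQueue.poll()) {
            task.run();                    // registrations happen on the selector's own thread
            ran++;
        }
        return ran;
    }

    /** Returns {wakeup calls, tasks run, keys registered} for two queued tasks. */
    static int[] demo() {
        try {
            TaskQueueDemo loop = new TaskQueueDemo();
            ServerSocketChannel server = ServerSocketChannel.open();
            try {
                server.configureBlocking(false);
                loop.registerTask(() -> {
                    try {
                        server.register(loop.selector, SelectionKey.OP_ACCEPT);
                    } catch (ClosedChannelException e) {
                        throw new IllegalStateException(e);
                    }
                });
                loop.registerTask(() -> { });   // second task: selector is already marked as woken
                int ran = loop.runOneIteration();
                return new int[] {loop.wakeupCalls.get(), ran, loop.selector.keys().size()};
            } finally {
                server.close();
                loop.selector.close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println("wakeups=" + r[0] + " tasksRun=" + r[1] + " keys=" + r[2]); // wakeups=1 tasksRun=2 keys=1
    }
}
```

Two tasks are queued but `wakeup()` is called only once, and both tasks run on the thread that owns the Selector.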

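A simplified, Netty-like server looks like this:

AbstractNioSelector is the abstract base class for the greeter and the floor waiter. It implements Runnable and holds an Executor thread pool. When an instance of a subclass, NioServerBoss (the greeter) or NioServerWorker (the floor waiter), is created, the superclass constructor runs; it calls openSelector(), which in turn executes executor.execute(this). So as soon as a waiter object is created, it is already running as a task on a pool thread. Every waiter then loops forever over the following four steps:

    wakenUp.set(false);
    select(selector);
    processTaskQueue();
    process(selector);

Here wakenUp is a flag used when a SocketChannel registration task is submitted to the Selector: it records whether the Selector has already been woken up, so that selector.wakeup() is not called again while a wakeup is still pending.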

    import java.io.IOException;
    import java.nio.channels.Selector;
    import java.util.Queue;
    import java.util.concurrent.ConcurrentLinkedQueue;
    import java.util.concurrent.Executor;
    import java.util.concurrent.atomic.AtomicBoolean;

    import com.cn.pool.NioSelectorRunnablePool;

    public abstract class AbstractNioSelector implements Runnable {

        /**
         * Thread pool.
         */
        private final Executor executor;

        /**
         * Selector.
         */
        protected Selector selector;

        /**
         * Flag recording whether the selector has been woken up.
         */
        protected final AtomicBoolean wakenUp = new AtomicBoolean();

        /**
         * Task queue.
         */
        private final Queue<Runnable> taskQueue = new ConcurrentLinkedQueue<Runnable>();

        /**
         * Thread name.
         */
        private String threadName;

        /**
         * Thread management object.
         */
        protected NioSelectorRunnablePool selectorRunnablePool;

        AbstractNioSelector(Executor executor, String threadName, NioSelectorRunnablePool selectorRunnablePool) {
            this.executor = executor;
            this.threadName = threadName;
            this.selectorRunnablePool = selectorRunnablePool;
            openSelector();
        }

        /**
         * Opens the selector and starts the thread.
         */
        private void openSelector() {
            try {
                this.selector = Selector.open();
            } catch (IOException e) {
                throw new RuntimeException("Failed to create a selector.", e);
            }
            executor.execute(this);
        }

        @Override
        public void run() {
            Thread.currentThread().setName(this.threadName);
            while (true) {
                try {
                    wakenUp.set(false);
                    select(selector);
                    processTaskQueue();
                    process(selector);
                } catch (Exception e) {
                    // ignore
                }
            }
        }

        /**
         * Registers a task and wakes up the selector.
         *
         * @param task the task to run on the selector thread
         */
        protected final void registerTask(Runnable task) {
            taskQueue.add(task);
            Selector selector = this.selector;
            if (selector != null) {
                if (wakenUp.compareAndSet(false, true)) {
                    selector.wakeup();
                }
            } else {
                taskQueue.remove(task);
            }
        }

        /**
         * Runs the tasks in the queue.
         */
        private void processTaskQueue() {
            for (;;) {
                final Runnable task = taskQueue.poll();
                if (task == null) {
                    break;
                }
                task.run();
            }
        }

        /**
         * Returns the thread management object.
         */
        public NioSelectorRunnablePool getSelectorRunnablePool() {
            return selectorRunnablePool;
        }

        /**
         * Abstract select method.
         *
         * @param selector the selector to select on
         * @return the number of ready keys
         * @throws IOException if the select operation fails
         */
        protected abstract int select(Selector selector) throws IOException;

        /**
         * Business processing for the selector.
         *
         * @param selector the selector whose ready keys are processed
         * @throws IOException if an I/O operation fails
         */
        protected abstract void process(Selector selector) throws IOException;
    }

Greeter: its Selector does a different job from a floor waiter's, so the two classes implement process(Selector selector) differently.

    import java.io.IOException;
    import java.nio.channels.ClosedChannelException;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.Selector;
    import java.nio.channels.ServerSocketChannel;
    import java.nio.channels.SocketChannel;
    import java.util.Iterator;
    import java.util.Set;
    import java.util.concurrent.Executor;

    import com.cn.pool.Boss;
    import com.cn.pool.NioSelectorRunnablePool;
    import com.cn.pool.Worker;

    public class NioServerBoss extends AbstractNioSelector implements Boss {

        public NioServerBoss(Executor executor, String threadName, NioSelectorRunnablePool selectorRunnablePool) {
            super(executor, threadName, selectorRunnablePool);
        }

        @Override
        protected void process(Selector selector) throws IOException {
            Set<SelectionKey> selectedKeys = selector.selectedKeys();
            if (selectedKeys.isEmpty()) {
                return;
            }

            for (Iterator<SelectionKey> i = selectedKeys.iterator(); i.hasNext();) {
                SelectionKey key = i.next();
                i.remove();
                ServerSocketChannel server = (ServerSocketChannel) key.channel();
                // New client
                SocketChannel channel = server.accept();
                // Switch to non-blocking mode
                channel.configureBlocking(false);
                // Pick a worker
                Worker nextworker = getSelectorRunnablePool().nextWorker();
                // Submit the task that registers the new client
                nextworker.registerNewChannelTask(channel);

                System.out.println("New client connected");
            }
        }

        public void registerAcceptChannelTask(final ServerSocketChannel serverChannel) {
            final Selector selector = this.selector;
            registerTask(new Runnable() {
                @Override
                public void run() {
                    try {
                        // Register serverChannel with the selector
                        serverChannel.register(selector, SelectionKey.OP_ACCEPT);
                    } catch (ClosedChannelException e) {
                        e.printStackTrace();
                    }
                }
            });
        }

        @Override
        protected int select(Selector selector) throws IOException {
            return selector.select();
        }
    }

Floor waiter: polls its Selector for read events and reads the data.

    import java.io.IOException;
    import java.nio.ByteBuffer;
    import java.nio.channels.ClosedChannelException;
    import java.nio.channels.SelectionKey;
    import java.nio.channels.Selector;
    import java.nio.channels.SocketChannel;
    import java.util.Iterator;
    import java.util.Set;
    import java.util.concurrent.Executor;

    import com.cn.pool.NioSelectorRunnablePool;
    import com.cn.pool.Worker;

    public class NioServerWorker extends AbstractNioSelector implements Worker {

        public NioServerWorker(Executor executor, String threadName, NioSelectorRunnablePool selectorRunnablePool) {
            super(executor, threadName, selectorRunnablePool);
        }

        @Override
        protected void process(Selector selector) throws IOException {
            Set<SelectionKey> selectedKeys = selector.selectedKeys();
            if (selectedKeys.isEmpty()) {
                return;
            }
            Iterator<SelectionKey> ite = selectedKeys.iterator();
            while (ite.hasNext()) {
                SelectionKey key = ite.next();
                // Remove the key so it is not processed twice
                ite.remove();

                // The socket channel on which the event occurred
                SocketChannel channel = (SocketChannel) key.channel();

                // Number of bytes read
                int ret = 0;
                boolean failure = true;
                ByteBuffer buffer = ByteBuffer.allocate(1024);
                // Read the data
                try {
                    ret = channel.read(buffer);
                    failure = false;
                } catch (Exception e) {
                    // ignore
                }
                // Check whether the connection has been closed
                if (ret <= 0 || failure) {
                    key.cancel();
                    System.out.println("Client disconnected");
                } else {
                    // Decode only the bytes actually read, not the whole 1024-byte array
                    System.out.println("Received data: " + new String(buffer.array(), 0, ret));

                    // Send a reply back to the client
                    ByteBuffer outBuffer = ByteBuffer.wrap("received\n".getBytes());
                    channel.write(outBuffer);
                }
            }
        }

        /**
         * Adds a new client socket.
         */
        public void registerNewChannelTask(final SocketChannel channel) {
            final Selector selector = this.selector;
            registerTask(new Runnable() {
                @Override
                public void run() {
                    try {
                        // Register the client with the selector
                        channel.register(selector, SelectionKey.OP_READ);
                    } catch (ClosedChannelException e) {
                        e.printStackTrace();
                    }
                }
            });
        }

        @Override
        protected int select(Selector selector) throws IOException {
            return selector.select(500);
        }
    }

Helper class: binds a port on a ServerSocketChannel. This example uses only one ServerSocketChannel and one greeter.

    import java.net.SocketAddress;
    import java.nio.channels.ServerSocketChannel;

    import com.cn.pool.Boss;
    import com.cn.pool.NioSelectorRunnablePool;

    public class ServerBootstrap {

        private NioSelectorRunnablePool selectorRunnablePool;

        public ServerBootstrap(NioSelectorRunnablePool selectorRunnablePool) {
            this.selectorRunnablePool = selectorRunnablePool;
        }

        /**
         * Binds the port.
         *
         * @param localAddress the local address to bind to
         */
        public void bind(final SocketAddress localAddress) {
            try {
                // Open a ServerSocket channel
                ServerSocketChannel serverChannel = ServerSocketChannel.open();
                // Switch the channel to non-blocking mode
                serverChannel.configureBlocking(false);
                // Bind the channel's ServerSocket to the port
                serverChannel.socket().bind(localAddress);

                // Pick a boss thread
                Boss nextBoss = selectorRunnablePool.nextBoss();
                // Register the ServerSocket channel with the boss
                nextBoss.registerAcceptChannelTask(serverChannel);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

Interfaces for the floor waiter and the greeter:

    // Worker.java
    import java.nio.channels.SocketChannel;

    public interface Worker {

        /**
         * Adds a new client session.
         *
         * @param channel the accepted client channel
         */
        public void registerNewChannelTask(SocketChannel channel);
    }

    // Boss.java
    import java.nio.channels.ServerSocketChannel;

    public interface Boss {

        /**
         * Adds a new ServerSocket.
         *
         * @param serverChannel the server channel to accept connections on
         */
        public void registerAcceptChannelTask(ServerSocketChannel serverChannel);
    }

The NioSelectorRunnablePool class configures how many floor waiters and greeters there are, and passes the thread pools to them.

    import java.util.concurrent.Executor;
    import java.util.concurrent.atomic.AtomicInteger;

    import com.cn.NioServerBoss;
    import com.cn.NioServerWorker;

    public class NioSelectorRunnablePool {

        /**
         * Boss thread array.
         */
        private final AtomicInteger bossIndex = new AtomicInteger();
        private Boss[] bosses;

        /**
         * Worker thread array.
         */
        private final AtomicInteger workerIndex = new AtomicInteger();
        private Worker[] workeres;

        public NioSelectorRunnablePool(Executor boss, Executor worker) {
            initBoss(boss, 1);
            initWorker(worker, Runtime.getRuntime().availableProcessors() * 2);
        }

        /**
         * Initializes the boss threads.
         *
         * @param boss  the executor that runs boss threads
         * @param count the number of boss threads
         */
        private void initBoss(Executor boss, int count) {
            this.bosses = new NioServerBoss[count];
            for (int i = 0; i < bosses.length; i++) {
                bosses[i] = new NioServerBoss(boss, "boss thread " + (i + 1), this);
            }
        }

        /**
         * Initializes the worker threads.
         *
         * @param worker the executor that runs worker threads
         * @param count  the number of worker threads
         */
        private void initWorker(Executor worker, int count) {
            this.workeres = new NioServerWorker[count];
            for (int i = 0; i < workeres.length; i++) {
                workeres[i] = new NioServerWorker(worker, "worker thread " + (i + 1), this);
            }
        }

        /**
         * Returns the next worker.
         */
        public Worker nextWorker() {
            return workeres[Math.abs(workerIndex.getAndIncrement() % workeres.length)];
        }

        /**
         * Returns the next boss.
         */
        public Boss nextBoss() {
            return bosses[Math.abs(bossIndex.getAndIncrement() % bosses.length)];
        }
    }
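The expression `Math.abs(index.getAndIncrement() % length)` used by nextWorker() and nextBoss() deserves a closer look. The sketch below isolates it; `RoundRobinDemo` and `pick` are hypothetical names, and the three worker labels are placeholders. Taking the remainder before the absolute value matters, because `Math.abs(Integer.MIN_VALUE)` is itself negative, so applying `Math.abs` first could still yield a negative index once the counter overflows.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Isolated sketch of the round-robin selection; names are illustrative.
class RoundRobinDemo {
    private final AtomicInteger index;
    private final String[] workers;

    RoundRobinDemo(int startIndex, String... workers) {
        this.index = new AtomicInteger(startIndex);
        this.workers = workers;
    }

    /** Same expression as nextWorker(): remainder first, then absolute value. */
    String next() {
        return workers[Math.abs(index.getAndIncrement() % workers.length)];
    }

    /** Picks `count` workers from a pool of three, starting the counter at `startIndex`. */
    static List<String> pick(int startIndex, int count) {
        RoundRobinDemo pool = new RoundRobinDemo(startIndex, "w0", "w1", "w2");
        List<String> picked = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            picked.add(pool.next());
        }
        return picked;
    }

    public static void main(String[] args) {
        System.out.println(pick(0, 4));                     // [w0, w1, w2, w0]
        // The counter overflows to Integer.MIN_VALUE on the third pick
        System.out.println(pick(Integer.MAX_VALUE - 1, 5)); // [w0, w1, w2, w1, w0]
    }
}
```

After the counter overflows, every index is still valid, although the rotation direction reverses; for simple load spreading that is usually acceptable.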

Test: create two thread pools yourself and pass them to NioSelectorRunnablePool.

    import java.net.InetSocketAddress;
    import java.util.concurrent.Executors;

    import com.cn.pool.NioSelectorRunnablePool;

    public class Start {

        public static void main(String[] args) {
            // Initialize the threads
            NioSelectorRunnablePool nioSelectorRunnablePool =
                    new NioSelectorRunnablePool(Executors.newCachedThreadPool(), Executors.newCachedThreadPool());
            // Create the bootstrap (service) class
            ServerBootstrap bootstrap = new ServerBootstrap(nioSelectorRunnablePool);
            // Bind the port
            bootstrap.bind(new InetSocketAddress(10101));
            System.out.println("start");
        }
    }

Output:
