beeline connection to HiveServer2 fails: User: root is not allowed to impersonate root

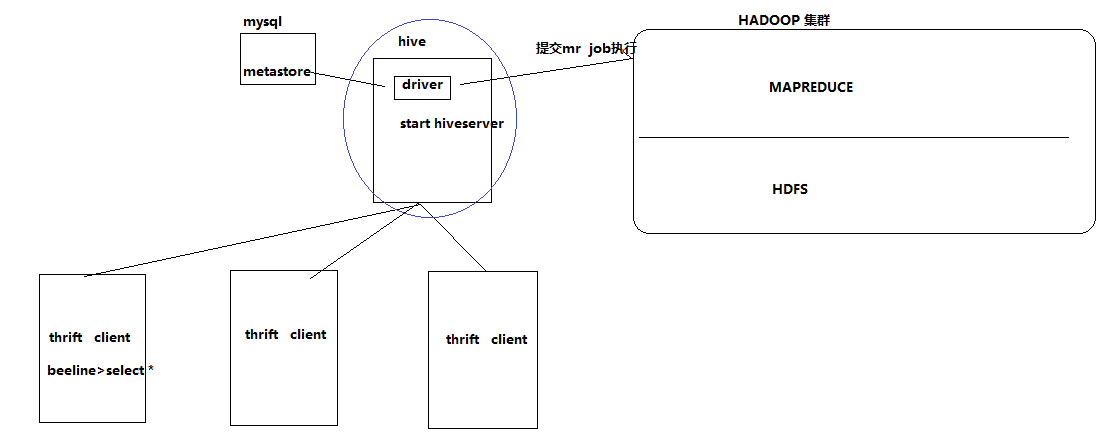

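Recently I have been building an HA cluster in production and ran into quite a few pitfalls, which I will write up properly over the coming days. First, one of the big ones. Our requirement: access the Hive data warehouse from multiple languages through Hive's Thrift service. As a first step, you need a working connection between the server side (the node running hiveserver2) and the client side (the node running beeline). The overall layout is shown below: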

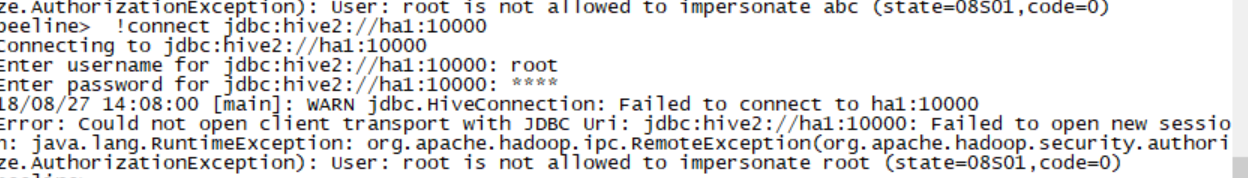

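Getting this configured, however, was full of twists and turns. I started hiveserver2 on data node 1, then started beeline on data node 3 and connected to data node 1. The following error appeared: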

Pitfall:

```
Error: Could not open client transport with JDBC Uri: jdbc:hive2://ha1:10000/hive: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root(state=08S01,code=0)
```

Solution (the usual fix found online):

Add the following properties to Hadoop's configuration file core-site.xml:

```xml
<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>
```

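For the error `User: root is not allowed to impersonate root(state=08S01,code=0)`, the xxx in hadoop.proxyuser.xxx.hosts and hadoop.proxyuser.xxx.groups must be root; in general, set it to whatever user appears as `User: xxx` in your own error log. The value `*` removes the restriction: `hosts=*` lets the proxy user xxx send requests from any host, and `groups=*` lets it impersonate users in any group. Then restart HDFS.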
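Since the xxx to configure is just the `User:` name in the error text, this step is easy to script. The Python below is a minimal sketch, not part of Hadoop or Hive: `parse_impersonation_error` and `proxyuser_properties` are hypothetical helper names, and the regex assumes the message has exactly the `User: <proxy> is not allowed to impersonate <target>` shape seen in the error above. It prints the two `<property>` entries to paste inside `<configuration>` in core-site.xml.

```python
import re
from typing import Tuple
from xml.sax.saxutils import escape

# Assumed message shape (taken from the error above):
#   "User: <proxy> is not allowed to impersonate <target>"
# The target name ends at whitespace, "(" or the end of the text.
_IMPERSONATE_RE = re.compile(
    r"User: (\S+) is not allowed to impersonate (\S+?)(?=\(|\s|$)"
)


def parse_impersonation_error(message: str) -> Tuple[str, str]:
    """Return (proxy_user, impersonated_user) found in the error text."""
    match = _IMPERSONATE_RE.search(message)
    if match is None:
        raise ValueError("no 'not allowed to impersonate' message found")
    return match.group(1), match.group(2)


def proxyuser_properties(proxy_user: str, hosts: str = "*", groups: str = "*") -> str:
    """Render the hadoop.proxyuser.<proxy_user>.hosts/groups properties."""
    blocks = []
    for kind, value in (("hosts", hosts), ("groups", groups)):
        blocks.append(
            "<property>\n"
            f"  <name>hadoop.proxyuser.{escape(proxy_user)}.{kind}</name>\n"
            f"  <value>{escape(value)}</value>\n"
            "</property>"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    error = (
        "Failed to open new session: ... User: root is not allowed "
        "to impersonate root(state=08S01,code=0)"
    )
    proxy, target = parse_impersonation_error(error)
    print(f"proxy user: {proxy}, impersonated user: {target}")
    print(proxyuser_properties(proxy))
```

Keeping `*` as the default mirrors the fix above; passing narrower `hosts`/`groups` values is the more conservative option on a shared production cluster.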

Why this change works:

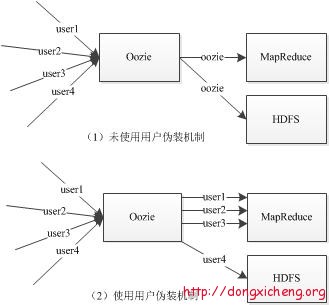

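The main reason is Hadoop's secure impersonation (proxy user) mechanism: Hadoop does not let upper-layer systems pass the real user straight down to the Hadoop layer. Instead, the real user is handed to a super proxy, which performs the operations on Hadoop on that user's behalf. This prevents arbitrary clients from operating on Hadoop at will, as shown below: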

The super proxy in the figure is "Oozie"; yours is the "xxx" configured above.

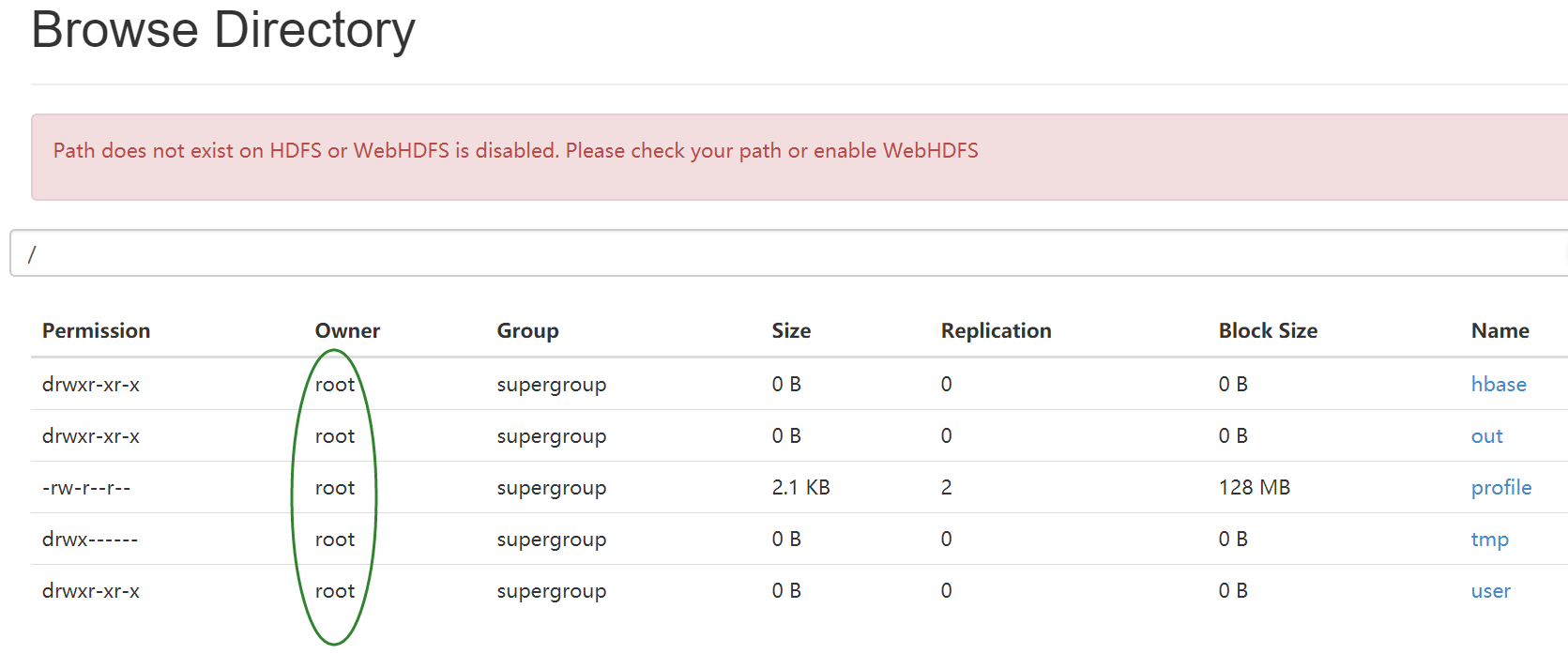
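The check can be pictured with a small model. The Python below is a simplified, hypothetical sketch of the idea, not Hadoop's actual implementation: it only understands exact host names, group names and the `*` wildcard, and ignores details such as IP ranges in `hosts`. It also illustrates why the message can read "root is not allowed to impersonate root": the stack trace goes through `CLIService.openSessionWithImpersonation`, so the request is checked as a proxy request even when the service user and the end user share a name. The host name `ha3` in the example is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set


@dataclass
class ProxyUserRule:
    hosts: Set[str]   # hosts the proxy user may connect from, or {"*"}
    groups: Set[str]  # groups whose members may be impersonated, or {"*"}


def rules_from_conf(conf: Dict[str, str]) -> Dict[str, ProxyUserRule]:
    """Collect hadoop.proxyuser.<user>.hosts/groups entries into rules."""
    prefix = "hadoop.proxyuser."
    rules: Dict[str, ProxyUserRule] = {}
    for key, value in conf.items():
        if not key.startswith(prefix):
            continue
        user, _, kind = key[len(prefix):].rpartition(".")
        if not user or kind not in ("hosts", "groups"):
            continue
        rule = rules.setdefault(user, ProxyUserRule(hosts=set(), groups=set()))
        entries = {item.strip() for item in value.split(",") if item.strip()}
        getattr(rule, kind).update(entries)
    return rules


def is_impersonation_allowed(
    rules: Dict[str, ProxyUserRule],
    real_user: str,
    remote_host: str,
    target_groups: Iterable[str],
) -> bool:
    """Simplified check: may real_user, connecting from remote_host,
    act on behalf of a user who belongs to target_groups?"""
    rule = rules.get(real_user)
    if rule is None:
        # No hadoop.proxyuser.<real_user>.* entries: the request is rejected.
        return False
    host_ok = "*" in rule.hosts or remote_host in rule.hosts
    group_ok = "*" in rule.groups or bool(rule.groups & set(target_groups))
    return host_ok and group_ok


if __name__ == "__main__":
    conf = {
        "hadoop.proxyuser.root.hosts": "*",
        "hadoop.proxyuser.root.groups": "*",
    }
    rules = rules_from_conf(conf)
    print(is_impersonation_allowed(rules, "root", "ha3", ["root"]))  # True
    print(is_impersonation_allowed({}, "root", "ha3", ["root"]))     # False
```

The second call models the state before the fix: with no `hadoop.proxyuser.root.*` entries, even root impersonating root is refused.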

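Internally, Hadoop still uses the corresponding Linux users and permissions: whichever Linux user you start Hadoop with becomes the corresponding Hadoop-internal user. As shown below, my Linux user is root, so the corresponding Hadoop user is also root: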

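If only it were that simple. After the change, the HA cluster I had built on my own virtual machines connected normally, but the production cluster still failed with the same error. I wrestled with it for the better part of a day and tried various fixes found online, such as refreshing the proxy-user (superuser group) configuration: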

```shell
bin/hdfs dfsadmin -refreshSuperUserGroupsConfiguration
bin/yarn rmadmin -refreshSuperUserGroupsConfiguration
```

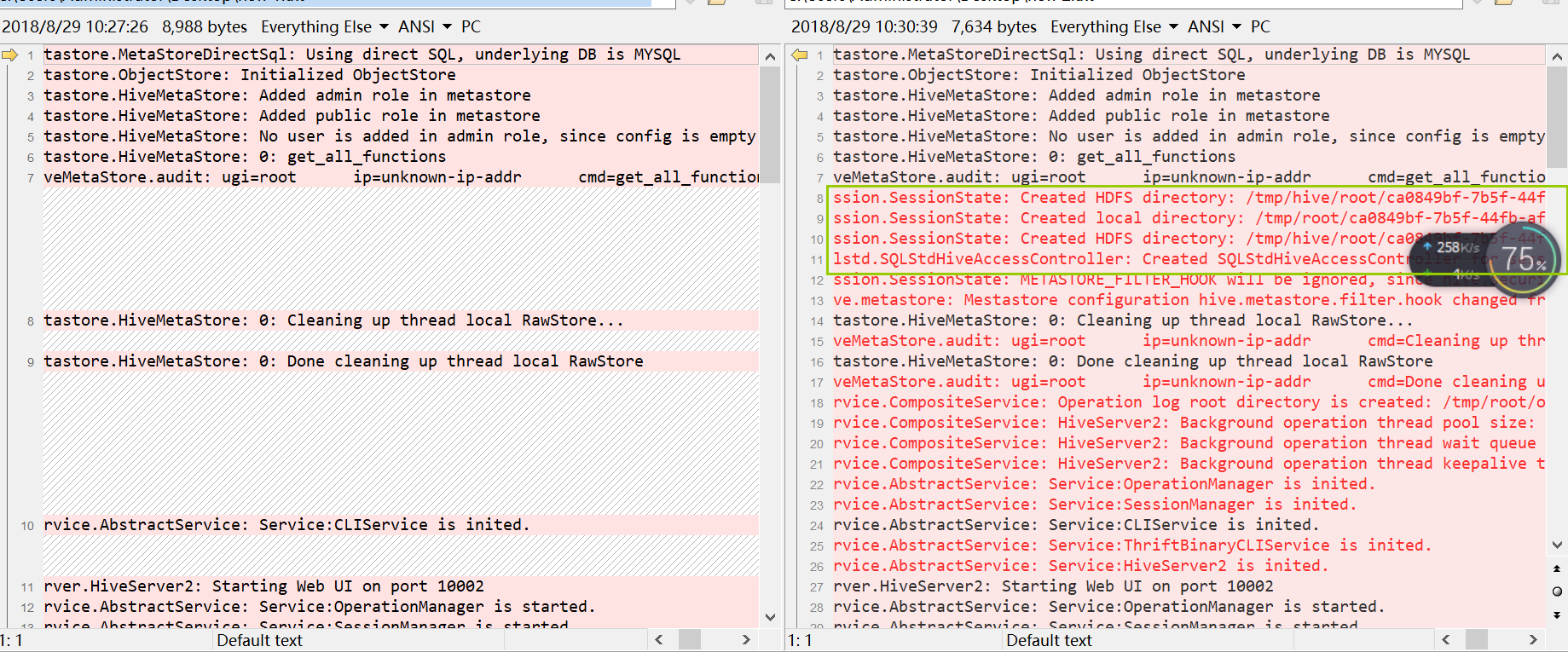

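None of them helped. Later I carefully compared the logs of the VM cluster, which connected normally, with those of the failing production cluster, and found some clues: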

VM log (starts and connects normally):

```
2018-08-29T10:22:11,661 INFO [main] metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL
2018-08-29T10:22:11,665 INFO [main] metastore.ObjectStore: Initialized ObjectStore
2018-08-29T10:22:11,813 INFO [main] metastore.HiveMetaStore: Added admin role in metastore
2018-08-29T10:22:11,814 INFO [main] metastore.HiveMetaStore: Added public role in metastore
2018-08-29T10:22:11,834 INFO [main] metastore.HiveMetaStore: No user is added in admin role, since config is empty
2018-08-29T10:22:12,032 INFO [main] metastore.HiveMetaStore: 0: get_all_functions
2018-08-29T10:22:12,036 INFO [main] HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_all_functions
2018-08-29T10:22:13,841 INFO [main] session.SessionState: Created HDFS directory: /tmp/hive/root/ca0849bf-7b5f-44fb-af7e-ebcdfe04d13f
2018-08-29T10:22:13,895 INFO [main] session.SessionState: Created local directory: /tmp/root/ca0849bf-7b5f-44fb-af7e-ebcdfe04d13f
2018-08-29T10:22:13,908 INFO [main] session.SessionState: Created HDFS directory: /tmp/hive/root/ca0849bf-7b5f-44fb-af7e-ebcdfe04d13f/_tmp_space.db
2018-08-29T10:22:13,936 INFO [main] sqlstd.SQLStdHiveAccessController: Created SQLStdHiveAccessController for session context : HiveAuthzSessionContext [sessionString=ca0849bf-7b5f-44fb-af7e-ebcdfe04d13f, clientType=HIVESERVER2]
2018-08-29T10:22:13,938 WARN [main] session.SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.
2018-08-29T10:22:13,940 INFO [main] hive.metastore: Mestastore configuration hive.metastore.filter.hook changed from org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl to org.apache.hadoop.hive.ql.security.authorization.plugin.AuthorizationMetaStoreFilterHook
2018-08-29T10:22:13,996 INFO [main] metastore.HiveMetaStore: 0: Cleaning up thread local RawStore...
2018-08-29T10:22:13,996 INFO [main] HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=Cleaning up thread local RawStore...
2018-08-29T10:22:13,996 INFO [main] metastore.HiveMetaStore: 0: Done cleaning up thread local RawStore
2018-08-29T10:22:13,997 INFO [main] HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=Done cleaning up thread local RawStore
2018-08-29T10:22:14,606 INFO [main] service.CompositeService: Operation log root directory is created: /tmp/root/operation_logs
2018-08-29T10:22:14,618 INFO [main] service.CompositeService: HiveServer2: Background operation thread pool size: 100
2018-08-29T10:22:14,624 INFO [main] service.CompositeService: HiveServer2: Background operation thread wait queue size: 100
2018-08-29T10:22:14,624 INFO [main] service.CompositeService: HiveServer2: Background operation thread keepalive time: 10 seconds
2018-08-29T10:22:14,653 INFO [main] service.AbstractService: Service:OperationManager is inited.
2018-08-29T10:22:14,653 INFO [main] service.AbstractService: Service:SessionManager is inited.
2018-08-29T10:22:14,653 INFO [main] service.AbstractService: Service:CLIService is inited.
2018-08-29T10:22:14,653 INFO [main] service.AbstractService: Service:ThriftBinaryCLIService is inited.
2018-08-29T10:22:14,653 INFO [main] service.AbstractService: Service:HiveServer2 is inited.
2018-08-29T10:22:14,654 INFO [main] server.HiveServer2: Starting Web UI on port 10002
2018-08-29T10:22:14,903 INFO [main] service.AbstractService: Service:OperationManager is started.
2018-08-29T10:22:14,903 INFO [main] service.AbstractService: Service:SessionManager is started.
2018-08-29T10:22:14,915 INFO [main] service.AbstractService: Service:CLIService is started.
2018-08-29T10:22:14,916 INFO [main] service.AbstractService: Service:ThriftBinaryCLIService is started.
2018-08-29T10:22:14,916 INFO [main] service.AbstractService: Service:HiveServer2 is started.
2018-08-29T10:22:14,918 INFO [main] server.Server: jetty-7.6.0.v20120127
2018-08-29T10:22:15,054 INFO [main] webapp.WebInfConfiguration: Extract jar:file:/root/apps/hive-2.1.1/lib/hive-service-2.1.1.jar!/hive-webapps/hiveserver2/ to /tmp/jetty-0.0.0.0-10002-hiveserver2-_-any-/webapp
2018-08-29T10:22:15,090 INFO [Thread-11] thrift.ThriftCLIService: Starting ThriftBinaryCLIService on port 10000 with 5...500 worker threads
2018-08-29T10:22:15,413 INFO [main] handler.ContextHandler: started o.e.j.w.WebAppContext{/,file:/tmp/jetty-0.0.0.0-10002-hiveserver2-_-any-/webapp/},jar:file:/root/apps/hive-2.1.1/lib/hive-service-2.1.1.jar!/hive-webapps/hiveserver2
2018-08-29T10:22:15,583 INFO [main] handler.ContextHandler: started o.e.j.s.ServletContextHandler{/static,jar:file:/root/apps/hive-2.1.1/lib/hive-service-2.1.1.jar!/hive-webapps/static}
2018-08-29T10:22:15,594 INFO [main] handler.ContextHandler: started o.e.j.s.ServletContextHandler{/logs,file:/root/apps/hive-2.1.1/logs/}
2018-08-29T10:22:15,645 INFO [main] server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:10002
2018-08-29T10:22:15,660 INFO [main] http.HttpServer: Started HttpServer[hiveserver2] on port 10002
2018-08-29T10:22:15,660 INFO [main] server.HiveServer2: Web UI has started on port 10002
2018-08-29T10:26:04,804 INFO [HiveServer2-Handler-Pool: Thread-39] thrift.ThriftCLIService: Client protocol version: HIVE_CLI_SERVICE_PROTOCOL_V9
2018-08-29T10:26:05,732 INFO [HiveServer2-Handler-Pool: Thread-39] session.SessionState: Created HDFS directory: /tmp/hive/root/f575ff1d-8cfa-4d94-beb8-bd7365a5bada
2018-08-29T10:26:05,735 INFO [HiveServer2-Handler-Pool: Thread-39] session.SessionState: Created local directory: /tmp/root/f575ff1d-8cfa-4d94-beb8-bd7365a5bada
2018-08-29T10:26:05,745 INFO [HiveServer2-Handler-Pool: Thread-39] session.SessionState: Created HDFS directory: /tmp/hive/root/f575ff1d-8cfa-4d94-beb8-bd7365a5bada/_tmp_space.db
2018-08-29T10:26:05,748 INFO [HiveServer2-Handler-Pool: Thread-39] session.HiveSessionImpl: Operation log session directory is created: /tmp/root/operation_logs/f575ff1d-8cfa-4d94-beb8-bd7365a5bada
2018-08-29T10:26:05,749 INFO [HiveServer2-Handler-Pool: Thread-39] service.CompositeService: Session opened, SessionHandle [f575ff1d-8cfa-4d94-beb8-bd7365a5bada], current sessions:1
2018-08-29T10:26:05,864 INFO [HiveServer2-Handler-Pool: Thread-39] conf.HiveConf: Using the default value passed in for log id: f575ff1d-8cfa-4d94-beb8-bd7365a5bada
2018-08-29T10:26:05,864 INFO [HiveServer2-Handler-Pool: Thread-39] session.SessionState: Updating thread name to f575ff1d-8cfa-4d94-beb8-bd7365a5bada HiveServer2-Handler-Pool: Thread-39
2018-08-29T10:26:05,865 INFO [f575ff1d-8cfa-4d94-beb8-bd7365a5bada HiveServer2-Handler-Pool: Thread-39] conf.HiveConf: Using the default value passed in for log id: f575ff1d-8cfa-4d94-beb8-bd7365a5bada
2018-08-29T10:26:05,865 INFO [HiveServer2-Handler-Pool: Thread-39] session.SessionState: Resetting thread name to HiveServer2-Handler-Pool: Thread-39
2018-08-29T10:26:05,883 INFO [HiveServer2-Handler-Pool: Thread-39] conf.HiveConf: Using the default value passed in for log id: f575ff1d-8cfa-4d94-beb8-bd7365a5bada
2018-08-29T10:26:05,883 INFO [f575ff1d-8cfa-4d94-beb8-bd7365a5bada HiveServer2-Handler-Pool: Thread-39] conf.HiveConf: Using the default value passed in for log id: f575ff1d-8cfa-4d94-beb8-bd7365a5bada
2018-08-29T10:26:05,883 INFO [HiveServer2-Handler-Pool: Thread-39] session.SessionState: Updating thread name to f575ff1d-8cfa-4d94-beb8-bd7365a5bada HiveServer2-Handler-Pool: Thread-39
2018-08-29T10:26:05,883 INFO [HiveServer2-Handler-Pool: Thread-39] session.SessionState: Resetting thread name to HiveServer2-Handler-Pool: Thread-39
```

Log from the production cluster (connection fails):

```
2018-08-29T09:40:46,117 INFO [main] metastore.MetaStoreDirectSql: Using direct SQL, underlying DB is MYSQL
2018-08-29T09:40:46,119 INFO [main] metastore.ObjectStore: Initialized ObjectStore
2018-08-29T09:40:46,215 INFO [main] metastore.HiveMetaStore: Added admin role in metastore
2018-08-29T09:40:46,217 INFO [main] metastore.HiveMetaStore: Added public role in metastore
2018-08-29T09:40:46,231 INFO [main] metastore.HiveMetaStore: No user is added in admin role, since config is empty
2018-08-29T09:40:46,368 INFO [main] metastore.HiveMetaStore: 0: get_all_functions
2018-08-29T09:40:46,371 INFO [main] HiveMetaStore.audit: ugi=root ip=unknown-ip-addr cmd=get_all_functions
2018-08-29T09:40:47,422 INFO [main] metastore.HiveMetaStore: 0: Cleaning up thread local RawStore...
2018-08-29T09:40:47,423 INFO [main] metastore.HiveMetaStore: 0: Done cleaning up thread local RawStore
2018-08-29T09:40:47,825 INFO [main] service.AbstractService: Service:CLIService is inited.
2018-08-29T09:40:47,826 INFO [main] server.HiveServer2: Starting Web UI on port 10002
2018-08-29T09:40:47,906 INFO [main] service.AbstractService: Service:OperationManager is started.
2018-08-29T09:40:47,906 INFO [main] service.AbstractService: Service:SessionManager is started.
2018-08-29T09:40:47,907 INFO [main] service.AbstractService: Service:CLIService is started.
2018-08-29T09:40:47,907 INFO [main] service.AbstractService: Service:ThriftBinaryCLIService is started.
2018-08-29T09:40:47,908 INFO [main] service.AbstractService: Service:HiveServer2 is started.
2018-08-29T09:40:47,910 INFO [main] server.Server: jetty-7.6.0.v20120127
2018-08-29T09:40:48,102 INFO [main] server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:10002
2018-08-29T09:40:48,105 INFO [main] server.HiveServer2: Web UI has started on port 10002
2018-08-29T09:40:48,105 INFO [main] http.HttpServer: Started HttpServer[hiveserver2] on port 10002
2018-08-29T09:41:03,343 WARN [HiveServer2-Handler-Pool: Thread-43] service.CompositeService: Failed to open session
	at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:63)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1656)
	at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:327)
	at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:279)
	at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:189)
	at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:423)
	at org.apache.hive.service.cli.thrift.ThriftCLIService.OpenSession(ThriftCLIService.java:312)
	at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1377)
	at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1362)
	at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)
	at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)
	at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56)
	at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:591)
	at org.apache.hadoop.ipc.Client.call(Client.java:1469)
	at org.apache.hadoop.ipc.Client.call(Client.java:1400)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)
	at com.sun.proxy.$Proxy30.getFileInfo(Unknown Source)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)
	at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)
	at com.sun.proxy.$Proxy31.getFileInfo(Unknown Source)
	at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1977)
	at org.apache.hadoop.hdfs.DistributedFileSystem$18.doCall(DistributedFileSystem.java:1118)
	at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1114)
	at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1400)
	at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:689)
	at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:635)
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:563)
	... 28 more
2018-08-29T09:41:03,350 WARN [HiveServer2-Handler-Pool: Thread-43] thrift.ThriftCLIService: Error opening session:
	at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:336)
	at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:279)
	at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:189)
	at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:423)
	at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1362)
	at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)
	at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)
	at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root
	at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:89)
	at org.apache.hive.service.cli.session.HiveSessionProxy.access$000(HiveSessionProxy.java:36)
	at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:63)
	at java.security.AccessController.doPrivileged(Native Method)
	at javax.security.auth.Subject.doAs(Subject.java:422)
	at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1656)
	at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:59)
	at com.sun.proxy.$Proxy37.open(Unknown Source)
	at org.apache.hive.service.cli.session.SessionManager.createSession(SessionManager.java:327)
	... 13 more
Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:591)
	at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:526)
	at org.apache.hive.service.cli.session.HiveSessionImpl.open(HiveSessionImpl.java:168)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:498)
	at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:78)
	... 21 more
Caused by: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: root is not allowed to impersonate root
	at org.apache.hadoop.ipc.Client.call(Client.java:1469)
	at org.apache.hadoop.ipc.Client.call(Client.java:1400)
	at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:232)
	at com.sun.proxy.$Proxy30.getFileInfo(Unknown Source)
	at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:752)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
```
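Comparing logs like these by eye is tedious. The Python sketch below splits HiveServer2 log text into entries at each timestamp (format assumed from the logs above; splitting on a lookahead also handles logs whose lines have been run together, and needs Python 3.7+) and counts entries that create HDFS session directories versus entries that report an impersonation failure. `split_entries` and `summarize` are hypothetical helpers, and the two marker strings are simply the ones visible in these logs.

```python
import re
from typing import Dict, List

# Assumption: every entry starts with a timestamp like "2018-08-29T10:22:13,841 ".
# The lookahead keeps each timestamp attached to its own entry; stack-trace
# lines without a timestamp stay inside the entry that precedes them.
_ENTRY_START = re.compile(r"(?=\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2},\d{3} )")


def split_entries(log_text: str) -> List[str]:
    """Split raw HiveServer2 log text into one string per log entry."""
    return [part.strip() for part in _ENTRY_START.split(log_text) if part.strip()]


def summarize(log_text: str) -> Dict[str, int]:
    """Count entries containing the markers that differ between the two logs."""
    entries = split_entries(log_text)
    return {
        "hdfs_dirs_created": sum("Created HDFS directory" in e for e in entries),
        "impersonation_errors": sum(
            "is not allowed to impersonate" in e for e in entries
        ),
    }
```

For example, `summarize(open("hiveserver2.log").read())` (file name hypothetical) on the VM log would report non-zero `hdfs_dirs_created`, while the production log shows none and at least one impersonation error.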

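Comparing the two, the working log contains operations on HDFS directories (the `Created HDFS directory` entries under `/tmp/hive/root`), while the failing one has none, as shown below: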

So I guessed it was a user-permission problem (I didn't understand the internal mechanics, so guessing was all I could do...).

So I also changed the permissions of the /tmp directory in HDFS:

```shell
hadoop fs -chmod -R 777 /tmp
```

It still didn't work...

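By now I was completely worn out, but I had to keep going, otherwise my colleague couldn't continue her work. After carefully comparing the state of the two clusters (VM and production), I suddenly noticed that on the production node running hiveserver2, the NameNode was in standby state. (An HA cluster has two NameNodes, one active and one standby, and a standby NameNode does not serve client operations on HDFS. PS: not even reads, since only one of the two NameNodes is in charge of HDFS at any time.) On the VM cluster, by contrast, the NameNode on the hiveserver2 node was active. With hope restored, I killed the active NameNode in production, so the standby NameNode switched to active and could serve HDFS operations. After that, it finally worked and the connection succeeded.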
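Before killing anything, it helps to confirm which NameNode is active. `hdfs haadmin -getServiceState <serviceId>` prints `active` or `standby` for one NameNode; the Python below is a minimal sketch that wraps it and picks the active ID. The service IDs such as `nn1`/`nn2` are hypothetical placeholders for the values listed under `dfs.ha.namenodes.<nameservice>` in hdfs-site.xml, and the `hdfs` command is assumed to be on `PATH`. Where automatic failover is configured, a coordinated `hdfs haadmin -failover` may be a gentler alternative to killing the NameNode process; check the HA documentation for your Hadoop version first.

```python
import subprocess
from typing import Dict, Iterable, Optional


def get_service_state(nn_id: str) -> str:
    """Return the HA state ("active"/"standby") reported for one NameNode ID."""
    result = subprocess.run(
        ["hdfs", "haadmin", "-getServiceState", nn_id],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.strip()


def query_states(nn_ids: Iterable[str]) -> Dict[str, str]:
    """Query every NameNode service ID and collect {service_id: state}."""
    return {nn: get_service_state(nn) for nn in nn_ids}


def find_active(states: Dict[str, str]) -> Optional[str]:
    """Pick the single active NameNode ID, or None if none is active."""
    active = [nn for nn, state in states.items() if state.strip().lower() == "active"]
    if len(active) > 1:
        # Two active NameNodes would indicate split brain; refuse to guess.
        raise RuntimeError(f"more than one active NameNode: {active}")
    return active[0] if active else None
```

For example, `find_active(query_states(["nn1", "nn2"]))` returns the ID of the active NameNode, which you can then compare with the host running hiveserver2.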

Summary:

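In fact, the whole thing should work once you configure the proxy user correctly (the first step) and make the NameNode on the node running hiveserver2 (called ha1 here) active (the last step). You can then operate on HDFS as ha1's root user in the Hadoop environment; if insufficient-permission errors still show up, you can adjust the access permissions of the relevant files. When the other NameNode is active and ha1 is standby, the root user on ha1 cannot access HDFS, which is why the startup log never showed the HDFS session directories being created.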
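A complementary check, offered as an assumption rather than as the author's diagnosis: in the failing log, the AuthorizationException arrives as a RemoteException from a NameNode RPC (`getFileInfo`), so it was raised by whichever NameNode answered that call, and each NameNode reads its proxy-user settings from its own core-site.xml. If only some NameNode hosts received the `hadoop.proxyuser.root.*` entries, the symptom could depend on which NameNode is active. The Python below is a minimal sketch (`read_properties` and `missing_proxyuser_keys` are hypothetical helpers) that reports which of the two keys are missing or empty in a given core-site.xml.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def read_properties(xml_text: str) -> Dict[str, str]:
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props: Dict[str, str] = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def missing_proxyuser_keys(xml_text: str, user: str) -> List[str]:
    """List the hadoop.proxyuser.<user>.hosts/groups keys that are absent or empty."""
    props = read_properties(xml_text)
    wanted = [f"hadoop.proxyuser.{user}.hosts", f"hadoop.proxyuser.{user}.groups"]
    return [key for key in wanted if not props.get(key)]
```

For example, run `missing_proxyuser_keys(open(path).read(), "root")` on a copy of core-site.xml from each NameNode host (paths hypothetical); a non-empty result points at a NameNode that may still reject the impersonation when it becomes active.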

Some other useful references:

https://blog.csdn.net/sunnyyoona/article/details/51648871

http://debugo.com/beeline-invalid-url/

https://blog.csdn.net/yunyexiangfeng/article/details/60867563
