机器学习-决策树

此文章是《Machine Learning in Action》中决策树章节的学习笔记,

决策树可以使用不熟悉的数据集合,并从中提取出一系列规则,在这些机器根据数据集创建规则时,就是机器学习的过程。

优点:计算复杂度不高,输出结果易于理解,对中间值的缺失不敏感,可以处理不相关特征数据缺点:可能会产生过度匹配问题适用数据类型:数值型和标称型

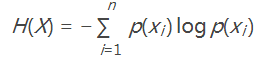

1 香农熵

香农熵百度百科,信息量的度量就等于不确定性的多少。变量的不确定性越大,熵也就越大,把它搞清楚所需要的信息量也就越大。

# -*- coding: utf-8 -*-''' Created on 2018年02月21日 @author: dzm '''from math import logdef createDataSet():''' 数据集, 第一列 不浮出水面是否可以生存 第二列 是否有脚蹼 第三列 属于鱼类 :return: '''dataSet = [[1, 1, 'yes'],[1, 1, 'yes'],[1, 0, 'no'],[0, 1, 'no'],[0, 1, 'no']]labels = ['no surfacing','flippers']#change to discrete valuesreturn dataSet, labelsdef calcShannonEnt(dataSet):''' 计算香农熵 :param dataSet: :return: '''numEntries = len(dataSet)labelCounts = {}for featVec in dataSet: #the the number of unique elements and their occurancecurrentLabel = featVec[-1]if currentLabel not in labelCounts.keys(): labelCounts[currentLabel] = 0labelCounts[currentLabel] += 1shannonEnt = 0.0for key in labelCounts:# 计算分类的概率prob = float(labelCounts[key])/numEntries# 计算香农熵shannonEnt -= prob * log(prob,2) #log base 2return shannonEntif __name__ == '__main__':myDat, labels = createDataSet()print calcShannonEnt(myDat)

2 划分数据集

def splitDataSet(dataSet, axis, value):''' 划分数据集 :param dataSet: 待划分的数据集 :param axis: 划分数据集的特征 :param value: 需要返回的特征值 :return: '''retDataSet = []for featVec in dataSet:if featVec[axis] == value:reducedFeatVec = featVec[:axis] #chop out axis used for splittingreducedFeatVec.extend(featVec[axis+1:])retDataSet.append(reducedFeatVec)return retDataSetdef chooseBestFeatureToSplit(dataSet):''' 选择最好的数据集划分方式 :param dataSet: :return: '''numFeatures = len(dataSet[0]) - 1 #the last column is used for the labels# 计算整个数据集的原始香农熵baseEntropy = calcShannonEnt(dataSet)bestInfoGain = 0.0; bestFeature = -1for i in range(numFeatures): #iterate over all the featuresfeatList = [example[i] for example in dataSet]#create a list of all the examples of this featureuniqueVals = set(featList) #get a set of unique valuesnewEntropy = 0.0for value in uniqueVals:subDataSet = splitDataSet(dataSet, i, value)prob = len(subDataSet)/float(len(dataSet))newEntropy += prob * calcShannonEnt(subDataSet)infoGain = baseEntropy - newEntropy #calculate the info gain; ie reduction in entropyif (infoGain > bestInfoGain): #compare this to the best gain so far# 讲每个特征对应的熵进行比较,选择熵值最小的,作为特征划分的索引值bestInfoGain = infoGain #if better than current best, set to bestbestFeature = ireturn bestFeature #returns an integer

3 决策树

def majorityCnt(classList):''' :param classList: :return: '''classCount={}for vote in classList:if vote not in classCount.keys(): classCount[vote] = 0classCount[vote] += 1sortedClassCount = sorted(classCount.iteritems(), key=operator.itemgetter(1), reverse=True)return sortedClassCount[0][0]def createTree(dataSet,labels):''' 构建决策树 :param dataSet: 数据集 :param labels: 标签列表,包含了数据集中所有特征的标签 :return: '''classList = [example[-1] for example in dataSet]if classList.count(classList[0]) == len(classList):return classList[0]#stop splitting when all of the classes are equalif len(dataSet[0]) == 1: #stop splitting when there are no more features in dataSetreturn majorityCnt(classList)bestFeat = chooseBestFeatureToSplit(dataSet)bestFeatLabel = labels[bestFeat]# 存储数据的信息myTree = {bestFeatLabel:{}}del(labels[bestFeat])featValues = [example[bestFeat] for example in dataSet]uniqueVals = set(featValues)for value in uniqueVals:subLabels = labels[:] #copy all of labels, so trees don't mess up existing labelsmyTree[bestFeatLabel][value] = createTree(splitDataSet(dataSet, bestFeat, value),subLabels)return myTree

还没有评论,来说两句吧...