The Louvain method for community detection


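The Louvain method is an algorithm for detecting non-overlapping communities, and it runs fast.

Paper: http://arxiv.org/abs/0803.0476

The paper authors' introduction to the algorithm:

http://perso.uclouvain.be/vincent.blondel/research/louvain.html

Code download (only C++ and MATLAB versions are available; there is no Java version):

https://sites.google.com/site/findcommunities/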

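After finding the C++ version I wanted to port it to Java, but its data structures turned out to be fairly complicated and I ran out of patience. I then tried writing a Java version from scratch, but my implementation was slower, and its modularity values fell somewhat short of those reported in the paper.

So I searched Google and found a Java implementation that someone else had already written:

http://wiki.cns.iu.edu/display/CISHELL/Louvain+Community+Detection

Besides the Louvain method, the authors also implemented the SLM (smart local moving) algorithm and packaged these implementations into a jar file. The source code can be downloaded from:

http://www.ludowaltman.nl/slm/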

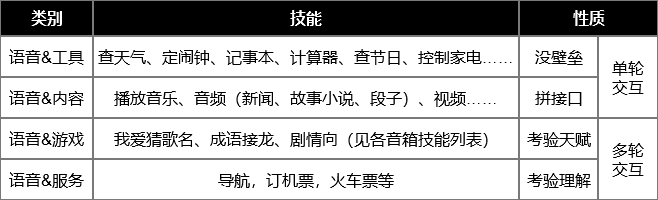

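The overall idea of the algorithm is:

1. Initially, every node forms its own small cluster.

2. By optimizing the modularity function, move each node into its "best" cluster. This step stops only when no node changes its cluster any more.

3. Reduce the network: collapse all nodes of a cluster into a single node, and check whether the reduced network can still be improved. If it can, repeat step 2 on the reduced network.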
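Step 2 depends on evaluating modularity for a given cluster assignment. As a minimal sketch (not taken from the implementations discussed in this post; the class name `ModularityDemo`, the method `modularity`, and the toy graph are assumptions made for illustration), the following computes the standard modularity of an unweighted, undirected graph: for each cluster, the fraction of edges inside it minus the squared fraction of edge endpoints it owns.

```java
final class ModularityDemo {
    /**
     * Modularity of an unweighted, undirected graph.
     * edges[k] = {u, v}; cluster[i] is the cluster id of node i (ids start at 0).
     */
    static double modularity(int[][] edges, int[] cluster) {
        double m = edges.length;                       // number of edges
        int nClusters = 0;
        for (int c : cluster) nClusters = Math.max(nClusters, c + 1);

        double[] internal = new double[nClusters];     // edges inside each cluster
        double[] degree = new double[nClusters];       // summed node degrees per cluster
        for (int[] e : edges) {
            int cu = cluster[e[0]];
            int cv = cluster[e[1]];
            degree[cu] += 1;
            degree[cv] += 1;
            if (cu == cv) internal[cu] += 1;
        }

        double q = 0;
        for (int c = 0; c < nClusters; c++) {
            double share = degree[c] / (2 * m);
            q += internal[c] / m - share * share;
        }
        return q;
    }

    public static void main(String[] args) {
        // Two triangles (0,1,2) and (3,4,5) joined by the edge 2-3.
        int[][] edges = {{0, 1}, {0, 2}, {1, 2}, {3, 4}, {3, 5}, {4, 5}, {2, 3}};
        System.out.println(modularity(edges, new int[] {0, 1, 2, 3, 4, 5})); // singletons
        System.out.println(modularity(edges, new int[] {0, 0, 0, 1, 1, 1})); // two triangles
    }
}
```

Grouping the two triangles gives a clearly higher value than the singleton start, which is the kind of improvement the local moving step looks for.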

After I trimmed that code down, the Louvain algorithm code is as follows:

ModularityOptimizer.java

    /**
     * ModularityOptimizer
     *
     * @author Ludo Waltman
     * @author Nees Jan van Eck
     * @version 1.2.0, 05/14/14
     */

    import java.io.BufferedReader;
    import java.io.BufferedWriter;
    import java.io.FileReader;
    import java.io.FileWriter;
    import java.io.IOException;
    import java.util.Arrays;
    import java.util.Random;

    public class ModularityOptimizer
    {
        public static void main(String[] args) throws IOException
        {
            boolean update;
            double modularity, maxModularity, resolution, resolution2;
            int algorithm, i, j, modularityFunction, nClusters, nIterations, nRandomStarts;
            int[] cluster;
            long beginTime, endTime;
            Network network;
            Random random;
            String inputFileName, outputFileName;

            inputFileName = "fb.txt";
            outputFileName = "communities.txt";
            modularityFunction = 1;
            resolution = 1.0;
            algorithm = 1;
            nRandomStarts = 10;
            nIterations = 3;

            System.out.println("Modularity Optimizer version 1.2.0 by Ludo Waltman and Nees Jan van Eck");
            System.out.println();

            System.out.println("Reading input file...");
            System.out.println();

            network = readInputFile(inputFileName, modularityFunction);

            System.out.format("Number of nodes: %d%n", network.getNNodes());
            System.out.format("Number of edges: %d%n", network.getNEdges() / 2);
            System.out.println();

            System.out.println("Running " + ((algorithm == 1) ? "Louvain algorithm" : ((algorithm == 2) ? "Louvain algorithm with multilevel refinement" : "smart local moving algorithm")) + "...");
            System.out.println();

            resolution2 = ((modularityFunction == 1) ? (resolution / network.getTotalEdgeWeight()) : resolution);

            beginTime = System.currentTimeMillis();
            cluster = null;
            nClusters = -1;
            maxModularity = Double.NEGATIVE_INFINITY;
            random = new Random(100);
            for (i = 0; i < nRandomStarts; i++)
            {
                if (nRandomStarts > 1)
                    System.out.format("Random start: %d%n", i + 1);

                network.initSingletonClusters(); // initialize the network: one cluster per node

                j = 0;
                update = true;
                do
                {
                    if (nIterations > 1)
                        System.out.format("Iteration: %d%n", j + 1);

                    if (algorithm == 1)
                        update = network.runLouvainAlgorithm(resolution2, random);
                    j++;

                    modularity = network.calcQualityFunction(resolution2);

                    if (nIterations > 1)
                        System.out.format("Modularity: %.4f%n", modularity);
                }
                while ((j < nIterations) && update);

                if (modularity > maxModularity)
                {
                    cluster = network.getClusters();
                    nClusters = network.getNClusters();
                    maxModularity = modularity;
                }

                if (nRandomStarts > 1)
                {
                    if (nIterations == 1)
                        System.out.format("Modularity: %.4f%n", modularity);
                    System.out.println();
                }
            }
            endTime = System.currentTimeMillis();

            if (nRandomStarts == 1)
            {
                if (nIterations > 1)
                    System.out.println();
                System.out.format("Modularity: %.4f%n", maxModularity);
            }
            else
                System.out.format("Maximum modularity in %d random starts: %.4f%n", nRandomStarts, maxModularity);
            System.out.format("Number of communities: %d%n", nClusters);
            System.out.format("Elapsed time: %d seconds%n", Math.round((endTime - beginTime) / 1000.0));
            System.out.println();

            System.out.println("Writing output file...");
            System.out.println();

            writeOutputFile(outputFileName, cluster);
        }

        private static Network readInputFile(String fileName, int modularityFunction) throws IOException
        {
            BufferedReader bufferedReader;
            double[] edgeWeight1, edgeWeight2, nodeWeight;
            int i, j, nEdges, nLines, nNodes;
            int[] firstNeighborIndex, neighbor, nNeighbors, node1, node2;
            Network network;
            String[] splittedLine;

            bufferedReader = new BufferedReader(new FileReader(fileName));

            nLines = 0;
            while (bufferedReader.readLine() != null)
                nLines++;

            bufferedReader.close();

            bufferedReader = new BufferedReader(new FileReader(fileName));

            node1 = new int[nLines];
            node2 = new int[nLines];
            edgeWeight1 = new double[nLines];
            i = -1;
            for (j = 0; j < nLines; j++)
            {
                splittedLine = bufferedReader.readLine().split("\t");
                node1[j] = Integer.parseInt(splittedLine[0]);
                if (node1[j] > i)
                    i = node1[j];
                node2[j] = Integer.parseInt(splittedLine[1]);
                if (node2[j] > i)
                    i = node2[j];
                edgeWeight1[j] = (splittedLine.length > 2) ? Double.parseDouble(splittedLine[2]) : 1;
            }
            nNodes = i + 1;

            bufferedReader.close();

            nNeighbors = new int[nNodes];
            for (i = 0; i < nLines; i++)
                if (node1[i] < node2[i])
                {
                    nNeighbors[node1[i]]++;
                    nNeighbors[node2[i]]++;
                }

            firstNeighborIndex = new int[nNodes + 1];
            nEdges = 0;
            for (i = 0; i < nNodes; i++)
            {
                firstNeighborIndex[i] = nEdges;
                nEdges += nNeighbors[i];
            }
            firstNeighborIndex[nNodes] = nEdges;

            neighbor = new int[nEdges];
            edgeWeight2 = new double[nEdges];
            Arrays.fill(nNeighbors, 0);
            for (i = 0; i < nLines; i++)
                if (node1[i] < node2[i])
                {
                    j = firstNeighborIndex[node1[i]] + nNeighbors[node1[i]];
                    neighbor[j] = node2[i];
                    edgeWeight2[j] = edgeWeight1[i];
                    nNeighbors[node1[i]]++;
                    j = firstNeighborIndex[node2[i]] + nNeighbors[node2[i]];
                    neighbor[j] = node1[i];
                    edgeWeight2[j] = edgeWeight1[i];
                    nNeighbors[node2[i]]++;
                }

            {
                nodeWeight = new double[nNodes];
                for (i = 0; i < nEdges; i++)
                    nodeWeight[neighbor[i]] += edgeWeight2[i];
                network = new Network(nNodes, firstNeighborIndex, neighbor, edgeWeight2, nodeWeight);
            }

            return network;
        }

        private static void writeOutputFile(String fileName, int[] cluster) throws IOException
        {
            BufferedWriter bufferedWriter;
            int i;

            bufferedWriter = new BufferedWriter(new FileWriter(fileName));

            for (i = 0; i < cluster.length; i++)
            {
                bufferedWriter.write(Integer.toString(cluster[i]));
                bufferedWriter.newLine();
            }

            bufferedWriter.close();
        }
    }

Network.java

    /**
     * Network
     *
     * @author Ludo Waltman
     * @author Nees Jan van Eck
     * @version 1.2.0, 05/14/14
     */

    import java.io.Serializable;
    import java.util.Random;

    public class Network implements Cloneable, Serializable
    {
        private static final long serialVersionUID = 1;

        private int nNodes;
        private int[] firstNeighborIndex;
        private int[] neighbor;
        private double[] edgeWeight;
        private double[] nodeWeight;
        private int nClusters;
        private int[] cluster;

        private double[] clusterWeight;
        private int[] nNodesPerCluster;
        private int[][] nodePerCluster;
        private boolean clusteringStatsAvailable;

        public Network(int nNodes, int[] firstNeighborIndex, int[] neighbor, double[] edgeWeight, double[] nodeWeight)
        {
            this(nNodes, firstNeighborIndex, neighbor, edgeWeight, nodeWeight, null);
        }

        public Network(int nNodes, int[] firstNeighborIndex, int[] neighbor, double[] edgeWeight, double[] nodeWeight, int[] cluster)
        {
            int i, nEdges;

            this.nNodes = nNodes;

            this.firstNeighborIndex = firstNeighborIndex;
            this.neighbor = neighbor;

            if (edgeWeight == null)
            {
                nEdges = neighbor.length;
                this.edgeWeight = new double[nEdges];
                for (i = 0; i < nEdges; i++)
                    this.edgeWeight[i] = 1;
            }
            else
                this.edgeWeight = edgeWeight;

            if (nodeWeight == null)
            {
                this.nodeWeight = new double[nNodes];
                for (i = 0; i < nNodes; i++)
                    this.nodeWeight[i] = 1;
            }
            else
                this.nodeWeight = nodeWeight;
        }

        public int getNNodes()
        {
            return nNodes;
        }

        public int getNEdges()
        {
            return neighbor.length;
        }

        public double getTotalEdgeWeight()
        {
            double totalEdgeWeight;
            int i;

            totalEdgeWeight = 0;
            for (i = 0; i < neighbor.length; i++)
                totalEdgeWeight += edgeWeight[i];

            return totalEdgeWeight;
        }

        public double[] getEdgeWeights()
        {
            return edgeWeight;
        }

        public double[] getNodeWeights()
        {
            return nodeWeight;
        }

        public int getNClusters()
        {
            return nClusters;
        }

        public int[] getClusters()
        {
            return cluster;
        }

        public void initSingletonClusters()
        {
            int i;

            nClusters = nNodes;
            cluster = new int[nNodes];
            for (i = 0; i < nNodes; i++)
                cluster[i] = i;

            deleteClusteringStats();
        }

        public void mergeClusters(int[] newCluster)
        {
            int i, j, k;

            if (cluster == null)
                return;

            i = 0;
            for (j = 0; j < nNodes; j++)
            {
                k = newCluster[cluster[j]];
                if (k > i)
                    i = k;
                cluster[j] = k;
            }
            nClusters = i + 1;

            deleteClusteringStats();
        }

        public Network getReducedNetwork()
        {
            double[] reducedNetworkEdgeWeight1, reducedNetworkEdgeWeight2;
            int i, j, k, l, m, reducedNetworkNEdges1, reducedNetworkNEdges2;
            int[] reducedNetworkNeighbor1, reducedNetworkNeighbor2;
            Network reducedNetwork;

            if (cluster == null)
                return null;

            if (!clusteringStatsAvailable)
                calcClusteringStats();

            reducedNetwork = new Network();

            reducedNetwork.nNodes = nClusters;
            reducedNetwork.firstNeighborIndex = new int[nClusters + 1];
            reducedNetwork.nodeWeight = new double[nClusters];

            reducedNetworkNeighbor1 = new int[neighbor.length];
            reducedNetworkEdgeWeight1 = new double[edgeWeight.length];

            reducedNetworkNeighbor2 = new int[nClusters - 1];
            reducedNetworkEdgeWeight2 = new double[nClusters];

            reducedNetworkNEdges1 = 0;
            for (i = 0; i < nClusters; i++)
            {
                reducedNetworkNEdges2 = 0;
                for (j = 0; j < nodePerCluster[i].length; j++)
                {
                    k = nodePerCluster[i][j]; // k is the id of the j-th node in cluster i

                    for (l = firstNeighborIndex[k]; l < firstNeighborIndex[k + 1]; l++)
                    {
                        m = cluster[neighbor[l]]; // m is the cluster id of k's neighbor stored at position l
                        if (m != i)
                        {
                            if (reducedNetworkEdgeWeight2[m] == 0)
                            {
                                reducedNetworkNeighbor2[reducedNetworkNEdges2] = m;
                                reducedNetworkNEdges2++;
                            }
                            reducedNetworkEdgeWeight2[m] += edgeWeight[l];
                        }
                    }

                    reducedNetwork.nodeWeight[i] += nodeWeight[k];
                }

                for (j = 0; j < reducedNetworkNEdges2; j++)
                {
                    reducedNetworkNeighbor1[reducedNetworkNEdges1 + j] = reducedNetworkNeighbor2[j];
                    reducedNetworkEdgeWeight1[reducedNetworkNEdges1 + j] = reducedNetworkEdgeWeight2[reducedNetworkNeighbor2[j]];
                    reducedNetworkEdgeWeight2[reducedNetworkNeighbor2[j]] = 0;
                }
                reducedNetworkNEdges1 += reducedNetworkNEdges2;

                reducedNetwork.firstNeighborIndex[i + 1] = reducedNetworkNEdges1;
            }

            reducedNetwork.neighbor = new int[reducedNetworkNEdges1];
            reducedNetwork.edgeWeight = new double[reducedNetworkNEdges1];
            System.arraycopy(reducedNetworkNeighbor1, 0, reducedNetwork.neighbor, 0, reducedNetworkNEdges1);
            System.arraycopy(reducedNetworkEdgeWeight1, 0, reducedNetwork.edgeWeight, 0, reducedNetworkNEdges1);

            return reducedNetwork;
        }

        public double calcQualityFunction(double resolution)
        {
            double qualityFunction, totalEdgeWeight;
            int i, j, k;

            if (cluster == null)
                return Double.NaN;

            if (!clusteringStatsAvailable)
                calcClusteringStats();

            qualityFunction = 0;

            totalEdgeWeight = 0;
            for (i = 0; i < nNodes; i++)
            {
                j = cluster[i];
                for (k = firstNeighborIndex[i]; k < firstNeighborIndex[i + 1]; k++)
                {
                    if (cluster[neighbor[k]] == j)
                        qualityFunction += edgeWeight[k];
                    totalEdgeWeight += edgeWeight[k];
                }
            }

            for (i = 0; i < nClusters; i++)
                qualityFunction -= clusterWeight[i] * clusterWeight[i] * resolution;

            qualityFunction /= totalEdgeWeight;

            return qualityFunction;
        }

        public boolean runLocalMovingAlgorithm(double resolution)
        {
            return runLocalMovingAlgorithm(resolution, new Random());
        }

        public boolean runLocalMovingAlgorithm(double resolution, Random random)
        {
            boolean update;
            double maxQualityFunction, qualityFunction;
            double[] clusterWeight, edgeWeightPerCluster;
            int bestCluster, i, j, k, l, nNeighboringClusters, nStableNodes, nUnusedClusters;
            int[] neighboringCluster, newCluster, nNodesPerCluster, nodeOrder, unusedCluster;

            if ((cluster == null) || (nNodes == 1))
                return false;

            update = false;

            clusterWeight = new double[nNodes];
            nNodesPerCluster = new int[nNodes];
            for (i = 0; i < nNodes; i++)
            {
                clusterWeight[cluster[i]] += nodeWeight[i];
                nNodesPerCluster[cluster[i]]++;
            }

            nUnusedClusters = 0;
            unusedCluster = new int[nNodes];
            for (i = 0; i < nNodes; i++)
                if (nNodesPerCluster[i] == 0)
                {
                    unusedCluster[nUnusedClusters] = i;
                    nUnusedClusters++;
                }

            nodeOrder = new int[nNodes];
            for (i = 0; i < nNodes; i++)
                nodeOrder[i] = i;
            for (i = 0; i < nNodes; i++)
            {
                j = random.nextInt(nNodes);
                k = nodeOrder[i];
                nodeOrder[i] = nodeOrder[j];
                nodeOrder[j] = k;
            }

            edgeWeightPerCluster = new double[nNodes];
            neighboringCluster = new int[nNodes - 1];

            nStableNodes = 0;
            i = 0;
            do
            {
                j = nodeOrder[i];

                nNeighboringClusters = 0;
                for (k = firstNeighborIndex[j]; k < firstNeighborIndex[j + 1]; k++)
                {
                    l = cluster[neighbor[k]];
                    if (edgeWeightPerCluster[l] == 0)
                    {
                        neighboringCluster[nNeighboringClusters] = l;
                        nNeighboringClusters++;
                    }
                    edgeWeightPerCluster[l] += edgeWeight[k];
                }

                clusterWeight[cluster[j]] -= nodeWeight[j];
                nNodesPerCluster[cluster[j]]--;
                if (nNodesPerCluster[cluster[j]] == 0)
                {
                    unusedCluster[nUnusedClusters] = cluster[j];
                    nUnusedClusters++;
                }

                bestCluster = -1;
                maxQualityFunction = 0;
                for (k = 0; k < nNeighboringClusters; k++)
                {
                    l = neighboringCluster[k];
                    qualityFunction = edgeWeightPerCluster[l] - nodeWeight[j] * clusterWeight[l] * resolution;
                    if ((qualityFunction > maxQualityFunction) || ((qualityFunction == maxQualityFunction) && (l < bestCluster)))
                    {
                        bestCluster = l;
                        maxQualityFunction = qualityFunction;
                    }
                    edgeWeightPerCluster[l] = 0;
                }
                if (maxQualityFunction == 0)
                {
                    bestCluster = unusedCluster[nUnusedClusters - 1];
                    nUnusedClusters--;
                }

                clusterWeight[bestCluster] += nodeWeight[j];
                nNodesPerCluster[bestCluster]++;
                if (bestCluster == cluster[j])
                    nStableNodes++;
                else
                {
                    cluster[j] = bestCluster;
                    nStableNodes = 1;
                    update = true;
                }

                i = (i < nNodes - 1) ? (i + 1) : 0;
            }
            while (nStableNodes < nNodes); // the optimization ends only once every node is stable

            newCluster = new int[nNodes];
            nClusters = 0;
            for (i = 0; i < nNodes; i++)
                if (nNodesPerCluster[i] > 0)
                {
                    newCluster[i] = nClusters;
                    nClusters++;
                }
            for (i = 0; i < nNodes; i++)
                cluster[i] = newCluster[cluster[i]];

            deleteClusteringStats();

            return update;
        }

        public boolean runLouvainAlgorithm(double resolution)
        {
            return runLouvainAlgorithm(resolution, new Random());
        }

        public boolean runLouvainAlgorithm(double resolution, Random random)
        {
            boolean update, update2;
            Network reducedNetwork;

            if ((cluster == null) || (nNodes == 1))
                return false;

            update = runLocalMovingAlgorithm(resolution, random);

            if (nClusters < nNodes)
            {
                reducedNetwork = getReducedNetwork();
                reducedNetwork.initSingletonClusters();

                update2 = reducedNetwork.runLouvainAlgorithm(resolution, random);

                if (update2)
                {
                    update = true;

                    mergeClusters(reducedNetwork.getClusters());
                }
            }

            deleteClusteringStats();

            return update;
        }

        private Network()
        {
        }

        private void calcClusteringStats()
        {
            int i, j;

            clusterWeight = new double[nClusters];
            nNodesPerCluster = new int[nClusters];
            nodePerCluster = new int[nClusters][];

            for (i = 0; i < nNodes; i++)
            {
                clusterWeight[cluster[i]] += nodeWeight[i];
                nNodesPerCluster[cluster[i]]++;
            }

            for (i = 0; i < nClusters; i++)
            {
                nodePerCluster[i] = new int[nNodesPerCluster[i]];
                nNodesPerCluster[i] = 0;
            }

            for (i = 0; i < nNodes; i++)
            {
                j = cluster[i];
                nodePerCluster[j][nNodesPerCluster[j]] = i;
                nNodesPerCluster[j]++;
            }

            clusteringStatsAvailable = true;
        }

        private void deleteClusteringStats()
        {
            clusterWeight = null;
            nNodesPerCluster = null;
            nodePerCluster = null;

            clusteringStatsAvailable = false;
        }
    }

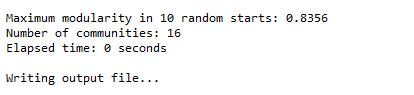

Results of the algorithm

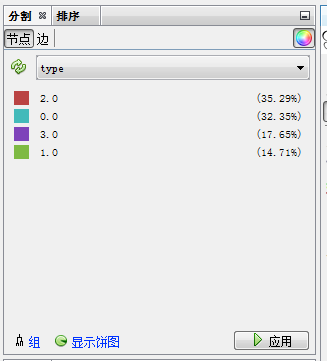

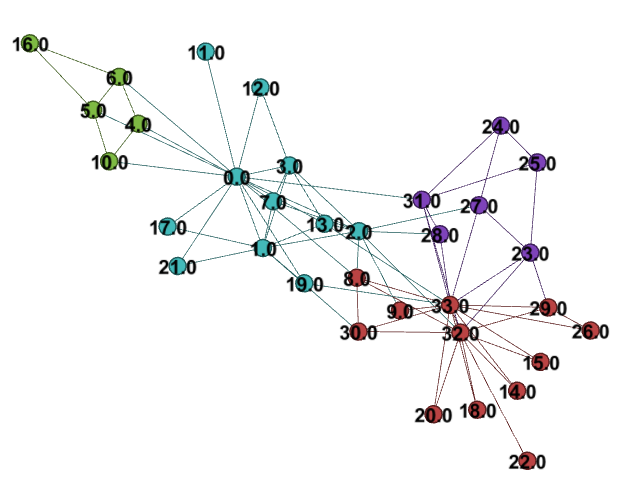

On the karate dataset

Clustering result

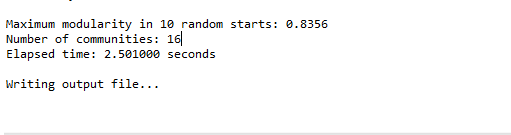

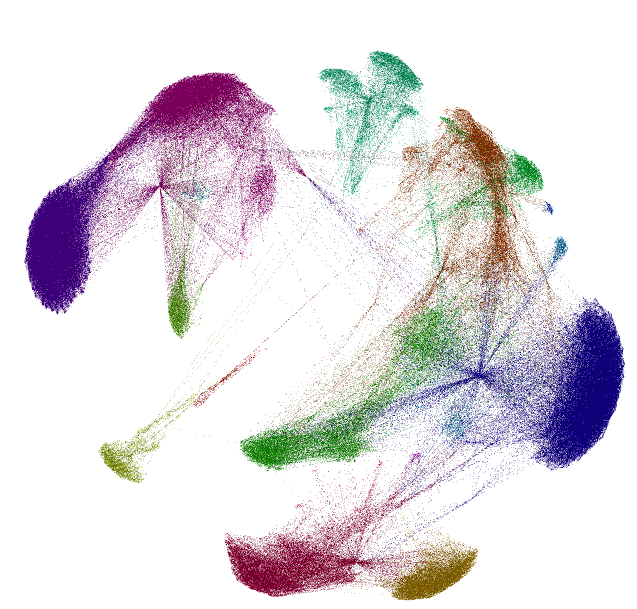

Results on the Facebook dataset with 4039 nodes

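The downloaded Java code is hard to follow, in particular what arrays such as `firstNeighborIndex` actually represent. So, borrowing from it, I implemented a new version whose code is somewhat easier to understand, and I added comments to its variables, functions, and key steps.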
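For readers puzzled by the same arrays, here is a small sketch of the layout they appear to use, a compressed adjacency list in which the neighbors of node `i` occupy positions `firstNeighborIndex[i]` up to (but excluding) `firstNeighborIndex[i + 1]` of the `neighbor` array. The class `CsrDemo` and its method `neighborsOf` are hypothetical names introduced for illustration and are not part of the downloaded code.

```java
import java.util.Arrays;

final class CsrDemo {
    final int[] firstNeighborIndex; // length nNodes + 1
    final int[] neighbor;           // every undirected edge appears twice

    CsrDemo(int nNodes, int[][] edges) {
        // Count the degree of each node.
        int[] degree = new int[nNodes];
        for (int[] e : edges) {
            degree[e[0]]++;
            degree[e[1]]++;
        }
        // Prefix sums give the start offset of each node's neighbor block.
        firstNeighborIndex = new int[nNodes + 1];
        for (int i = 0; i < nNodes; i++)
            firstNeighborIndex[i + 1] = firstNeighborIndex[i] + degree[i];
        // Fill the blocks, tracking how many slots of each block are used.
        neighbor = new int[firstNeighborIndex[nNodes]];
        int[] filled = new int[nNodes];
        for (int[] e : edges) {
            neighbor[firstNeighborIndex[e[0]] + filled[e[0]]++] = e[1];
            neighbor[firstNeighborIndex[e[1]] + filled[e[1]]++] = e[0];
        }
    }

    int[] neighborsOf(int node) {
        return Arrays.copyOfRange(neighbor, firstNeighborIndex[node], firstNeighborIndex[node + 1]);
    }

    public static void main(String[] args) {
        CsrDemo g = new CsrDemo(4, new int[][] {{0, 1}, {0, 2}, {1, 2}, {2, 3}});
        System.out.println(Arrays.toString(g.firstNeighborIndex)); // [0, 2, 4, 7, 8]
        System.out.println(Arrays.toString(g.neighborsOf(2)));     // [0, 1, 3]
    }
}
```

This layout avoids per-node list objects, which is presumably why the downloaded code prefers it, at the cost of readability.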

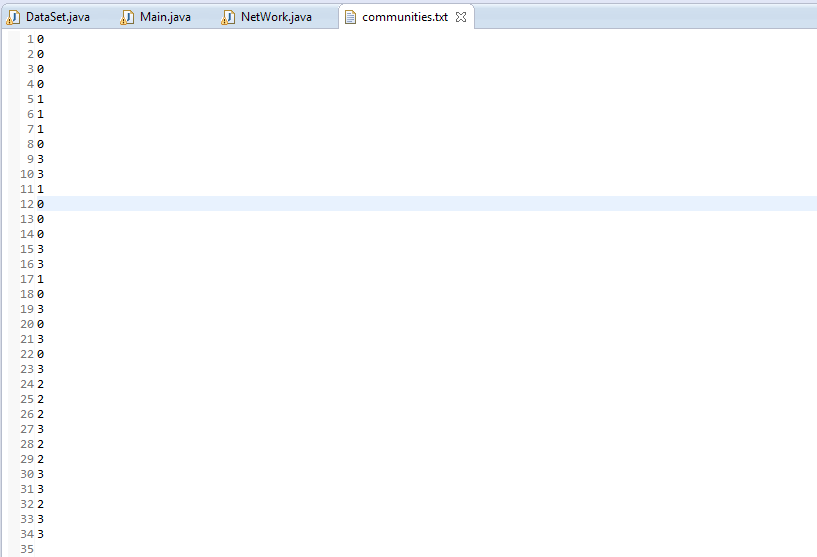

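I also added the ability to output the clustered network as a gml file. All node and edge information from the input is preserved, and in addition the clustering result is included: each node carries a `type` field recording which cluster it belongs to, so the detected communities can be inspected in Gephi directly by that `type` field.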
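To make the shape of that output concrete, the sketch below builds a GML string with a `type` attribute on every node. It is an illustration only: the class `GmlDemo`, the method `toGml`, and the exact indentation are assumptions, and it takes an edge list rather than the weight matrix used by the program.

```java
final class GmlDemo {
    /** cluster[i] is the cluster id of node i; edges[k] = {source, target}. */
    static String toGml(int[] cluster, int[][] edges) {
        StringBuilder sb = new StringBuilder();
        sb.append("graph\n[\n");
        for (int i = 0; i < cluster.length; i++) {
            sb.append("  node\n  [\n");
            sb.append("    id ").append(i).append("\n");
            sb.append("    type ").append(cluster[i]).append("\n"); // cluster membership
            sb.append("  ]\n");
        }
        for (int[] e : edges) {
            sb.append("  edge\n  [\n");
            sb.append("    source ").append(e[0]).append("\n");
            sb.append("    target ").append(e[1]).append("\n");
            sb.append("  ]\n");
        }
        sb.append("]\n");
        return sb.toString();
    }

    public static void main(String[] args) {
        // Three nodes, the first two in cluster 0 and the last in cluster 1.
        System.out.print(toGml(new int[] {0, 0, 1}, new int[][] {{0, 1}, {1, 2}}));
    }
}
```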

Unlike the downloaded version, our input file must give the number of nodes in the network on its first line.

The program code follows.
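As a hedged illustration of that input format, the sketch below parses text whose first line is the node count and whose remaining lines are tab-separated `node1 node2 [weight]` records, with the weight defaulting to 1. The class `InputFormatDemo` and method `parse` are hypothetical, and the sketch reads from a string rather than a file to stay self-contained.

```java
final class InputFormatDemo {
    /** Returns the symmetric weight matrix described by the input text. */
    static double[][] parse(String text) {
        String[] lines = text.split("\n");
        int nodeCount = Integer.parseInt(lines[0].trim()); // first line: number of nodes
        double[][] weight = new double[nodeCount][nodeCount];
        for (int i = 1; i < lines.length; i++) {
            String line = lines[i].trim();
            if (line.isEmpty())
                continue;
            String[] fields = line.split("\t");
            int node1 = Integer.parseInt(fields[0]);
            int node2 = Integer.parseInt(fields[1]);
            // The third column is optional; unweighted edges get weight 1.
            double w = (fields.length > 2) ? Double.parseDouble(fields[2]) : 1.0;
            weight[node1][node2] = w;
            weight[node2][node1] = w;
        }
        return weight;
    }

    public static void main(String[] args) {
        double[][] w = parse("3\n0\t1\n1\t2\t2.5\n");
        System.out.println(w[0][1] + " " + w[1][2]);
    }
}
```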

DataSet.java

    package communitydetection;

    import java.io.*;
    import java.util.*;

    public class DataSet {
        double weight[][];
        LinkedList neighborlist[];
        double totalEdgeWeights = 0;
        int nodecount;
        double nodeweight[];

        DataSet(String filename) throws IOException
        {
            BufferedReader reader = new BufferedReader(new FileReader(filename));
            String line = reader.readLine();
            nodecount = Integer.parseInt(line); // the first line of the file is the number of nodes
            weight = new double[nodecount][nodecount];
            neighborlist = new LinkedList[nodecount];
            for (int i = 0; i < nodecount; i++)
                neighborlist[i] = new LinkedList<Integer>();
            nodeweight = new double[nodecount];
            for (int i = 0; i < nodecount; i++)
                nodeweight[i] = 0;
            while ((line = reader.readLine()) != null)
            {
                String args[] = line.split("\t");
                int node1 = Integer.parseInt(args[0]);
                int node2 = Integer.parseInt(args[1]);
                if (node1 != node2)
                {
                    neighborlist[node1].add(node2);
                    neighborlist[node2].add(node1);
                }
                if (args.length > 2)
                {
                    double we = Double.parseDouble(args[2]);
                    weight[node1][node2] = we;
                    weight[node2][node1] = we;
                    totalEdgeWeights += we;
                    nodeweight[node1] += we;
                    if (node2 != node1)
                        nodeweight[node2] += we;
                }
                else
                {
                    weight[node1][node2] = 1;
                    weight[node2][node1] = 1;
                    totalEdgeWeights += 1;
                    nodeweight[node1] += 1;
                    if (node2 != node1)
                        nodeweight[node2] += 1;
                }
            }
            reader.close();
        }
    }

NetWork.java

    package communitydetection;

    import java.util.*;
    import java.io.*;

    public class NetWork {
        double weight[][];                // weight of the edge between two nodes
        LinkedList neighborlist[];        // the neighbors of each node
        double nodeweight[];              // weight of each node
        int nodecount;                    // total number of nodes in the graph
        int cluster[];                    // which cluster each node belongs to
        int clustercount;                 // total number of clusters
        double clusterweight[];           // weight of each cluster
        boolean clusteringStatsAvailable; // whether the clustering statistics are up to date
        int nodecountsPercluster[];       // number of nodes in each cluster
        int nodePercluster[][];           // nodePercluster[i][j] is the id of the j-th node in cluster i

        NetWork(String filename) throws IOException
        {
            DataSet ds = new DataSet(filename); // ds reads the contents of the input file
            weight = ds.weight;
            neighborlist = ds.neighborlist;
            nodecount = ds.nodecount;
            nodeweight = ds.nodeweight;
            initSingletonClusters();
        }

        NetWork()
        {
        }

        // Sum of all edge weights in the graph. The returned value is actually
        // twice that sum, because every edge is counted twice.
        public double getTotalEdgeWeight()
        {
            double totalEdgeWeight;
            int i;
            totalEdgeWeight = 0;
            for (i = 0; i < nodecount; i++)
            {
                for (int j = 0; j < neighborlist[i].size(); j++)
                {
                    int neighborid = (Integer) neighborlist[i].get(j);
                    totalEdgeWeight += weight[i][neighborid];
                }
            }
            return totalEdgeWeight;
        }

        public void initSingletonClusters() // assign every node its own cluster
        {
            int i;
            clustercount = nodecount;
            cluster = new int[nodecount];
            for (i = 0; i < nodecount; i++)
                cluster[i] = i;
            deleteClusteringStats();
        }

        // Count how many nodes each cluster has and record which nodes they are.
        private void calcClusteringStats()
        {
            int i, j;
            clusterweight = new double[clustercount];
            nodecountsPercluster = new int[clustercount];
            nodePercluster = new int[clustercount][];
            for (i = 0; i < nodecount; i++)
            {
                // the weight of a cluster is the sum of the weights of its nodes
                clusterweight[cluster[i]] += nodeweight[i];
                nodecountsPercluster[cluster[i]]++;
            }
            for (i = 0; i < clustercount; i++)
            {
                nodePercluster[i] = new int[nodecountsPercluster[i]];
                nodecountsPercluster[i] = 0;
            }
            for (i = 0; i < nodecount; i++)
            {
                j = cluster[i]; // j is the cluster id; store node i in the next free slot of cluster j
                nodePercluster[j][nodecountsPercluster[j]] = i;
                nodecountsPercluster[j]++;
            }
            clusteringStatsAvailable = true;
        }

        private void deleteClusteringStats()
        {
            clusterweight = null;
            nodecountsPercluster = null;
            nodePercluster = null;
            clusteringStatsAvailable = false;
        }

        public int[] getClusters()
        {
            return cluster;
        }

        // Compute the modularity. If the sum of all edge weights is m,
        // then resolution is 1/(2m).
        public double calcQualityFunction(double resolution)
        {
            double qualityFunction, totalEdgeWeight;
            int i, j, k;
            if (cluster == null)
                return Double.NaN;
            if (!clusteringStatsAvailable)
                calcClusteringStats();
            qualityFunction = 0;
            totalEdgeWeight = 0;
            for (i = 0; i < nodecount; i++)
            {
                j = cluster[i];
                for (k = 0; k < neighborlist[i].size(); k++)
                {
                    int neighborid = (Integer) neighborlist[i].get(k);
                    if (cluster[neighborid] == j)
                        qualityFunction += weight[i][neighborid];
                    totalEdgeWeight += weight[i][neighborid];
                }
                // the final totalEdgeWeight is also twice the sum of all edge weights
            }
            for (i = 0; i < clustercount; i++)
                qualityFunction -= clusterweight[i] * clusterweight[i] * resolution;
            qualityFunction /= totalEdgeWeight;
            return qualityFunction;
        }

        public int getNClusters()
        {
            return clustercount;
        }

        // newCluster holds the cluster assignment of the nodes of the reduced network
        public void mergeClusters(int[] newCluster)
        {
            int i, j, k;
            if (cluster == null)
                return;
            i = 0;
            for (j = 0; j < nodecount; j++)
            {
                // an index into newCluster corresponds to one cluster
                // of the network before the reduce step
                k = newCluster[cluster[j]];
                if (k > i)
                    i = k;
                cluster[j] = k;
            }
            clustercount = i + 1;
            deleteClusteringStats();
        }

        public NetWork getReducedNetwork() // reduce the whole network
        {
            double[] reducedNetworkEdgeWeight2;
            int i, j, k, l, m, reducedNetworkNEdges2;
            int[] reducedNetworkNeighbor2;
            NetWork reducedNetwork;
            if (cluster == null)
                return null;
            if (!clusteringStatsAvailable)
                calcClusteringStats();
            reducedNetwork = new NetWork();
            reducedNetwork.nodecount = clustercount; // after the reduce, each former cluster is one node
            reducedNetwork.neighborlist = new LinkedList[clustercount];
            for (i = 0; i < clustercount; i++)
                reducedNetwork.neighborlist[i] = new LinkedList<Integer>();
            reducedNetwork.nodeweight = new double[clustercount];
            reducedNetwork.weight = new double[clustercount][clustercount];
            reducedNetworkNeighbor2 = new int[clustercount - 1];
            reducedNetworkEdgeWeight2 = new double[clustercount];
            for (i = 0; i < clustercount; i++)
            {
                reducedNetworkNEdges2 = 0;
                for (j = 0; j < nodePercluster[i].length; j++)
                {
                    k = nodePercluster[i][j]; // k is the id of the j-th node of former cluster i
                    for (l = 0; l < neighborlist[k].size(); l++)
                    {
                        int nodeid = (Integer) neighborlist[k].get(l);
                        m = cluster[nodeid];
                        // before the reduce, clusters i and m are connected
                        // because the edge (k, nodeid) runs between them
                        if (m != i)
                        {
                            if (reducedNetworkEdgeWeight2[m] == 0)
                            {
                                // a former neighboring cluster becomes a neighboring node
                                reducedNetworkNeighbor2[reducedNetworkNEdges2] = m;
                                // reducedNetworkNEdges2 counts the neighbors of new node i (former cluster i)
                                reducedNetworkNEdges2++;
                            }
                            // update the weight of the edge between new node i and its new neighbor m
                            reducedNetworkEdgeWeight2[m] += weight[k][nodeid];
                        }
                    }
                    // new node i is former cluster i, which contained node k,
                    // so the weight of node i includes the weight of node k
                    reducedNetwork.nodeweight[i] += nodeweight[k];
                }
                for (j = 0; j < reducedNetworkNEdges2; j++)
                {
                    reducedNetwork.neighborlist[i].add(reducedNetworkNeighbor2[j]);
                    reducedNetwork.weight[i][reducedNetworkNeighbor2[j]] = reducedNetworkEdgeWeight2[reducedNetworkNeighbor2[j]];
                    // reset to 0 so the array can be reused instead of allocating
                    // a new array of neighbor edge weights for every node
                    reducedNetworkEdgeWeight2[reducedNetworkNeighbor2[j]] = 0;
                }
            }
            return reducedNetwork;
        }

        public boolean runLocalMovingAlgorithm(double resolution)
        {
            return runLocalMovingAlgorithm(resolution, new Random());
        }

        // Move every node into its "best" cluster.
        public boolean runLocalMovingAlgorithm(double resolution, Random random)
        {
            boolean update;
            double maxQualityFunction, qualityFunction;
            double[] clusterWeight, edgeWeightPerCluster;
            int bestCluster, i, j, k, l, nNeighboringClusters, nStableNodes, nUnusedClusters;
            int[] neighboringCluster, newCluster, nNodesPerCluster, nodeOrder, unusedCluster;
            if ((cluster == null) || (nodecount == 1))
                return false;
            update = false;
            clusterWeight = new double[nodecount];
            nNodesPerCluster = new int[nodecount]; // number of nodes in each cluster
            for (i = 0; i < nodecount; i++)
            {
                clusterWeight[cluster[i]] += nodeweight[i];
                nNodesPerCluster[cluster[i]]++;
            }
            nUnusedClusters = 0;
            unusedCluster = new int[nodecount]; // clusters that currently contain no node at all;
            for (i = 0; i < nodecount; i++)     // these are eliminated later
                if (nNodesPerCluster[i] == 0)
                {
                    unusedCluster[nUnusedClusters] = i;
                    nUnusedClusters++;
                }
            nodeOrder = new int[nodecount];
            for (i = 0; i < nodecount; i++)
                nodeOrder[i] = i;
            // shuffle the node order so that a fixed visiting order does not hurt the result
            for (i = 0; i < nodecount; i++)
            {
                j = random.nextInt(nodecount);
                k = nodeOrder[i];
                nodeOrder[i] = nodeOrder[j];
                nodeOrder[j] = k;
            }
            edgeWeightPerCluster = new double[nodecount];
            neighboringCluster = new int[nodecount - 1];
            nStableNodes = 0;
            i = 0;
            do
            {
                j = nodeOrder[i]; // j is the id of the current node
                nNeighboringClusters = 0;
                for (k = 0; k < neighborlist[j].size(); k++)
                {
                    int nodeid = (Integer) neighborlist[j].get(k); // nodeid is a neighbor of j
                    l = cluster[nodeid];                           // l is the cluster nodeid belongs to
                    if (edgeWeightPerCluster[l] == 0)              // collect the clusters adjacent to node j
                    {
                        neighboringCluster[nNeighboringClusters] = l;
                        nNeighboringClusters++;
                    }
                    // edgeWeightPerCluster[l] is the increase in the internal edge weight
                    // of cluster l if node j were added to it
                    edgeWeightPerCluster[l] += weight[j][nodeid];
                }
                // remove node j from its current cluster
                clusterWeight[cluster[j]] -= nodeweight[j];
                nNodesPerCluster[cluster[j]]--;
                if (nNodesPerCluster[cluster[j]] == 0)
                {
                    unusedCluster[nUnusedClusters] = cluster[j];
                    nUnusedClusters++;
                }
                bestCluster = -1; // index of the best cluster to join
                maxQualityFunction = 0;
                for (k = 0; k < nNeighboringClusters; k++)
                {
                    l = neighboringCluster[k];
                    qualityFunction = edgeWeightPerCluster[l] - nodeweight[j] * clusterWeight[l] * resolution;
                    if ((qualityFunction > maxQualityFunction) || ((qualityFunction == maxQualityFunction) && (l < bestCluster)))
                    {
                        bestCluster = l;
                        maxQualityFunction = qualityFunction;
                    }
                    edgeWeightPerCluster[l] = 0; // reset to 0 so the array can be reused
                }
                if (maxQualityFunction == 0) // no neighboring cluster gives an improvement
                {
                    bestCluster = unusedCluster[nUnusedClusters - 1];
                    nUnusedClusters--;
                }
                clusterWeight[bestCluster] += nodeweight[j];
                nNodesPerCluster[bestCluster]++; // the best cluster gains one node
                if (bestCluster == cluster[j])
                    nStableNodes++; // the node stays in its cluster, so it counts as stable
                else
                {
                    cluster[j] = bestCluster;
                    nStableNodes = 1;
                    update = true; // a node moved, so the stability of every node must be rechecked
                }
                i = (i < nodecount - 1) ? (i + 1) : 0;
            }
            while (nStableNodes < nodecount); // the optimization ends only once every node is stable
            newCluster = new int[nodecount];
            clustercount = 0;
            for (i = 0; i < nodecount; i++)
                if (nNodesPerCluster[i] > 0)
                {
                    // find the cluster ids still in use and renumber them starting from 0
                    newCluster[i] = clustercount;
                    clustercount++;
                }
            for (i = 0; i < nodecount; i++)
                cluster[i] = newCluster[cluster[i]];
            deleteClusteringStats();
            return update;
        }

        public boolean runLouvainAlgorithm(double resolution)
        {
            return runLouvainAlgorithm(resolution, new Random());
        }

        public boolean runLouvainAlgorithm(double resolution, Random random)
        {
            boolean update, update2;
            NetWork reducedNetwork;
            if ((cluster == null) || (nodecount == 1))
                return false;
            // update tells whether any node moved to a new cluster
            update = runLocalMovingAlgorithm(resolution, random);
            // fewer clusters than nodes means the network can be reduced;
            // the reduce step does not change the modularity value
            if (clustercount < nodecount)
            {
                reducedNetwork = getReducedNetwork();
                reducedNetwork.initSingletonClusters();
                // update2 tells whether any node of the reduced network moved to a new cluster
                update2 = reducedNetwork.runLouvainAlgorithm(resolution, random);
                if (update2)
                {
                    update = true;
                    // a change means at least that the cluster order changed
                    // or that the number of clusters decreased
                    mergeClusters(reducedNetwork.getClusters());
                }
            }
            deleteClusteringStats();
            return update;
        }

        public void generategml() throws IOException
        {
            BufferedWriter writer = new BufferedWriter(new FileWriter("generated.gml"));
            writer.append("graph\n");
            writer.append("[\n");
            for (int i = 0; i < nodecount; i++)
            {
                writer.append("  node\n");
                writer.append("  [\n");
                writer.append("    id " + i + "\n");
                writer.append("    type " + cluster[i] + "\n");
                writer.append("  ]\n");
            }
            for (int i = 0; i < nodecount; i++)
                for (int j = i + 1; j < nodecount; j++)
                {
                    if (weight[i][j] != 0)
                    {
                        writer.append("  edge\n");
                        writer.append("  [\n");
                        writer.append("    source " + i + "\n");
                        writer.append("    target " + j + "\n");
                        writer.append("  ]\n");
                    }
                }
            writer.append("]\n");
            writer.close();
        }
    }

Main.java

    package communitydetection;

    import java.io.*;
    import java.util.*;

    public class Main {
        static void detect() throws IOException
        {
            NetWork network;
            String filename = "karate_club_network.txt";
            double modularity, resolution, maxModularity;
            double beginTime, endTime;
            int[] cluster;
            int nRandomStarts = 5;
            int nIterations = 3;
            network = new NetWork(filename);
            resolution = 1.0 / network.getTotalEdgeWeight();
            beginTime = System.currentTimeMillis();
            cluster = null;
            int nClusters = -1;
            int i, j;
            maxModularity = Double.NEGATIVE_INFINITY;
            Random random = new Random(100);
            for (i = 0; i < nRandomStarts; i++)
            {
                if (nRandomStarts > 1)
                    System.out.format("Random start: %d%n", i + 1);
                network.initSingletonClusters(); // initialize the network: one cluster per node
                j = 0;
                boolean update = true; // update tells whether any node of the network moved
                do
                {
                    if (nIterations > 1)
                        System.out.format("Iteration: %d%n", j + 1);
                    update = network.runLouvainAlgorithm(resolution, random);
                    j++;
                    modularity = network.calcQualityFunction(resolution);
                    if (nIterations > 1)
                        System.out.format("Modularity: %.4f%n", modularity);
                }
                while ((j < nIterations) && update);
                if (modularity > maxModularity)
                {
                    cluster = network.getClusters();
                    nClusters = network.getNClusters();
                    maxModularity = modularity;
                }
                if (nRandomStarts > 1)
                {
                    if (nIterations == 1)
                        System.out.format("Modularity: %.4f%n", modularity);
                    System.out.println();
                }
            }
            endTime = System.currentTimeMillis();
            network.generategml();
            if (nRandomStarts == 1)
            {
                if (nIterations > 1)
                    System.out.println();
                System.out.format("Modularity: %.4f%n", maxModularity);
            }
            else
                System.out.format("Maximum modularity in %d random starts: %.4f%n", nRandomStarts, maxModularity);
            System.out.format("Number of communities: %d%n", nClusters);
            System.out.format("Elapsed time: %f seconds%n", (endTime - beginTime) / 1000.0);
            System.out.println();
            System.out.println("Writing output file...");
            System.out.println();
            // writeOutputFile("communities.txt", cluster);
        }

        // Write the cluster index of every node to a file.
        private static void writeOutputFile(String fileName, int[] cluster) throws IOException
        {
            BufferedWriter bufferedWriter;
            int i;
            bufferedWriter = new BufferedWriter(new FileWriter(fileName));
            for (i = 0; i < cluster.length; i++)
            {
                bufferedWriter.write(Integer.toString(cluster[i]));
                bufferedWriter.newLine();
            }
            bufferedWriter.close();
        }

        public static void main(String args[]) throws IOException
        {
            detect();
        }
    }

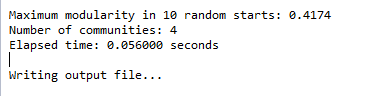

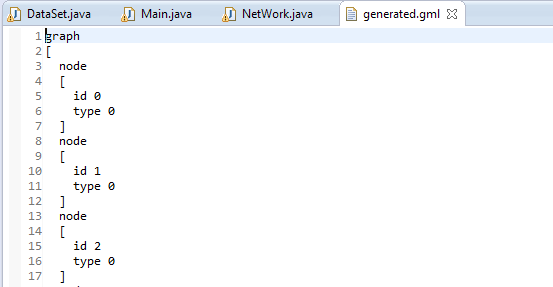

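Results of running the algorithm

Karate results

Clustering result

The generated gml file

After importing into Gephi, the partition by node `type`

Results on the Facebook dataset with 4039 nodes

The generated gml file imported into Gephi and partitioned by node `type`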
