CUDA中并行规约(Parallel Reduction)的优化

Parallel Reduction是NVIDIA-CUDA自带的例子,也几乎是所有CUDA学习者的的必看算法。在这个算法的优化中,Mark Harris为我们实现了7种不同的优化版本,将Bandwidth几乎提高到了峰值。相信我们通过仔细研读这个过程,一定能对CUDA程序的优化有更加深刻的认识。

下面我们来一一细看这几种优化方案,数据和思想均摘录自官方SDK中Samples的算法说明。

Parallel Reduction

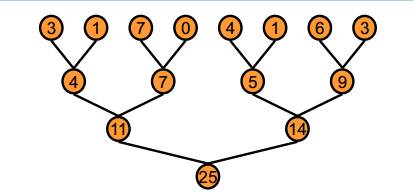

Parallel Reduction可以理解为将一个数组中的所有数相加求和的过程并行化。一般来讲,我们并行化的思路是基于“树”的二元规约,如下图:

但是这样的算法会产生一个问题,就是我们怎样让不同blocks中的线程通信呢?CUDA本身并不支持全局同步(global synchronization)。但是,CUDA的kernel运行时有一个特性,即同一时间只能有一个kernel运行,这样我们便可以将每一层规约作为一个kernel来重复递归调用。如下图:

我们的目标就是基于这个算法进行优化,达到“榨干CUDA性能”的目的。我们选取Bandwidth作为测量标准(因为Bandwidth侧重于测量memory-bound kernels,而GFLOP/s侧重于测量compute-bound kernels)。我们最终目标是实现最大的Data Bandwidth。测试环境为G80 GPU,384-bit memory interface, 900 MHz DDR,Bandwidth峰值384 * 1800 / 8 = 86.4 GB/s。

对于基本概念,放上一张图供参考:

Reduction #1: Interleaved Addressing

Interleaved Addressing的核心思想在于交错寻址,即典型的树状模型。示意图如下:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 | / This reduction interleaves which threads are active by using the modulo operator. This operator is very expensive on GPUs, and the interleaved inactivity means that no whole warps are active, which is also very inefficient / template & lt ; class T & gt ; global void reduce0 ( T g_idata , T g_odata , unsigned int n ) { T sdata = SharedMemory & lt ; T & gt ; ( ) ; // load shared mem unsigned int tid = threadIdx . x ; unsigned int i = blockIdx . x blockDim . x + threadIdx . x ; sdata [ tid ] = ( i & lt ; n ) ? g_idata [ i ] : 0 ; syncthreads ( ) ; // do reduction in shared mem for ( unsigned int s = 1 ; s & lt ; blockDim . x ; s = 2 ) { // modulo arithmetic is slow! if ( ( tid % ( 2 s ) ) == 0 ) { sdata [ tid ] + = sdata [ tid + s ] ; } syncthreads ( ) ; } // write result for this block to global mem if ( tid == 0 ) g_odata [ blockIdx . x ] = sdata [ 0 ] ; } |

存在的问题:

上述代码中for循环内部,容易出现线程束的分化(Warp Divergence),即同一个Warp中的线程需要执行不同的逻辑分支(详见这里),这是非常低效的,而且 & 运算也是非常慢的。测试结果如下(4M element):

1 2 3 4 5 | Time ( 2 ^ 22 ints ) Bandwidth — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — Kernel 1 ( interleaved addressing with 8.054 ms 2.083 GB / s divergent branching ) |

注意:Block Size = 128 threads for all tests.

Reduction #2: Interleaved Addressing

为了尽量减少1中的线程束的分化,我们这一步将分化的分支替换为跨步寻址(strided index):

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 | / This version uses contiguous threads, but its interleaved addressing results in many shared memory bank conflicts. / template & lt ; class T & gt ; global void reduce1 ( T g_idata , T g_odata , unsigned int n ) { T sdata = SharedMemory & lt ; T & gt ; ( ) ; // load shared mem unsigned int tid = threadIdx . x ; unsigned int i = blockIdx . x blockDim . x + threadIdx . x ; sdata [ tid ] = ( i & lt ; n ) ? g_idata [ i ] : 0 ; syncthreads ( ) ; // do reduction in shared mem for ( unsigned int s = 1 ; s & lt ; blockDim . x ; s = 2 ) { int index = 2 s * tid ; if ( index & lt ; blockDim . x ) { sdata [ index ] + = sdata [ index + s ] ; } syncthreads ( ) ; } // write result for this block to global mem if ( tid == 0 ) g_odata [ blockIdx . x ] = sdata [ 0 ] ; } |

示意图如下(注意下图与上图中Thread ID的区别):

这里我们遇到一个新的问题,即Shared Memory Bank Conflicts。为了达到高带宽,Shared Memory被划分成许多大小相同的内存块,叫做Banks。Banks可以同步访问,即不同的地址对不同的Banks可以同时读写。但是,如果两个内存请求的地址落到同一个Bank上,将会导致Bank Conflicts,严重影响并行程序的性能。

运行结果如下(4M element):

1 2 3 4 5 6 7 8 9 10 | Time ( 2 ^ 22 ints ) Bandwidth Step Speedup Culmulative Speedup — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — - Kernel 1 ( interleaved addressing with 8.054 ms 2.083 GB / s divergent branching ) Kernel 2 ( interleaved addressing with 3.456 ms 4.854 GB / s 2.33x 2.33x bank conflicts ) |

Reduction #3: Sequential Addressing

我们知道,CUDA中对数据的连续读取效率要比其它方式高。因此我们这一步优化主要是将取址方式变为连续的。我们只需要将2中跨步寻址(strided index)替换为基于threadID的逆向for循环即可。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 | / This version uses sequential addressing — no divergence or bank conflicts. / template & lt ; class T & gt ; global void reduce2 ( T g_idata , T g_odata , unsigned int n ) { T sdata = SharedMemory & lt ; T & gt ; ( ) ; // load shared mem unsigned int tid = threadIdx . x ; unsigned int i = blockIdx . x blockDim . x + threadIdx . x ; sdata [ tid ] = ( i & lt ; n ) ? g_idata [ i ] : 0 ; syncthreads ( ) ; // do reduction in shared mem for ( unsigned int s = blockDim . x / 2 ; s & gt ; 0 ; s & gt ; & gt ; = 1 ) { if ( tid & lt ; s ) { sdata [ tid ] + = sdata [ tid + s ] ; } syncthreads ( ) ; } // write result for this block to global mem if ( tid == 0 ) g_odata [ blockIdx . x ] = sdata [ 0 ] ; } |

示意图如下:

但新的问题又出现了,我们发现在for循环中,因为 if (tid < s) 的缘故,在第一次循环的时候有一半的线程都处于闲置状态!如果我们能全部利用的话,相信性能还会提升很多。这也是我们以后要进行优化的地方,避免线程闲置。

本次运行结果如下(4M element):

1 2 3 4 5 6 7 8 9 10 11 12 13 | Time ( 2 ^ 22 ints ) Bandwidth Step Speedup Culmulative Speedup — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — - Kernel 1 ( interleaved addressing with 8.054 ms 2.083 GB / s divergent branching ) Kernel 2 ( interleaved addressing with 3.456 ms 4.854 GB / s 2.33x 2.33x bank conflicts ) Kernel 3 ( sequential addressing ) 1.722 ms 9.741 GB / s 2.01x 4.68x |

Reduction #4: First Add During Load

在以前的所有版本中,我们都是事先将global的数据读入共享内存 sdata[tid] = (i < n) ? g_idata[i] : 0; ,我们可不可以在这一步进行优化呢?当然,我们这一步优化的目的是在将数据读入到共享内存时同时进行第一次(第一层)规约。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 | / This version uses n/2 threads — it performs the first level of reduction when reading from global memory. / template & lt ; class T & gt ; global void reduce3 ( T g_idata , T g_odata , unsigned int n ) { T sdata = SharedMemory & lt ; T & gt ; ( ) ; // perform first level of reduction, // reading from global memory, writing to shared memory unsigned int tid = threadIdx . x ; unsigned int i = blockIdx . x ( blockDim . x* 2 ) + threadIdx . x ; T mySum = ( i & lt ; n ) ? g_idata [ i ] : 0 ; if ( i + blockDim . x & lt ; n ) mySum + = g_idata [ i + blockDim . x ] ; sdata [ tid ] = mySum ; syncthreads ( ) ; // do reduction in shared mem for ( unsigned int s = blockDim . x / 2 ; s & gt ; 0 ; s & gt ; & gt ; = 1 ) { if ( tid & lt ; s ) { sdata [ tid ] = mySum = mySum + sdata [ tid + s ] ; } syncthreads ( ) ; } // write result for this block to global mem if ( tid == 0 ) g_odata [ blockIdx . x ] = sdata [ 0 ] ; } |

本次运行结果如下(4M element):

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 | Time ( 2 ^ 22 ints ) Bandwidth Step Speedup Culmulative Speedup — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — - Kernel 1 ( interleaved addressing with 8.054 ms 2.083 GB / s divergent branching ) Kernel 2 ( interleaved addressing with 3.456 ms 4.854 GB / s 2.33x 2.33x bank conflicts ) Kernel 3 ( sequential addressing ) 1.722 ms 9.741 GB / s 2.01x 4.68x Kernel 4 ( first add during 0.965 ms 17.377 GB / s 1.78x 8.34x global load ) |

Reduction #5: Unroll The Loop

这时我们的数据带宽已经达到了17 GB/s,而我们清楚Reduction的算术强度(arithmetic intensity)很低,因此系统的瓶颈可能是由于Parallel Slowdown,即系统对于指令、调度的花费超过了实际数据处理的花费。在本例中即address arithmetic and loop overhead。

我们的解决办法是将for循环展开(Unroll the loop)。我们知道,在Reduce的过程中,活动的线程数是越来越少的,当活动的线程数少于32个时,我们将只有一个线程束(Warp)。在单个Warp中,指令的执行遵循SIMD(Single Instruction Multiple Data)模式,也就是说在活动线程数少于32个时,我么不需要进行同步控制,即我们不需要if (tid < s) 。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 | / This version unrolls the last warp to avoid synchronization where it isn’t needed. Note, this kernel needs a minimum of 64sizeof(T) bytes of shared memory. In other words if blockSize <= 32, allocate 64sizeof(T) bytes. If blockSize > 32, allocate blockSizesizeof(T) bytes. / template < class T , unsigned int blockSize > global void reduce4 ( T g_idata , T g_odata , unsigned int n ) { T sdata = SharedMemory < T > ( ) ; // perform first level of reduction, // reading from global memory, writing to shared memory unsigned int tid = threadIdx . x ; unsigned int i = blockIdx . x ( blockDim . x 2 ) + threadIdx . x ; T mySum = ( i < n ) ? g_idata [ i ] : 0 ; if ( i + blockSize < n ) mySum + = g_idata [ i + blockSize ] ; sdata [ tid ] = mySum ; syncthreads ( ) ; // do reduction in shared mem for ( unsigned int s = blockDim . x / 2 ; s > 32 ; s >> = 1 ) { if ( tid < s ) { sdata [ tid ] = mySum = mySum + sdata [ tid + s ] ; } syncthreads ( ) ; } if ( tid < 32 ) { // now that we are using warp-synchronous programming (below) // we need to declare our shared memory volatile so that the compiler // doesn’t reorder stores to it and induce incorrect behavior. volatile T * smem = sdata ; if ( blockSize >= 64 ) { smem [ tid ] = mySum = mySum + smem [ tid + 32 ] ; } if ( blockSize >= 32 ) { smem [ tid ] = mySum = mySum + smem [ tid + 16 ] ; } if ( blockSize >= 16 ) { smem [ tid ] = mySum = mySum + smem [ tid + 8 ] ; } if ( blockSize >= 8 ) { smem [ tid ] = mySum = mySum + smem [ tid + 4 ] ; } if ( blockSize >= 4 ) { smem [ tid ] = mySum = mySum + smem [ tid + 2 ] ; } if ( blockSize >= 2 ) { smem [ tid ] = mySum = mySum + smem [ tid + 1 ] ; } } // write result for this block to global mem if ( tid == 0 ) g_odata [ blockIdx . x ] = sdata [ 0 ] ; } |

注意,这在所有的warps中都省去了无用过的过程,不只是最后一个warp。如果不进行循环展开,则所有的warps都会执行for中的每一次循环和每一次if判断。

本次运行结果如下(4M element):

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 | Time ( 2 ^ 22 ints ) Bandwidth Step Speedup Culmulative Speedup — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — - Kernel 1 ( interleaved addressing with 8.054 ms 2.083 GB / s divergent branching ) Kernel 2 ( interleaved addressing with 3.456 ms 4.854 GB / s 2.33x 2.33x bank conflicts ) Kernel 3 ( sequential addressing ) 1.722 ms 9.741 GB / s 2.01x 4.68x Kernel 4 ( first add during 0.965 ms 17.377 GB / s 1.78x 8.34x global load ) Kernel 5 ( unroll last warp ) 0.536 ms 31.289 GB / s 1.8x 15.01x |

今天我们暂时先分析到这里,SDK的示例中还有第六种和第七种优化方案,分别是Completely Unrolled和Multiple Adds / Thread,最后性能提升达30+x,我们以后有机会再仔细进行分析

还没有评论,来说两句吧...