【模式识别】K-近邻分类算法KNN

K-近邻(K-Nearest Neighbors, KNN)是一种很好理解的分类算法,简单说来就是从训练样本中找出K个与其最相近的样本,然后看这K个样本中哪个类别的样本多,则待判定的值(或说抽样)就属于这个类别。

KNN算法的步骤

- 计算已知类别数据集中每个点与当前点的距离;

- 选取与当前点距离最小的K个点;

- 统计前K个点中每个类别的样本出现的频率;

- 返回前K个点出现频率最高的类别作为当前点的预测分类。

OpenCV中使用CvKNearest

OpenCV中实现CvKNearest类可以实现简单的KNN训练和预测。

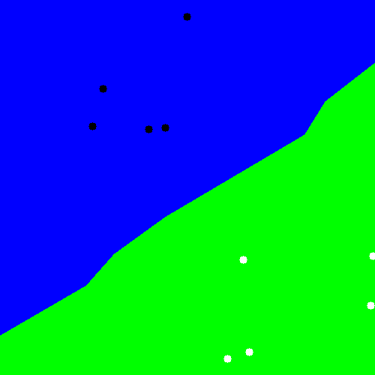

int main(){float labels[10] = {0,0,0,0,0,1,1,1,1,1};Mat labelsMat(10, 1, CV_32FC1, labels);cout<<labelsMat<<endl;float trainingData[10][2];srand(time(0));for(int i=0;i<5;i++){trainingData[i][0] = rand()%255+1;trainingData[i][1] = rand()%255+1;trainingData[i+5][0] = rand()%255+255;trainingData[i+5][1] = rand()%255+255;}Mat trainingDataMat(10, 2, CV_32FC1, trainingData);cout<<trainingDataMat<<endl;CvKNearest knn;knn.train(trainingDataMat,labelsMat,Mat(), false, 2 );// Data for visual representationint width = 512, height = 512;Mat image = Mat::zeros(height, width, CV_8UC3);Vec3b green(0,255,0), blue (255,0,0);for (int i = 0; i < image.rows; ++i){for (int j = 0; j < image.cols; ++j){const Mat sampleMat = (Mat_<float>(1,2) << i,j);Mat response;float result = knn.find_nearest(sampleMat,1);if (result !=0){image.at<Vec3b>(j, i) = green;}elseimage.at<Vec3b>(j, i) = blue;}}// Show the training datafor(int i=0;i<5;i++){circle( image, Point(trainingData[i][0], trainingData[i][1]),5, Scalar( 0, 0, 0), -1, 8);circle( image, Point(trainingData[i+5][0], trainingData[i+5][1]),5, Scalar(255, 255, 255), -1, 8);}imshow("KNN Simple Example", image); // show it to the userwaitKey(10000);}

使用的是之前BP神经网络中的例子,分类结果如下:

预测函数find_nearest()除了输入sample参数外还有些其他的参数:

float CvKNearest::find_nearest(const Mat& samples, int k, Mat* results=0,const float** neighbors=0, Mat* neighborResponses=0, Mat* dist=0 )

即,samples为样本数*特征数的浮点矩阵;K为寻找最近点的个数;results与预测结果;neibhbors为k*样本数的指针数组(输入为const,实在不知为何如此设计);neighborResponse为样本数*k的每个样本K个近邻的输出值;dist为样本数*k的每个样本K个近邻的距离。

另一个例子

OpenCV refman也提供了一个类似的示例,使用CvMat格式的输入参数:

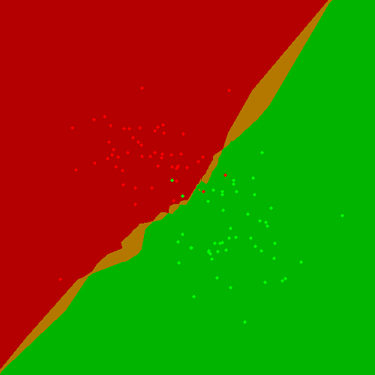

int main( int argc, char** argv ){const int K = 10;int i, j, k, accuracy;float response;int train_sample_count = 100;CvRNG rng_state = cvRNG(-1);CvMat* trainData = cvCreateMat( train_sample_count, 2, CV_32FC1 );CvMat* trainClasses = cvCreateMat( train_sample_count, 1, CV_32FC1 );IplImage* img = cvCreateImage( cvSize( 500, 500 ), 8, 3 );float _sample[2];CvMat sample = cvMat( 1, 2, CV_32FC1, _sample );cvZero( img );CvMat trainData1, trainData2, trainClasses1, trainClasses2;// form the training samplescvGetRows( trainData, &trainData1, 0, train_sample_count/2 );cvRandArr( &rng_state, &trainData1, CV_RAND_NORMAL, cvScalar(200,200), cvScalar(50,50) );cvGetRows( trainData, &trainData2, train_sample_count/2, train_sample_count );cvRandArr( &rng_state, &trainData2, CV_RAND_NORMAL, cvScalar(300,300), cvScalar(50,50) );cvGetRows( trainClasses, &trainClasses1, 0, train_sample_count/2 );cvSet( &trainClasses1, cvScalar(1) );cvGetRows( trainClasses, &trainClasses2, train_sample_count/2, train_sample_count );cvSet( &trainClasses2, cvScalar(2) );// learn classifierCvKNearest knn( trainData, trainClasses, 0, false, K );CvMat* nearests = cvCreateMat( 1, K, CV_32FC1);for( i = 0; i < img->height; i++ ){for( j = 0; j < img->width; j++ ){sample.data.fl[0] = (float)j;sample.data.fl[1] = (float)i;// estimate the response and get the neighbors’ labelsresponse = knn.find_nearest(&sample,K,0,0,nearests,0);// compute the number of neighbors representing the majorityfor( k = 0, accuracy = 0; k < K; k++ ){if( nearests->data.fl[k] == response)accuracy++;}// highlight the pixel depending on the accuracy (or confidence)cvSet2D( img, i, j, response == 1 ?(accuracy > 5 ? CV_RGB(180,0,0) : CV_RGB(180,120,0)) :(accuracy > 5 ? CV_RGB(0,180,0) : CV_RGB(120,120,0)) );}}// display the original training samplesfor( i = 0; i < train_sample_count/2; i++ ){CvPoint pt;pt.x = cvRound(trainData1.data.fl[i*2]);pt.y = cvRound(trainData1.data.fl[i*2+1]);cvCircle( img, pt, 2, CV_RGB(255,0,0), CV_FILLED );pt.x = cvRound(trainData2.data.fl[i*2]);pt.y = cvRound(trainData2.data.fl[i*2+1]);cvCircle( img, pt, 2, CV_RGB(0,255,0), CV_FILLED );}cvNamedWindow( "classifier result", 1 );cvShowImage( "classifier result", img );cvWaitKey(0);cvReleaseMat( &trainClasses );cvReleaseMat( &trainData );return 0;}

分类结果:

KNN的思想很好理解,也非常容易实现,同时分类结果较高,对异常值不敏感。但计算复杂度较高,不适于大数据的分类问题。

还没有评论,来说两句吧...