Solving a Regression Problem with Python

title: Solving a Regression Problem with Python

cover: https://gitee.com/Asimok/picgo/raw/master/img/MacBookPro/20210801104603.png

categories: Machine Learning

tags:

- Python

- Machine Learning

keywords: 'Machine Learning,Python'

date: 2021-8-1

Solving a Regression Problem with Python

The model is a straight line, y = wx + b, where w is the slope and b is the y-intercept.

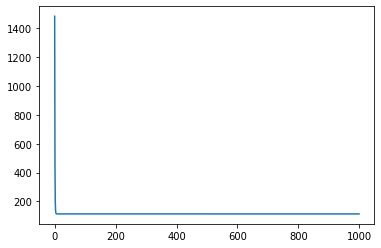
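The code in this post fits the line by minimizing the mean squared error with gradient descent. As a sketch of what it computes, the loss and its gradients can be written out as follows. The symbols $N$ (number of points), $(x_i, y_i)$ (the data points), and $\eta$ (the learning rate) are notation chosen here for illustration:

$$
L(w, b) = \frac{1}{N} \sum_{i=1}^{N} \bigl(y_i - (w x_i + b)\bigr)^2
$$

$$
\frac{\partial L}{\partial b} = \frac{2}{N} \sum_{i=1}^{N} \bigl((w x_i + b) - y_i\bigr),
\qquad
\frac{\partial L}{\partial w} = \frac{2}{N} \sum_{i=1}^{N} x_i \bigl((w x_i + b) - y_i\bigr)
$$

$$
b' = b - \eta \, \frac{\partial L}{\partial b},
\qquad
w' = w - \eta \, \frac{\partial L}{\partial w}
$$

Each iteration moves $(w, b)$ a small step against the gradient, which is the direction in which the loss decreases fastest locally.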

```python
import numpy as np
import matplotlib.pyplot as plt


# Compute the mean squared error of the line for a given (w, b)
def compute_error_for_line_given_points(b, w, points):
    totalError = 0
    for i in range(0, len(points)):
        x = points[i, 0]
        y = points[i, 1]
        # compute mean-squared-error
        totalError += (y - (w * x + b)) ** 2
    # average loss for each point
    return totalError / float(len(points))


def step_gradient(b_current, w_current, points, learningRate):
    b_gradient = 0
    w_gradient = 0
    N = float(len(points))
    for i in range(0, len(points)):
        x = points[i, 0]
        y = points[i, 1]
        # take the derivative; divide by N to average over the points
        # grad_b = 2(wx+b-y)
        b_gradient += (2/N) * ((w_current * x + b_current) - y)
        # grad_w = 2(wx+b-y)*x
        w_gradient += (2/N) * x * ((w_current * x + b_current) - y)
    # update b' w'
    # the gradient points in the direction of steepest increase,
    # so step in the opposite direction
    new_b = b_current - (learningRate * b_gradient)
    new_w = w_current - (learningRate * w_gradient)
    # record the loss after this step in the module-level list `loss`
    temploss = compute_error_for_line_given_points(new_b, new_w, points)
    loss.append(temploss)
    return [new_b, new_w]


def gradient_descent_runner(points, starting_b, starting_w, learning_rate, num_iterations):
    b = starting_b
    w = starting_w
    # update for several times
    for i in range(num_iterations):
        b, w = step_gradient(b, w, np.array(points), learning_rate)
    return [b, w]


def run():
    points = np.genfromtxt("data.csv", delimiter=",")
    learning_rate = 0.0001
    initial_b = 0  # initial y-intercept guess
    initial_w = 0  # initial slope guess
    num_iterations = 1000
    print("Starting gradient descent at b = {0}, w = {1}, error = {2}"
          .format(initial_b, initial_w,
                  compute_error_for_line_given_points(initial_b, initial_w, points)))
    print("Running...")
    [b, w] = gradient_descent_runner(points, initial_b, initial_w, learning_rate, num_iterations)
    print("After {0} iterations b = {1}, w = {2}, error = {3}"
          .format(num_iterations, b, w,
                  compute_error_for_line_given_points(b, w, points)))


loss = []
run()
```

Output:

```
Starting gradient descent at b = 0, w = 0, error = 5565.107834483211
Running...
After 1000 iterations b = 0.08893651993741346, w = 1.4777440851894448, error = 112.61481011613473
```

Plot the loss recorded at each iteration:

```python
x = [i for i in range(0, len(loss))]
plt.plot(x, loss)
```
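The per-point loop in `step_gradient` can also be expressed with NumPy array operations. The sketch below is one possible vectorized variant, not the post's own code. The names `mse`, `step_gradient_vectorized`, and `fit` are hypothetical, and because `data.csv` is not available here it runs on synthetic data generated for illustration, with a larger learning rate suited to that data's scale. The result is compared against `np.polyfit`, which solves the same least-squares problem directly.

```python
import numpy as np


def mse(b, w, points):
    """Mean squared error of the line y = w*x + b over an (N, 2) array."""
    x, y = points[:, 0], points[:, 1]
    return float(np.mean((y - (w * x + b)) ** 2))


def step_gradient_vectorized(b, w, points, learning_rate):
    """One gradient-descent update computed with array operations."""
    x, y = points[:, 0], points[:, 1]
    residual = (w * x + b) - y                 # shape (N,)
    b_gradient = 2.0 * residual.mean()         # (2/N) * sum(wx + b - y)
    w_gradient = 2.0 * (x * residual).mean()   # (2/N) * sum(x * (wx + b - y))
    return b - learning_rate * b_gradient, w - learning_rate * w_gradient


def fit(points, learning_rate=0.1, num_iterations=2000):
    """Run gradient descent from (b, w) = (0, 0) and record the loss per step."""
    b, w = 0.0, 0.0
    history = []
    for _ in range(num_iterations):
        b, w = step_gradient_vectorized(b, w, points, learning_rate)
        history.append(mse(b, w, points))
    return b, w, history


# Synthetic data: an assumption for illustration (the post reads data.csv).
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=200)
y = 1.5 * x + 0.5 + rng.normal(0.0, 0.05, size=200)
demo_points = np.column_stack([x, y])

b_fit, w_fit, history = fit(demo_points)

# Least-squares reference; polyfit returns [slope, intercept] for degree 1.
w_ref, b_ref = np.polyfit(x, y, 1)
print("gradient descent: b = {0}, w = {1}".format(b_fit, w_fit))
print("np.polyfit:       b = {0}, w = {1}".format(b_ref, w_ref))
```

Returning the loss history from `fit` instead of appending to a module-level list keeps the function self-contained, so it can be called several times without mixing runs.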
