Code

    package com.zxl.flink

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

    /**
     * Streaming WordCount with Flink.
     */
    object FlinkStreamWordCount {

      def main(args: Array[String]): Unit = {
        // 1. Initialize the Flink streaming environment
        val streamEnv: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

        // Set the default parallelism of all operators to 1
        streamEnv.setParallelism(1)

        // 2. Import the implicit conversions
        import org.apache.flink.streaming.api.scala._

        // 3. Read data from a socket stream (DataStream is the counterpart of Spark's DStream)
        // val stream: DataStream[String] = streamEnv.socketTextStream("hadoop101", 8888)
        // Start a listener first with: nc -lk 8888
        val stream: DataStream[String] = streamEnv.socketTextStream("localhost", 8888)

        // 4. Transform and process the data
        val result: DataStream[(String, Int)] = stream.flatMap(_.split(" "))
          .map((_, 1)).setParallelism(2)
          // Grouping operator: 0 or 1 is a tuple index. In the preceding
          // DataStream of pairs, 0 is the word and 1 is its count.
          .keyBy(0)
          .sum(1).setParallelism(2) // aggregation operator (running sum)

        // 5. Print the result; "结果" ("result") is the prefix of each output line
        result.print("结果").setParallelism(1)

        // 6. Start the streaming job
        streamEnv.execute("wordcount")
      }
    }
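For quick experiments it can be convenient to run the same pipeline without a socket listener. The sketch below is one possible variant, not the author's code: the object name FlinkLocalWordCount, the helper tokenize, the job name, and the three sample lines are all assumptions made for illustration. It reads from fromElements instead of socketTextStream and groups with a key selector function (keyBy(_._1)) rather than the positional index 0, since positional keys were deprecated in later Flink releases.

```scala
import org.apache.flink.streaming.api.scala._

// Hypothetical variant of the WordCount job, for illustration only.
object FlinkLocalWordCount {

  // Split a line on whitespace and drop empty tokens.
  def tokenize(line: String): Seq[String] =
    line.split("\\s+").filter(_.nonEmpty).toSeq

  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    env.setParallelism(1)

    // Assumed sample input; replaces the socket source so no nc listener is needed.
    val lines: DataStream[String] =
      env.fromElements("hello flink", "hello spark", "hello flink")

    val counts: DataStream[(String, Int)] = lines
      .flatMap(line => tokenize(line))
      .map(word => (word, 1))
      .keyBy(_._1) // key selector function instead of the positional index 0
      .sum(1)      // running sum over the second tuple field

    counts.print("结果")
    env.execute("wordcount-local")
  }
}
```

Because the source is bounded, this job finishes on its own once the sample lines are consumed, which makes it easier to rerun from the IDE than the socket version.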

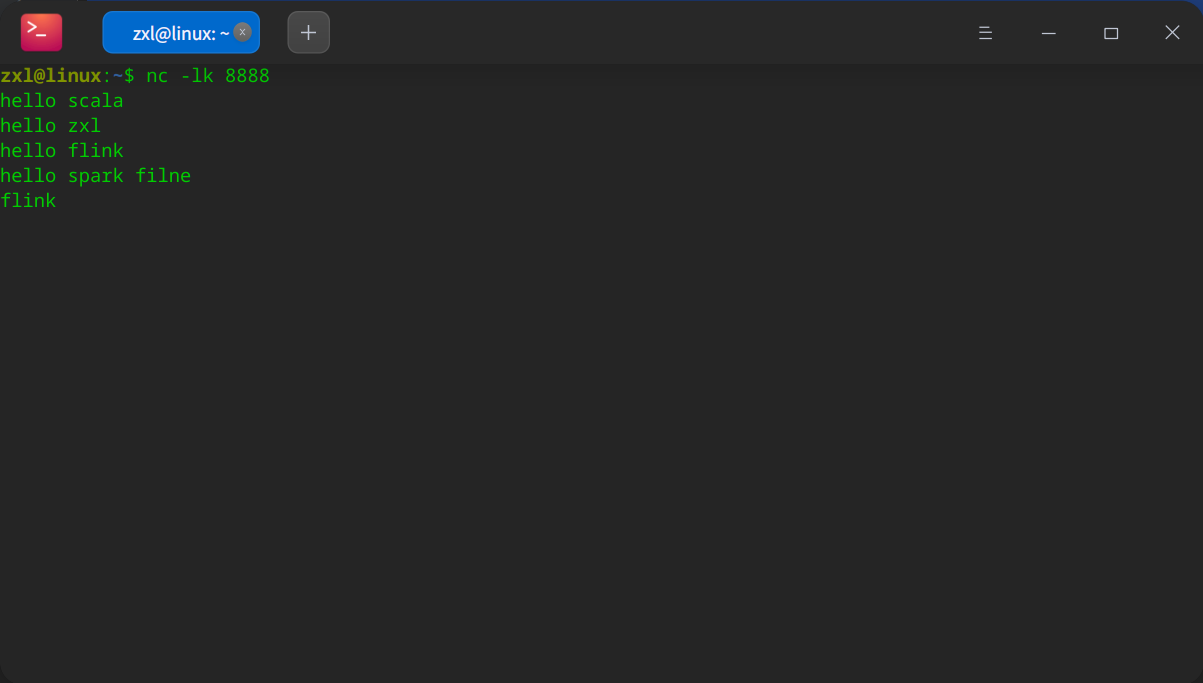

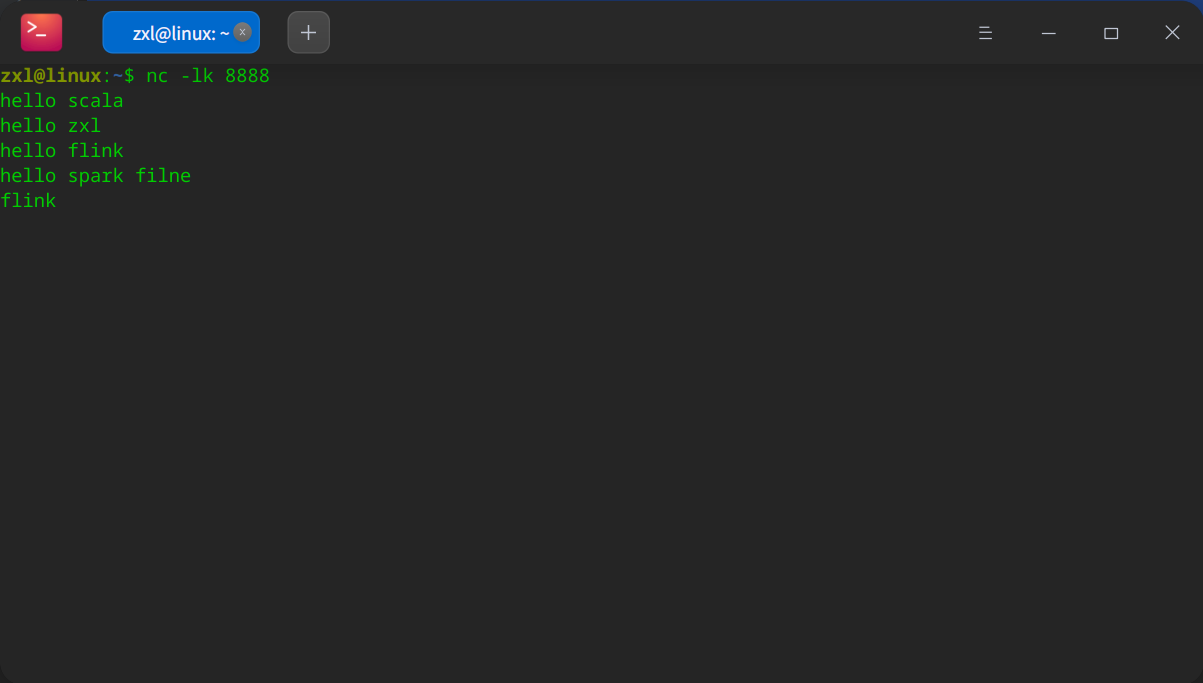

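Debugging

Sending data

Start a listener with nc -lk 8888 before launching the job, then type space-separated words into it.

Console log output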

    结果> (scala,1)
    结果> (hello,1)
    结果> (zxl,1)
    结果> (hello,2)
    结果> (hello,3)
    结果> (flink,1)
    结果> (hello,4)
    结果> (spark,1)
    结果> (filne,1)
    结果> (flink,2)
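The output above is a running count: every incoming word produces a new (word, count) record instead of one final total per word. A minimal plain-Scala sketch of that behaviour, without Flink, might look like the following. The object name RunningWordCount, the helper runningCounts, and the three sample lines are assumptions made for illustration; the actual input typed into nc is not shown in this post.

```scala
import scala.collection.mutable

// Hypothetical, Flink-free simulation of flatMap -> map -> keyBy -> sum.
object RunningWordCount {

  // Emits one (word, runningCount) pair per incoming word, in arrival order.
  def runningCounts(lines: Seq[String]): List[(String, Int)] = {
    val counts = mutable.Map.empty[String, Int]       // per-key state, like keyed state in Flink
    val out = mutable.ListBuffer.empty[(String, Int)] // records emitted downstream
    for (line <- lines; word <- line.split(" ") if word.nonEmpty) {
      val updated = counts.getOrElse(word, 0) + 1
      counts(word) = updated
      out += ((word, updated))
    }
    out.toList
  }

  def main(args: Array[String]): Unit = {
    // Assumed sample input, not the data used in the post.
    val sample = Seq("hello flink", "hello spark", "hello flink")
    runningCounts(sample).foreach(pair => println(s"结果> $pair"))
    // 结果> (hello,1)
    // 结果> (flink,1)
    // 结果> (hello,2)
    // 结果> (spark,1)
    // 结果> (hello,3)
    // 结果> (flink,2)
  }
}
```

In the real job the map and sum operators run with parallelism 2, so records for different words may interleave in a different order, but the counts for any single word still increase one step at a time.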

Appendix: pom file

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.zxl</groupId>
        <artifactId>Flink-SXT</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-scala_2.11</artifactId>
                <version>1.9.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-streaming-scala_2.11</artifactId>
                <version>1.9.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-common</artifactId>
                <version>2.7.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.7.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-connector-kafka_2.11</artifactId>
                <version>1.9.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.kafka</groupId>
                <artifactId>kafka-clients</artifactId>
                <version>0.11.0.3</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-connector-filesystem_2.11</artifactId>
                <version>1.9.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.bahir</groupId>
                <artifactId>flink-connector-redis_2.11</artifactId>
                <version>1.0</version>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>5.1.44</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-table-planner_2.11</artifactId>
                <version>1.9.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-table-api-scala-bridge_2.11</artifactId>
                <version>1.9.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-cep-scala_2.11</artifactId>
                <version>1.9.1</version>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <!-- This plugin compiles Scala code into class files -->
                <plugin>
                    <groupId>net.alchim31.maven</groupId>
                    <artifactId>scala-maven-plugin</artifactId>
                    <version>3.4.6</version>
                    <executions>
                        <execution>
                            <!-- Bind Scala compilation to the Maven build: compile for main sources, testCompile for test sources -->
                            <goals>
                                <goal>compile</goal>
                                <goal>testCompile</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-assembly-plugin</artifactId>
                    <version>3.0.0</version>
                    <configuration>
                        <descriptorRefs>
                            <descriptorRef>jar-with-dependencies</descriptorRef>
                        </descriptorRefs>
                    </configuration>
                    <executions>
                        <execution>
                            <id>make-assembly</id>
                            <phase>package</phase>
                            <goals>
                                <goal>single</goal>
                            </goals>
                        </execution>
                    </executions>
                </plugin>
            </plugins>
        </build>
    </project>
