canal environment setup (docker)


Official guide: https://github.com/alibaba/canal/wiki/Docker-QuickStart

Docker image repository: https://hub.docker.com/r/canal/canal-server/tags

Configuration reference: https://github.com/alibaba/canal/wiki/AdminGuide

*********************

Architecture

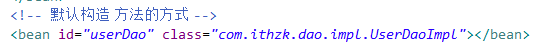

Standalone: a single canal server node, with a single canal client connecting to it directly

![2021062515525815.png][]

Client connection (excerpt from canal's `CanalConnectors`)

```java
public class CanalConnectors {

    // Connect to one fixed canal server address
    public static CanalConnector newSingleConnector(SocketAddress address, String destination,
                                                    String username, String password) {
        SimpleCanalConnector canalConnector = new SimpleCanalConnector(address, username, password, destination);
        canalConnector.setSoTimeout(60000);       // socket read timeout, 60000 ms
        canalConnector.setIdleTimeout(3600000);   // idle timeout, 3600000 ms (1 hour)
        return canalConnector;
    }

    // ... other factory methods omitted
}
```

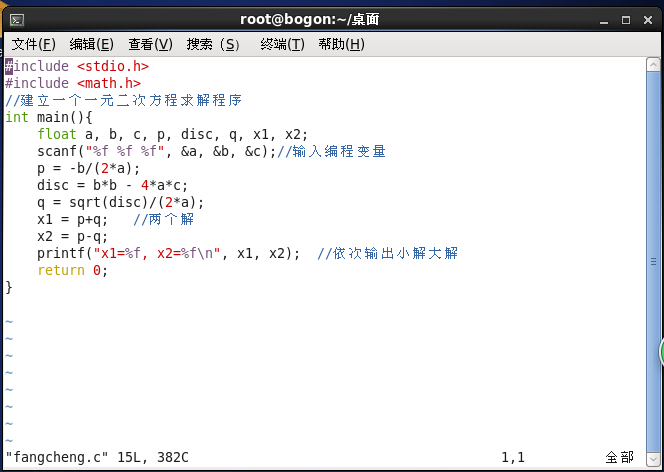

canal server HA: the canal servers are deployed as a cluster, and the canal client connects directly using a static list of canal server addresses

![watermark_type_ZmFuZ3poZW5naGVpdGk_shadow_10_text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzkzMTYyNQ_size_16_color_FFFFFF_t_70][]

canal server: deployed as a cluster, with node information stored in ZooKeeper, which provides high availability

canal client: connects through a static list of canal server addresses

```java
public class CanalConnectors {

    // Connect using a static list of canal server addresses
    public static CanalConnector newClusterConnector(List<? extends SocketAddress> addresses, String destination,
                                                     String username, String password) {
        ClusterCanalConnector canalConnector = new ClusterCanalConnector(username, password, destination,
                new SimpleNodeAccessStrategy(addresses));
        canalConnector.setSoTimeout(60000);       // 60000 ms
        canalConnector.setIdleTimeout(3600000);   // 3600000 ms
        return canalConnector;
    }

    // ... other factory methods omitted
}
```
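With a static server list, the client has to move on to another address when the current one fails. The class below is a hypothetical, simplified model of that idea: round-robin failover over a fixed list. It is not canal's `SimpleNodeAccessStrategy`, whose exact behavior may differ, and the second IP address used in the test is made up.

```java
import java.net.InetSocketAddress;
import java.net.SocketAddress;
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of failover over a static canal server list.
// Illustration only; this is not canal's SimpleNodeAccessStrategy.
final class StaticServerList {
    private final List<SocketAddress> nodes;
    private int index = 0;

    StaticServerList(List<? extends SocketAddress> nodes) {
        if (nodes == null || nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one canal server address is required");
        }
        this.nodes = List.copyOf(nodes);
    }

    // Convenience factory for "host:port" strings; addresses are left unresolved.
    static StaticServerList of(String... hostPorts) {
        List<SocketAddress> list = new ArrayList<>();
        for (String hp : hostPorts) {
            int colon = hp.lastIndexOf(':');
            list.add(InetSocketAddress.createUnresolved(
                    hp.substring(0, colon), Integer.parseInt(hp.substring(colon + 1))));
        }
        return new StaticServerList(list);
    }

    // Address the client should currently connect to.
    synchronized SocketAddress current() {
        return nodes.get(index);
    }

    // Called after a connection failure: advance to the next address, wrapping around.
    synchronized SocketAddress failover() {
        index = (index + 1) % nodes.size();
        return nodes.get(index);
    }
}
```

The list only ever grows stale: a server added to the cluster later is invisible to the client until its address list is changed. That limitation is what the ZooKeeper-based variant below addresses.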

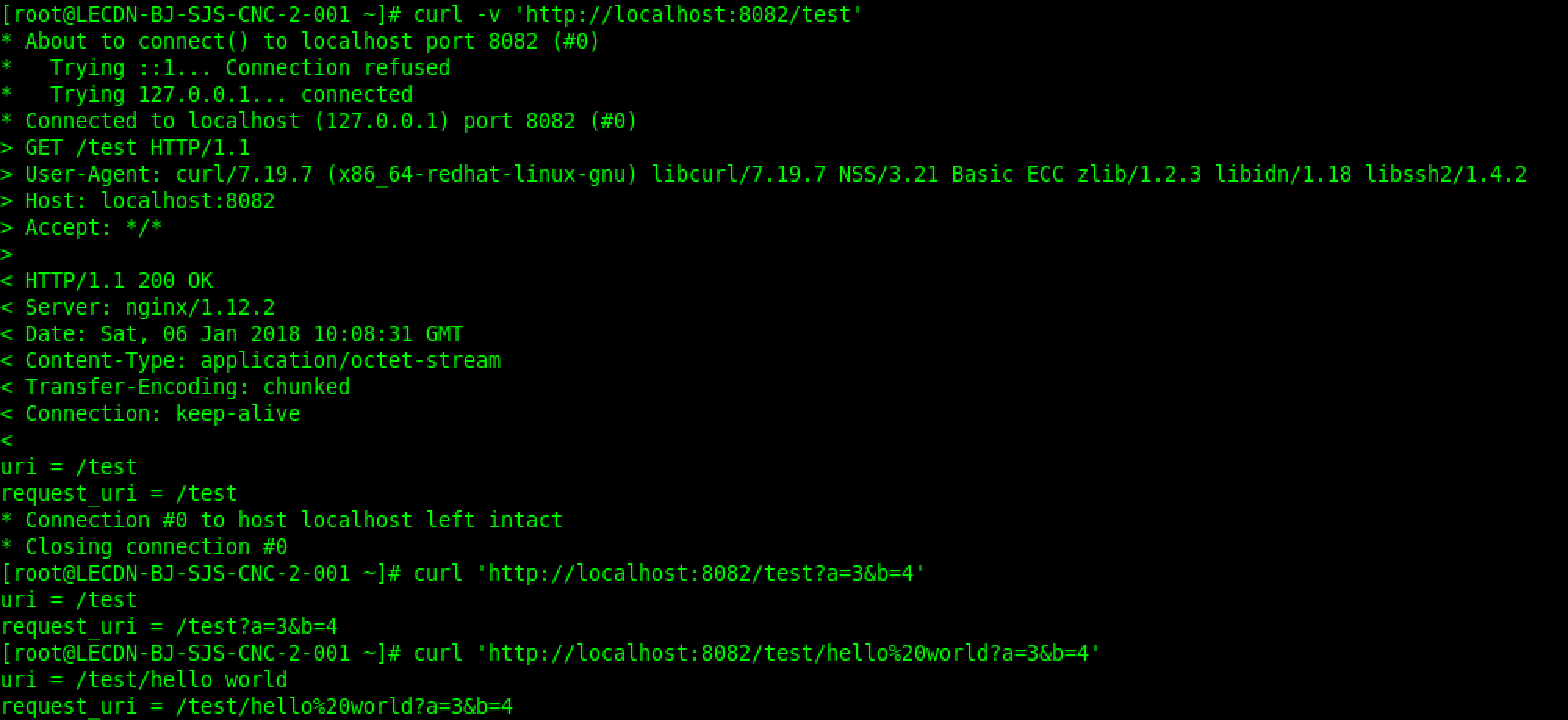

canal server and canal client HA: the canal servers are deployed as a cluster, and several canal clients are started

![20210625160946400.png][]

canal server: deployed as a cluster, with node information stored in ZooKeeper, which provides high availability

canal client: registers itself in ZooKeeper and obtains the canal server information from ZooKeeper

```java
public class CanalConnectors {

    // Discover the canal servers through ZooKeeper
    public static CanalConnector newClusterConnector(String zkServers, String destination,
                                                     String username, String password) {
        ClusterCanalConnector canalConnector = new ClusterCanalConnector(username, password, destination,
                new ClusterNodeAccessStrategy(destination, ZkClientx.getZkClient(zkServers)));
        canalConnector.setSoTimeout(60000);       // 60000 ms
        canalConnector.setIdleTimeout(3600000);   // 3600000 ms
        return canalConnector;
    }

    // ... other factory methods omitted
}
```
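In the ZooKeeper-based setup, canal HA is generally described as active/standby per destination. Several canal servers compete for a destination, one of them serves it, and clients look that server up in ZooKeeper. The sketch below models only the "first to create the node wins" election, using an in-memory `ConcurrentHashMap.putIfAbsent` in place of an ephemeral ZooKeeper node. The path layout and all class names are hypothetical; this is not canal's implementation.

```java
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// In-memory stand-in for ZooKeeper: key = node path, value = node data.
final class FakeRegistry {
    private final ConcurrentMap<String, String> nodes = new ConcurrentHashMap<>();

    // Like creating an ephemeral node: succeeds only if nobody holds the path yet.
    boolean tryCreate(String path, String data) {
        return nodes.putIfAbsent(path, data) == null;
    }

    // Like the ephemeral node vanishing when its owner's session ends.
    void delete(String path, String data) {
        nodes.remove(path, data);
    }

    Optional<String> read(String path) {
        return Optional.ofNullable(nodes.get(path));
    }
}

final class RunningServerElection {
    // Hypothetical path layout; check canal's real ZooKeeper layout for your version.
    static String runningPath(String destination) {
        return "/destinations/" + destination + "/running";
    }

    // A canal server tries to become the active server for a destination.
    static boolean tryBecomeActive(FakeRegistry zk, String destination, String serverAddress) {
        return zk.tryCreate(runningPath(destination), serverAddress);
    }

    // A canal client asks which server is currently active for the destination.
    static Optional<String> activeServer(FakeRegistry zk, String destination) {
        return zk.read(runningPath(destination));
    }
}
```

When the active server's session ends, its node disappears. A standby can then win the next `tryBecomeActive`, and clients re-reading the node follow it to the new server.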

*********************

canal server configuration

canal can load its configuration in two ways: ManagerCanalInstanceGenerator and SpringCanalInstanceGenerator

![watermark_type_ZmFuZ3poZW5naGVpdGk_shadow_10_text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzkzMTYyNQ_size_16_color_FFFFFF_t_70 1][]

ManagerCanalInstanceGenerator: canal parameters are configured through a web UI (canal admin)

SpringCanalInstanceGenerator: canal parameters are configured through local files (xxx-instance.xml, canal.properties, instance.properties)

*********************

xxx-instance.xml

These files are used to create the CanalInstanceWithSpring instance. The available files are:

memory-instance.xml: metadata is kept in memory

file-instance.xml: metadata is persisted to files; the log parser position is looked up in memory first and, if not found there, in the file

default-instance.xml: metadata is stored in ZooKeeper; the log parser position is looked up in memory first and, if not found there, in ZooKeeper

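group-instance.xml: combines several parsers into one. This is useful for collecting data from sharded databases and tables into one place for storage and analysis. Metadata is kept in memory by default.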
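The "memory first, then file/ZooKeeper" lookup described for file-instance.xml and default-instance.xml is a two-level read-through cache. Here is a minimal sketch, assuming a hypothetical `PositionStore` interface that stands in for the file or ZooKeeper backend. The class names are illustrative, not canal's.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical persistent backend (a file or ZooKeeper in canal's case).
interface PositionStore {
    Optional<String> load(String destination);
}

final class CachedPositionLookup {
    private final Map<String, String> memory = new HashMap<>();
    private final PositionStore persistent;

    CachedPositionLookup(PositionStore persistent) {
        this.persistent = persistent;
    }

    // Look in memory first; on a miss, fall back to the persistent store and cache what it returns.
    Optional<String> find(String destination) {
        String cached = memory.get(destination);
        if (cached != null) {
            return Optional.of(cached);
        }
        Optional<String> loaded = persistent.load(destination);
        loaded.ifPresent(position -> memory.put(destination, position));
        return loaded;
    }

    // Record a new position in memory (writing it back to the persistent store is not modeled here).
    void update(String destination, String position) {
        memory.put(destination, position);
    }
}
```

Only the first lookup for a destination reaches the backend. memory-instance.xml corresponds to having no persistent store at all, so a restart loses the position.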

```java
public class CanalInstanceWithSpring extends AbstractCanalInstance {

    private static final Logger logger = LoggerFactory.getLogger(CanalInstanceWithSpring.class);

    public CanalInstanceWithSpring() {
    }

    // ...
}

// ************** AbstractCanalInstance

public class AbstractCanalInstance extends AbstractCanalLifeCycle implements CanalInstance {

    private static final Logger logger = LoggerFactory.getLogger(AbstractCanalInstance.class);

    protected Long canalId;                            // canal ID
    protected String destination;                      // instance name; one canal server can host several instances
    protected CanalEventStore<Event> eventStore;       // stores the pulled data
    protected CanalEventParser eventParser;            // parses the data source
    protected CanalEventSink<List<Entry>> eventSink;   // processes and transforms the parsed data
    protected CanalMetaManager metaManager;            // metadata manager: parser log position, cursor position, etc.
    protected CanalAlarmHandler alarmHandler;          // alarm handler
    protected CanalMQConfig mqConfig;                  // MQ config; supports RocketMQ, Kafka, RabbitMQ

    public AbstractCanalInstance() {
    }

    // ...
}
```

*********************

properties files

canal.properties: common properties shared by all instances on a canal server

instance.properties: properties of a single instance; if the same key is set in both files, the value in instance.properties takes precedence
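The override rule can be modeled with `java.util.Properties` defaults: load canal.properties first, then load instance.properties into a `Properties` object that uses the first one as its defaults. This is a sketch of the precedence rule only, not how canal itself loads its files. It also demonstrates that `Properties.load` treats `#` as a comment only at the start of a line, so a trailing `# ...` after a value becomes part of that value.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class LayeredConfig {
    // Loads instance.properties on top of canal.properties.
    static Properties load(String canalProperties, String instanceProperties) {
        try {
            Properties common = new Properties();
            common.load(new StringReader(canalProperties));
            // 'common' becomes the defaults: getProperty() falls back to it for keys
            // missing from the instance file (plain get() does not consult defaults).
            Properties instance = new Properties(common);
            instance.load(new StringReader(instanceProperties));
            return instance;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Because of the trailing-comment pitfall, keep explanatory comments on their own lines in real canal.properties and instance.properties files.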

canal.properties

```properties
#################################################
#########       common argument       ###########
#################################################
# unique ID of this canal server, default 1
canal.id = 1
# canal server user name and password; if canal.user/canal.passwd are not set, password authentication is disabled
canal.user = canal
canal.passwd = E3619321C1A937C46A0D8BD1DAC39F93B27D4458
# IP address the canal server binds to
canal.ip =
# TCP port for client connections, default 11111
canal.port = 11111
# metrics port, default 11112
canal.metrics.pull.port = 11112
# IP the canal server registers in ZooKeeper
canal.register.ip =
# ZooKeeper cluster used by the canal server, e.g. 10.20.144.22:2181,10.20.144.51:2181
canal.zkServers =
# interval for flushing data to ZooKeeper, default 1000 ms
canal.zookeeper.flush.period = 1000
# canal admin settings
canal.admin.manager = 127.0.0.1:8089
canal.admin.port = 11110
canal.admin.user = admin
canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441
# automatic registration with canal admin
#canal.admin.register.auto = true
#canal.admin.register.cluster =
#canal.admin.register.name =
canal.withoutNetty = false
# server mode; options: tcp, kafka, rocketMQ, rabbitMQ
canal.serverMode = tcp
# flush meta cursor/parse position to file
canal.file.data.dir = ${canal.conf.dir}
canal.file.flush.period = 1000
# eventStore memory settings
# ITEMSIZE: buffer.size is a number of records
# MEMSIZE (default): the store is limited to buffer.size * buffer.memunit bytes
canal.instance.memory.batch.mode = MEMSIZE
# number of records (ITEMSIZE) or number of memory units (MEMSIZE)
canal.instance.memory.buffer.size = 16384
# size of one memory unit in bytes, default 1024 (1 KB)
canal.instance.memory.buffer.memunit = 1024
# store raw entries without serializing them
canal.instance.memory.rawEntry = true
## heartbeat check of MySQL availability
# enable the heartbeat check, default false
canal.instance.detecting.enable = false
# heartbeat SQL
#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()
# heartbeat interval in seconds, default 3
canal.instance.detecting.interval.time = 3
# heartbeat retry count, default 3
canal.instance.detecting.retry.threshold = 3
# switch to the standby database automatically when MySQL is unreachable, default false
canal.instance.detecting.heartbeatHaEnable = false
# support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery
# a transaction larger than this may be split when stored in the eventStore, so its atomic visibility is not guaranteed
canal.instance.transaction.size = 1024
# mysql fallback connected to new master should fallback times
# after switching to a new MySQL, look back this many seconds in the binlog (default 60 s);
# primary/replica replication lags, so looking back prevents data loss
canal.instance.fallbackIntervalInSeconds = 60
# network settings
# receive buffer size (data parsed from MySQL)
canal.instance.network.receiveBufferSize = 16384
# send buffer size (data sent to the canal client)
canal.instance.network.sendBufferSize = 16384
# read timeout in seconds, default 30
canal.instance.network.soTimeout = 30
# binlog filter config
# use druid to parse DDL statements to obtain the database and table names
canal.instance.filter.druid.ddl = true
# ignore DCL statements (grant, commit, rollback)
canal.instance.filter.query.dcl = false
# ignore DML statements (insert, delete, update, etc.)
canal.instance.filter.query.dml = false
# ignore DDL statements (create table, create view, etc.)
canal.instance.filter.query.ddl = false
# ignore errors when fetching the table structure for a binlog event; mainly for replaying old binlog
# when the table has since been dropped or its structure no longer matches the binlog
canal.instance.filter.table.error = false
# ignore row changes caused by DML, default false; mainly for users who subscribe only to DDL/DCL
canal.instance.filter.rows = false
# ignore transaction begin/end entries, default false
canal.instance.filter.transaction.entry = false
# ignore DML insert / update / delete, default false
canal.instance.filter.dml.insert = false
canal.instance.filter.dml.update = false
canal.instance.filter.dml.delete = false
# binlog format/image check
# accepted binlog formats, default ROW,STATEMENT,MIXED
canal.instance.binlog.format = ROW,STATEMENT,MIXED
# accepted binlog row images, default FULL,MINIMAL,NOBLOB
canal.instance.binlog.image = FULL,MINIMAL,NOBLOB
# binlog ddl isolation
# return DDL statements in a separate batch, default false; if a DDL shares a batch with other DDL/DML,
# their order is not guaranteed under concurrent processing and the table structure may change mid-batch
canal.instance.get.ddl.isolation = false
# parallel parsing
# parse the binlog in parallel in the eventParser, default true
canal.instance.parser.parallel = true
# number of parallel threads; if unset, 60% of the available processors
canal.instance.parser.parallelThreadSize = 16
# ring buffer size for parallel parsing; must be a power of 2
canal.instance.parser.parallelBufferSize = 256
# table meta tsdb info
# tableMetaTSDB tracks table-structure changes caused by DDL statements
canal.instance.tsdb.enable = true
# global tsdb Spring config; keep exactly one line active (a repeated key overrides the earlier one)
canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml
#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml
# directory of the H2 file when h2-tsdb.xml is used, default conf/xx/h2.mv.db (xx = destination)
canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}
# JDBC URL; the H2 URL is the default, define your own when using MySQL
canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;
# user name and password
canal.instance.tsdb.dbUsername = canal
canal.instance.tsdb.dbPassword = canal
# snapshot interval, default 24 hours
canal.instance.tsdb.snapshot.interval = 24
# snapshot expiry, default 360 hours (15 days)
canal.instance.tsdb.snapshot.expire = 360
#################################################
#########         destinations        ###########
#################################################
# instances deployed on this server
canal.destinations = example
# conf directory
canal.conf.dir = ../conf
# auto scan instance dir add/remove and start/stop instance
# default true; a new instance directory is loaded automatically (and started when lazy=true);
# when an instance directory is removed, its config is unloaded and the instance is stopped if running
canal.auto.scan = true
# scan interval in seconds, default 5
canal.auto.scan.interval = 5
# set this value to 'true' means that when binlog pos not found, skip to latest.
# WARN: pls keep 'false' in production env, or if you know what you want.
canal.auto.reset.latest.pos.mode = false
# global config loading mode, default spring (local files)
canal.instance.global.mode = spring
# global lazy mode, default false
canal.instance.global.lazy = false
# global manager address, used when the canal server is configured through canal admin
canal.instance.global.manager.address = ${canal.admin.manager}
# global Spring XML used to create CanalInstanceWithSpring; keep exactly one line active
#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml
canal.instance.global.spring.xml = classpath:spring/file-instance.xml
#canal.instance.global.spring.xml = classpath:spring/default-instance.xml
#################################################
#########         MQ Properties       ###########
#################################################
# aliyun ak/sk , support rds/mq
canal.aliyun.accessKey =
canal.aliyun.secretKey =
canal.aliyun.uid=
canal.mq.flatMessage = true
canal.mq.canalBatchSize = 50
canal.mq.canalGetTimeout = 100
# Set this value to "cloud", if you want open message trace feature in aliyun.
canal.mq.accessChannel = local
canal.mq.database.hash = true
canal.mq.send.thread.size = 30
canal.mq.build.thread.size = 8
#################################################
#########             Kafka           ###########
#################################################
kafka.bootstrap.servers = 127.0.0.1:9092
kafka.acks = all
kafka.compression.type = none
kafka.batch.size = 16384
kafka.linger.ms = 1
kafka.max.request.size = 1048576
kafka.buffer.memory = 33554432
kafka.max.in.flight.requests.per.connection = 1
kafka.retries = 0
kafka.kerberos.enable = false
kafka.kerberos.krb5.file = "../conf/kerberos/krb5.conf"
kafka.kerberos.jaas.file = "../conf/kerberos/jaas.conf"
#################################################
#########           RocketMQ          ###########
#################################################
rocketmq.producer.group = test
rocketmq.enable.message.trace = false
rocketmq.customized.trace.topic =
rocketmq.namespace =
rocketmq.namesrv.addr = 127.0.0.1:9876
rocketmq.retry.times.when.send.failed = 0
rocketmq.vip.channel.enabled = false
rocketmq.tag =
#################################################
#########           RabbitMQ          ###########
#################################################
rabbitmq.host =
rabbitmq.virtual.host =
rabbitmq.exchange =
rabbitmq.username =
rabbitmq.password =
rabbitmq.deliveryMode =
```
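Two of the sizing rules in the comments above are plain arithmetic: the eventStore limit per batch mode, and the power-of-two requirement on `canal.instance.parser.parallelBufferSize`. The small sketch below spells both out. It is not canal code; the class and method names are made up for illustration.

```java
// Illustrative helper mirroring the eventStore and parallel-parsing comments; not canal code.
final class StoreSizing {
    enum BatchMode { MEMSIZE, ITEMSIZE }

    // MEMSIZE: capacity in bytes = buffer.size * buffer.memunit.
    // ITEMSIZE: capacity is buffer.size records; memunit is not used.
    static long capacity(BatchMode mode, long bufferSize, long memUnit) {
        return mode == BatchMode.MEMSIZE ? bufferSize * memUnit : bufferSize;
    }

    // parallelBufferSize must be a power of two: exactly one bit set.
    static boolean isPowerOfTwo(long n) {
        return n > 0 && (n & (n - 1)) == 0;
    }
}
```

With the values in the listing, MEMSIZE gives 16384 × 1024 = 16,777,216 bytes, i.e. a 16 MB store, while ITEMSIZE with the same `buffer.size` would allow 16384 records regardless of their size.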

instance.properties

```properties
#################################################
# server ID canal uses when it connects to MySQL as a replica; must be unique within the MySQL cluster
canal.instance.mysql.slaveId=0
# use MySQL GTID subscription mode
canal.instance.gtidon=false
# master binlog position the eventParser starts from
# master address
canal.instance.master.address=127.0.0.1:3306
# binlog file to start from
canal.instance.master.journal.name=
# binlog offset to start from
canal.instance.master.position=
# binlog timestamp to start from
canal.instance.master.timestamp=
# GTID position to start from
canal.instance.master.gtid=
# standby MySQL
canal.instance.standby.address =
canal.instance.standby.journal.name =
canal.instance.standby.position =
canal.instance.standby.timestamp =
canal.instance.standby.gtid=
# rds oss binlog
# Aliyun RDS cleans up local binlogs after 18 hours and uploads them to OSS;
# leave these empty if you do not need to download binlogs from OSS
# Aliyun account accessKey
canal.instance.rds.accesskey=
# Aliyun account secretKey
canal.instance.rds.secretkey=
# Aliyun RDS instanceId
canal.instance.rds.instanceId=
# table meta tsdb info; overrides the values in canal.properties
canal.instance.tsdb.enable=true
canal.instance.tsdb.url=jdbc:mysql://127.0.0.1:3306/canal_tsdb
canal.instance.tsdb.dbUsername=canal
canal.instance.tsdb.dbPassword=canal
# MySQL user name, password and connection charset
canal.instance.dbUsername=canal
canal.instance.dbPassword=canal
canal.instance.connectionCharset = UTF-8
# enable druid Decrypt database password
canal.instance.enableDruid=false
#canal.instance.pwdPublicKey=MFwwDQYJKoZIhvcNAQEBBQADSwAwSAJBALK4BUxdDltRRE5/zXpVEVPUgunvscYFtEip3pmLlhrWpacX7y7GCMo2/JM6LeHmiiNdH1FWgGCpUfircSwlWKUCAwEAAQ==
# tables to parse, default .*\\..* (all tables)
canal.instance.filter.regex=.*\\..*
# tables not to parse
canal.instance.filter.black.regex=mysql\\.slave_.*
# examples:
#   .*\\..*                          all tables
#   database\\..*                    all tables in database
#   database\\.test.*                tables in database whose names start with test
#   database\\.test                  table test in database
#   database\\.test.*,database\\.t2  tables in database starting with test, plus database.t2
# fields to parse
# table field filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
canal.instance.filter.field=test1.t_product:id/subject/keywords,test2.t_company:id/name/contact/ch
# table field black filter(format: schema1.tableName1:field1/field2,schema2.tableName2:field1/field2)
canal.instance.filter.black.field=test1.t_product:subject/product_image,test2.t_company:id/name/contact/ch
# MQ settings
# static topic: all data is sent to this fixed topic
canal.mq.topic=example
# dynamic topic, chosen by database and table name
# each comma-separated entry is schema, schema.tableName, topicName:schema or topicName:schema.tableName;
# with a topicName, matching data goes to that topic
canal.mq.dynamicTopic=mytest1.user,mytest2\\..*,.*\\..*
# examples:
#   mytest1.user          -> topic mytest1_user
#   mytest2\\..*          -> topic mytest2_<tableName>
#   test                  -> all data in database test goes to topic test
#   topicName:test\\..*   -> all tables in test go to topic topicName
#   test,test1\\..*       -> tables in test1 go to test1_<tableName>, tables in test go to topic test,
#                            everything else goes to the topic set in canal.mq.topic
# partition settings
# send to fixed partition 0
canal.mq.partition=0
# hash partition config
# number of partitions
canal.mq.partitionsNum=3
# hash rules that choose the partition by database and table name
canal.mq.partitionHash=test.table:id^name,.*\\..*
# per-topic partition count: topics starting with test get 4 partitions, topic mycanal gets 6
canal.mq.dynamicTopicPartitionNum=test.*:4,mycanal:6
# partitionHash examples:
#   test.table                 no field given: hash by table name
#   test.table:id              hash test.table by id
#   test.table:id^name         hash test.table by id and name
#   .*\\..*:id                 hash every table by id
#   .*\\..*:$pk$               hash every table by its primary key (detected automatically)
#   (empty partitionHash)      everything goes to partition 0
#   test.table,test.table2:id  test.table hashed by table name, test.table2 by id,
#                              everything else goes to partition 0 of its topic
```
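To make the table filter and `dynamicTopic` rules concrete, here is a simplified, hypothetical model. Each filter entry is treated as a regex that must match the whole `schema.table` string (the instance log later in this post shows canal anchoring the filter as `^.*\..*$`). Each `dynamicTopic` entry is routed as in the examples above, and an entry without a `.` is read as a schema-only rule. Case sensitivity, schema regexes containing `.`, and canal's exact matching order are ignored, so treat this as an illustration, not canal's code. Conveniently, the Java string literals in the test look exactly like the escaped values in the properties file.

```java
import java.util.regex.Pattern;

// Simplified model of canal.instance.filter.* and canal.mq.dynamicTopic; not canal's implementation.
final class CanalRouting {
    // True if schema.table matches some regex in 'filter' and none in 'blackFilter'.
    static boolean accepted(String schema, String table, String filter, String blackFilter) {
        String name = schema + "." + table;
        return matchesAny(name, filter) && !matchesAny(name, blackFilter);
    }

    private static boolean matchesAny(String name, String regexList) {
        if (regexList == null || regexList.isBlank()) {
            return false;
        }
        for (String regex : regexList.split(",")) {
            if (Pattern.matches(regex.trim(), name)) { // whole-string match, like ^...$
                return true;
            }
        }
        return false;
    }

    // Topic for schema.table according to a dynamicTopic list, else the default topic.
    static String topicFor(String schema, String table, String dynamicTopic, String defaultTopic) {
        if (dynamicTopic == null) {
            return defaultTopic;
        }
        for (String entry : dynamicTopic.split(",")) {
            String rule = entry.trim();
            if (rule.isEmpty()) {
                continue;
            }
            String topic = null;
            int colon = rule.indexOf(':');
            if (colon >= 0) { // topicName:expression
                topic = rule.substring(0, colon);
                rule = rule.substring(colon + 1);
            }
            if (!rule.contains(".")) { // schema-only rule
                if (Pattern.matches(rule, schema)) {
                    return topic != null ? topic : schema;
                }
            } else if (Pattern.matches(rule, schema + "." + table)) { // schema.table rule
                return topic != null ? topic : schema + "_" + table;
            }
        }
        return defaultTopic;
    }
}
```

The test reproduces the `test,test1\\..*` example from the comments: tables in `test1` go to `test1_<table>`, `test` goes to topic `test`, and everything else falls back to `canal.mq.topic`.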

*********************

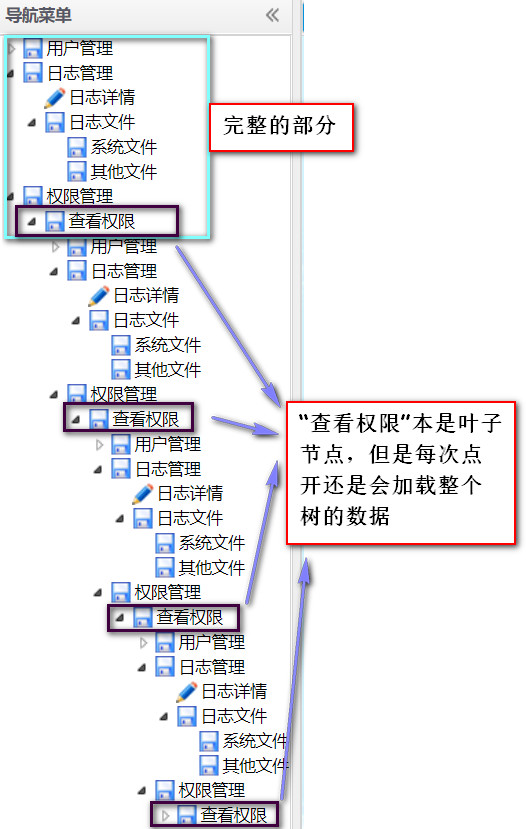

tsdb configuration

![20210627161332846.png][]

SQL: create the tables that store the tsdb data

```sql
CREATE TABLE IF NOT EXISTS `meta_snapshot` (
  `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',
  `gmt_create` datetime NOT NULL COMMENT 'creation time',
  `gmt_modified` datetime NOT NULL COMMENT 'modification time',
  `destination` varchar(128) DEFAULT NULL COMMENT 'destination (instance) name',
  `binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog file name',
  `binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog offset',
  `binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlog node id',
  `binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'binlog apply timestamp',
  `data` longtext DEFAULT NULL COMMENT 'table structure data',
  `extra` text DEFAULT NULL COMMENT 'extra information',
  PRIMARY KEY (`id`),
  UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),
  KEY `destination` (`destination`),
  KEY `destination_timestamp` (`destination`,`binlog_timestamp`),
  KEY `gmt_modified` (`gmt_modified`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COMMENT='table structure snapshots';

CREATE TABLE IF NOT EXISTS `meta_history` (
  `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',
  `gmt_create` datetime NOT NULL COMMENT 'creation time',
  `gmt_modified` datetime NOT NULL COMMENT 'modification time',
  `destination` varchar(128) DEFAULT NULL COMMENT 'destination (instance) name',
  `binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog file name',
  `binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog offset',
  `binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlog node id',
  `binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'binlog apply timestamp',
  `use_schema` varchar(1024) DEFAULT NULL COMMENT 'schema in use when the SQL ran',
  `sql_schema` varchar(1024) DEFAULT NULL COMMENT 'target schema',
  `sql_table` varchar(1024) DEFAULT NULL COMMENT 'target table',
  `sql_text` longtext DEFAULT NULL COMMENT 'executed SQL',
  `sql_type` varchar(256) DEFAULT NULL COMMENT 'SQL type',
  `extra` text DEFAULT NULL COMMENT 'extra information',
  PRIMARY KEY (`id`),
  UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),
  KEY `destination` (`destination`),
  KEY `destination_timestamp` (`destination`,`binlog_timestamp`),
  KEY `gmt_modified` (`gmt_modified`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COMMENT='table structure change history';
```
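The two tables appear to work as a pair. `meta_snapshot` periodically stores the full table structure (every `canal.instance.tsdb.snapshot.interval` hours), and `meta_history` records each DDL with its binlog position. My understanding, stated here as an assumption, is that the structure at a given binlog point is rebuilt from the latest snapshot not after that point, with the later DDL replayed up to it. The sketch below models only that idea in memory (Java 16+ for records). "Applying" a DDL is reduced to replacing the table's definition, and all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// In-memory model of snapshot + history replay; not canal's DatabaseTableMeta.
final class TableMetaRebuild {
    // One meta_snapshot row: timestamp plus the full table -> definition map.
    record Snapshot(long binlogTimestamp, Map<String, String> tables) {}

    // One meta_history row: timestamp, affected table, DDL text.
    record History(long binlogTimestamp, String table, String sqlText) {}

    // Definitions as of 'at': start from the latest snapshot <= at, then replay history in (snapshot, at].
    static Map<String, String> rebuild(List<Snapshot> snapshots, List<History> history, long at) {
        Snapshot base = null;
        for (Snapshot s : snapshots) {
            if (s.binlogTimestamp() <= at && (base == null || s.binlogTimestamp() > base.binlogTimestamp())) {
                base = s;
            }
        }
        long from = base == null ? Long.MIN_VALUE : base.binlogTimestamp();
        Map<String, String> tables = new HashMap<>(base == null ? Map.of() : base.tables());

        List<History> replay = new ArrayList<>();
        for (History h : history) {
            if (h.binlogTimestamp() > from && h.binlogTimestamp() <= at) {
                replay.add(h);
            }
        }
        replay.sort(Comparator.comparingLong(History::binlogTimestamp));
        for (History h : replay) {
            tables.put(h.table(), h.sqlText()); // simplification: the latest DDL defines the table
        }
        return tables;
    }
}
```

Under this model, snapshots bound how much history must be replayed, and `snapshot.expire` bounds how far back a rebuild can start.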

h2-tsdb.xml: stores the tsdb data in H2

```xml
<?xml version="1.0" encoding="UTF-8"?>
<beans xmlns="http://www.springframework.org/schema/beans"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:tx="http://www.springframework.org/schema/tx"
       xmlns:aop="http://www.springframework.org/schema/aop"
       xmlns:lang="http://www.springframework.org/schema/lang"
       xmlns:context="http://www.springframework.org/schema/context"
       xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-2.0.xsd
           http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-2.0.xsd
           http://www.springframework.org/schema/lang http://www.springframework.org/schema/lang/spring-lang-2.0.xsd
           http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-2.0.xsd
           http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-2.5.xsd"
       default-autowire="byName">

    <!-- properties -->
    <bean class="com.alibaba.otter.canal.instance.spring.support.PropertyPlaceholderConfigurer" lazy-init="false">
        <property name="ignoreResourceNotFound" value="true" />
        <property name="systemPropertiesModeName" value="SYSTEM_PROPERTIES_MODE_OVERRIDE"/><!-- allow system properties to override -->
        <property name="locationNames">
            <list>
                <value>classpath:canal.properties</value>
                <value>classpath:${canal.instance.destination:}/instance.properties</value>
            </list>
        </property>
    </bean>

    <!-- database-based implementation -->
    <bean id="tableMetaTSDB" class="com.alibaba.otter.canal.parse.inbound.mysql.tsdb.DatabaseTableMeta" destroy-method="destory">
        <property name="metaHistoryDAO" ref="metaHistoryDAO"/>
        <property name="metaSnapshotDAO" ref="metaSnapshotDAO"/>
    </bean>

    <bean id="dataSource" class="com.alibaba.druid.pool.DruidDataSource" destroy-method="close">
        <property name="driverClassName" value="org.h2.Driver" />
        <property name="url" value="${canal.instance.tsdb.url:}" />
        <property name="username" value="${canal.instance.tsdb.dbUsername:}" />
        <property name="password" value="${canal.instance.tsdb.dbPassword:}" />
        <property name="maxActive" value="30" />
        <property name="initialSize" value="0" />
        <property name="minIdle" value="1" />
        <property name="maxWait" value="10000" />
        <property name="timeBetweenEvictionRunsMillis" value="60000" />
        <property name="minEvictableIdleTimeMillis" value="300000" />
        <property name="testWhileIdle" value="true" />
        <property name="testOnBorrow" value="false" />
        <property name="testOnReturn" value="false" />
        <property name="useUnfairLock" value="true" />
        <property name="validationQuery" value="SELECT 1" />
    </bean>

    <bean id="sqlSessionFactory" class="org.mybatis.spring.SqlSessionFactoryBean">
        <property name="dataSource" ref="dataSource"/>
        <property name="configLocation" value="classpath:spring/tsdb/sql-map/sqlmap-config.xml"/>
    </bean>

    <bean id="metaHistoryDAO" class="com.alibaba.otter.canal.parse.inbound.mysql.tsdb.dao.MetaHistoryDAO">
        <property name="sqlSessionFactory" ref="sqlSessionFactory"/>
    </bean>

    <bean id="metaSnapshotDAO" class="com.alibaba.otter.canal.parse.inbound.mysql.tsdb.dao.MetaSnapshotDAO">
        <property name="sqlSessionFactory" ref="sqlSessionFactory"/>
    </bean>
</beans>
```

mysql-tsdb.xml: stores the tsdb data in MySQL

```xml
<?xml version="1.0" encoding="UTF-8"?>
<beans xmlns="http://www.springframework.org/schema/beans"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:tx="http://www.springframework.org/schema/tx"
       xmlns:aop="http://www.springframework.org/schema/aop"
       xmlns:lang="http://www.springframework.org/schema/lang"
       xmlns:context="http://www.springframework.org/schema/context"
       xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-2.0.xsd
           http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-2.0.xsd
           http://www.springframework.org/schema/lang http://www.springframework.org/schema/lang/spring-lang-2.0.xsd
           http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-2.0.xsd
           http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-2.5.xsd"
       default-autowire="byName">

    <!-- properties -->
    <bean class="com.alibaba.otter.canal.instance.spring.support.PropertyPlaceholderConfigurer" lazy-init="false">
        <property name="ignoreResourceNotFound" value="true" />
        <property name="systemPropertiesModeName" value="SYSTEM_PROPERTIES_MODE_OVERRIDE"/><!-- allow system properties to override -->
        <property name="locationNames">
            <list>
                <value>classpath:canal.properties</value>
                <value>classpath:${canal.instance.destination:}/instance.properties</value>
            </list>
        </property>
    </bean>

    <!-- database-based implementation -->
    <bean id="tableMetaTSDB" class="com.alibaba.otter.canal.parse.inbound.mysql.tsdb.DatabaseTableMeta" destroy-method="destory">
        <property name="metaHistoryDAO" ref="metaHistoryDAO"/>
        <property name="metaSnapshotDAO" ref="metaSnapshotDAO"/>
    </bean>

    <bean id="dataSource" class="com.alibaba.druid.pool.DruidDataSource" destroy-method="close">
        <property name="driverClassName" value="com.mysql.jdbc.Driver" />
        <property name="url" value="${canal.instance.tsdb.url:}" />
        <property name="username" value="${canal.instance.tsdb.dbUsername:}" />
        <property name="password" value="${canal.instance.tsdb.dbPassword:}" />
        <property name="maxActive" value="30" />
        <property name="initialSize" value="0" />
        <property name="minIdle" value="1" />
        <property name="maxWait" value="10000" />
        <property name="timeBetweenEvictionRunsMillis" value="60000" />
        <property name="minEvictableIdleTimeMillis" value="300000" />
        <property name="validationQuery" value="SELECT 1" />
        <property name="exceptionSorterClassName" value="com.alibaba.druid.pool.vendor.MySqlExceptionSorter" />
        <property name="validConnectionCheckerClassName" value="com.alibaba.druid.pool.vendor.MySqlValidConnectionChecker" />
        <property name="testWhileIdle" value="true" />
        <property name="testOnBorrow" value="false" />
        <property name="testOnReturn" value="false" />
        <property name="useUnfairLock" value="true" />
    </bean>

    <bean id="sqlSessionFactory" class="org.mybatis.spring.SqlSessionFactoryBean">
        <property name="dataSource" ref="dataSource"/>
        <property name="configLocation" value="classpath:spring/tsdb/sql-map/sqlmap-config.xml"/>
    </bean>

    <bean id="metaHistoryDAO" class="com.alibaba.otter.canal.parse.inbound.mysql.tsdb.dao.MetaHistoryDAO">
        <property name="sqlSessionFactory" ref="sqlSessionFactory"/>
    </bean>

    <bean id="metaSnapshotDAO" class="com.alibaba.otter.canal.parse.inbound.mysql.tsdb.dao.MetaSnapshotDAO">
        <property name="sqlSessionFactory" ref="sqlSessionFactory"/>
    </bean>
</beans>
```
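Both XML files read their settings through placeholders such as `${canal.instance.tsdb.url:}`, where the text after the colon is the default used when the key is missing (empty here). The same syntax appears in `canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}`. The following minimal resolver for `${key}` and `${key:default}` is written from scratch to illustrate the behavior. It handles neither nested placeholders nor escaping, and it is not Spring's implementation.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Minimal ${key} / ${key:default} resolver for illustration; not Spring's PropertyPlaceholderConfigurer.
final class Placeholders {
    // group 1 = key (no ':' or '}'), group 2 = default after ':' (absent when there is no colon)
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}:]+)(?::([^}]*))?\\}");

    static String resolve(String text, Map<String, String> props) {
        Matcher m = PLACEHOLDER.matcher(text);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String key = m.group(1);
            String fallback = m.group(2);
            String value = props.get(key);
            if (value == null) {
                if (fallback == null) {
                    throw new IllegalArgumentException("unresolved placeholder: " + key);
                }
                value = fallback; // an empty default like ${key:} yields ""
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }
}
```

With only `canal.instance.destination=example` defined, the tsdb directory above resolves to `../conf/example`. With nothing defined, `${canal.instance.tsdb.url:}` resolves to an empty string rather than failing.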

*********************

canal server: standalone

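Create MySQL. The `--net fixed` and `--ip` options used throughout this post assume that a user-defined Docker network named `fixed`, covering 172.18.0.0/16, already exists (for example, created with `docker network create --subnet=172.18.0.0/16 fixed`); Docker accepts a user-specified `--ip` only on user-defined networks with a configured subnet.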

```text
[root@centos ~]# docker run -it -d --net fixed --ip 172.18.0.2 \
> -p 3306:3306 --privileged=true \
> --name mysql -e MYSQL_ROOT_PASSWORD=123456 mysql

# binlog is enabled by default in MySQL 8
mysql> show variables like "log_bin";
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| log_bin       | ON    |
+---------------+-------+
1 row in set (0.00 sec)

# binlog_format defaults to ROW in MySQL 8
mysql> show variables like "binlog_format";
+---------------+-------+
| Variable_name | Value |
+---------------+-------+
| binlog_format | ROW   |
+---------------+-------+
1 row in set (0.01 sec)
```

Create a user and grant it privileges for the canal server to connect with

```text
mysql> create user canal identified with mysql_native_password by "123456";
Query OK, 0 rows affected (0.11 sec)

mysql> GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';
Query OK, 0 rows affected (0.01 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)
```

Create the canal server

```shell
docker run -it -d --net fixed --ip 172.18.0.3 \
    -p 11111:11111 --name canal-server \
    -e canal.instance.master.address=172.18.0.2:3306 \
    -e canal.instance.dbUsername=canal \
    -e canal.instance.dbPassword=123456 canal/canal-server
```

Check the canal server log

```text
[root@centos ~]# docker logs canal-server
DOCKER_DEPLOY_TYPE=VM
==> INIT /alidata/init/02init-sshd.sh
==> EXIT CODE: 0
==> INIT /alidata/init/fix-hosts.py
==> EXIT CODE: 0
==> INIT DEFAULT
Generating SSH1 RSA host key: [ OK ]
Starting sshd: [ OK ]
Starting crond: [ OK ]
==> INIT DONE
==> RUN /home/admin/app.sh
==> START ...
start canal ...
start canal successful
```

Check the instance log

```text
[root@centos ~]# docker exec -it canal-server bash
[root@55e591394ef4 admin]# cd canal-server/logs/example
[root@55e591394ef4 example]# ls
example.log
[root@55e591394ef4 example]# cat example.log
2021-06-29 01:03:54.889 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example
2021-06-29 01:03:54.954 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table filter : ^.*\..*$
2021-06-29 01:03:54.954 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table black filter : ^mysql\.slave_.*$
2021-06-29 01:03:55.025 [main] INFO c.a.otter.canal.instance.core.AbstractCanalInstance - start successful....
2021-06-29 01:03:55.378 [destination = example , address = /172.18.0.2:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> begin to find start position, it will be long time for reset or first position
2021-06-29 01:03:55.379 [destination = example , address = /172.18.0.2:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - prepare to find start position just show master status
2021-06-29 01:03:57.678 [destination = example , address = /172.18.0.2:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> find start position successfully, EntryPosition[included=false,journalName=binlog.000002,position=4,serverId=1,gtid=<null>,timestamp=1624899604000] cost : 2206ms , the next step is binlog dump
```

Result: the instance has connected to MySQL and started parsing the data source (binlog).
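With several instances running, it can help to extract the start position from the instance logs programmatically, for example to compare it with `show master status` on the MySQL side. Here is a small sketch that pulls `journalName` and `position` out of an `EntryPosition[...]` line like the ones above. The regex is written against these sample lines only and may need adjusting for other canal versions (Java 16+ for the record).

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Extracts the binlog start position from a canal instance log line (format taken from the sample above).
final class StartPositionParser {
    record BinlogPosition(String journalName, long position) {}

    private static final Pattern ENTRY =
            Pattern.compile("EntryPosition\\[.*?journalName=([^,\\]]+),position=(\\d+)");

    // Returns the binlog file and offset from a "find start position successfully" line, if present.
    static Optional<BinlogPosition> parse(String logLine) {
        Matcher m = ENTRY.matcher(logLine);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new BinlogPosition(m.group(1), Long.parseLong(m.group(2))));
    }
}
```

Lines that do not contain an `EntryPosition[...]` section, such as `start canal successful`, simply yield an empty result.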

*********************

canal server: standalone with multiple instances

Edit canal.properties

canal.destinations = example,example2

Edit example/instance.properties

```properties
canal.instance.master.address=172.18.0.11:3306
canal.instance.dbUsername=canal
canal.instance.dbPassword=123456
```

Edit example2/instance.properties

```properties
canal.instance.master.address=172.18.0.12:3306
canal.instance.dbUsername=canal
canal.instance.dbPassword=123456
```
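Each name in `canal.destinations` corresponds to a directory of the same name under the conf directory, holding that instance's `instance.properties` (here `example/` and `example2/`). Before mounting the directory into the container, a quick check like the one below can catch a destination with no matching directory. It is a hypothetical helper, not part of canal.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical pre-flight check for a canal conf directory; not part of canal.
final class DestinationCheck {
    // Returns the destinations listed in canal.properties that lack <conf>/<name>/instance.properties.
    static List<String> missingInstanceFiles(Path confDir) {
        Properties canal = new Properties();
        try (Reader reader = Files.newBufferedReader(confDir.resolve("canal.properties"))) {
            canal.load(reader);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        List<String> missing = new ArrayList<>();
        for (String name : canal.getProperty("canal.destinations", "").split(",")) {
            String destination = name.trim();
            if (destination.isEmpty()) {
                continue;
            }
            if (!Files.isRegularFile(confDir.resolve(destination).resolve("instance.properties"))) {
                missing.add(destination);
            }
        }
        return missing;
    }
}
```

Running it against `/usr/canal/single/conf` before `docker run` would report any destination the server cannot find a configuration for.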

Create the MySQL instances

```shell
docker run -it -d --net fixed --ip 172.18.0.11 -p 3306:3306 --privileged=true \
    --name mysql -e MYSQL_ROOT_PASSWORD=123456 mysql
docker run -it -d --net fixed --ip 172.18.0.12 -p 3307:3306 --privileged=true \
    --name mysql2 -e MYSQL_ROOT_PASSWORD=123456 mysql
```

```sql
-- in both mysql and mysql2: create the user and grant privileges
create user canal identified with mysql_native_password by "123456";
GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';
flush privileges;
```

Create the canal server container

```shell
docker run -it -d --net fixed --ip 172.18.0.3 -p 11111:11111 --name canal-server \
    -v /usr/canal/single/conf:/home/admin/canal-server/conf canal/canal-server

# host directory
[root@centos conf]# pwd
/usr/canal/single/conf
[root@centos conf]# ls
canal_local.properties  canal.properties  example  example2  logback.xml  metrics  spring
```

Check the canal server log

```text
[root@centos example]# docker logs canal-server
DOCKER_DEPLOY_TYPE=VM
==> INIT /alidata/init/02init-sshd.sh
==> EXIT CODE: 0
==> INIT /alidata/init/fix-hosts.py
==> EXIT CODE: 0
==> INIT DEFAULT
Generating SSH1 RSA host key: [ OK ]
Starting sshd: [ OK ]
Starting crond: [ OK ]
==> INIT DONE
==> RUN /home/admin/app.sh
==> START ...
start canal ...
start canal successful
==> START SUCCESSFUL ...
```

The canal server started successfully.

Check the instance logs

```text
[root@centos example]# docker exec -it canal-server bash
[root@ac38c13bce07 admin]# cd canal-server/logs
[root@ac38c13bce07 logs]# pwd
/home/admin/canal-server/logs
[root@ac38c13bce07 logs]# ls
canal  example  example2
[root@ac38c13bce07 logs]# ls canal
canal.log  canal_stdout.log
[root@ac38c13bce07 logs]# ls example
example.log
[root@ac38c13bce07 logs]# ls example2
example2.log

# instance example log
[root@ac38c13bce07 logs]# cat example/example.log
2021-06-29 22:53:04.947 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example
2021-06-29 22:53:05.089 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table filter : ^.*\..*$
2021-06-29 22:53:05.089 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table black filter : ^mysql\.slave_.*$
2021-06-29 22:53:05.153 [main] INFO c.a.otter.canal.instance.core.AbstractCanalInstance - start successful....
2021-06-29 22:53:05.958 [destination = example , address = /172.18.0.11:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> begin to find start position, it will be long time for reset or first position
2021-06-29 22:53:05.969 [destination = example , address = /172.18.0.11:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - prepare to find start position just show master status
2021-06-29 22:53:12.659 [destination = example , address = /172.18.0.11:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> find start position successfully, EntryPosition[included=false,journalName=binlog.000002,position=4,serverId=1,gtid=<null>,timestamp=1624978095000] cost : 6604ms , the next step is binlog dump

# instance example2 log
[root@ac38c13bce07 logs]# cat example2/example2.log
2021-06-29 22:53:06.677 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example2
2021-06-29 22:53:06.717 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table filter : ^.*\..*$
2021-06-29 22:53:06.717 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table black filter : ^mysql\.slave_.*$
2021-06-29 22:53:06.735 [main] INFO c.a.otter.canal.instance.core.AbstractCanalInstance - start successful....
2021-06-29 22:53:06.918 [destination = example2 , address = /172.18.0.12:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> begin to find start position, it will be long time for reset or first position
2021-06-29 22:53:06.934 [destination = example2 , address = /172.18.0.12:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - prepare to find start position just show master status
2021-06-29 22:53:12.660 [destination = example2 , address = /172.18.0.12:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> find start position successfully, EntryPosition[included=false,journalName=binlog.000002,position=4,serverId=1,gtid=<null>,timestamp=1624978126000] cost : 5665ms , the next step is binlog dump
```

Both instances, example and example2, started successfully.

*********************

canal server: standalone, with tsdb stored in MySQL

Create the mysql and mysql2 instances

```shell
docker run -it -d --net fixed --ip 172.18.0.21 -p 3306:3306 --privileged=true \
    --name mysql -e MYSQL_ROOT_PASSWORD=123456 mysql
docker run -it -d --net fixed --ip 172.18.0.20 -p 3307:3306 --privileged=true \
    --name mysql2 -e MYSQL_ROOT_PASSWORD=123456 mysql
```

```text
************* mysql: source data
# create the user and grant privileges
mysql> create user canal identified with mysql_native_password by "123456";
Query OK, 0 rows affected (0.01 sec)

mysql> GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.01 sec)

************* mysql2: stores tsdb
# create the user and grant privileges
mysql> create user canal identified with mysql_native_password by "123456";
Query OK, 0 rows affected (0.00 sec)

mysql> GRANT ALL ON *.* TO 'canal'@'%';
Query OK, 0 rows affected (0.01 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

# create the database and tables
mysql> create database example;
Query OK, 1 row affected (0.00 sec)

mysql> use example;
Database changed
mysql> CREATE TABLE IF NOT EXISTS `meta_snapshot` (
    -> `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',
    -> `gmt_create` datetime NOT NULL COMMENT 'creation time',
    -> `gmt_modified` datetime NOT NULL COMMENT 'modification time',
    -> `destination` varchar(128) DEFAULT NULL COMMENT 'destination (instance) name',
    -> `binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog file name',
    -> `binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog offset',
    -> `binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlog node id',
    -> `binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'binlog apply timestamp',
    -> `data` longtext DEFAULT NULL COMMENT 'table structure data',
    -> `extra` text DEFAULT NULL COMMENT 'extra information',
    -> PRIMARY KEY (`id`),
    -> UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),
    -> KEY `destination` (`destination`),
    -> KEY `destination_timestamp` (`destination`,`binlog_timestamp`),
    -> KEY `gmt_modified` (`gmt_modified`)
    -> ) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COMMENT='table structure snapshots';
Query OK, 0 rows affected, 3 warnings (0.04 sec)

mysql> CREATE TABLE IF NOT EXISTS `meta_history` (
    -> `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key',
    -> `gmt_create` datetime NOT NULL COMMENT 'creation time',
    -> `gmt_modified` datetime NOT NULL COMMENT 'modification time',
    -> `destination` varchar(128) DEFAULT NULL COMMENT 'destination (instance) name',
    -> `binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog file name',
    -> `binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog offset',
    -> `binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlog node id',
    -> `binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'binlog apply timestamp',
    -> `use_schema` varchar(1024) DEFAULT NULL COMMENT 'schema in use when the SQL ran',
    -> `sql_schema` varchar(1024) DEFAULT NULL COMMENT 'target schema',
    -> `sql_table` varchar(1024) DEFAULT NULL COMMENT 'target table',
    -> `sql_text` longtext DEFAULT NULL COMMENT 'executed SQL',
    -> `sql_type` varchar(256) DEFAULT NULL COMMENT 'SQL type',
    -> `extra` text DEFAULT NULL COMMENT 'extra information',
    -> PRIMARY KEY (`id`),
    -> UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),
    -> KEY `destination` (`destination`),
    -> KEY `destination_timestamp` (`destination`,`binlog_timestamp`),
    -> KEY `gmt_modified` (`gmt_modified`)
    -> ) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COMMENT='table structure change history';
Query OK, 0 rows affected, 3 warnings (0.02 sec)
```

Modify the configuration files

```
# canal.properties
canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

# example/instance.properties
# source MySQL: the mysql container (172.18.0.21)
canal.instance.master.address=172.18.0.21:3306
canal.instance.dbUsername=canal
canal.instance.dbPassword=123456
# tsdb database on the mysql2 container (container port 3306)
canal.instance.tsdb.enable=true
canal.instance.tsdb.url=jdbc:mysql://172.18.0.20:3306/example
canal.instance.tsdb.dbUsername=canal
canal.instance.tsdb.dbPassword=123456
```
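In the single-node layout, canal.instance.master.address has to point at the source mysql container (172.18.0.21, from its docker run command) and canal.instance.tsdb.url at mysql2 (172.18.0.20); a wrong IP only surfaces later as a connection error in the instance log. The following is a minimal Java sketch of a pre-flight check using only java.util.Properties. The class and method names are hypothetical, and the expected IPs are the ones used in this section.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical helper: checks that an instance.properties fragment points at the expected hosts.
class InstanceConfigCheck {

    // Parses properties text in the same format as instance.properties.
    static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    // Returns human-readable problems; an empty list means both addresses match.
    static List<String> check(Properties p, String sourceHost, String tsdbHost) {
        List<String> problems = new ArrayList<>();

        // canal.instance.master.address has the form host:port
        String master = p.getProperty("canal.instance.master.address", "");
        int colon = master.lastIndexOf(':');
        String masterHost = colon >= 0 ? master.substring(0, colon) : master;
        if (!masterHost.equals(sourceHost)) {
            problems.add("master.address " + master + " is not " + sourceHost);
        }

        // Drop the "jdbc:" prefix so java.net.URI can parse mysql://host:port/db
        String url = p.getProperty("canal.instance.tsdb.url", "");
        String tsdb = url.startsWith("jdbc:") ? URI.create(url.substring(5)).getHost() : null;
        if (!tsdbHost.equals(tsdb)) {
            problems.add("tsdb.url " + url + " is not on " + tsdbHost);
        }
        return problems;
    }

    public static void main(String[] args) {
        Properties p = parse(String.join("\n",
                "canal.instance.master.address=172.18.0.21:3306",
                "canal.instance.tsdb.enable=true",
                "canal.instance.tsdb.url=jdbc:mysql://172.18.0.20:3306/example"));
        List<String> problems = check(p, "172.18.0.21", "172.18.0.20");
        System.out.println(problems.isEmpty() ? "config OK" : String.join("\n", problems));
    }
}
```

Run against an instance.properties whose master.address still says 172.18.0.11, the check reports exactly one problem, which is the kind of mismatch that is easy to miss by eye.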

Create the canal server instance

```
docker run -it -d --net fixed --ip 172.18.0.23 -p 11111:11111 --name canal-server \
-v /usr/canal/single/tsdb/conf:/home/admin/canal-server/conf canal/canal-server
```

Check the canal server log

```
[root@centos tsdb]# docker logs canal-server
DOCKER_DEPLOY_TYPE=VM
==> INIT /alidata/init/02init-sshd.sh
==> EXIT CODE: 0
==> INIT /alidata/init/fix-hosts.py
==> EXIT CODE: 0
==> INIT DEFAULT
Generating SSH1 RSA host key: [ OK ]
Starting sshd: [ OK ]
Starting crond: [ OK ]
==> INIT DONE
==> RUN /home/admin/app.sh
==> START ...
start canal ...
start canal successful
==> START SUCCESSFUL ...
```

The canal server started successfully.
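Instead of reading the whole docker logs output by eye, the startup can be checked for the ==> START SUCCESSFUL marker that appears in the log above. A minimal Java sketch, assuming the docker CLI is on the PATH of the machine running it; the class name is hypothetical and only the marker string is taken from the log.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: runs `docker logs <container>` and looks for the canal startup marker.
class CanalStartupCheck {

    // Marker printed by the canal-server image once app.sh has started canal (see the log above).
    static final String MARKER = "==> START SUCCESSFUL";

    static boolean started(String logText) {
        return logText.contains(MARKER);
    }

    // Captures stdout and stderr of `docker logs` as one string.
    static String dockerLogs(String container) throws IOException, InterruptedException {
        Process proc = new ProcessBuilder("docker", "logs", container)
                .redirectErrorStream(true) // merge stderr into stdout
                .start();
        String out = new String(proc.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        proc.waitFor();
        return out;
    }

    public static void main(String[] args) throws Exception {
        String container = args.length > 0 ? args[0] : "canal-server";
        System.out.println(container + " started: " + started(dockerLogs(container)));
    }
}
```

Passing canal-server2 as the first argument runs the same check against the second node of the cluster setup.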

Check the instance log

```
[root@centos tsdb]# docker exec -it canal-server bash
[root@69b22f3fc434 admin]# cd canal-server/logs/example
[root@69b22f3fc434 example]# cat example.log
2021-06-30 00:43:57.172 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example
2021-06-30 00:43:57.205 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table filter : ^.*\..*$
2021-06-30 00:43:57.205 [main] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table black filter : ^mysql\.slave_.*$
2021-06-30 00:43:57.213 [main] INFO c.a.otter.canal.instance.core.AbstractCanalInstance - start successful....
2021-06-30 00:43:57.410 [destination = example , address = /172.18.0.21:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> begin to find start position, it will be long time for reset or first position
2021-06-30 00:43:57.410 [destination = example , address = /172.18.0.21:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - prepare to find start position just show master status
2021-06-30 00:43:59.387 [destination = example , address = /172.18.0.21:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> find start position successfully, EntryPosition[included=false,journalName=binlog.000002,position=4,serverId=1,gtid=<null>,timestamp=1624984902000] cost : 1966ms , the next step is binlog dump
```

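The instance was created successfully: it found its start position (binlog.000002, position 4) and moved on to binlog dump.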
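The last line of example.log records where binlog dump starts. The sketch below pulls that position out of the log text with a regular expression. The pattern is written against the log line shown above and is an assumption about its format, not a documented canal interface; the class name is hypothetical.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts the binlog start position that canal logs before binlog dump.
class StartPosition {

    // Matches "...find start position successfully, EntryPosition[...journalName=X,position=N,..."
    private static final Pattern POS = Pattern.compile(
            "find start position successfully, EntryPosition\\[[^\\]]*?journalName=([^,\\]]+),position=(\\d+)");

    // Returns "journal:position" for the last matching line in the log, if any.
    static Optional<String> find(String log) {
        Matcher m = POS.matcher(log);
        String last = null;
        while (m.find()) {
            last = m.group(1) + ":" + m.group(2);
        }
        return Optional.ofNullable(last);
    }

    public static void main(String[] args) {
        String line = "... ---> find start position successfully, EntryPosition[included=false,"
                + "journalName=binlog.000002,position=4,serverId=1,gtid=<null>,timestamp=1624984902000]"
                + " cost : 1966ms , the next step is binlog dump";
        System.out.println(find(line).orElse("not found")); // binlog.000002:4
    }
}
```

Taking the last match matters when the instance has restarted several times and the log holds more than one such line.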

*********************

canal server cluster

mysql: source data

mysql2: stores the in-memory table-structure snapshots (tsdb)

zookeeper: stores the cluster metadata

canal-server, canal-server2: the canal server cluster

Create the MySQL instances

```
# mysql: source data
docker run -it -d --net fixed --ip 172.18.0.31 -p 3306:3306 --privileged=true \
--name mysql -e MYSQL_ROOT_PASSWORD=123456 mysql

# mysql2: stores the in-memory table-structure snapshot data
docker run -it -d --net fixed --ip 172.18.0.32 -p 3307:3306 --privileged=true \
--name mysql2 -e MYSQL_ROOT_PASSWORD=123456 mysql
```

```
************** mysql: create user, grant privileges
mysql> create user canal identified with mysql_native_password by "123456";
Query OK, 0 rows affected (0.01 sec)

mysql> GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'canal'@'%';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)

************** mysql2: create user, grant privileges, create database and tables
mysql> create user canal identified with mysql_native_password by "123456";
Query OK, 0 rows affected (0.01 sec)

mysql> GRANT ALL ON *.* TO 'canal'@'%';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.01 sec)

mysql> create database example;
Query OK, 1 row affected (0.01 sec)

mysql> use example;
Database changed
mysql> CREATE TABLE IF NOT EXISTS `meta_snapshot` (
    -> `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT '',
    -> `gmt_create` datetime NOT NULL COMMENT '',
    -> `gmt_modified` datetime NOT NULL COMMENT '',
    -> `destination` varchar(128) DEFAULT NULL COMMENT '',
    -> `binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog',
    -> `binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog',
    -> `binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlogid',
    -> `binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'binlog',
    -> `data` longtext DEFAULT NULL COMMENT '',
    -> `extra` text DEFAULT NULL COMMENT '',
    -> PRIMARY KEY (`id`),
    -> UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),
    -> KEY `destination` (`destination`),
    -> KEY `destination_timestamp` (`destination`,`binlog_timestamp`),
    -> KEY `gmt_modified` (`gmt_modified`)
    -> ) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COMMENT='';
Query OK, 0 rows affected, 3 warnings (0.02 sec)

mysql> CREATE TABLE IF NOT EXISTS `meta_history` (
    -> `id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT '',
    -> `gmt_create` datetime NOT NULL COMMENT '',
    -> `gmt_modified` datetime NOT NULL COMMENT '',
    -> `destination` varchar(128) DEFAULT NULL COMMENT '',
    -> `binlog_file` varchar(64) DEFAULT NULL COMMENT 'binlog',
    -> `binlog_offest` bigint(20) DEFAULT NULL COMMENT 'binlog',
    -> `binlog_master_id` varchar(64) DEFAULT NULL COMMENT 'binlogid',
    -> `binlog_timestamp` bigint(20) DEFAULT NULL COMMENT 'binlog',
    -> `use_schema` varchar(1024) DEFAULT NULL COMMENT 'sqlschema',
    -> `sql_schema` varchar(1024) DEFAULT NULL COMMENT 'schema',
    -> `sql_table` varchar(1024) DEFAULT NULL COMMENT 'table',
    -> `sql_text` longtext DEFAULT NULL COMMENT 'sql',
    -> `sql_type` varchar(256) DEFAULT NULL COMMENT 'sql',
    -> `extra` text DEFAULT NULL COMMENT '',
    -> PRIMARY KEY (`id`),
    -> UNIQUE KEY binlog_file_offest(`destination`,`binlog_master_id`,`binlog_file`,`binlog_offest`),
    -> KEY `destination` (`destination`),
    -> KEY `destination_timestamp` (`destination`,`binlog_timestamp`),
    -> KEY `gmt_modified` (`gmt_modified`)
    -> ) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4 COMMENT='';
Query OK, 0 rows affected, 3 warnings (0.02 sec)
```

Create the ZooKeeper instance

```
docker run -it -d --net fixed --ip 172.18.0.33 -p 2181:2181 --name zoo zookeeper
```
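Before pointing canal at ZooKeeper, it can help to confirm that the zoo container answers on the published port 2181. The minimal sketch below sends ZooKeeper's srvr four-letter command over a plain socket and reads the Mode: line from the reply. It assumes srvr is allowed by the server's 4lw.commands.whitelist (it is the command ZooKeeper allows by default), and the class name is hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: asks a ZooKeeper server for its status via the "srvr" four-letter command.
class ZkSrvr {

    // Sends a four-letter command and returns the full reply; the server closes the connection after replying.
    static String send(String host, int port, String cmd) throws IOException {
        try (Socket s = new Socket()) {
            s.connect(new InetSocketAddress(host, port), 2000);
            s.setSoTimeout(2000);
            OutputStream out = s.getOutputStream();
            out.write(cmd.getBytes(StandardCharsets.US_ASCII));
            out.flush();
            InputStream in = s.getInputStream();
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        }
    }

    // Extracts the value of the "Mode:" line (for a single node this should be "standalone"); null if absent.
    static String mode(String reply) {
        for (String line : reply.split("\\R")) {
            if (line.startsWith("Mode:")) {
                return line.substring("Mode:".length()).trim();
            }
        }
        return null;
    }

    public static void main(String[] args) throws IOException {
        // 2181 is published on the host by the docker run command above.
        String host = args.length > 0 ? args[0] : "localhost";
        System.out.println("ZooKeeper mode: " + mode(send(host, 2181, "srvr")));
    }
}
```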

Modify the canal configuration files

```
# canal.properties
canal.zkServers=172.18.0.33:2181
canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml
canal.instance.global.spring.xml = classpath:spring/default-instance.xml

# instance.properties
canal.instance.master.address=172.18.0.31:3306
canal.instance.dbUsername=canal
canal.instance.dbPassword=123456
canal.instance.tsdb.enable=true
canal.instance.tsdb.url=jdbc:mysql://172.18.0.32:3306/example
canal.instance.tsdb.dbUsername=canal
canal.instance.tsdb.dbPassword=123456
```
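For the two servers to behave as one cluster, both canal.properties files (in /usr/canal/cluster/server/conf and /usr/canal/cluster/server2/conf, the directories mounted into the containers) should carry the same canal.zkServers and the ZooKeeper-backed default-instance.xml. As we understand canal's design, a different zkServers value would give that node its own separate election. The minimal sketch below compares the two files with java.util.Properties; the class name is hypothetical and the paths come from the docker run commands in this section.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.Properties;

// Hypothetical helper: compares the cluster-relevant keys of two canal.properties files.
class ClusterConfigCheck {

    static final String[] SHARED_KEYS = {"canal.zkServers", "canal.instance.global.spring.xml"};

    static Properties load(Reader r) {
        Properties p = new Properties();
        try (Reader in = r) {
            p.load(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    // Lists keys whose values differ, plus a warning if the first file does not use default-instance.xml.
    static List<String> compare(Properties a, Properties b) {
        List<String> problems = new ArrayList<>();
        for (String key : SHARED_KEYS) {
            String va = trimmed(a.getProperty(key));
            String vb = trimmed(b.getProperty(key));
            if (!Objects.equals(va, vb)) {
                problems.add(key + " differs: " + va + " vs " + vb);
            }
        }
        if (!"classpath:spring/default-instance.xml".equals(trimmed(a.getProperty("canal.instance.global.spring.xml")))) {
            problems.add("canal.instance.global.spring.xml is not default-instance.xml");
        }
        return problems;
    }

    // Properties keeps trailing spaces in values, so compare trimmed strings.
    private static String trimmed(String s) {
        return s == null ? null : s.trim();
    }

    public static void main(String[] args) throws IOException {
        Path base = Paths.get("/usr/canal/cluster");
        Properties a = load(Files.newBufferedReader(base.resolve("server/conf/canal.properties")));
        Properties b = load(Files.newBufferedReader(base.resolve("server2/conf/canal.properties")));
        List<String> problems = compare(a, b);
        System.out.println(problems.isEmpty() ? "cluster config consistent" : String.join("\n", problems));
    }
}
```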

Create the canal server cluster

```
docker run -it -d --net fixed --ip 172.18.0.34 -p 11111:11111 --name canal-server \
-v /usr/canal/cluster/server/conf:/home/admin/canal-server/conf canal/canal-server

docker run -it -d --net fixed --ip 172.18.0.35 -p 11112:11111 --name canal-server2 \
-v /usr/canal/cluster/server2/conf:/home/admin/canal-server/conf canal/canal-server
```
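Both containers listen on 11111 internally; on the host they are published as 11111 (canal-server) and 11112 (canal-server2). The minimal sketch below checks that the published ports accept TCP connections. It only shows that something is listening on each port, not which node runs the instance, and the class name is hypothetical.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: plain TCP reachability check for the published canal ports.
class PortCheck {

    // True if a TCP connection to host:port succeeds within timeoutMs.
    static boolean reachable(String host, int port, int timeoutMs) {
        try (Socket s = new Socket()) {
            s.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Host-side ports published by the two docker run commands above.
        int[] ports = {11111, 11112};
        for (int port : ports) {
            System.out.println("localhost:" + port + " reachable: " + reachable("localhost", port, 1000));
        }
    }
}
```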

Check the canal server logs

```
# canal-server
[root@centos ~]# docker logs canal-server
DOCKER_DEPLOY_TYPE=VM
==> INIT /alidata/init/02init-sshd.sh
==> EXIT CODE: 0
==> INIT /alidata/init/fix-hosts.py
==> EXIT CODE: 0
==> INIT DEFAULT
Generating SSH1 RSA host key: [ OK ]
Starting sshd: [ OK ]
Starting crond: [ OK ]
==> INIT DONE
==> RUN /home/admin/app.sh
==> START ...
start canal ...
start canal successful
==> START SUCCESSFUL ...

# canal-server2
[root@centos ~]# docker logs canal-server2
DOCKER_DEPLOY_TYPE=VM
==> INIT /alidata/init/02init-sshd.sh
==> EXIT CODE: 0
==> INIT /alidata/init/fix-hosts.py
==> EXIT CODE: 0
==> INIT DEFAULT
Generating SSH1 RSA host key: [ OK ]
Starting sshd: [ OK ]
Starting crond: [ OK ]
==> INIT DONE
==> RUN /home/admin/app.sh
==> START ...
start canal ...
start canal successful
==> START SUCCESSFUL ...
```

canal-server and canal-server2 both started successfully.

Check the instance logs

```
# canal-server
[root@centos ~]# docker exec -it canal-server bash
[root@ddcb9672751c admin]# cd canal-server/logs
[root@ddcb9672751c logs]# ls
canal

# canal-server2
[root@centos cluster]# docker exec -it canal-server2 bash
[root@e54128f28507 admin]# cd canal-server/logs
[root@e54128f28507 logs]# ls
canal  example
[root@e54128f28507 logs]# cd example
[root@e54128f28507 example]# ls
example.log
[root@e54128f28507 example]# cat example.log
2021-06-30 22:42:37.377 [ZkClient-EventThread-10-172.18.0.33:2181] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example
2021-06-30 22:42:37.497 [ZkClient-EventThread-10-172.18.0.33:2181] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table filter : ^.*\..*$
2021-06-30 22:42:37.498 [ZkClient-EventThread-10-172.18.0.33:2181] WARN c.a.o.canal.parse.inbound.mysql.dbsync.LogEventConvert - --> init table black filter : ^mysql\.slave_.*$
2021-06-30 22:42:37.597 [ZkClient-EventThread-10-172.18.0.33:2181] INFO c.a.otter.canal.instance.core.AbstractCanalInstance - start successful....
2021-06-30 22:42:38.351 [destination = example , address = /172.18.0.31:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> begin to find start position, it will be long time for reset or first position
2021-06-30 22:42:38.359 [destination = example , address = /172.18.0.31:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - prepare to find start position just show master status
2021-06-30 22:42:40.622 [destination = example , address = /172.18.0.31:3306 , EventParser] WARN c.a.o.c.p.inbound.mysql.rds.RdsBinlogEventParserProxy - ---> find start position successfully, EntryPosition[included=false,journalName=binlog.000002,position=4,serverId=1,gtid=<null>,timestamp=1625063533000] cost : 2206ms , the next step is binlog dump
```

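On canal-server the example instance did not start (its logs directory contains only canal, with no example directory): this node is in standby.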

On canal-server2 the example instance started, as its example.log shows.
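To our understanding of canal's HA design, the active/standby split is decided through ZooKeeper: each server tries to create the instance's running node (/otter/canal/destinations/example/running) as an ephemeral node, the one that succeeds runs the instance, and the others wait for that node to disappear. The following is a simplified in-memory simulation of that idea, with ConcurrentHashMap.putIfAbsent standing in for creating the ephemeral node. It is not canal's implementation (canal uses a ZooKeeper client and reacts to a watch, while here the retry is done by hand), and all names are hypothetical.

```java
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Toy model only: a map stands in for ZooKeeper, and putIfAbsent stands in for creating an ephemeral node.
class RunningNodeSimulation {

    private final ConcurrentMap<String, String> nodes = new ConcurrentHashMap<>();

    static String runningPath(String destination) {
        return "/otter/canal/destinations/" + destination + "/running";
    }

    // A server tries to become active for a destination; true if it won.
    boolean tryActivate(String destination, String serverAddress) {
        return nodes.putIfAbsent(runningPath(destination), serverAddress) == null;
    }

    // Simulates the owner's session ending: only the owner's node is removed.
    void release(String destination, String serverAddress) {
        nodes.remove(runningPath(destination), serverAddress);
    }

    Optional<String> active(String destination) {
        return Optional.ofNullable(nodes.get(runningPath(destination)));
    }

    public static void main(String[] args) {
        RunningNodeSimulation zk = new RunningNodeSimulation();
        System.out.println(zk.tryActivate("example", "172.18.0.35:11111")); // true: canal-server2 wins
        System.out.println(zk.tryActivate("example", "172.18.0.34:11111")); // false: canal-server stays standby
        zk.release("example", "172.18.0.35:11111");                        // canal-server2 goes away
        System.out.println(zk.tryActivate("example", "172.18.0.34:11111")); // true: standby takes over
        System.out.println(zk.active("example").orElse("none"));           // 172.18.0.34:11111
    }
}
```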

Metadata stored in ZooKeeper

```
[root@centos cluster]# docker exec -it zoo bash
root@bfa8dada58dc:/apache-zookeeper-3.6.2-bin# bin/zkCli.sh
Connecting to localhost:2181
...
Welcome to ZooKeeper!
...
WATCHER::

WatchedEvent state:SyncConnected type:None path:null
[zk: localhost:2181(CONNECTED) 0] get /otter/canal/destinations/example/running
{"active":true,"address":"172.18.0.35:11111"}
```

The node currently running the example instance: {"active":true,"address":"172.18.0.35:11111"}, i.e. canal-server2 (172.18.0.35).
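The value of the running node is a small JSON object. Without pulling in a JSON library, the active address can be read with a regular expression and mapped back to a container name. This is a minimal sketch that assumes the flat {"active":...,"address":"..."} shape shown above (a real client would use a proper JSON parser); the class name is hypothetical and the IP-to-container mapping comes from the docker run commands in this section.

```java
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: reads the active server address from the running node's JSON value.
class RunningNode {

    private static final Pattern ADDRESS = Pattern.compile("\"address\"\\s*:\\s*\"([^\"]+)\"");
    private static final Pattern ACTIVE = Pattern.compile("\"active\"\\s*:\\s*(true|false)");

    // Returns the address only if the node reports itself as active.
    static Optional<String> activeAddress(String json) {
        Matcher active = ACTIVE.matcher(json);
        Matcher address = ADDRESS.matcher(json);
        if (active.find() && Boolean.parseBoolean(active.group(1)) && address.find()) {
            return Optional.of(address.group(1));
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Container IPs from the docker run commands above.
        Map<String, String> containers = Map.of(
                "172.18.0.34", "canal-server",
                "172.18.0.35", "canal-server2");
        String json = "{\"active\":true,\"address\":\"172.18.0.35:11111\"}";
        activeAddress(json).ifPresent(addr -> {
            String ip = addr.substring(0, addr.lastIndexOf(':'));
            System.out.println(addr + " -> " + containers.getOrDefault(ip, "unknown")); // 172.18.0.35:11111 -> canal-server2
        });
    }
}
```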
