25. Monitoring Kubernetes cluster nodes with Prometheus

I. node-exporter

node_exporter collects a wide range of runtime metrics from server nodes, such as conntrack, cpu, diskstats, filesystem, loadavg, meminfo, and netstat.

For more details, see: https://github.com/prometheus/node_exporter

1. Deploying node-exporter with a DaemonSet

Pull the image:

    docker pull prom/node-exporter:v1.1.2

Create the manifest with vi node-exporter-dm.yaml and give it the following content:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: kube-mon
      labels:
        name: node-exporter
    spec:
      selector:
        matchLabels:
          name: node-exporter
      template:
        metadata:
          labels:
            name: node-exporter
        spec:
          hostPID: true      # use the host PID namespace
          hostIPC: true      # use the host IPC namespace
          hostNetwork: true  # use the host network namespace
          containers:
          - name: node-exporter
            image: harbor.hzwod.com/k8s/prom/node-exporter:v1.1.2
            ports:
            - containerPort: 9100
            resources:
              requests:
                cpu: 150m
            # securityContext:
            #   privileged: true
            args:
            - --path.rootfs
            - /host
            volumeMounts:
            - name: rootfs
              mountPath: /host
          tolerations:
          - key: "node-role.kubernetes.io/master"
            operator: "Exists"
            effect: "NoSchedule"
          volumes:
          - name: rootfs
            hostPath:
              path: /

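- hostPID: true, hostIPC: true, and hostNetwork: true make the node-exporter container share the host's PID, IPC, and network namespaces, so that it can use host resources such as the host's commands.

- Note: because the Pod shares the host's network namespace, containerPort: 9100 is exposed directly on port 9100 of the host. That port is the entry point for the metrics service.

- The host's / directory is mounted at /host in the container, and the argument --path.rootfs=/host tells node-exporter where to find it. The container reads host information from these files; for example, /proc/stat provides CPU information and /proc/meminfo provides memory information.

- tolerations adds a toleration to the Pod so that it can run on master nodes, because we want the master nodes to be monitored too. Nodes with other taints can be handled in the same way.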
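As a rough sketch of handling other taints in the same way, the tolerations list could be extended as shown below. The control-plane key is the taint that newer Kubernetes releases place on control-plane nodes instead of master. The dedicated=monitoring taint is purely hypothetical and only illustrates the pattern.

```yaml
# Sketch only: this list goes under spec.template.spec of the DaemonSet above.
tolerations:
- key: "node-role.kubernetes.io/master"          # from the manifest above
  operator: "Exists"
  effect: "NoSchedule"
- key: "node-role.kubernetes.io/control-plane"   # taint key used by newer clusters
  operator: "Exists"
  effect: "NoSchedule"
- key: "dedicated"                                # hypothetical custom taint key
  operator: "Equal"
  value: "monitoring"                             # hypothetical taint value
  effect: "NoSchedule"
```

Each extra entry only permits scheduling onto nodes with that taint. It does not force the Pod anywhere, so unused entries are harmless.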

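Apply the manifest:

    kubectl apply -f node-exporter-dm.yaml

If the apply fails with an error (with securityContext.privileged: true enabled):

Running kube-apiserver -h shows the description of the relevant option.

Add the startup flag --allow-privileged=true to kube-apiserver to allow containers to request privileged mode,

or remove the securityContext.privileged: true setting shown above (TODO: the impact of removing it is not yet known).

Check the metrics:

    curl http://172.10.10.100:9100/metrics

We can see a large number of metrics.

Every node now has a metrics endpoint, and we could configure Prometheus to scrape each node individually. But then every time a node is added we would have to edit the Prometheus configuration again. Is there a simple way to discover nodes automatically? Let's look at Prometheus service discovery.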
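For comparison, a hand-maintained job might look roughly like the sketch below. The job name and the second and third addresses are hypothetical; only 172.10.10.100:9100 comes from the check above.

```yaml
# Sketch of the manual approach: one static target per node.
- job_name: 'node-exporter-static'   # hypothetical job name
  static_configs:
  - targets:
    - '172.10.10.100:9100'           # the node checked with curl above
    - '172.10.10.101:9100'           # hypothetical second node
    - '172.10.10.102:9100'           # hypothetical third node
```

Every new node means another line in this list plus a reload, which is exactly the maintenance burden that service discovery removes.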

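2. Service discovery

In Kubernetes, Prometheus integrates with the Kubernetes API and currently supports five main service discovery modes: Node, Service, Pod, Endpoints, and Ingress.

a. Node discovery

Add the following to the Prometheus config:

    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node

kubernetes_sd_configs is the service discovery configuration that Prometheus provides for the Kubernetes API. role can be node, service, pod, endpoints, or ingress, and each role exposes a different set of meta labels.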
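Discovery only works if the service account Prometheus runs under is allowed to list and watch the objects behind each role. If that was not set up earlier, a minimal sketch could look like the following. It assumes a ServiceAccount named prometheus in the kube-mon namespace; both of those names, and the ClusterRole name, are assumptions rather than something this article defines.

```yaml
# Sketch only: read access for the objects used by the five discovery roles.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus                    # hypothetical name
rules:
- apiGroups: [""]
  resources: [nodes, nodes/metrics, services, endpoints, pods]
  verbs: [get, list, watch]
- apiGroups: [extensions, networking.k8s.io]
  resources: [ingresses]
  verbs: [get, list, watch]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus                    # hypothetical name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus                    # assumed ServiceAccount used by the Prometheus Pod
  namespace: kube-mon
```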

For more information, see the official documentation: kubernetes_sd_config

Besides kubernetes_sd_config, Prometheus has many other configuration options; see prometheus configuration.

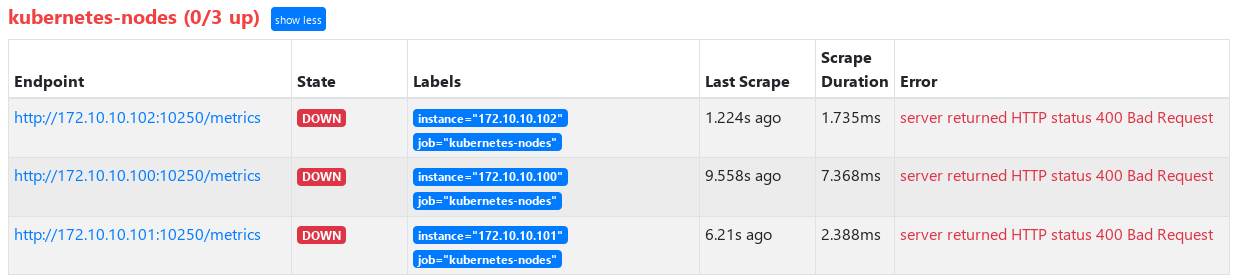

After reloading Prometheus, check the targets page. Automatic discovery works, but every endpoint returns 400.

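b. Adjusting the discovered Endpoint with relabel_configs

After the nodes are discovered automatically, Prometheus targets port 10250 on each node, and the scrape fails. Why?

Port 10250 is the kubelet's HTTPS port, which expects TLS and authentication, so the plain HTTP scrape configured here is rejected with 400. The kubelet's read-only HTTP port is 10255 (kubelet version in this article: v1.17.16).

What we actually want is for each discovered node to be scraped on port 9100, which node-exporter serves. (Even to scrape the kubelet's own metrics over plain HTTP, the port would have to change to 10255; this is used below when configuring cAdvisor.)

In this setup the kubelet opens port 10255 on startup (some deployments disable the read-only port), and the metrics can be viewed with:

    curl http://[nodeIP]:10255/metrics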

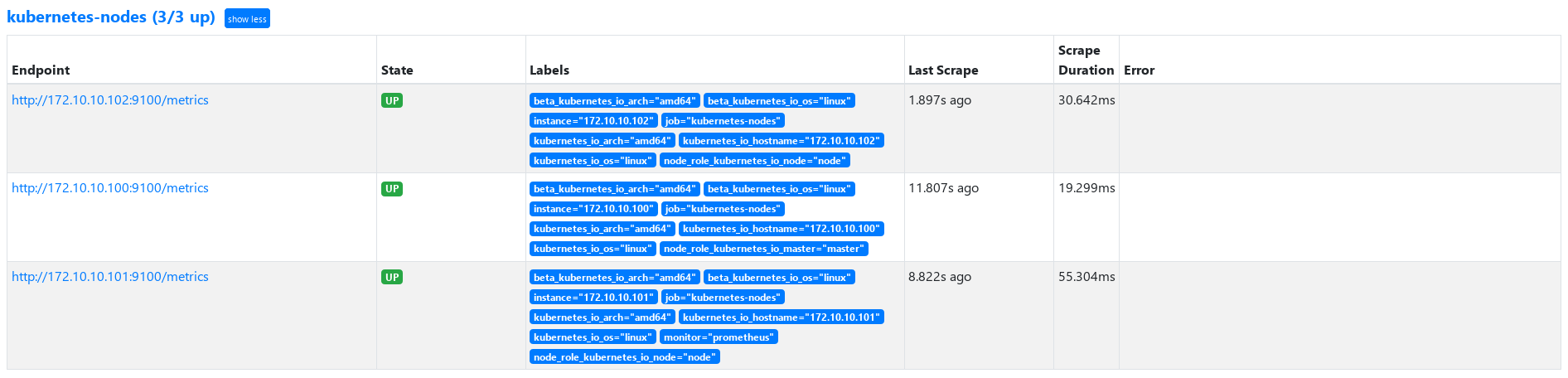

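We can use relabel_configs to step in and rewrite the port or other parts of the discovered Endpoint.

Modify the kubernetes-nodes job in prometheus.yaml:

    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: replace                # replace action
        source_labels: [__address__]   # list; the values of these labels are joined and matched against regex
        target_label: __address__      # label that receives the result
        regex: '(.*):10250'            # matched against the joined source_labels value
        replacement: '${1}:9100'       # new value written to the target label

action: replace means the rule replaces the value of __address__. In replacement: '${1}:9100', ${1} refers to the first capture group of the regex.

For more information, see relabel_configs.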

The official documentation describes __address__ as follows:

The __address__ label is set to the <host>:<port> address of the target. After relabeling, the instance label is set to the value of __address__ by default if it was not set during relabeling. The __scheme__ and __metrics_path__ labels are set to the scheme and metrics path of the target respectively. The __param_<name> label is set to the value of the first passed URL parameter called <name>.
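Rewriting the port with a regex is one way to set __address__. Another option, sketched below, is to build the address from the node's InternalIP meta label, which avoids depending on the discovered port being 10250. This is an alternative sketch, not the configuration used in the rest of this article, and it assumes every node reports an InternalIP address.

```yaml
- job_name: 'kubernetes-nodes'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  # action defaults to replace and regex defaults to (.*),
  # so ${1} is the whole InternalIP value.
  - source_labels: [__meta_kubernetes_node_address_InternalIP]
    target_label: __address__
    replacement: '${1}:9100'
```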

Next, add a labelmap rule that copies the Kubernetes node labels into Prometheus labels, which makes it easier to filter the monitoring data later:

      - action: labelmap
        regex: __meta_kubernetes_node_label_(.*)
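To make the effect of labelmap concrete, here is a small annotated sketch. The node name node1 is hypothetical. The variant with a replacement prefix is an optional extra that this article does not use; it only shows how the copied labels can be kept apart from other labels.

```yaml
relabel_configs:
# A node label such as kubernetes.io/hostname=node1 is exposed by discovery as
#   __meta_kubernetes_node_label_kubernetes_io_hostname="node1"
# and this rule copies it to
#   kubernetes_io_hostname="node1"
- action: labelmap
  regex: __meta_kubernetes_node_label_(.+)

# Optional variant: prefix the copied labels, giving
#   node_label_kubernetes_io_hostname="node1"
# - action: labelmap
#   regex: __meta_kubernetes_node_label_(.+)
#   replacement: 'node_label_${1}'
```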

After updating prometheus.yaml and reloading, check the result in Prometheus.

c. The complete prometheus.yaml

Here is the complete Prometheus ConfigMap (prometheus.yml is stored in etcd as a ConfigMap).

prometheus-cm.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-mon
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          scrape_timeout: 15s
        scrape_configs:
        - job_name: 'prometheus'
          static_configs:
          - targets: ['localhost:9090']
        - job_name: 'coredns'
          static_configs:
          - targets: ['kube-dns.kube-system:9153']
        - job_name: 'traefik'
          static_configs:
          - targets: ['traefiktcp.default:8180']
        - job_name: 'kubernetes-nodes'
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - action: replace                # replace action
            source_labels: [__address__]   # list; the values of these labels are joined and matched against regex
            target_label: __address__      # label that receives the result
            regex: '(.*):10250'            # matched against the joined source_labels value
            replacement: '${1}:9100'       # new value written to the target label
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.*)

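3. Configuring Grafana to display node metrics

Grafana is already installed and has the Prometheus data source configured, so now we import a Grafana dashboard template to display the node-exporter data.

Download the template: https://grafana.com/api/dashboards/8919/revisions/24/download

II. kube-state-metrics + cAdvisor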

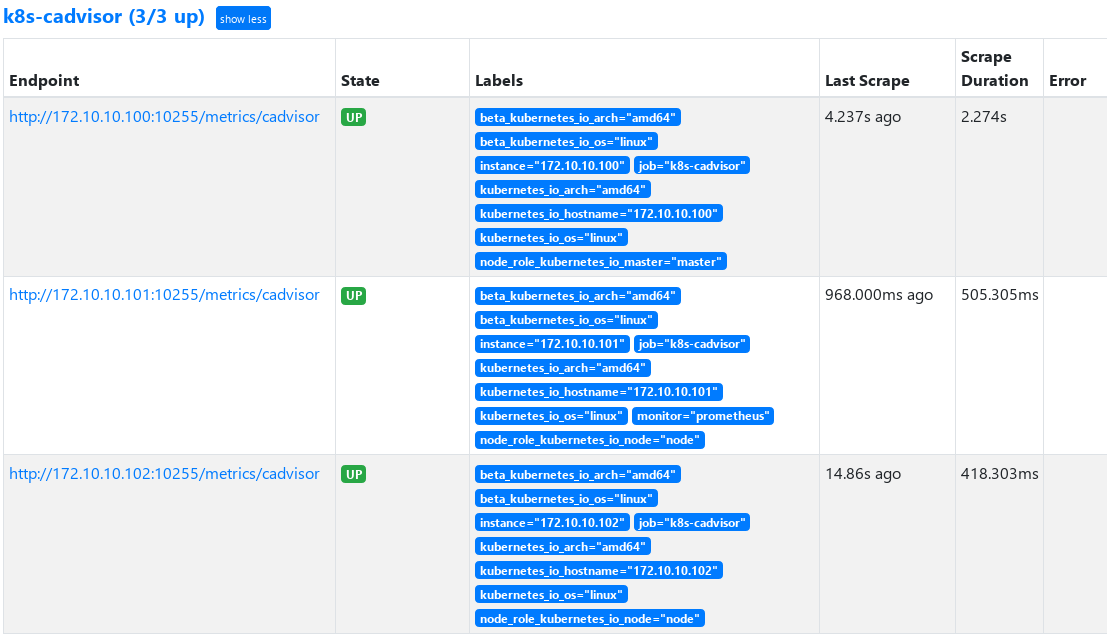

1. Configuring Prometheus to monitor cAdvisor

cAdvisor is built into the kubelet and can be used directly.

    - job_name: 'k8s-cadvisor'
      metrics_path: /metrics/cadvisor
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      # Point the scrape at the kubelet's read-only HTTP port.
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:10255'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      metric_relabel_configs:
      # Copy instance to a node label.
      - source_labels: [instance]
        separator: ;
        regex: (.+)
        target_label: node
        replacement: $1
        action: replace
      # pod_name and container_name were dropped from cAdvisor metrics in Kubernetes 1.16;
      # on newer kubelets these two rules match nothing and are harmless.
      - source_labels: [pod_name]
        separator: ;
        regex: (.+)
        target_label: pod
        replacement: $1
        action: replace
      - source_labels: [container_name]
        separator: ;
        regex: (.+)
        target_label: container
        replacement: $1
        action: replace
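On clusters where the read-only port 10255 is disabled, cAdvisor metrics can usually still be scraped from the kubelet's HTTPS port 10250. The sketch below is one common way to do that, under several assumptions: Prometheus runs inside the cluster with its service account token mounted at the default path, the kubelet accepts service account tokens (webhook authentication), and the service account has been granted access to nodes/metrics. The job name is hypothetical.

```yaml
- job_name: 'k8s-cadvisor-https'       # hypothetical job name
  scheme: https
  metrics_path: /metrics/cadvisor
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: true         # assumption: kubelet serving certs cannot be verified with this CA
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node                         # discovered address already uses port 10250
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
```

No port rewrite is needed in this variant, because node discovery already yields the kubelet port.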

2. Deploying kube-state-metrics

https://github.com/kubernetes/kube-state-metrics/tree/master/examples/standard

In this section kube-state-metrics is deployed to the namespace kube-mon.

The kube-state-metrics version is v1.9.8.

- Download the image

    docker pull quay.mirrors.ustc.edu.cn/coreos/kube-state-metrics:v1.9.8

cluster-role-binding.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 1.9.8
      name: kube-state-metrics
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kube-state-metrics
    subjects:
    - kind: ServiceAccount
      name: kube-state-metrics
      namespace: kube-mon

ServiceAccount (service-account.yaml in the upstream examples)

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 1.9.8
      name: kube-state-metrics
      namespace: kube-mon

cluster-role.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 1.9.8
      name: kube-state-metrics
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - secrets
      - nodes
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
      verbs:
      - list
      - watch
    - apiGroups:
      - apps
      resources:
      - statefulsets
      - daemonsets
      - deployments
      - replicasets
      verbs:
      - list
      - watch
    - apiGroups:
      - batch
      resources:
      - cronjobs
      - jobs
      verbs:
      - list
      - watch
    - apiGroups:
      - autoscaling
      resources:
      - horizontalpodautoscalers
      verbs:
      - list
      - watch
    - apiGroups:
      - authentication.k8s.io
      resources:
      - tokenreviews
      verbs:
      - create
    - apiGroups:
      - authorization.k8s.io
      resources:
      - subjectaccessreviews
      verbs:
      - create
    - apiGroups:
      - policy
      resources:
      - poddisruptionbudgets
      verbs:
      - list
      - watch
    - apiGroups:
      - certificates.k8s.io
      resources:
      - certificatesigningrequests
      verbs:
      - list
      - watch
    - apiGroups:
      - storage.k8s.io
      resources:
      - storageclasses
      - volumeattachments
      verbs:
      - list
      - watch
    - apiGroups:
      - admissionregistration.k8s.io
      resources:
      - mutatingwebhookconfigurations
      - validatingwebhookconfigurations
      verbs:
      - list
      - watch
    - apiGroups:
      - networking.k8s.io
      resources:
      - networkpolicies
      - ingresses
      verbs:
      - list
      - watch
    - apiGroups:
      - coordination.k8s.io
      resources:
      - leases
      verbs:
      - list
      - watch

deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 1.9.8
      name: kube-state-metrics
      namespace: kube-mon
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: kube-state-metrics
      template:
        metadata:
          labels:
            app.kubernetes.io/name: kube-state-metrics
            app.kubernetes.io/version: 1.9.8
        spec:
          containers:
          - image: harbor.hzwod.com/k8s/kube-state-metrics:v1.9.8
            livenessProbe:
              httpGet:
                path: /healthz
                port: 8080
              initialDelaySeconds: 5
              timeoutSeconds: 5
            name: kube-state-metrics
            ports:
            - containerPort: 8080
              name: http-metrics
            - containerPort: 8081
              name: telemetry
            readinessProbe:
              httpGet:
                path: /
                port: 8081
              initialDelaySeconds: 5
              timeoutSeconds: 5
            securityContext:
              runAsUser: 65534
          nodeSelector:
            kubernetes.io/os: linux
          serviceAccountName: kube-state-metrics

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        prometheus.io/scraped: "true"
      labels:
        app.kubernetes.io/name: kube-state-metrics
        app.kubernetes.io/version: 1.9.8
      name: kube-state-metrics
      namespace: kube-mon
    spec:
      clusterIP: None
      ports:
      - name: http-metrics
        port: 8080
        targetPort: http-metrics
      - name: telemetry
        port: 8081
        targetPort: telemetry
      selector:
        app.kubernetes.io/name: kube-state-metrics

Run kubectl apply -f . to apply these resources and start the kube-state-metrics container and service.

3. Configuring Prometheus to scrape kube-state-metrics

Add the following job to prometheus.yaml:

    - job_name: kube-state-metrics
      kubernetes_sd_configs:
      - role: endpoints
        namespaces:
          names:
          - kube-mon
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
        regex: kube-state-metrics
        replacement: $1
        action: keep
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: k8s_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: k8s_sname

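- role: endpoints discovers the targets automatically from the Service's endpoints

- keep: only targets whose Service carries the label app.kubernetes.io/name: kube-state-metrics are scraped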
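Because the Service above exposes two ports (http-metrics on 8080 and telemetry on 8081), endpoints discovery yields one target per port. If only the main metrics port is wanted, a second keep rule on the endpoint port name could be added, as in this sketch. This is an optional refinement and not part of the job used in this article.

```yaml
relabel_configs:
# Keep only targets that belong to the kube-state-metrics Service ...
- source_labels: [__meta_kubernetes_service_label_app_kubernetes_io_name]
  regex: kube-state-metrics
  action: keep
# ... and, of those, only the port named http-metrics (8080).
- source_labels: [__meta_kubernetes_endpoint_port_name]
  regex: http-metrics
  action: keep
```

A target must pass every keep rule in order, so the telemetry port is dropped by the second rule.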

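After changing the configuration and reloading Prometheus, check the result.

4. Configuring a Grafana template to display the metrics

This template needs data from both cAdvisor and kube-state-metrics, which is why the steps above set up Prometheus to scrape both.

- Download the template

https://grafana.com/grafana/dashboards/13105

- Result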
