First Flink Example: Writing from Flink to Kafka

- Dependencies

- Code

- Running

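Dependencies

1. ZooKeeper

Standalone mode; see: https://blog.csdn.net/sndayYU/article/details/90718238

With the portable (unzip-and-run) distribution, it is basically enough to change the log and data directories in conf/zoo.cfg and create those directories.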
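
As a rough sketch of what that edit might look like, a standalone conf/zoo.cfg could contain the entries below. The D:/tmp/zookeeper paths are placeholders chosen for illustration and are not taken from the referenced post; tickTime and clientPort are the values found in the sample configuration that ships with ZooKeeper.

```properties
# Basic time unit in milliseconds (sample default)
tickTime=2000
# Data (snapshot) directory; placeholder path, adjust to your machine
dataDir=D:/tmp/zookeeper/data
# Transaction log directory; placeholder path, adjust to your machine
dataLogDir=D:/tmp/zookeeper/log
# Port that clients such as Kafka connect to (sample default)
clientPort=2181
```

Forward slashes are used because a backslash is an escape character in Java properties files.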

2. Kafka

Standalone mode; see: https://blog.csdn.net/sndayYU/article/details/90718786

Basically, creating the directory D:\tmp\kafka-logs is all that is needed.
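
The directory name most likely comes from the stock config/server.properties, where log.dirs is set to /tmp/kafka-logs; when the broker is started from drive D:, that path presumably resolves to D:\tmp\kafka-logs. A sketch of the relevant entries, assuming the shipped defaults are left unchanged:

```properties
# Unique id of this broker (shipped default)
broker.id=0
# Where Kafka stores its log segments; on Windows this is relative to the current drive
log.dirs=/tmp/kafka-logs
# ZooKeeper instance started in step 1 (shipped default)
zookeeper.connect=localhost:2181
```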

Code

1. pom.xml

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
    </properties>

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.13</version>
            <scope>test</scope>
        </dependency>
        <!-- All Flink artifacts use the same Scala suffix (_2.11) to avoid mixing Scala versions -->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-kafka_2.11</artifactId>
            <version>1.8.0</version>
        </dependency>
        <!-- compile scope so the job can be started directly from the IDE;
             switch to provided when submitting to a cluster.
             Each artifact is declared only once. -->
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>1.8.0</version>
            <scope>compile</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_2.11</artifactId>
            <version>1.8.0</version>
            <scope>compile</scope>
        </dependency>
    </dependencies>

2. FlinkToKafka

    package com.ydfind;

    import org.apache.flink.api.common.serialization.SimpleStringSchema;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.api.functions.source.SourceFunction;
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer;

    import java.text.SimpleDateFormat;
    import java.util.Date;
    import java.util.Properties;

    /**
     * Flink continuously generates data and sends it to Kafka.
     *
     * @author drd
     * @date 2021.1.29
     */
    public class FlinkToKafka {

        public static void main(String[] args) throws Exception {
            StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

            // Register the data source
            DataStreamSource<String> text = env.addSource(new MyFlinkProducer()).setParallelism(1);

            Properties properties = new Properties();
            properties.setProperty("bootstrap.servers", "localhost:9092");

            // Diamond operator instead of the raw type, so the sink is type-checked
            FlinkKafkaProducer<String> producer =
                    new FlinkKafkaProducer<>("flink-test", new SimpleStringSchema(), properties);
            producer.setWriteTimestampToKafka(true);

            // Attach the Kafka sink
            text.addSink(producer);

            // Start execution
            env.execute();
        }

        public static class MyFlinkProducer implements SourceFunction<String> {

            // volatile: cancel() is called from a different thread than run()
            private volatile boolean running = true;

            @Override
            public void run(SourceContext<String> sourceContext) throws Exception {
                String format = "yyyy-MM-dd HH:mm:ss.SSS";
                SimpleDateFormat sdf = new SimpleDateFormat(format);
                // Emit one timestamped message every 3 seconds until cancelled
                while (running) {
                    String msg = sdf.format(new Date(System.currentTimeMillis()))
                            + ", this is from MySourceProducer";
                    sourceContext.collect(msg);
                    Thread.sleep(3000);
                }
            }

            @Override
            public void cancel() {
                running = false;
            }
        }
    }
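
The run()/cancel() pair in MyFlinkProducer relies on a flag that one thread sets and another thread reads. The following is a minimal plain-JDK sketch of that pattern with no Flink dependency. CancelFlagSketch and TickingSource are hypothetical names introduced for illustration, and the 10 ms interval is chosen only so the example finishes quickly.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class CancelFlagSketch {

    /** Hypothetical stand-in for a source: emits until cancel() is called. */
    static class TickingSource {
        // volatile so the write in cancel() is visible to the thread inside run()
        private volatile boolean running = true;
        private final AtomicInteger emitted = new AtomicInteger();

        void run() throws InterruptedException {
            while (running) {
                emitted.incrementAndGet(); // stands in for sourceContext.collect(msg)
                Thread.sleep(10);
            }
        }

        void cancel() {
            running = false;
        }

        int emittedCount() {
            return emitted.get();
        }
    }

    /** Starts the source on a worker thread, cancels it after the first emission, returns the count. */
    public static int runUntilFirstMessageThenCancel() {
        TickingSource source = new TickingSource();
        Thread worker = new Thread(() -> {
            try {
                source.run();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        worker.start();
        try {
            while (source.emittedCount() == 0) {
                Thread.sleep(1);
            }
            source.cancel();
            worker.join(1000); // run() should return shortly after the flag flips
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for the source", e);
        }
        if (worker.isAlive()) {
            throw new IllegalStateException("source did not stop after cancel()");
        }
        return source.emittedCount();
    }

    public static void main(String[] args) {
        System.out.println("emitted before stop: " + runUntilFirstMessageThenCancel());
    }
}
```

Without volatile, the loop thread is not guaranteed to observe the update made by cancel(), which is why the flag is declared that way in this sketch.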

Running

1. Start ZooKeeper

    win10: .\bin\zkServer.cmd
    CentOS: ./bin/zkServer.sh start

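2. Kafka

The broker has to be running before the topic can be created.

    // Start the Kafka broker (skip if it is already running)
    cd D:\YDGreenNew\kafka_2.12-2.2.0
    bin\windows\kafka-server-start.bat config\server.properties

    // Create the Kafka topic (in a second console, from the same directory)
    bin\windows\kafka-topics.bat --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic flink-test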

3. Run the code in IDEA

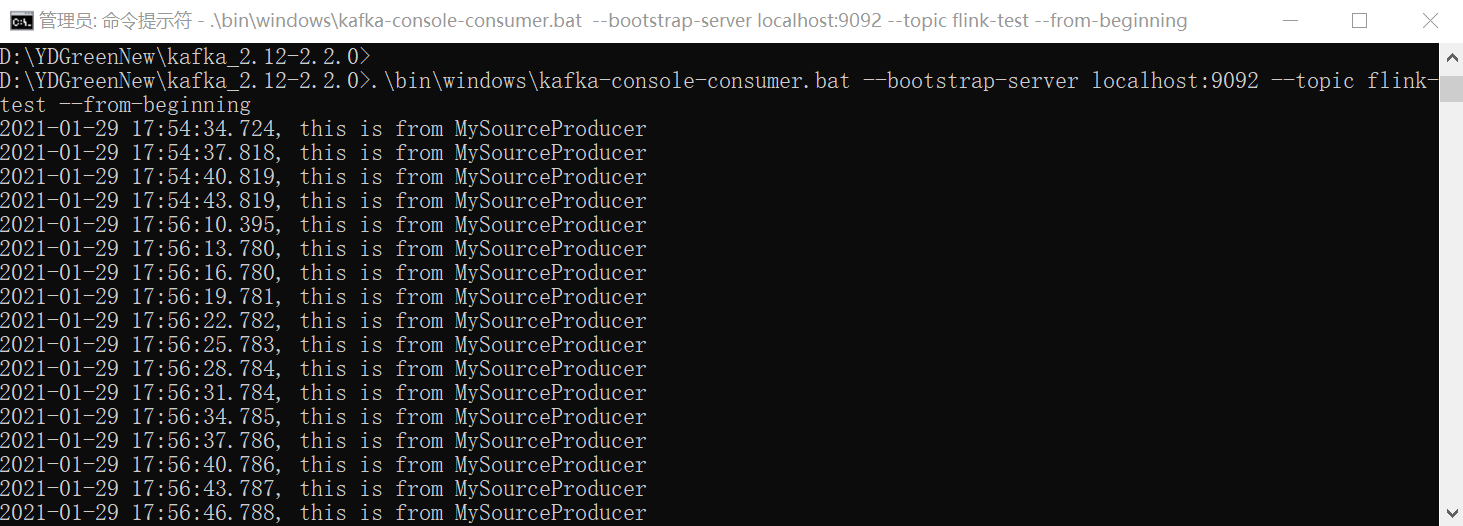

4. Watch the Kafka consumer output

    // Start a console consumer to observe the results
    .\bin\windows\kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic flink-test --from-beginning
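
Each line printed by the consumer should have the shape produced by the source: a timestamp in yyyy-MM-dd HH:mm:ss.SSS format, a comma, and the fixed text. As a small sketch for checking such lines, the plain-JDK helper below rebuilds a message the same way and parses the timestamp back out. ConsumedLineSketch and its method names are hypothetical and not part of the original project.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;

public class ConsumedLineSketch {

    // Same pattern string as in MyFlinkProducer
    static final String FORMAT = "yyyy-MM-dd HH:mm:ss.SSS";

    /** Builds a message the same way the source does, for a given epoch-millisecond value. */
    public static String buildMessage(long epochMillis) {
        return new SimpleDateFormat(FORMAT).format(new Date(epochMillis))
                + ", this is from MySourceProducer";
    }

    /** Parses the leading timestamp of a consumed line back into epoch milliseconds. */
    public static long parseTimestamp(String line) {
        // The timestamp pattern contains no comma, so the first comma ends it
        String stamp = line.substring(0, line.indexOf(','));
        try {
            return new SimpleDateFormat(FORMAT).parse(stamp).getTime();
        } catch (ParseException e) {
            throw new IllegalArgumentException("unexpected line format: " + line, e);
        }
    }

    /** Returns the time difference in milliseconds between two consumed lines. */
    public static long gapMillis(String earlierLine, String laterLine) {
        return parseTimestamp(laterLine) - parseTimestamp(earlierLine);
    }

    public static void main(String[] args) {
        String first = buildMessage(System.currentTimeMillis());
        String second = buildMessage(System.currentTimeMillis() + 3000);
        System.out.println(first);
        System.out.println(second);
        System.out.println("gap in ms: " + gapMillis(first, second));
    }
}
```

Because the source sleeps 3000 ms between messages, consecutive lines from the consumer would be expected to be roughly three seconds apart.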
