Kafka error: "which is larger than the maximum request size you have configured with the max.request......."

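I. By default, Kafka limits a single message to about 1 MB. If you do not change the configuration, sending a message larger than 1 MB fails with an error.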

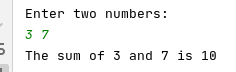

[2018-07-03 14:49:38,411] ERROR Error when sending message to topic testTopic with key: null, value: 2095476 bytes with error: (org.apache.kafka.clients.producer.internals.ErrorLoggingCallback)org.apache.kafka.common.errors.RecordTooLargeException: The message is 2095510 bytes when serialized which is larger than the maximum request size you have configured with the max.request.size configuration.
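To reproduce the error by hand, pipe a single line of about 2 MB into the console producer. The console producer treats each input line as one message. This is a minimal sketch that assumes a broker at localhost:9092 and the testTopic topic from the log. make_payload is a hypothetical helper. --broker-list is the flag name used by console producers of that era; newer releases also accept --bootstrap-server.

```shell
# Hypothetical helper: print a single line of $1 'a' characters plus a trailing newline,
# so the console producer sends it as exactly one message.
make_payload() {
  head -c "$1" /dev/zero | tr '\0' 'a'
  echo
}

# Assumed broker address and topic; a 2 MB line exceeds the 1 MB default max.request.size.
# make_payload 2097152 | bin/kafka-console-producer.sh --broker-list localhost:9092 --topic testTopic
```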

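On our real-time computing platform, the symptom was that dbus failed while pulling data: of more than 30,000 records, only a few made it into Kafka.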

pull error! dsKey: dcmall_prd.sys_public_user_1614823566880, splitIndex: 1, kafka send exception!

Detailed log

II. Configuration changes

1. Add the following to server.properties:

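message.max.bytes=5242880 (5 MB): the largest message the broker will accept.

replica.fetch.max.bytes=6291456 (6 MB): the number of message bytes each partition tries to fetch when followers replicate from the leader. It must be greater than or equal to message.max.bytes.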
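The broker settings can also be applied with a script instead of by hand. This is a sketch that assumes GNU or BSD sed. add_broker_limits is a hypothetical helper name. It first deletes any existing definitions of the two keys, so running it twice does not leave duplicate keys. It also leaves a .bak copy of the original file next to it.

```shell
# Hypothetical helper: set the two broker limits from step 1 in the given server.properties file.
add_broker_limits() {
  local conf="$1"
  # Remove existing definitions (with or without spaces around '='); sed keeps "$conf.bak"
  sed -i.bak \
    -e '/^[[:space:]]*message\.max\.bytes[[:space:]]*=/d' \
    -e '/^[[:space:]]*replica\.fetch\.max\.bytes[[:space:]]*=/d' \
    "$conf" || return 1
  # Make sure the file ends with a newline so the appended keys start on their own lines
  if [ -s "$conf" ] && [ -n "$(tail -c 1 "$conf")" ]; then echo >> "$conf"; fi
  # 5 MB per message; the replica fetch size (6 MB) must be >= message.max.bytes
  cat >> "$conf" <<'EOF'
message.max.bytes=5242880
replica.fetch.max.bytes=6291456
EOF
}

# Usage (path assumed, run from the Kafka install directory):
# add_broker_limits config/server.properties
```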

2. Add the following to producer.properties:

max.request.size = 5242880 (5 MB): the maximum size of a request, in bytes. It should not be larger than message.max.bytes.
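The two sizes in the error log allow a quick arithmetic check. The producer compares the serialized size of the record against max.request.size, not just the size of the value. fits_request is a hypothetical helper. The 34-byte difference applies only to this particular record, which has a null key, so do not read it as a fixed overhead.

```shell
# Sizes copied from the RecordTooLargeException log in section I
value_bytes=2095476        # "value: 2095476 bytes"
serialized_bytes=2095510   # "The message is 2095510 bytes when serialized"

# Hypothetical helper: succeed if the serialized record fits under the given max.request.size
fits_request() {
  [ "$serialized_bytes" -le "$1" ]
}

echo "overhead for this record: $((serialized_bytes - value_bytes)) bytes"
for limit in 1048576 5242880; do   # 1 MB producer default, then the 5 MB setting above
  if fits_request "$limit"; then
    echo "max.request.size=$limit: accepted"
  else
    echo "max.request.size=$limit: RecordTooLargeException"
  fi
done
```

Running it reports 34 bytes of overhead. It also shows that the 1 MB default rejects the record while the 5 MB setting accepts it.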

3. Add the following to consumer.properties:

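fetch.message.max.bytes=6291456 (6 MB): the number of message bytes to fetch for each topic partition in each fetch request. It must be greater than or equal to message.max.bytes. (This is the old consumer's setting; the newer Java consumer uses max.partition.fetch.bytes instead.)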
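You can check the size rules from steps 1 to 3 before restarting anything. This sketch reads the three files and reports every rule that is violated. get_prop and check_limits are hypothetical helper names. The sketch handles only simple key=value lines and expects all four keys to be present.

```shell
# Hypothetical helper: print the last value assigned to key $2 in properties file $1.
# Whitespace around the key and value is ignored; commented-out lines never match.
get_prop() {
  awk -F= -v key="$2" '
    { k = $1; gsub(/[ \t\r]/, "", k) }
    k == key { v = substr($0, index($0, "=") + 1) }
    END { gsub(/[ \t\r]/, "", v); print v }
  ' "$1"
}

# Hypothetical helper: $1 = server.properties, $2 = producer.properties, $3 = consumer.properties.
# Returns 0 if all rules hold, 1 if any rule is violated, 2 if a value is missing or non-numeric.
check_limits() {
  local msg replica req fetch v status=0
  msg=$(get_prop "$1" message.max.bytes)
  replica=$(get_prop "$1" replica.fetch.max.bytes)
  req=$(get_prop "$2" max.request.size)
  fetch=$(get_prop "$3" fetch.message.max.bytes)
  for v in "$msg" "$replica" "$req" "$fetch"; do
    case "$v" in ''|*[!0-9]*) echo "missing or non-numeric setting" >&2; return 2 ;; esac
  done
  if [ "$replica" -lt "$msg" ]; then echo "replica.fetch.max.bytes < message.max.bytes" >&2; status=1; fi
  if [ "$req" -gt "$msg" ]; then echo "max.request.size > message.max.bytes" >&2; status=1; fi
  if [ "$fetch" -lt "$msg" ]; then echo "fetch.message.max.bytes < message.max.bytes" >&2; status=1; fi
  return "$status"
}

# Usage (paths assumed):
# check_limits config/server.properties config/producer.properties config/consumer.properties
```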

III. Restart

1. The broker settings only take effect after you restart the Kafka server.

1.1. First, stop Kafka, using either of the following methods:

a. Run the command: bin/kafka-server-stop.sh

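b. Find the Kafka process with ps -ef | grep kafka, then kill the broker's Java process. The output also includes a line for the grep command itself; ignore it.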

1.2. Start the Kafka server:

nohup bin/kafka-server-start.sh config/server.properties&
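kafka-server-stop.sh only signals the broker and returns before shutdown has finished. Starting the broker again immediately can therefore race with the old process. This minimal restart sketch waits for the old JVM to exit before starting a new one. It makes several assumptions. KAFKA_HOME is a hypothetical variable that points at the install directory, and pgrep must be available. The broker is identified by the main class kafka.Kafka on its command line. wait_for_broker_exit and restart_kafka are hypothetical helper names. The 60-second timeout and the kafka-console.log file name are arbitrary choices.

```shell
# Hypothetical helper: wait until no process matches pattern $1, giving up after $2 seconds.
wait_for_broker_exit() {
  local pattern="$1" timeout="$2" waited=0
  while pgrep -f "$pattern" >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "broker still running after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}

# Hypothetical helper: stop, wait, then start the broker in the background.
# The body is a subshell, so the cd does not change the caller's directory.
restart_kafka() (
  cd "${KAFKA_HOME:?set KAFKA_HOME to the Kafka install directory}" || exit 1
  bin/kafka-server-stop.sh
  # The [k] bracket stops the pattern from matching a shell whose own command line contains it
  wait_for_broker_exit '[k]afka\.Kafka' 60 || exit 1
  # Keep console output in a named file instead of nohup.out
  nohup bin/kafka-server-start.sh config/server.properties > kafka-console.log 2>&1 &
)
```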

After the restart, pulling data with dbus worked again, and the problem was resolved.
