Reading Excel spreadsheets with Spark

References: https://blog.csdn.net/qq_38689769/article/details/79471332

References: https://blog.csdn.net/Dr_Guo/article/details/77374403?locationNum=9&fps=1

pom.xml:

```xml
<!-- Read Excel files -->
<dependency>
    <groupId>org.apache.poi</groupId>
    <artifactId>poi</artifactId>
    <version>3.10-FINAL</version>
</dependency>
<dependency>
    <groupId>org.apache.poi</groupId>
    <artifactId>poi-ooxml</artifactId>
    <version>3.10-FINAL</version>
</dependency>
```

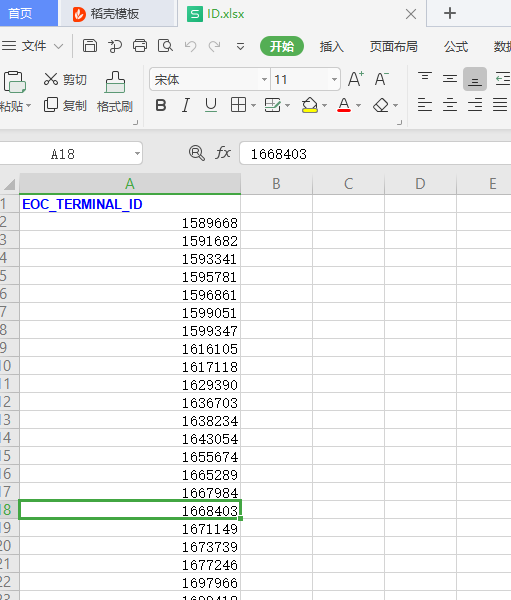

Data:

Code:

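```scala
import java.io.FileInputStream

import com.emg.join.model.{AA, BB}
import org.apache.poi.ss.usermodel.Cell
import org.apache.poi.xssf.usermodel.XSSFWorkbook
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

import scala.collection.mutable.ListBuffer

object Excels {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf()
      .setAppName("join")
      .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
      .setMaster("local[*]")
      // Register the case classes themselves; AA.getClass would register the companion object's class
      .registerKryoClasses(Array[Class[_]](classOf[AA], classOf[BB]))
    val spark = SparkSession.builder().config(conf).getOrCreate()
    import spark.implicits._ // provides the Encoder for BB used by createDataset

    val filePath = "c:\\user\\id.xlsx"
    //val filePath1 = "hdfs://192.168.40.0:9000/user/id.xlsx"
    // outPath is not defined in the original; assumed here to be the first command-line argument
    val outPath = args(0)

    val fs = new FileInputStream(filePath)
    // XSSFWorkbook(InputStream) reads the whole file into memory, so the stream can be closed right away
    val workbook = try new XSSFWorkbook(fs) finally fs.close()
    val sheet = workbook.getSheetAt(0) // first sheet

    val data = new ListBuffer[BB]()
    // Row 0 is the header; getLastRowNum is the 0-based index of the last row,
    // which (unlike getPhysicalNumberOfRows) stays correct when there are empty rows
    for (i <- 1 to sheet.getLastRowNum) {
      val row = sheet.getRow(i)
      if (row != null) {
        val cellwellname: Cell = row.getCell(0) // cell in the first column of the current row
        // The same field may hold different cell types; only numeric cells are converted here
        var wellname = 0L
        if (cellwellname != null && cellwellname.getCellType == Cell.CELL_TYPE_NUMERIC) {
          wellname = cellwellname.getNumericCellValue.toLong
        }
        data += BB(wellname)
      }
    }

    val data1 = spark.createDataset(data)
    data1.createTempView("data1")
    val result = spark.sql("select * from data1").coalesce(1)
    result.rdd.saveAsTextFile(outPath)
    spark.stop()
  }
}
```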
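The loop comment mentions handling different data types in the same field, but only numeric cells are converted. A text cell such as "1001" therefore silently becomes 0. Below is a minimal sketch of a helper that also accepts text cells. It assumes POI 3.x (3.10-FINAL, as in the pom), where `getCellType` returns an `int` compared against the `Cell.CELL_TYPE_*` constants; POI 4 replaced these with the `CellType` enum. The names `CellValues` and `cellToLong` are hypothetical.

```scala
import org.apache.poi.ss.usermodel.Cell

import scala.util.Try

// Hypothetical helper (POI 3.x API): reads an ID cell as Long whether it was stored
// as a number or as text. Returns None for missing, blank or unparsable cells.
object CellValues {
  def cellToLong(cell: Cell): Option[Long] =
    if (cell == null) None
    else cell.getCellType match {
      case Cell.CELL_TYPE_NUMERIC => Some(cell.getNumericCellValue.toLong)
      case Cell.CELL_TYPE_STRING =>
        val s = cell.getStringCellValue.trim
        if (s.isEmpty) None else Try(s.toLong).toOption
      case _ => None // blank, boolean, formula and error cells are not converted
    }
}
```

Inside the loop, `data += BB(CellValues.cellToLong(row.getCell(0)).getOrElse(0L))` would keep the original default of 0 for cells that cannot be read as a number.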

Note:

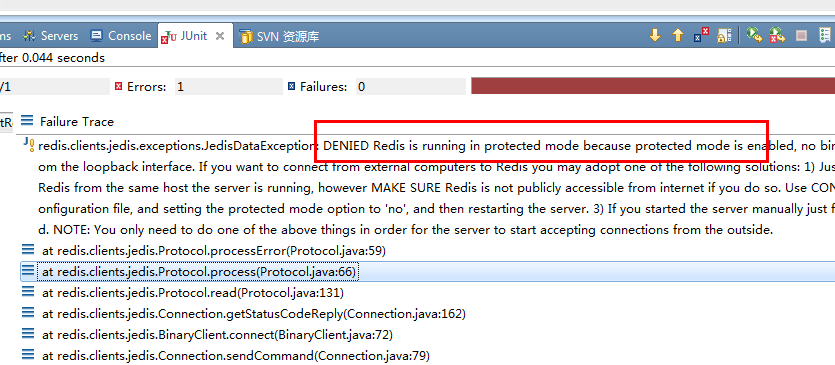

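With a local path, the program runs fine. With an HDFS path, it fails with a "path not found" error that looked like an escape-character problem. I searched for information but still could not solve it.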

If you know a fix, please leave a reply.
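A likely cause, offered as a hedged suggestion rather than a confirmed diagnosis: `java.io.FileInputStream` only opens files on the local file system. A string such as `hdfs://192.168.40.0:9000/user/id.xlsx` is therefore treated as a local file name and is not found, so escaping is probably not the issue. Hadoop's `FileSystem` API instead picks the file system from the scheme in the path.

The sketch below assumes the Hadoop client classes are on the classpath, which is normally the case in a Spark application. The names `HdfsExcelReader` and `openWorkbook` are hypothetical.

```scala
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.fs.Path
import org.apache.poi.xssf.usermodel.XSSFWorkbook

// Hypothetical helper: opens an .xlsx file through Hadoop's FileSystem API,
// so both local paths and hdfs:// URIs can be read.
object HdfsExcelReader {
  def openWorkbook(pathStr: String, hadoopConf: Configuration): XSSFWorkbook = {
    val path = new Path(pathStr)
    // The scheme (hdfs://, file://, or none = fs.defaultFS) selects the FileSystem implementation
    val fs = path.getFileSystem(hadoopConf)
    val in = fs.open(path)
    // XSSFWorkbook(InputStream) reads the whole stream, so it is safe to close it afterwards
    try new XSSFWorkbook(in) finally in.close()
  }
}
```

In `Excels`, the two `FileInputStream` lines would then become `val workbook = HdfsExcelReader.openWorkbook(filePath1, spark.sparkContext.hadoopConfiguration)`. Reusing Spark's Hadoop configuration means any HDFS settings already given to Spark also apply here.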
