Installing and Using mmdetection (Windows 10)

I. Installation

(1) Create a virtual environment:

conda create -n mmdec python=3.6

Done.

(2) Activate the virtual environment and install torch (mmdetection currently requires torch 1.1.0 or later)

activate mmdec

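Installing with pip from the official site (https://pytorch.org/) turned out to be reasonably fast:

pip install http://download.pytorch.org/whl/cu100/torch-1.1.0-cp36-cp36m-win_amd64.whl (the one I used)

If the download times out, raise pip's timeout (in seconds) so that it waits longer:

pip --default-timeout=600 install ******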
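Because mmdetection needs torch 1.1.0 or later, it can be worth checking the installed version once pip finishes. The following is a minimal sketch: `parse_version` and `meets_minimum` are hypothetical helpers written for this post (they are not part of torch or mmdetection), and they simply compare the leading numeric parts of a version string, ignoring local suffixes such as `+cu100`.

```python
import re

MIN_TORCH = (1, 1, 0)  # minimum torch version stated in this post


def parse_version(text):
    """Return the leading numeric components of a version string as a tuple."""
    public = text.split("+")[0]  # drop local build tags such as "+cu100"
    parts = []
    for piece in public.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version_text, minimum=MIN_TORCH):
    """True if version_text is at least `minimum` (missing parts count as 0)."""
    parsed = parse_version(version_text)
    padded = parsed + (0,) * (len(minimum) - len(parsed))
    return padded >= minimum


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("torch is not installed in this environment")
    else:
        status = "OK" if meets_minimum(torch.__version__) else "too old"
        print(torch.__version__, status)
        print("CUDA available:", torch.cuda.is_available())
```

Run it inside the activated mmdec environment; if torch is missing it only prints a message instead of failing.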

Wheel URLs for other versions:

https://download.pytorch.org/whl/cpu/torch-1.1.0-cp36-cp36m-win_amd64.whl (Windows, CPU)

https://download.pytorch.org/whl/cu100/torch-1.1.0-cp36-cp36m-win_amd64.whl (Windows, CUDA 10.0)

https://download.pytorch.org/whl/cu90/torch-1.1.0-cp36-cp36m-win_amd64.whl (Windows, CUDA 9.0)

pip install https://download.pytorch.org/whl/cu100/torch-1.0.1-cp35-cp35m-linux_x86_64.whl (Linux, CUDA 10.0)
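The links above all follow the same naming pattern, so a small helper can assemble one for a given setup. This is only a sketch: `torch_wheel_url` is a hypothetical function, the pattern is inferred from the links in this post, and not every combination of version, CUDA build, and Python tag necessarily exists on the server.

```python
BASE = "https://download.pytorch.org/whl"


def torch_wheel_url(version, compute, python_tag, platform_tag):
    """Assemble a wheel URL following the pattern of the links in this post.

    compute:      "cpu", "cu90" or "cu100"
    python_tag:   e.g. "cp36" for Python 3.6
    platform_tag: e.g. "win_amd64" or "linux_x86_64"
    """
    filename = "torch-{v}-{py}-{py}m-{plat}.whl".format(
        v=version, py=python_tag, plat=platform_tag)
    return "{}/{}/{}".format(BASE, compute, filename)


print(torch_wheel_url("1.1.0", "cu100", "cp36", "win_amd64"))
```

The printed URL can be passed straight to pip install.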

pip install PyYAML -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install cython

pip install scikit-image

pip install torchvision

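On Windows, pycocotools has to be built from https://github.com/philferriere/cocoapi. Download the repository, go into the PythonAPI folder, and run:

python setup.py install

Then install any libraries that are still missing, using any of the mirrors listed below:

pip install <library name> -i https://pypi.tuna.tsinghua.edu.cn/simple

V2EX: http://pypi.v2ex.com/simple

Douban: http://pypi.douban.com/simple

University of Science and Technology of China: http://pypi.mirrors.ustc.edu.cn/simple

Tsinghua: https://pypi.tuna.tsinghua.edu.cn/simple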

(3) Install mmcv

It can be installed directly with pip: pip install mmcv

Previously I installed it from source as follows.

Download: https://github.com/open-mmlab/mmcv

pip install .

Addendum, a problem I ran into after reinstalling the computer:

anaconda3\envs\torch\lib\site-packages\pip\compat\__init__.py", line 75, in console_to_str

return s.decode('utf_8')

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xa1 in position 60: invalid start byte

The cause turned out to be an outdated pip. Upgrading pip fixed it:

python -m pip install --upgrade pip
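To see why this kind of error appears, the snippet below reproduces the same failure in isolation. It is a sketch under an assumption the post does not confirm: that the console was emitting GBK-encoded text (common on Chinese-language Windows), which old pip then tried to decode as UTF-8. The two-byte sample is made up for the demonstration.

```python
raw = b"\xa1\xa1"  # valid GBK (an ideographic space), but not valid UTF-8

try:
    raw.decode("utf_8")
except UnicodeDecodeError as err:
    utf8_error = str(err)  # same kind of message as in the traceback
else:
    utf8_error = None

as_gbk = raw.decode("gbk")  # decoding with the matching codec succeeds

print(utf8_error)
print(repr(as_gbk))
```

The first print shows an "invalid start byte" message like the one in the traceback, while the GBK decode goes through.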

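(4) Install mmdetection

mmdetection 1.x: if you use VS2015 with a custom installation, Update 3 must be selected, otherwise this step fails.

Download: https://github.com/open-mmlab/mmdetection

Install with the following command:

python setup.py develop -i https://pypi.tuna.tsinghua.edu.cn/simple

mmdetection 2.x: building with my existing VS2015 failed, so I installed VS2019, only to find that my CUDA version did not support it. That was painful.

I debated whether to upgrade CUDA or uninstall VS2019, and decided to install VS2017 and try again.

To be continued... Version 2.1 fails to build, so for now I installed 2.0.

On Ubuntu, the same installation procedure as for 1.x works without problems.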

(5) Test

from mmdet.apis import init_detector, inference_detector, show_result
config_file = './configs/faster_rcnn_r50_fpn_1x.py'
checkpoint_file = './checkpoints/faster_rcnn_r50_fpn_1x_20181010-3d1b3351.pth'
model = init_detector(config_file, checkpoint_file)
img = 'demo.jpg'
result = inference_detector(model, img)
show_result(img, result, model.CLASSES)

Problems encountered:

0. ImportError: cannot import name 'get_dist_info'

The mmcv version and the mmdetection version do not match.

1. During testing: AttributeError: module 'torch.distributed' has no attribute 'is_initialized'. Locate the line that raises the error and modify it.
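The post does not show what the modification looked like. One possible way to guard the failing call is sketched below: `get_dist_info_safe` is a hypothetical replacement that falls back to a single-process result when the module passed in has no `is_initialized`. The `SimpleNamespace` objects are stand-ins for `torch.distributed`, so that the sketch runs without torch.

```python
from types import SimpleNamespace


def get_dist_info_safe(dist):
    """Return (rank, world_size).

    Falls back to (0, 1) when `dist` has no is_initialized attribute
    or when the process group has not been initialized.
    """
    is_initialized = getattr(dist, "is_initialized", None)
    if is_initialized is not None and is_initialized():
        return dist.get_rank(), dist.get_world_size()
    return 0, 1


# Stand-in for a torch.distributed build without is_initialized.
no_dist_support = SimpleNamespace()

# Stand-in for an initialized process group (rank 2 of 4).
initialized = SimpleNamespace(
    is_initialized=lambda: True,
    get_rank=lambda: 2,
    get_world_size=lambda: 4,
)

print(get_dist_info_safe(no_dist_support))
print(get_dist_info_safe(initialized))
```

In real code the argument would be `torch.distributed`; the fallback treats the run as a single, non-distributed process.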

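------------------------------ divider ------------------------------

Training on your own dataset

1. Dataset preparation:

I have always used datasets in VOC format, so I train directly on a VOC-style dataset.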

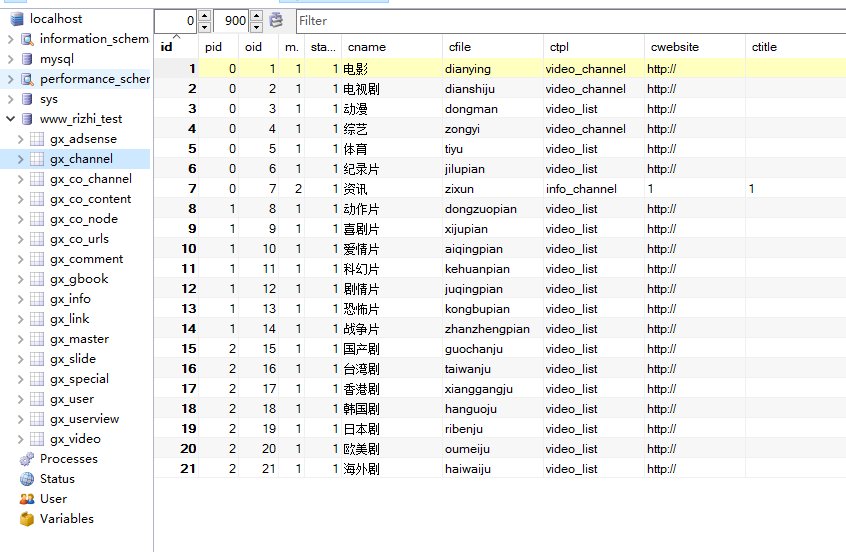
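Before training, it can help to confirm that the folder layout matches what the config expects. The sketch below checks for the split files referenced in the config (VOC2007/ImageSets/Main/train.txt, val.txt, test.txt) plus the Annotations and JPEGImages folders. Those two folder names come from the standard VOC layout and are an assumption here, and both helper functions are hypothetical.

```python
import os
import tempfile


def expected_voc_paths(data_root, year_dir="VOC2007",
                       splits=("train", "val", "test")):
    """List the folders and split files a VOC-style dataset should contain."""
    root = os.path.join(data_root, year_dir)
    paths = [os.path.join(root, "Annotations"),
             os.path.join(root, "JPEGImages")]
    paths += [os.path.join(root, "ImageSets", "Main", s + ".txt")
              for s in splits]
    return paths


def missing_voc_paths(data_root):
    """Return the expected paths that do not exist under data_root."""
    return [p for p in expected_voc_paths(data_root) if not os.path.exists(p)]


# Demonstration on a temporary directory instead of a real dataset.
with tempfile.TemporaryDirectory() as tmp:
    before = missing_voc_paths(tmp)  # nothing exists yet
    for path in expected_voc_paths(tmp):
        if path.endswith(".txt"):
            os.makedirs(os.path.dirname(path), exist_ok=True)
            open(path, "w").close()
        else:
            os.makedirs(path, exist_ok=True)
    after = missing_voc_paths(tmp)  # everything is in place now

print(len(before), after)
```

For a real check, call `missing_voc_paths` with your own data_root, for example the 'E:/mm/VOCdevkit/' used in the config.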

2. In the mmdet/datasets folder, add your own dataset

1) Add your dataset in __init__

2) Copy voc and rename the copy to your own MyData.py (the places to change are marked in red)
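The screenshot with the red marks is not reproduced here. Presumably the main thing to change in MyData.py is the tuple of class names, and the number of names has to agree with num_classes in the config, which is commented there as the number of classes plus one. A minimal sketch, with hypothetical class names:

```python
# Hypothetical class names; use the labels that appear in your XML annotations.
CLASSES = ("cat", "dog")

# The config counts the background as one extra class.
num_classes = len(CLASSES) + 1

print(num_classes)  # 3
```

With two classes this gives 3, which matches the num_classes=3 used in the config of this post.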

3. Modify the config file in configs

# model settings
model = dict(
    type='FasterRCNN',
    pretrained='torchvision://resnet50',
    backbone=dict(
        type='ResNet',
        depth=50,
        num_stages=4,
        out_indices=(0, 1, 2, 3),
        frozen_stages=1,
        style='pytorch'),
    neck=dict(
        type='FPN',
        in_channels=[256, 512, 1024, 2048],
        out_channels=256,
        num_outs=5),
    rpn_head=dict(
        type='RPNHead',
        in_channels=256,
        feat_channels=256,
        anchor_scales=[8],
        anchor_ratios=[0.5, 1.0, 2.0],
        anchor_strides=[4, 8, 16, 32, 64],
        target_means=[.0, .0, .0, .0],
        target_stds=[1.0, 1.0, 1.0, 1.0],
        loss_cls=dict(
            type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),
        loss_bbox=dict(type='SmoothL1Loss', beta=1.0 / 9.0, loss_weight=1.0)),
    bbox_roi_extractor=dict(
        type='SingleRoIExtractor',
        roi_layer=dict(type='RoIAlign', out_size=7, sample_num=2),
        out_channels=256,
        featmap_strides=[4, 8, 16, 32]),
    bbox_head=dict(
        type='SharedFCBBoxHead',
        num_fcs=2,
        in_channels=256,
        fc_out_channels=1024,
        roi_feat_size=7,
        num_classes=3,  # number of classes + 1
        target_means=[0., 0., 0., 0.],
        target_stds=[0.1, 0.1, 0.2, 0.2],
        reg_class_agnostic=False,
        loss_cls=dict(
            type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),
        loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0)))
# model training and testing settings
train_cfg = dict(
    rpn=dict(
        assigner=dict(
            type='MaxIoUAssigner',
            pos_iou_thr=0.7,
            neg_iou_thr=0.3,
            min_pos_iou=0.3,
            ignore_iof_thr=-1),
        sampler=dict(
            type='RandomSampler',
            num=256,
            pos_fraction=0.5,
            neg_pos_ub=-1,
            add_gt_as_proposals=False),
        allowed_border=0,
        pos_weight=-1,
        debug=False),
    rpn_proposal=dict(
        nms_across_levels=False,
        nms_pre=2000,
        nms_post=2000,
        max_num=2000,
        nms_thr=0.7,
        min_bbox_size=0),
    rcnn=dict(
        assigner=dict(
            type='MaxIoUAssigner',
            pos_iou_thr=0.5,
            neg_iou_thr=0.5,
            min_pos_iou=0.5,
            ignore_iof_thr=-1),
        sampler=dict(
            type='RandomSampler',
            num=512,
            pos_fraction=0.25,
            neg_pos_ub=-1,
            add_gt_as_proposals=True),
        pos_weight=-1,
        debug=False))
test_cfg = dict(
    rpn=dict(
        nms_across_levels=False,
        nms_pre=1000,
        nms_post=1000,
        max_num=1000,
        nms_thr=0.7,
        min_bbox_size=0),
    rcnn=dict(
        score_thr=0.05, nms=dict(type='nms', iou_thr=0.5), max_per_img=100)
    # soft-nms is also supported for rcnn testing
    # e.g., nms=dict(type='soft_nms', iou_thr=0.5, min_score=0.05)
)
# dataset settings
dataset_type = 'MyData'
data_root = 'E:/mm/VOCdevkit/'
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
data = dict(
    imgs_per_gpu=2,
    workers_per_gpu=2,
    train=dict(
        type='RepeatDataset',  # to avoid reloading datasets frequently
        times=3,
        dataset=dict(
            type=dataset_type,
            ann_file=[data_root + 'VOC2007/ImageSets/Main/train.txt'],
            img_prefix=[data_root + 'VOC2007/'],
            img_scale=(400, 400),
            img_norm_cfg=img_norm_cfg,
            size_divisor=32,
            flip_ratio=0.5,
            with_mask=False,
            with_crowd=False,
            with_label=True)),
    val=dict(
        type=dataset_type,
        ann_file=data_root + 'VOC2007/ImageSets/Main/val.txt',
        img_prefix=data_root + 'VOC2007/',
        img_scale=(400, 400),
        img_norm_cfg=img_norm_cfg,
        size_divisor=32,
        flip_ratio=0,
        with_mask=False,
        with_crowd=False,
        with_label=True),
    test=dict(
        type=dataset_type,
        ann_file=data_root + 'VOC2007/ImageSets/Main/test.txt',
        img_prefix=data_root + 'VOC2007/',
        img_scale=(400, 400),
        img_norm_cfg=img_norm_cfg,
        size_divisor=32,
        flip_ratio=0,
        with_mask=False,
        with_crowd=False,
        with_label=False,
        test_mode=True))
# optimizer
optimizer = dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001)
optimizer_config = dict(grad_clip=dict(max_norm=35, norm_type=2))
# learning policy
lr_config = dict(
    policy='step',
    warmup='linear',
    warmup_iters=500,
    warmup_ratio=1.0 / 3,
    step=[8, 11])
checkpoint_config = dict(interval=1)
# yapf:disable
log_config = dict(
    interval=100,
    hooks=[
        dict(type='TextLoggerHook'),
        # dict(type='TensorboardLoggerHook')
    ])
# yapf:enable
# runtime settings
total_epochs = 12
dist_params = dict(backend='nccl')
log_level = 'INFO'
work_dir = 'model'
load_from = None
resume_from = None
workflow = [('train', 1)]
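The lr_config entry in the config combines a linear warmup with step decay. The function below is a rough sketch of how I read that schedule, not the library's implementation: it assumes the learning rate is multiplied by 0.1 at each listed epoch (this `gamma` value does not appear in the config), that epochs are counted from 0, and that warmup ramps linearly from warmup_ratio times the learning rate up to the full value over the first warmup_iters iterations. `lr_at` is a hypothetical name.

```python
def lr_at(epoch, cur_iter, base_lr=0.02, warmup_iters=500,
          warmup_ratio=1.0 / 3, steps=(8, 11), gamma=0.1):
    """Approximate learning rate at a given epoch and global iteration.

    Defaults mirror the optimizer and lr_config values in the config;
    gamma=0.1 is an assumed decay factor.
    """
    # Step decay: one factor of gamma for every milestone already reached.
    decays = sum(1 for s in steps if epoch >= s)
    regular_lr = base_lr * gamma ** decays
    # Linear warmup during the first warmup_iters iterations.
    if cur_iter < warmup_iters:
        k = (1 - cur_iter / warmup_iters) * (1 - warmup_ratio)
        return regular_lr * (1 - k)
    return regular_lr


for epoch, it in [(0, 0), (0, 250), (0, 500), (8, 100000), (11, 100000)]:
    print(epoch, it, round(lr_at(epoch, it), 6))
```

Under these assumptions the rate starts at one third of 0.02, reaches 0.02 after 500 iterations, and drops by a factor of ten at epochs 8 and 11.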

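4. Train with train.py in tools

In train.py, change the config argument to --config="config file", so that the config file path is passed as an option.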
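As far as I can tell, the change amounts to turning the positional config argument into an optional one with a default, so the script can be started without command-line arguments (for example from an IDE). The sketch below shows the idea with plain argparse. `build_parser` is a hypothetical helper, and the default path is borrowed from the inference example earlier in this post, not taken from the actual training setup.

```python
import argparse


def build_parser(default_config="./configs/faster_rcnn_r50_fpn_1x.py"):
    """Parser with an optional --config instead of a positional config."""
    parser = argparse.ArgumentParser(description="Train a detector")
    # Positional form would be: parser.add_argument("config", ...)
    parser.add_argument("--config", default=default_config,
                        help="train config file path")
    return parser


# No arguments given: the default config path is used.
args = build_parser().parse_args([])
print(args.config)

# An explicit path still overrides the default.
custom = build_parser().parse_args(["--config", "my_config.py"])
print(custom.config)
```

With this form, running the script with no arguments uses the default path, and --config can still point at another file.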

5. Test

Download the weights

Common problems (ones I ran into):

1. During training: KeyError: 'None is not in the dataset registry'

The changes under mmdet/datasets were incomplete.
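A registry lookup of this kind fails whenever the dataset class named in the config was never registered or imported. The toy example below illustrates the mechanism only: `ToyRegistry` is a made-up stand-in written for this post, not mmdetection's registry, and its error message is merely modelled on the one above.

```python
class ToyRegistry:
    """Minimal name-to-class registry, for illustration only."""

    def __init__(self, name):
        self.name = name
        self._classes = {}

    def register(self, cls):
        # Used as a decorator: store the class under its own name.
        self._classes[cls.__name__] = cls
        return cls

    def build(self, cfg):
        cfg = dict(cfg)
        type_name = cfg.pop("type")
        if type_name not in self._classes:
            raise KeyError("{} is not in the {} registry".format(
                type_name, self.name))
        return self._classes[type_name](**cfg)


DATASETS = ToyRegistry("dataset")


@DATASETS.register
class MyData:
    def __init__(self, ann_file):
        self.ann_file = ann_file


# Registered class: the lookup succeeds.
built = DATASETS.build(dict(type="MyData", ann_file="train.txt"))

# Unregistered name: the lookup raises KeyError.
try:
    DATASETS.build(dict(type="Unregistered", ann_file="train.txt"))
except KeyError as err:
    lookup_error = err.args[0]

print(type(built).__name__, "|", lookup_error)
```

In the real project, the equivalent check is that MyData.py defines the class and that __init__ in mmdet/datasets imports it.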

2. After the first epoch finished and the weights were being saved: OSError: symbolic link privilege not held

Run PyCharm as administrator.
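The error message suggests that saving a checkpoint also creates a symbolic link, which Windows refuses without the required privilege. Running as administrator is the fix used here. For scripts of your own, another option is to fall back to copying the file when the link cannot be created, as in the sketch below. `link_or_copy` is a hypothetical helper and the file names are only illustrative.

```python
import os
import shutil
import tempfile


def link_or_copy(src, dst):
    """Point dst at src with a symlink; copy the file if that is not permitted."""
    if os.path.lexists(dst):
        os.remove(dst)
    try:
        os.symlink(src, dst)
        return "symlink"
    except OSError:
        # Raised on Windows when the symlink privilege is not held.
        shutil.copyfile(src, dst)
        return "copy"


# Demonstration with a dummy checkpoint in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    checkpoint = os.path.join(tmp, "epoch_1.pth")
    latest = os.path.join(tmp, "latest.pth")
    with open(checkpoint, "wb") as f:
        f.write(b"weights")
    how = link_or_copy(checkpoint, latest)
    with open(latest, "rb") as f:
        latest_bytes = f.read()

print(how, latest_bytes)
```

Either way, the second file ends up with the same contents as the checkpoint.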
