TensorFlow2, CUDA10, cuDNN7.6.5

日萌社

Artificial Intelligence (AI): Keras, PyTorch, MXNet, TensorFlow, PaddlePaddle Deep Learning in Practice (updated irregularly)

Installation


Anaconda3, Python 3.7, TensorFlow2, CUDA10, cuDNN7.6.5

Setting up a TensorFlow 2.0 environment

Installing Keras and TensorFlow on Windows (install CUDA and cuDNN first, then Keras and TensorFlow)

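Download the NVIDIA driver: https://www.geforce.cn/drivers

TensorFlow 2.0 requires CUDA 10.0, so install driver version 410.48 or later. (410.48 is NVIDIA's minimum for CUDA 10.0 on Linux; on Windows the listed minimum is 411.31.)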
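A minimal sketch for checking the installed driver against that minimum, assuming `nvidia-smi` (installed together with the NVIDIA driver) is on `PATH`. The helper names `parse_version`, `driver_ok`, and `query_driver_version` are hypothetical. Versions are compared as integer tuples, because a plain string comparison would wrongly rank "410.129" below "410.48".

```python
import shutil
import subprocess


def parse_version(text):
    """Turn a driver version such as '441.22' into a tuple of ints, e.g. (441, 22)."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_ok(installed, minimum="410.48"):
    """True if the installed driver is at least `minimum`, compared numerically."""
    return parse_version(installed) >= parse_version(minimum)


def query_driver_version():
    """Return the driver version nvidia-smi reports for the first GPU, or None."""
    if shutil.which("nvidia-smi") is None:
        return None
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
            capture_output=True, text=True, check=True,
        ).stdout
    except (subprocess.CalledProcessError, OSError):
        return None
    lines = out.split()
    return lines[0] if lines else None


if __name__ == "__main__":
    version = query_driver_version()
    if version is None:
        print("nvidia-smi not found or failed; is the NVIDIA driver installed?")
    else:
        print(version, "OK" if driver_ok(version) else "too old for CUDA 10.0")
```

To apply the Windows figure instead, call `driver_ok(version, minimum="411.31")`.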

CUDA and cuDNN downloads (Baidu Netdisk)

Link: https://pan.baidu.com/s/1oqYxOYob9MBuqHYsxkS-IA

Extraction code: y8ub

Link: https://pan.baidu.com/s/1YqfX0ObJSSUIaHYW3OW3cQ

Extraction code: 21lu

Link: https://pan.baidu.com/s/1yF7e6ntWpXpdPWFLN4gi2g

Extraction code: f1zi
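Because CUDA and cuDNN must be installed before TensorFlow, one quick sanity check after running the installers is to confirm that their DLLs can be found on `PATH`. This is a sketch: `find_dlls` is a hypothetical helper, and the file names `cudart64_100.dll` (CUDA 10.0 runtime) and `cudnn64_7.dll` (cuDNN 7.x) are assumptions for a Windows setup matching this post; adjust them for other versions.

```python
import os


def find_dlls(names, search_path=None):
    """Map each file name to the first PATH directory that contains it, or None."""
    if search_path is None:
        search_path = os.environ.get("PATH", "")
    dirs = [d for d in search_path.split(os.pathsep) if d]
    found = {}
    for name in names:
        found[name] = None
        for d in dirs:
            if os.path.isfile(os.path.join(d, name)):
                found[name] = d
                break
    return found


if __name__ == "__main__":
    # Assumed Windows DLL names for CUDA 10.0 and cuDNN 7.x
    required = ["cudart64_100.dll", "cudnn64_7.dll"]
    for name, location in find_dlls(required).items():
        print(f"{name}: {location or 'NOT FOUND'}")
    # CUDA_PATH is normally set by the CUDA installer on Windows
    print("CUDA_PATH =", os.environ.get("CUDA_PATH"))
```

If `cudnn64_7.dll` is reported missing, the cuDNN files were probably not copied into a directory that is on `PATH`.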

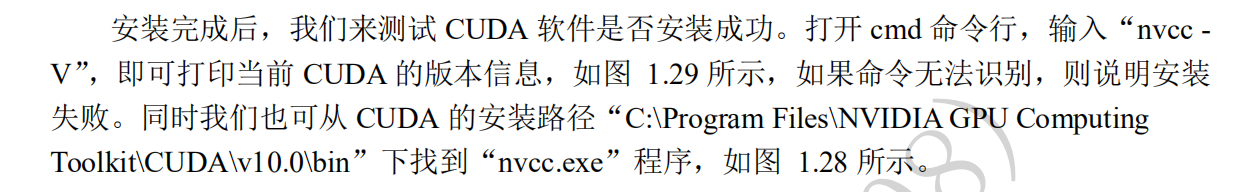

Tested CUDA/TensorFlow version combinations: https://tensorflow.google.cn/install/source#linux

CUDA download (toolkit archive): https://developer.nvidia.com/cuda-toolkit-archive

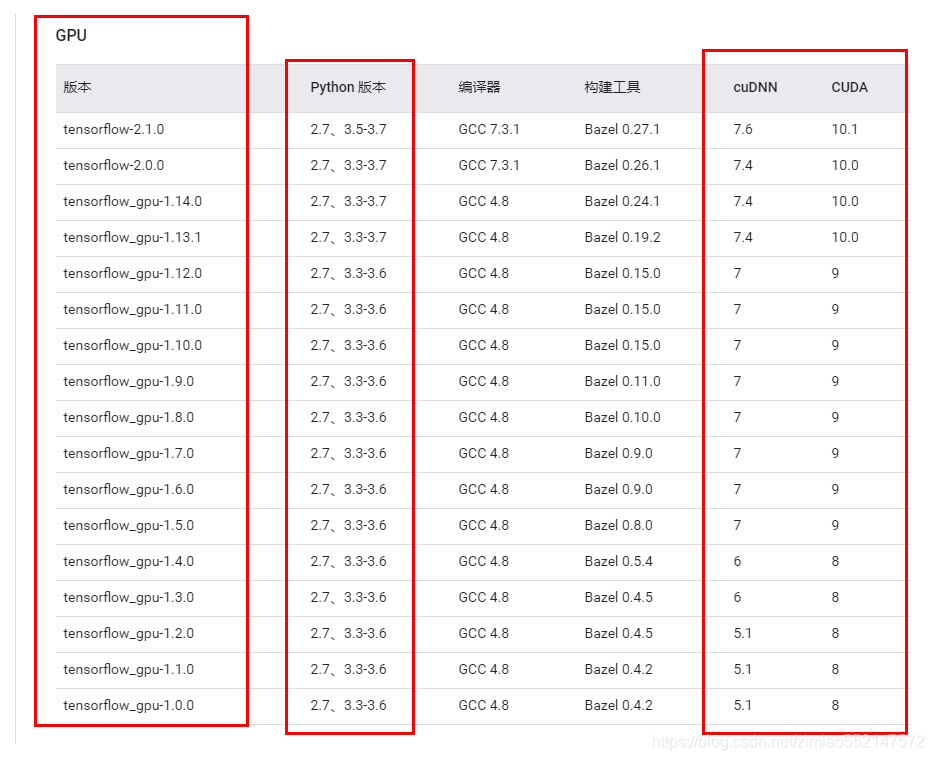

Version requirements for CUDA, cuDNN, and TensorFlow

Note: in my own testing, cuDNN v7.4.2.24 can cause errors, while cuDNN v7.6.5.32 does not produce them. Although the officially recommended combination uses cuDNN v7.4.2.24, I recommend cuDNN v7.6.5.32 instead.
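The version rules above can be encoded as a small lookup so that a planned combination is checked before anything is installed. The table is a hand-copied excerpt of two rows from TensorFlow's tested build configurations (TensorFlow 2.0.0: CUDA 10.0, cuDNN 7.4; TensorFlow 2.1.0: CUDA 10.1, cuDNN 7.6) and should be re-checked against the official page; `TESTED`, `major_minor`, and `check_combo` are hypothetical names. Only major.minor versions are compared, so cuDNN 7.6.5.32 with TensorFlow 2.0 is reported as a deviation from the tested row, which is the trade-off the note above accepts, not necessarily an error.

```python
# Hand-copied excerpt of TensorFlow's tested GPU build configurations (verify before relying on it)
TESTED = {
    "2.0": {"cuda": "10.0", "cudnn": "7.4"},
    "2.1": {"cuda": "10.1", "cudnn": "7.6"},
}


def major_minor(version):
    """Reduce a version such as '7.6.5.32' to its major.minor prefix, '7.6'."""
    return ".".join(version.split(".")[:2])


def check_combo(tf_version, cuda_version, cudnn_version):
    """Return a list of differences between a planned install and the tested row."""
    row = TESTED.get(major_minor(tf_version))
    if row is None:
        return [f"TensorFlow {tf_version} is not in this excerpt"]
    problems = []
    if major_minor(cuda_version) != row["cuda"]:
        problems.append(f"CUDA {cuda_version} differs from tested {row['cuda']}")
    if major_minor(cudnn_version) != row["cudnn"]:
        problems.append(f"cuDNN {cudnn_version} differs from tested {row['cudnn']}")
    return problems


if __name__ == "__main__":
    # The combination used in this post: TF 2.0 + CUDA 10.0 + cuDNN 7.6.5.32
    for msg in check_combo("2.0.0", "10.0", "7.6.5.32") or ["matches tested configuration"]:
        print(msg)
```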

Testing whether TensorFlow runs correctly on the GPU

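First, in a Python shell, check whether TensorFlow detects a GPU:

```python
>>> import tensorflow as tf
>>> tf.test.is_gpu_available()  # True if a usable GPU is found (deprecated from TF 2.1; use tf.config.list_physical_devices('GPU') there)
```

Then compare the time taken by the same matrix multiplication on the CPU and on the GPU:

```python
import tensorflow as tf
import timeit

# Create two random matrices on the CPU
with tf.device('/cpu:0'):
    cpu_a = tf.random.normal([10000, 1000])
    cpu_b = tf.random.normal([1000, 2000])
    print(cpu_a.device, cpu_b.device)

# Create matrices of the same shapes on the first GPU
with tf.device('/gpu:0'):
    gpu_a = tf.random.normal([10000, 1000])
    gpu_b = tf.random.normal([1000, 2000])
    print(gpu_a.device, gpu_b.device)


def cpu_run():
    with tf.device('/cpu:0'):
        c = tf.matmul(cpu_a, cpu_b)
    return c


def gpu_run():
    with tf.device('/gpu:0'):
        c = tf.matmul(gpu_a, gpu_b)
    return c


# warm up: the first calls include one-time initialization costs
cpu_time = timeit.timeit(cpu_run, number=10)
gpu_time = timeit.timeit(gpu_run, number=10)
print('warmup:', cpu_time, gpu_time)

# timed run after warm-up
cpu_time = timeit.timeit(cpu_run, number=10)
gpu_time = timeit.timeit(gpu_run, number=10)
print('run time:', cpu_time, gpu_time)
```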
