TensorFlow 2.0 Deep Learning (Part 3: Convolutional Neural Networks, part 1)

日萌社

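AI: Keras, PyTorch, MXNet, TensorFlow, PaddlePaddle deep learning in practice (updated irregularly)

TensorFlow 2.0 Deep Learning (Part 1, part 1)

TensorFlow 2.0 Deep Learning (Part 1, part 2)

TensorFlow 2.0 Deep Learning (Part 1, part 3)

TensorFlow 2.0 Deep Learning (Part 2, part 1)

TensorFlow 2.0 Deep Learning (Part 2, part 2)

TensorFlow 2.0 Deep Learning (Part 2, part 3)

TensorFlow 2.0 Deep Learning (Part 3: Convolutional Neural Networks, part 1)

TensorFlow 2.0 Deep Learning (Part 3: Convolutional Neural Networks, part 2)

TensorFlow 2.0 Deep Learning (Part 4: Recurrent Neural Networks)

TensorFlow 2.0 Deep Learning (Part 5: GANs, part 1)

TensorFlow 2.0 Deep Learning (Part 5: GANs, part 2)

TensorFlow 2.0 Deep Learning (Part 6: Reinforcement Learning)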

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                multiple                  200960
_________________________________________________________________
dense_1 (Dense)              multiple                  65792
_________________________________________________________________
dense_2 (Dense)              multiple                  65792
_________________________________________________________________
dense_3 (Dense)              multiple                  2570
=================================================================
Total params: 335,114
Trainable params: 335,114
Non-trainable params: 0
_________________________________________________________________
```
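The parameter counts in this summary can be checked by hand: a Dense layer with `n_in` inputs and `n_out` units holds `n_in * n_out` weights plus `n_out` biases. The sketch below assumes a 784-dimensional input (a flattened 28x28 image) and layer widths 256, 256, 256 and 10. These sizes are inferred from the counts above rather than stated in the summary, and `dense_params` is a helper name introduced here for illustration.

```python
def dense_params(n_in, n_out):
    """Number of trainable parameters in a fully connected layer."""
    return n_in * n_out + n_out  # weights + biases


# Assumed layer widths (inferred from the summary, not stated in it)
widths = [784, 256, 256, 256, 10]

# One count per Dense layer: pair each width with the next one
counts = [dense_params(a, b) for a, b in zip(widths, widths[1:])]
total = sum(counts)

print(counts)
print(total)
```

Under these assumptions the per-layer counts and the total agree with the `Param #` column and the `Total params` line of the summary.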

```python
import tensorflow as tf
from tensorflow import keras

# Get the list of all GPU devices
gpus = tf.config.experimental.list_physical_devices('GPU')
# gpus prints as [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]
if gpus:
    try:
        # Allocate GPU memory on demand instead of reserving it all up front
        for gpu in gpus:
            tf.config.experimental.set_memory_growth(gpu, True)
        logical_gpus = tf.config.experimental.list_logical_devices('GPU')
        # Prints e.g.: 1 Physical GPUs, 1 Logical GPUs
        print(len(gpus), "Physical GPUs,", len(logical_gpus), "Logical GPUs")
    except RuntimeError as e:
        # Memory growth must be set before the GPUs are initialized
        print(e)
```

Local correlation

Weight sharing

How the convolution operation works

Representation learning

Gradient propagation

Basic building blocks of convolutional neural networks

Single-channel input, single kernel
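For the single-channel, single-kernel case, the operation can be sketched in plain Python: slide the kernel over the image and, at each position, sum the element-wise products. This is a minimal illustration assuming stride 1 and no padding; `conv2d_single` is a name introduced here, not a TensorFlow function. As in `tf.nn.conv2d`, the kernel is not flipped, so strictly speaking this is a cross-correlation.

```python
def conv2d_single(image, kernel):
    """Stride-1, unpadded 2D convolution (no kernel flip) on nested lists."""
    h, w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out = []
    for i in range(h - k_h + 1):          # top-left row of the window
        row = []
        for j in range(w - k_w + 1):      # top-left column of the window
            acc = 0
            for di in range(k_h):
                for dj in range(k_w):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 0],
          [0, 1]]

result = conv2d_single(image, kernel)
print(result)  # [[7, 9, 11], [15, 17, 19], [23, 25, 27]]
```

A 4x4 input with a 2x2 kernel yields a 3x3 output, since the window fits in 4 - 2 + 1 = 3 positions along each axis.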

Multi-channel input, single kernel

Multi-channel input, multiple kernels

Stride

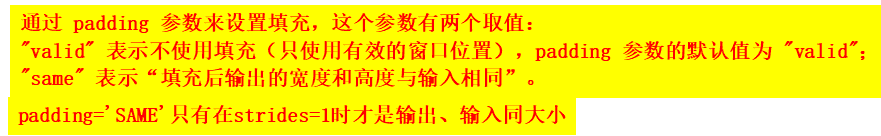

" class="reference-link">填充

#
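Stride and padding together determine the output size of a convolution. With input size `size`, kernel size `kernel`, stride `stride` and `pad` rows or columns added on each side, the output size is `floor((size + 2*pad - kernel) / stride) + 1`. With `padding='SAME'`, TensorFlow chooses the padding so that the output size is `ceil(size / stride)`. The sketch below applies both rules to a 5x5 input with a 3x3 kernel; the helper names are introduced here for illustration only.

```python
import math


def conv_out_size(size, kernel, stride=1, pad=0):
    """Output size along one axis for explicit symmetric padding."""
    return (size + 2 * pad - kernel) // stride + 1


def same_out_size(size, stride=1):
    """Output size along one axis for padding='SAME'."""
    return math.ceil(size / stride)


print(conv_out_size(5, 3, stride=1, pad=0))  # 3
print(conv_out_size(5, 3, stride=1, pad=1))  # 5
print(same_out_size(5, stride=1))            # 5
print(same_out_size(5, stride=3))            # 2
```

The last two lines show why `'SAME'` only keeps the output the same size as the input when the stride is 1.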

Custom weights and bias: the tf.nn.conv2d convolution function

The convolution layer class layers.Conv2D

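```python
import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, optimizers, datasets, losses, Sequential

# Simulated input: batch of 2, height and width 5, 3 channels
x = tf.random.normal([2, 5, 5, 3])
# Kernels are created in [k, k, cin, cout] format: four 3x3 kernels
w = tf.random.normal([3, 3, 3, 4])

# Stride 1, no padding, i.e. padding=[[0,0],[0,0],[0,0],[0,0]]
out = tf.nn.conv2d(x, w, strides=1, padding=[[0, 0], [0, 0], [0, 0], [0, 0]])
print(out.shape)  # (2, 3, 3, 4)

# Stride 1, padding of 1 on height and width, i.e. padding=[[0,0],[1,1],[1,1],[0,0]]
out = tf.nn.conv2d(x, w, strides=1, padding=[[0, 0], [1, 1], [1, 1], [0, 0]])
print(out.shape)  # (2, 5, 5, 4)

# Stride 1 with padding='SAME': output has the same height and width as the input.
# Note that 'SAME' only gives equal input and output sizes when strides=1.
out = tf.nn.conv2d(x, w, strides=1, padding='SAME')
print(out.shape)  # (2, 5, 5, 4)

# strides=3 with padding='SAME': height and width shrink by about a factor of 3
out = tf.nn.conv2d(x, w, strides=3, padding='SAME')
print(out.shape)  # (2, 2, 2, 4)

# Create the bias vector in [cout] format
b = tf.zeros([4])
# Add the bias to the convolution output; it broadcasts to [b, h', w', cout]
out = out + b
```

```python
# Create a convolution layer object.
# With strides other than 1, padding='SAME' no longer keeps the input size.
layer = layers.Conv2D(4, kernel_size=(3, 4), strides=(2, 1), padding='SAME')
out = layer(x)  # forward pass
print(out.shape)  # (2, 3, 5, 4)
# Kernel tensor and bias tensor of the layer
layer.kernel, layer.bias
# List of all tensors to be optimized
layer.trainable_variables
```

```python
# Network container
network = Sequential([
    layers.Conv2D(6, kernel_size=3, strides=1),   # first conv layer, six 3x3 kernels
    layers.MaxPooling2D(pool_size=2, strides=2),  # pooling layer, halves height and width
    layers.ReLU(),                                # activation
    layers.Conv2D(16, kernel_size=3, strides=1),  # second conv layer, sixteen 3x3 kernels
    layers.MaxPooling2D(pool_size=2, strides=2),  # pooling layer, halves height and width
    layers.ReLU(),                                # activation
    layers.Flatten(),                             # flatten for the fully connected layers
    layers.Dense(120, activation='relu'),         # fully connected layer, 120 nodes
    layers.Dense(84, activation='relu'),          # fully connected layer, 84 nodes
    layers.Dense(10)                              # fully connected layer, 10 nodes
])
# Build the model once by giving the shape of input X; the batch size 4 is arbitrary
network.build(input_shape=(4, 28, 28, 1))
# Print network statistics
network.summary()
```

```python
# Assumption (not in the original snippet): MNIST as the dataset, pixel values
# scaled to [0, 1], batch size 128, and an Adam optimizer. The original code
# uses x, y, optimizer and db_test without defining them.
(x_train, y_train), (x_test, y_test) = datasets.mnist.load_data()
db_train = tf.data.Dataset.from_tensor_slices(
    (tf.cast(x_train, tf.float32) / 255., tf.cast(y_train, tf.int32))
).shuffle(10000).batch(128)
db_test = tf.data.Dataset.from_tensor_slices(
    (tf.cast(x_test, tf.float32) / 255., tf.cast(y_test, tf.int32))
).batch(128)
optimizer = optimizers.Adam(learning_rate=1e-3)

# Create the loss object; call the instance directly to compute the loss
criteon = losses.CategoricalCrossentropy(from_logits=True)

for x, y in db_train:  # one pass over the training set
    # Open a gradient recording context
    with tf.GradientTape() as tape:
        # Insert the channel dimension, => [b, 28, 28, 1]
        x = tf.expand_dims(x, axis=3)
        # Forward pass, logits over 10 classes, [b, 28, 28, 1] => [b, 10]
        out = network(x)
        # One-hot encode the labels, [b] => [b, 10]
        y_onehot = tf.one_hot(y, depth=10)
        # Cross-entropy loss, a scalar
        loss = criteon(y_onehot, out)
    # Compute gradients automatically
    grads = tape.gradient(loss, network.trainable_variables)
    # Update the parameters
    optimizer.apply_gradients(zip(grads, network.trainable_variables))

# Count correct predictions and total samples
correct, total = 0, 0
for x, y in db_test:  # iterate over all test samples
    # Insert the channel dimension, => [b, 28, 28, 1]
    x = tf.expand_dims(x, axis=3)
    # Forward pass, logits over 10 classes, [b, 28, 28, 1] => [b, 10]
    out = network(x)
    # Strictly, softmax comes before argmax, but softmax does not change
    # the relative order of the elements, so it is omitted here
    pred = tf.argmax(out, axis=-1)
    y = tf.cast(y, tf.int64)
    # Count correct predictions
    correct += float(tf.reduce_sum(tf.cast(tf.equal(pred, y), tf.float32)))
    # Count total samples
    total += x.shape[0]
# Compute accuracy
print('test acc:', correct / total)
```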
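To see what `network.summary()` should report for the Sequential network above, the feature-map sizes and parameter counts can be traced by hand. This is a sketch in plain Python under the assumptions that the input is 28x28x1 (as passed to `network.build`) and that both Conv2D and MaxPooling2D use their default unpadded (`'valid'`) mode; the helper names are introduced here for illustration.

```python
def conv_params(k, c_in, c_out):
    """Parameters of a conv layer with c_out kernels of size k x k x c_in."""
    return k * k * c_in * c_out + c_out  # weights + one bias per kernel


def dense_params(n_in, n_out):
    """Parameters of a fully connected layer."""
    return n_in * n_out + n_out


size = 28
size = size - 3 + 1      # Conv2D(6, 3x3), unpadded   -> 26
size = size // 2         # MaxPooling2D(2, stride 2)  -> 13
size = size - 3 + 1      # Conv2D(16, 3x3), unpadded  -> 11
size = size // 2         # MaxPooling2D(2, stride 2)  -> 5
flat = size * size * 16  # Flatten                    -> 400

params = [
    conv_params(3, 1, 6),
    conv_params(3, 6, 16),
    dense_params(flat, 120),
    dense_params(120, 84),
    dense_params(84, 10),
]
total_params = sum(params)
print(flat, params, total_params)
```

Most of the parameters sit in the first fully connected layer; the two convolution layers together contribute fewer than a thousand, which is the practical effect of weight sharing.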

" class="reference-link">池化层

" class="reference-link">BatchNorm 层

```python
# Construct the input
x = tf.random.normal([100, 32, 32, 3])
# Merge all other dimensions, keeping only the channel dimension
x = tf.reshape(x, [-1, 3])
# Mean over the other dimensions, computed separately for each channel
ub = tf.reduce_mean(x, axis=0)
ub

# Create a BN layer
layer = layers.BatchNormalization()
```

```python
# Network container
network = Sequential([
    layers.Conv2D(6, kernel_size=3, strides=1),
    # Insert a BN layer
    layers.BatchNormalization(),
    layers.MaxPooling2D(pool_size=2, strides=2),
    layers.ReLU(),
    layers.Conv2D(16, kernel_size=3, strides=1),
    # Insert a BN layer
    layers.BatchNormalization(),
    layers.MaxPooling2D(pool_size=2, strides=2),
    layers.ReLU(),
    layers.Flatten(),
    layers.Dense(120, activation='relu'),
    # A BN layer can also be inserted here
    layers.Dense(84, activation='relu'),
    # A BN layer can also be inserted here
    layers.Dense(10)
])
```

```python
# Training: x is one training batch of shape [b, 28, 28]
with tf.GradientTape() as tape:
    # Insert the channel dimension
    x = tf.expand_dims(x, axis=3)
    # Forward pass in training mode, [b, 28, 28, 1] => [b, 10]
    # BN layers need training=True here
    out = network(x, training=True)
    # Loss computation and the gradient update follow as in the earlier training step

# Iterate over the test set
for x, y in db_test:
    # Insert the channel dimension
    x = tf.expand_dims(x, axis=3)
    # Forward pass in test mode; BN layers need training=False here
    out = network(x, training=False)
```

```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers, optimizers

# 2 images with 4x4 size, 3 channels
# we explicitly enforce the mean and stddev to N(1, 0.5)
x = tf.random.normal([2, 4, 4, 3], mean=1., stddev=0.5)
x.shape  # TensorShape([2, 4, 4, 3])

net = layers.BatchNormalization(axis=-1, center=True, scale=True, trainable=True)

# Default call runs in test mode: the moving statistics are not updated
out = net(x)
print('forward in test mode:', net.variables)
# forward in test mode: [
# <tf.Variable 'batch_normalization/gamma:0' shape=(3,) dtype=float32, numpy=array([1., 1., 1.], dtype=float32)>,
# <tf.Variable 'batch_normalization/beta:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_mean:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_variance:0' shape=(3,) dtype=float32, numpy=array([1., 1., 1.], dtype=float32)>]

# One forward pass in training mode updates the moving statistics
out = net(x, training=True)
print('forward in train mode(1 step):', net.variables)
# forward in train mode(1 step): [
# <tf.Variable 'batch_normalization/gamma:0' shape=(3,) dtype=float32, numpy=array([1., 1., 1.], dtype=float32)>,
# <tf.Variable 'batch_normalization/beta:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_mean:0' shape=(3,) dtype=float32, numpy=array([0.01059513, 0.01055362, 0.01071654], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_variance:0' shape=(3,) dtype=float32, numpy=array([0.99315214, 0.99234647, 0.9939004 ], dtype=float32)>]

for i in range(100):
    out = net(x, training=True)
print('forward in train mode(100 steps):', net.variables)
# forward in train mode(100 steps): [
# <tf.Variable 'batch_normalization/gamma:0' shape=(3,) dtype=float32, numpy=array([1., 1., 1.], dtype=float32)>,
# <tf.Variable 'batch_normalization/beta:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_mean:0' shape=(3,) dtype=float32, numpy=array([0.6755756, 0.6729286, 0.6833168], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_variance:0' shape=(3,) dtype=float32, numpy=array([0.5633595 , 0.51199085, 0.6110718 ], dtype=float32)>]

# SGD (stochastic gradient descent); `learning_rate` replaces the deprecated `lr` argument
optimizer = optimizers.SGD(learning_rate=1e-2)
for i in range(10):
    with tf.GradientTape() as tape:
        out = net(x, training=True)
        loss = tf.reduce_mean(tf.pow(out, 2)) - 1
    grads = tape.gradient(loss, net.trainable_variables)
    optimizer.apply_gradients(zip(grads, net.trainable_variables))
print('backward(10 steps):', net.variables)
# backward(10 steps): [
# <tf.Variable 'batch_normalization/gamma:0' shape=(3,) dtype=float32, numpy=array([0.9355032 , 0.93557316, 0.935464 ], dtype=float32)>,
# <tf.Variable 'batch_normalization/beta:0' shape=(3,) dtype=float32, numpy=array([ 7.4505802e-09, -4.2840842e-09, 5.2154064e-10], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_mean:0' shape=(3,) dtype=float32, numpy=array([0.71228695, 0.70949614, 0.7204489 ], dtype=float32)>,
# <tf.Variable 'batch_normalization/moving_variance:0' shape=(3,) dtype=float32, numpy=array([0.5396321 , 0.485472 , 0.58993715], dtype=float32)>]
```
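The `moving_mean` values printed above are consistent with an exponential moving average, `moving = momentum * moving + (1 - momentum) * batch_mean`, using the Keras default `momentum=0.99`. The sketch below replays that update in plain Python for the first channel. The batch mean of about 1.0595 is an assumption inferred from the printed one-step value (0.01059513 / 0.01), since the input itself is random and not shown.

```python
momentum = 0.99         # Keras BatchNormalization default
batch_mean = 1.059513   # assumed: inferred from the printed 1-step moving_mean
moving_mean = 0.0       # initial value of moving_mean

history = []
# 1 training-mode forward pass, then 100 more on the same batch
for step in range(101):
    moving_mean = momentum * moving_mean + (1.0 - momentum) * batch_mean
    history.append(moving_mean)

print(history[0])    # compare with the printed 1-step value, about 0.0106
print(history[100])  # compare with the printed 100-step value, about 0.6756
```

Because each step moves the estimate only 1% of the way toward the batch statistic, it takes a few hundred training steps before the moving mean settles near the true batch mean. This is why test-mode outputs can be poor when the moving statistics have seen too few updates.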
