What Spark's spark.driver.maxResultSize does, and the error "... is bigger than spark.driver.maxResultSize"

Application Properties

| Property Name | Default | Meaning |
|---|---|---|
| spark.app.name | (none) | The name of your application. This will appear in the UI and in log data. |
| spark.driver.cores | 1 | Number of cores to use for the driver process, only in cluster mode. |
| spark.driver.maxResultSize | 1g | Limit of total size of serialized results of all partitions for each Spark action (e.g. collect) in bytes. Should be at least 1M, or 0 for unlimited. Jobs will be aborted if the total size is above this limit. Having a high limit may cause out-of-memory errors in driver (depends on spark.driver.memory and memory overhead of objects in JVM). Setting a proper limit can protect the driver from out-of-memory errors. |

http://spark.apache.org/docs/2.4.5/configuration.html

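In other words, this is the limit on the total serialized size, in bytes, of the results of all partitions processed by a single Spark action. The minimum is 1M, and 0 means unlimited. If the total exceeds the limit, the job fails.

Setting spark.driver.maxResultSize too high can cause out-of-memory (OOM) errors on the driver. A suitable limit protects the driver from running out of memory.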
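The check described above can be modeled in a few lines. The Python sketch below is a hypothetical model, not Spark's code: `ResultSizeGuard`, `ResultSizeExceeded` and `format_mb` are illustrative names. It assumes the driver adds up each task's serialized result size as results arrive and aborts as soon as the running total exceeds the limit, with 0 meaning no limit. The message imitates the wording of the error quoted later in this post.

```python
MB = 1024 * 1024


class ResultSizeExceeded(Exception):
    """Hypothetical stand-in for the SparkException that aborts the job."""


def format_mb(num_bytes: int) -> str:
    # Show bytes as 1024-based megabytes with one decimal, e.g. "1026.0 MB".
    return f"{num_bytes / MB:.1f} MB"


class ResultSizeGuard:
    """Hypothetical model of spark.driver.maxResultSize for one action."""

    def __init__(self, max_result_size: int) -> None:
        self.max_result_size = max_result_size  # limit in bytes; 0 = unlimited
        self.total = 0  # running total of serialized result bytes
        self.tasks = 0  # number of task results received so far

    def add_task_result(self, size: int) -> None:
        self.total += size
        self.tasks += 1
        # Only enforce the limit when it is positive (0 disables the check).
        if self.max_result_size > 0 and self.total > self.max_result_size:
            raise ResultSizeExceeded(
                f"Total size of serialized results of {self.tasks} tasks "
                f"({format_mb(self.total)}) is bigger than "
                f"spark.driver.maxResultSize ({format_mb(self.max_result_size)})"
            )


guard = ResultSizeGuard(1024 * MB)  # the 1g default
try:
    for _ in range(400):
        guard.add_task_result(3 * MB)  # pretend every task returns 3 MB
except ResultSizeExceeded as err:
    print(err)
```

With 3 MB per task, the 342nd result pushes the total to 1026.0 MB and the guard aborts, which shows that the limit applies to the sum over all tasks, not to any single task.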

---

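The limit applies to the total size of the serialized results of all partitions for each Spark action (for example, the collect action). It should be at least 1M, or 0 for unlimited. If the total exceeds the limit (1g by default), the job is aborted. Setting spark.driver.maxResultSize too large may exhaust driver memory (depending on spark.driver.memory and the memory overhead of objects in the JVM); a proper limit prevents this.

Default: 1024M (that is, 1g)

Setting it to 0 removes the limit, but at the risk of a driver OOM.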
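Values such as 1g and 1024M are byte sizes with binary units, so they denote the same limit. The sketch below parses such strings. `parse_size` and `describe_limit` are hypothetical helpers, and the unit table (k, m, g, t with 1024-based multipliers, bare numbers read as bytes) is an assumption about how Spark interprets these values rather than a copy of its parser.

```python
import re

# Assumed 1024-based multipliers; a bare number is read as bytes.
_UNITS = {
    "": 1, "b": 1,
    "k": 1024, "kb": 1024,
    "m": 1024 ** 2, "mb": 1024 ** 2,
    "g": 1024 ** 3, "gb": 1024 ** 3,
    "t": 1024 ** 4, "tb": 1024 ** 4,
}


def parse_size(value: str) -> int:
    """Convert a size string such as '1g' or '1024M' to bytes."""
    match = re.fullmatch(r"\s*(\d+)\s*([A-Za-z]*)\s*", value)
    if match is None:
        raise ValueError(f"invalid size: {value!r}")
    number, unit = match.groups()
    unit = unit.lower()
    if unit not in _UNITS:
        raise ValueError(f"unknown unit in size: {value!r}")
    return int(number) * _UNITS[unit]


def describe_limit(value: str) -> str:
    """Explain what a spark.driver.maxResultSize value would mean."""
    limit = parse_size(value)
    if limit == 0:
        return "unlimited (risk of driver OOM)"
    if limit < 1024 ** 2:
        return f"{limit} bytes (below the documented 1M minimum)"
    return f"{limit} bytes"


for setting in ("1g", "1024M", "2g", "0"):
    print(setting, "->", describe_limit(setting))
```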

---

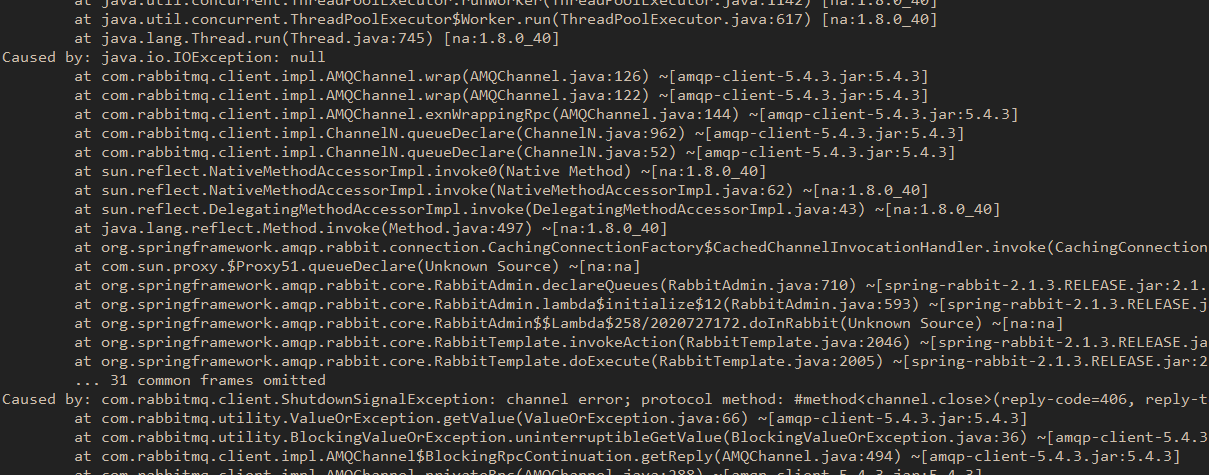

When spark.driver.maxResultSize is too small

Error message:

Caused by: org.apache.spark.SparkException: Job aborted due to stage failure: Total size of serialized results of 374 tasks (1026.0 MB) is bigger than spark.driver.maxResultSize (1024.0 MB)
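The numbers in this message show how small the overshoot was. A quick calculation, assuming the MB values in the message are 1024-based and rounded to one decimal place (so the results are approximate):

```python
tasks = 374
total_mb = 1026.0  # total serialized result size reported in the error
limit_mb = 1024.0  # spark.driver.maxResultSize at its 1g default

overshoot_mb = total_mb - limit_mb       # 2.0 MB over the limit
avg_per_task_mb = total_mb / tasks       # about 2.74 MB per task
headroom_at_2g_mb = 2 * 1024 - total_mb  # room left if the limit is 2g

print(f"over the limit by {overshoot_mb:.1f} MB")
print(f"average result per task: {avg_per_task_mb:.2f} MB")
print(f"headroom with a 2g limit: {headroom_at_2g_mb:.1f} MB")
```

The job missed the default limit by only about 2 MB, so raising the limit to 2g leaves roughly 1 GB of headroom for the same data, provided the driver has enough memory to hold it.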

Solution

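The default value of spark.driver.maxResultSize is 1g. It limits the total size of the serialized results of all partitions for each Spark action (such as collect). Put simply, this error means the results the executors send back to the driver are too large: either raise the limit, or avoid actions that bring large results back to the driver, such as countByValue and countByKey.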
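Counting actions are a typical culprit because the data returned to the driver grows with the number of distinct keys, not just with the number of tasks. The sketch below is a simplified, hypothetical model: each partition is a plain Python list, `partition_counts` and `total_result_bytes` are illustrative names, and `pickle` stands in for Spark's serializer. Spark's real implementations may combine counts differently (for example, after a shuffle), so treat the byte counts as illustrative only.

```python
import pickle
from collections import Counter


def partition_counts(partition):
    # Per-partition result: a map of value -> number of occurrences.
    return Counter(partition)


def total_result_bytes(partitions):
    # Sum of the serialized per-task results the driver would receive.
    return sum(len(pickle.dumps(partition_counts(p))) for p in partitions)


# 8 partitions of 10,000 values each.
few_keys = [[i % 10 for i in range(10_000)] for _ in range(8)]            # 10 distinct values
many_keys = [[p * 10_000 + i for i in range(10_000)] for p in range(8)]   # all values distinct

print("few distinct keys: ", total_result_bytes(few_keys), "bytes")
print("many distinct keys:", total_result_bytes(many_keys), "bytes")
```

Both inputs have the same number of rows and partitions, yet the high-cardinality input sends far more bytes to the driver, which is how such an action can hit spark.driver.maxResultSize.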

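Raising the value fixes the error, for example in spark-defaults.conf:

spark.driver.maxResultSize 2g

The equivalent spark-submit option is --conf spark.driver.maxResultSize=2g.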
