CS231N assignment1 SVM

from cs231n.classifiers.linear_svm import svm_loss_naive

A linear SVM classifier consists of two parts:

1. A score function that maps the raw data to class scores, i.e. the function f(w, x).

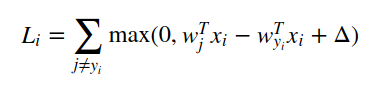

2. A loss function that quantifies the agreement between the predicted scores and the ground truth labels.
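The score function from part 1 can be sketched in a few lines of NumPy. This is only an illustration: the helper name `linear_scores` and the toy values are made up here, and the bias is assumed to be folded into `W` (the "bias trick"). The shapes follow the convention used later in this post, with `X` of shape (N, D) and `W` of shape (D, C).

```python
import numpy as np

def linear_scores(X, W):
    """Return class scores of shape (N, C) for data X (N, D) and weights W (D, C)."""
    return X.dot(W)

# Toy data: 2 examples, 3 features, 4 classes (values are made up).
X = np.array([[1.0, 2.0, 3.0],
              [0.0, -1.0, 1.0]])
W = np.zeros((3, 4))
W[:, 1] = 1.0  # class 1 sums the features; every other class scores 0

scores = linear_scores(X, W)
print(scores.shape)  # one row of C scores per example
```

Each row of `scores` holds the C class scores for one example; the loss function then compares these scores against the true label.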

margin:

The SVM loss function wants the score of the correct class y_i to be larger than each incorrect class score by at least Δ (delta). If this is not the case, we accumulate loss.

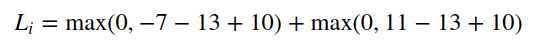

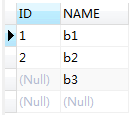
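As a small worked example of the margin idea, the sketch below computes the hinge loss for a single sample. The helper name `svm_loss_single` and the score values are illustrative assumptions, not part of the assignment code.

```python
import numpy as np

def svm_loss_single(scores, correct_class, delta=1.0):
    """Multiclass hinge loss for one example, given its vector of class scores."""
    margins = np.maximum(0.0, scores - scores[correct_class] + delta)
    margins[correct_class] = 0.0  # the correct class never contributes to the loss
    return float(np.sum(margins))

scores = np.array([13.0, -7.0, 11.0])  # made-up scores; class 0 is the true class
loss = svm_loss_single(scores, correct_class=0, delta=10.0)
# Class 1: max(0, -7 - 13 + 10) = 0
# Class 2: max(0, 11 - 13 + 10) = 8
print(loss)  # 8.0
```

Class 1 is already more than Δ below the correct class, so it adds nothing; class 2 is only 2 below, so it falls short of the margin by 8.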

example

1. loss function

In cs231n/classifiers/linear_svm.py:

svm_loss_naive

The SVM wants the score of the correct class to be higher than the score of each incorrect class by a fixed margin Δ.

How the SVM loss is computed:

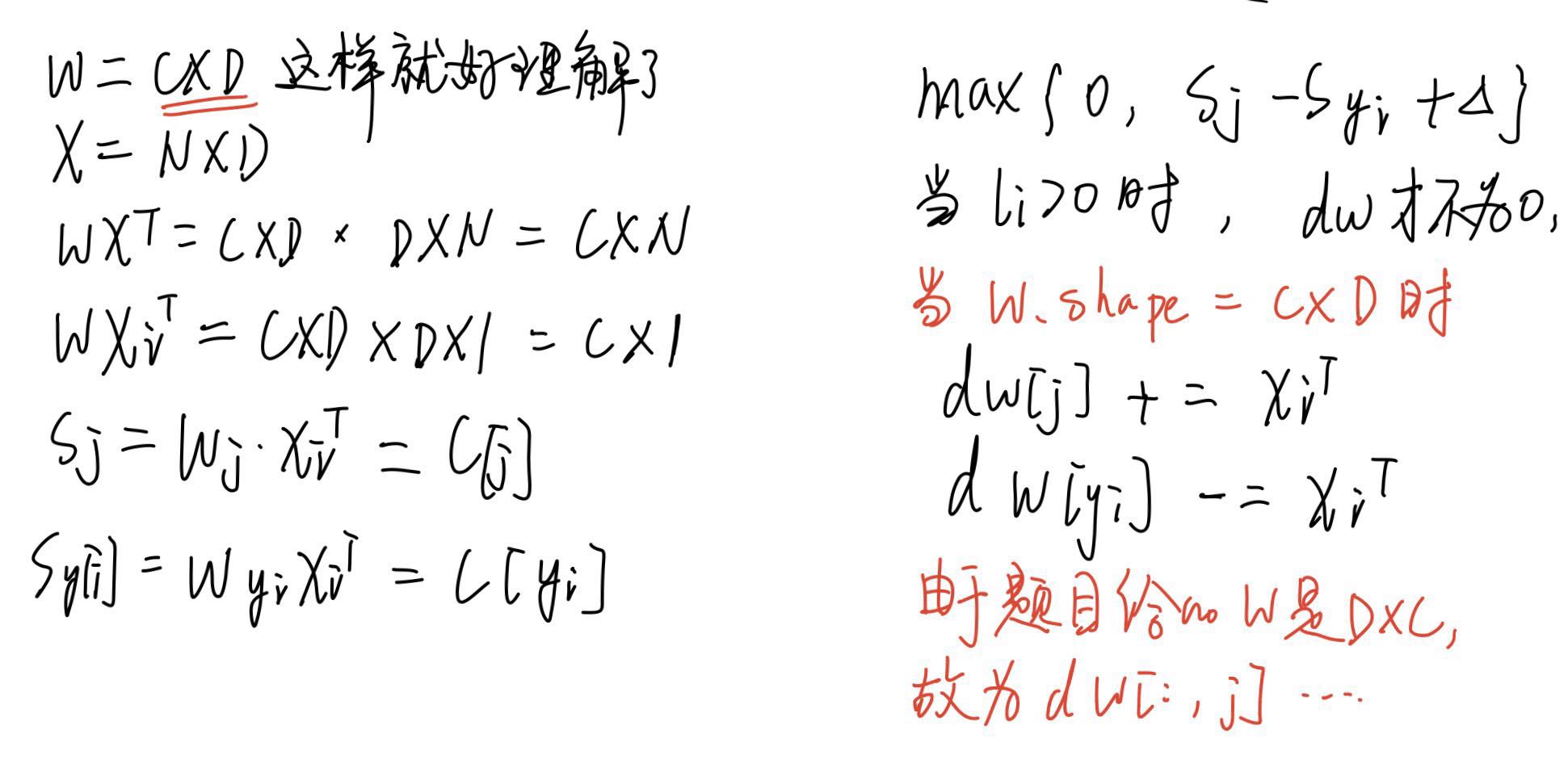

    """
    Inputs:
    - W: A numpy array of shape (D, C) containing weights.
    - X: A numpy array of shape (N, D) containing a minibatch of data.
    - y: A numpy array of shape (N,) containing training labels; y[i] = c means
      that X[i] has label c, where 0 <= c < C.
    - reg: (float) regularization strength
    """

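    # Accumulators and sizes used by the loop (Python 3: range replaces xrange).
    dW = np.zeros(W.shape)
    num_classes = W.shape[1]
    num_train = X.shape[0]
    loss = 0.0

    for i in range(num_train):  # i = 0 .. N-1
        scores = X[i].dot(W)  # class scores for example i, shape (C,)
        correct_class_score = scores[y[i]]
        for j in range(num_classes):  # j = 0 .. C-1
            if j == y[i]:
                continue  # the correct class is skipped
            margin = scores[j] - correct_class_score + 1  # note delta = 1
            if margin > 0:
                loss += margin
                dW[:, j] += X[i].T     # push the score of the wrong class j down
                dW[:, y[i]] -= X[i].T  # push the score of the correct class up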
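The two `dW` updates in the loop follow from differentiating the per-example loss with respect to each column of W. A sketch of that derivation, assuming x_i is the i-th row of X, w_j is the j-th column of W, and s_j = x_i w_j:

```latex
L_i = \sum_{j \neq y_i} \max\left(0,\; s_j - s_{y_i} + \Delta\right)

\nabla_{w_j} L_i = \mathbb{1}\left[s_j - s_{y_i} + \Delta > 0\right] x_i^{T}
\qquad (j \neq y_i)

\nabla_{w_{y_i}} L_i = -\left(\sum_{j \neq y_i} \mathbb{1}\left[s_j - s_{y_i} + \Delta > 0\right]\right) x_i^{T}
```

Every class j that violates the margin adds x_i to its own column of the gradient and subtracts x_i from the column of the correct class, which is exactly what the inner loop does.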

svm_loss_vectorized

loss

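    num_train = X.shape[0]
    scores = X.dot(W)  # (N, C)
    yi_scores = scores[np.arange(num_train), y]  # correct-class scores, shape (N,)
    # Broadcast the (N, 1) column of correct-class scores against (N, C);
    # this replaces np.matrix(yi_scores).T with a plain ndarray.
    margins = np.maximum(0, scores - yi_scores[:, np.newaxis] + 1)
    margins[np.arange(num_train), y] = 0  # the correct class contributes no loss
    loss = np.mean(np.sum(margins, axis=1))
    loss += 0.5 * reg * np.sum(W * W)  # L2 regularization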
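The gradient can be vectorized in the same spirit. The sketch below is one possible way to do it, not necessarily the assignment's reference solution: the function name `svm_loss_and_grad_vectorized` and the random test data are assumptions made for illustration, and delta is fixed at 1 as in the code above.

```python
import numpy as np

def svm_loss_and_grad_vectorized(W, X, y, reg):
    """Vectorized multiclass SVM loss and gradient (delta = 1)."""
    num_train = X.shape[0]
    scores = X.dot(W)                                   # (N, C)
    correct = scores[np.arange(num_train), y][:, None]  # (N, 1)
    margins = np.maximum(0.0, scores - correct + 1.0)
    margins[np.arange(num_train), y] = 0.0
    loss = np.mean(np.sum(margins, axis=1)) + 0.5 * reg * np.sum(W * W)

    # Each positive margin adds X[i] to column j and subtracts X[i] from column y[i].
    coeff = (margins > 0).astype(X.dtype)               # (N, C) of 0/1
    coeff[np.arange(num_train), y] = -np.sum(coeff, axis=1)
    dW = X.T.dot(coeff) / num_train + reg * W           # (D, C)
    return loss, dW

# Random toy problem (made-up sizes): N = 8 examples, D = 5 features, C = 3 classes.
rng = np.random.default_rng(0)
W = rng.normal(scale=0.01, size=(5, 3))
X = rng.normal(size=(8, 5))
y = rng.integers(0, 3, size=8)
loss, dW = svm_loss_and_grad_vectorized(W, X, y, reg=0.1)
print(loss, dW.shape)
```

The matrix `coeff` plays the role of the two `dW` updates in the naive loop: a 1 wherever a wrong class violates the margin, and minus the count of violations in the column of the correct class. A single `X.T.dot(coeff)` then sums the contributions of all examples.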

#### References

cs231n linear classifier SVM

https://mlxai.github.io/2017/01/06/vectorized-implementation-of-svm-loss-and-gradient-update.html
