Problems adding Kubernetes nodes with kubeadm

The output below shows problems in three places:

etcd-master1、kube-apiserver-master1、kube-flannel-ds-42z5p

    [root@master3 ~]# kubectl get pods -n kube-system -o wide
    NAME                              READY   STATUS             RESTARTS   AGE    IP             NODE              NOMINATED NODE   READINESS GATES
    coredns-546565776c-m96fb          1/1     Running            0          46d    10.244.1.3     master2           <none>           <none>
    coredns-546565776c-thczd          1/1     Running            0          44d    10.244.2.2     master3           <none>           <none>
    etcd-master1                      0/1     CrashLoopBackOff   21345      124d   10.128.4.164   master1           <none>           <none>
    etcd-master2                      1/1     Running            1          124d   10.128.4.251   master2           <none>           <none>
    etcd-master3                      1/1     Running            1          124d   10.128.4.211   master3           <none>           <none>
    kube-apiserver-master1            0/1     CrashLoopBackOff   21349      124d   10.128.4.164   master1           <none>           <none>
    kube-apiserver-master2            1/1     Running            1          124d   10.128.4.251   master2           <none>           <none>
    kube-apiserver-master3            1/1     Running            1          124d   10.128.4.211   master3           <none>           <none>
    kube-controller-manager-master1   1/1     Running            11         124d   10.128.4.164   master1           <none>           <none>
    kube-controller-manager-master2   1/1     Running            2          124d   10.128.4.251   master2           <none>           <none>
    kube-controller-manager-master3   1/1     Running            1          124d   10.128.4.211   master3           <none>           <none>
    kube-flannel-ds-42z5p             0/1     Error              1568       6d2h   10.128.2.173   bg7.test.com.cn   <none>           <none>
    kube-flannel-ds-6g59q             1/1     Running            7          43d    10.128.4.8     wd8.test.com.cn   <none>           <none>
    kube-flannel-ds-85hxd             1/1     Running            3          123d   10.128.4.107   wd6.test.com.cn   <none>           <none>
    kube-flannel-ds-brd8d             1/1     Running            1          33d    10.128.4.160   wd9.test.com.cn   <none>           <none>
    kube-flannel-ds-gmmhx             1/1     Running            3          124d   10.128.4.82    wd5.test.com.cn   <none>           <none>
    kube-flannel-ds-lj4g2             1/1     Running            1          124d   10.128.4.251   master2           <none>           <none>
    kube-flannel-ds-n68dn             1/1     Running            11         124d   10.128.4.164   master1           <none>           <none>
    kube-flannel-ds-ppnd7             1/1     Running            4          124d   10.128.4.191   wd4.test.com.cn   <none>           <none>
    kube-flannel-ds-tf9lk             1/1     Running            0          33d    10.128.4.170   wd7.test.com.cn   <none>           <none>
    kube-flannel-ds-vt5nh             1/1     Running            1          124d   10.128.4.211   master3           <none>           <none>
    kube-proxy-622c7                  1/1     Running            11         124d   10.128.4.164   master1           <none>           <none>
    kube-proxy-7bp72                  1/1     Running            0          7d4h   10.128.2.173   bg7.test.com.cn   <none>           <none>
    kube-proxy-8cx5q                  1/1     Running            4          123d   10.128.4.107   wd6.test.com.cn   <none>           <none>
    kube-proxy-h2qh5                  1/1     Running            1          124d   10.128.4.211   master3           <none>           <none>
    kube-proxy-kpkm4                  1/1     Running            7          43d    10.128.4.8     wd8.test.com.cn   <none>           <none>
    kube-proxy-lp74p                  1/1     Running            1          33d    10.128.4.160   wd9.test.com.cn   <none>           <none>
    kube-proxy-nwsnm                  1/1     Running            1          124d   10.128.4.251   master2           <none>           <none>
    kube-proxy-psjll                  1/1     Running            4          124d   10.128.4.82    wd5.test.com.cn   <none>           <none>
    kube-proxy-v6x42                  1/1     Running            0          33d    10.128.4.170   wd7.test.com.cn   <none>           <none>
    kube-proxy-vdfmz                  1/1     Running            4          124d   10.128.4.191   wd4.test.com.cn   <none>           <none>
    kube-scheduler-master1            1/1     Running            11         124d   10.128.4.164   master1           <none>           <none>
    kube-scheduler-master2            1/1     Running            1          124d   10.128.4.251   master2           <none>           <none>
    kube-scheduler-master3            1/1     Running            1          124d   10.128.4.211   master3           <none>           <none>
    kuboard-7986796cf8-2g6bs          1/1     Running            0          44d    10.244.1.4     master2           <none>           <none>
    metrics-server-677dcb8b4d-pshqw   1/1     Running            0          44d    10.128.4.191   wd4.test.com.cn   <none>           <none>
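With this many pods, the three failing ones are easy to miss by eye. A minimal sketch of a filter for the listing above; the function name `unhealthy_pods` is my own, and it assumes the default `kubectl get pods` column order (NAME, READY, STATUS):

```shell
# Hypothetical helper: print "NAME STATUS" for every pod that is not fully ready.
# Reads `kubectl get pods` table output on stdin.
unhealthy_pods() {
  awk 'NR > 1 {
    if ($3 == "Completed") next          # finished jobs are not a failure
    split($2, ready, "/")                # READY column, e.g. "0/1"
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $3
  }'
}
```

On a machine with a working kubeconfig it would be used as `kubectl get pods -n kube-system | unhealthy_pods`.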

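1 The flannel problem

Reference: "Troubleshooting a flannel component stuck in CrashLoopBackOff in a K8s cluster"

That article is about a failure to load the ipvs kernel modules; you can check whether they loaded with lsmod | grep ip_vs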

    [root@master3 net.d]# cat /etc/sysconfig/modules/ipvs.modules
    #!/bin/sh
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4
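The lsmod check can be scripted so that it names the modules that are missing instead of leaving you to read the grep output. This is only a sketch: `missing_ipvs_modules` is a name I made up, and I left nf_conntrack_ipv4 out of the list because that module's name differs between kernel versions.

```shell
# Hypothetical helper: print each ip_vs module from ipvs.modules that is NOT loaded.
# Reads `lsmod` output on stdin.
missing_ipvs_modules() {
  loaded=$(awk 'NR > 1 { print $1 }')    # first column, header skipped
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
    # -x matches the whole line, so "ip_vs" does not match "ip_vs_rr"
    printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
  done
}
```

Usage would be `lsmod | missing_ipvs_modules`; empty output means all four modules are loaded.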

But that is not the failure I am seeing here:

    [root@master3 ~]# kubectl logs kube-flannel-ds-42z5p -n kube-system
    I0714 08:58:00.590712       1 main.go:519] Determining IP address of default interface
    I0714 08:58:00.687885       1 main.go:532] Using interface with name eth0 and address 10.128.2.173
    I0714 08:58:00.687920       1 main.go:549] Defaulting external address to interface address (10.128.2.173)
    W0714 08:58:00.687965       1 client_config.go:608] Neither --kubeconfig nor --master was specified.  Using the inClusterConfig.  This might not work.
    E0714 08:58:30.689584       1 main.go:250] Failed to create SubnetManager: error retrieving pod spec for 'kube-system/kube-flannel-ds-42z5p': Get "https://10.96.0.1:443/api/v1/namespaces/kube-system/pods/kube-flannel-ds-42z5p": dial tcp 10.96.0.1:443: i/o timeout
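The useful part of that log is the address flannel timed out on, because that is the endpoint to probe from the affected node. A small sketch that pulls it out of the log text; `dial_timeout_targets` is a hypothetical name, and the pattern only covers the "dial tcp HOST:PORT: i/o timeout" wording seen above:

```shell
# Hypothetical helper: list the unique host:port targets that timed out.
# Reads pod log text on stdin.
dial_timeout_targets() {
  sed -n 's/.*dial tcp \([0-9.]*:[0-9]*\): i\/o timeout.*/\1/p' | sort -u
}
```

For example: `kubectl logs kube-flannel-ds-42z5p -n kube-system | dial_timeout_targets`.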

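References I consulted:

"Fixing a flannel installation failure in k8s: Failed to create SubnetManager: error retrieving pod spec for : the server doe"

"Installing Kubernetes 1.6.1 with flannel on Ubuntu 16.04 using kubeadm"

"Quickly deploying a K8S cluster with kubeadm"

Checking the cluster: on a worker node that does not have the problem, the flanneld process is running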

    [root@wd5 ~]# ps -ef|grep flannel
    root      8359 28328  0 17:13 pts/0    00:00:00 grep --color=auto flannel
    root     22735 22714  0 May31 ?        00:26:16 /opt/bin/flanneld --ip-masq --kube-subnet-mgr

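The problematic worker node has no such process. Trying to apply the flannel RBAC manifest from that node fails as well: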

    [root@bg7 ~]# kubectl create -f https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel-rbac.yml
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

Check the status of the k8s cluster components

    [root@master3 ~]# kubectl get cs
    NAME                 STATUS      MESSAGE                                                                                     ERROR
    controller-manager   Unhealthy   Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused
    scheduler            Unhealthy   Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused
    etcd-0               Healthy     {"health":"true"}

Reference: "Fixing k8s Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1 connect: connection refused"

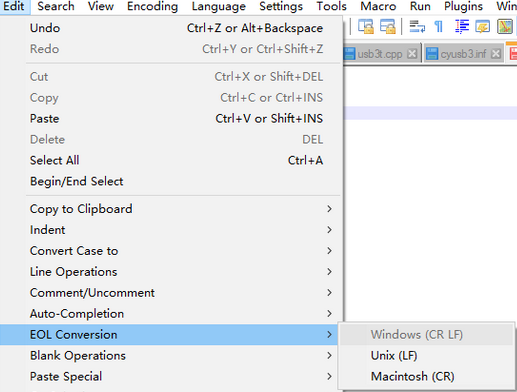

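To fix the connect: connection refused error, edit both manifests with vi /etc/kubernetes/manifests/kube-scheduler.yaml and vi /etc/kubernetes/manifests/kube-controller-manager.yaml

After commenting out the line - --port=0 in each file and running systemctl restart kubelet.service, the component status finally became healthy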
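The same edit can be done without opening vi. A minimal sketch, assuming the flag sits on its own line as `- --port=0`; the function name `comment_out_port_zero` and the backup location are my own choices. The backup is written outside the manifests directory on purpose, since the kubelet may try to load extra files it finds there as static pod manifests. Keep in mind that removing `--port=0` turns the unauthenticated HTTP health port back on, so this is a trade-off rather than a pure fix.

```shell
# Hypothetical helper: comment out "- --port=0" in a static pod manifest.
# $1 = manifest path, $2 = directory for the backup copy (default: /root).
comment_out_port_zero() {
  manifest=$1
  backup_dir=${2:-/root}
  cp "$manifest" "$backup_dir/$(basename "$manifest").bak" || return 1
  tmp=$(mktemp) || return 1
  # Keep the original indentation and prefix the list item with "# ".
  sed 's/^\([[:space:]]*\)- --port=0[[:space:]]*$/\1# - --port=0/' "$manifest" > "$tmp" \
    && cat "$tmp" > "$manifest"          # cat keeps the file's owner and mode
  rm -f "$tmp"
}
```

It would be run once per file, e.g. `comment_out_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml`, followed by the kubelet restart.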

    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}

However, the configuration change above did not fix the abnormal pod status.

Reference: "Investigating the k8s flannel network problem dial tcp 10.0.0.1 i/o timeout"

i/o timeout

Every healthy node has this cni virtual network interface, while the problematic node does not.

    [root@wd4 ~]# ifconfig
    cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
            inet 10.244.3.1  netmask 255.255.255.0  broadcast 10.244.3.255
            inet6 fe80::44d6:8ff:fe10:9c7e  prefixlen 64  scopeid 0x20<link>
            ether 46:d6:08:10:9c:7e  txqueuelen 1000  (Ethernet)
            RX packets 322756760  bytes 105007395106 (97.7 GiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 328180837  bytes 158487160202 (147.6 GiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

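The cni0 address falls in 10.244.x.x because the cluster network range configured in kube-controller-manager.yaml is 10.244.0.0/16

Checking the kubelet status on the node shows error messages near the bottom that I had not noticed before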

    [root@bg7 net.d]# service kubelet status
    Redirecting to /bin/systemctl status kubelet.service
    ● kubelet.service - kubelet: The Kubernetes Node Agent
       Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
      Drop-In: /usr/lib/systemd/system/kubelet.service.d
               └─10-kubeadm.conf
       Active: active (running) since Thu 2021-07-08 13:40:44 CST; 6 days ago
         Docs: https://kubernetes.io/docs/
     Main PID: 5290 (kubelet)
        Tasks: 45
       Memory: 483.8M
       CGroup: /system.slice/kubelet.service
               └─5290 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --co...

    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: E0714 18:30:58.322908    5290 cni.go:364] Error adding longhorn-system_longhorn-csi-plugi...rectory
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: E0714 18:30:58.355433    5290 cni.go:364] Error adding longhorn-system_engine-image-ei-e1...rectory
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: E0714 18:30:58.372196    5290 cni.go:364] Error adding longhorn-system_longhorn-manager-2...rectory
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: W0714 18:30:58.378600    5290 pod_container_deletor.go:77] Container "5ae13a0a2be56237a3f...tainers
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: W0714 18:30:58.395855    5290 pod_container_deletor.go:77] Container "ea0b2a805f720628172...tainers
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: W0714 18:30:58.411259    5290 pod_container_deletor.go:77] Container "63776660a9ee92b50ee...tainers
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: E0714 18:30:58.700878    5290 remote_runtime.go:105] RunPodSandbox from runtime service failed: ...
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: E0714 18:30:58.700942    5290 kuberuntime_sandbox.go:68] CreatePodSandbox for pod "longhorn-csi-...
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: E0714 18:30:58.700958    5290 kuberuntime_manager.go:733] createPodSandbox for pod "longhorn-csi...
    Jul 14 18:30:58 bg7.test.com.cn kubelet[5290]: E0714 18:30:58.701009    5290 pod_workers.go:191] Error syncing pod 3b0799d3-9446-4f51-94...446-4f5
    Hint: Some lines were ellipsized, use -l to show in full.

Install the network plugin from the worker node

    [root@bg7 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

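This error appears because kubectl on the worker node has no kubeconfig: the admin.conf from a master node has to be copied to the worker node and configured there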

    echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
    source ~/.bash_profile

    [root@bg7 kubernetes]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    podsecuritypolicy.policy/psp.flannel.unprivileged configured
    clusterrole.rbac.authorization.k8s.io/flannel unchanged
    clusterrolebinding.rbac.authorization.k8s.io/flannel unchanged
    serviceaccount/flannel unchanged
    configmap/kube-flannel-cfg unchanged
    daemonset.apps/kube-flannel-ds configured
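The localhost:8080 message is what kubectl falls back to when it finds no kubeconfig at all. A rough sketch of the lookup order as I understand it (the KUBECONFIG variable first, then ~/.kube/config); `active_kubeconfig` is a made-up name, and a colon-separated KUBECONFIG list is printed as-is rather than split:

```shell
# Hypothetical helper: print the kubeconfig kubectl would most likely pick up,
# or "none" (with a non-zero exit status) if neither location is set or present.
active_kubeconfig() {
  if [ -n "${KUBECONFIG:-}" ]; then
    printf '%s\n' "$KUBECONFIG"
  elif [ -f "$HOME/.kube/config" ]; then
    printf '%s\n' "$HOME/.kube/config"
  else
    echo "none"
    return 1
  fi
}
```

Running it on the worker before and after the export above should show the change from none to /etc/kubernetes/admin.conf.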

Reference: "Installing Kubernetes (flannel) with kubeadm"

Having run out of other ideas, I reset the worker node

    systemctl stop kubelet
    kubeadm reset
    rm -rf /etc/cni/net.d
    # Run this if the firewall is enabled
    iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
    # Join the cluster
    kubeadm join 10.128.4.18:16443 --token xfp80m.xx --discovery-token-ca-cert-hash sha256:dee39c2f7c7484af5872018d786626c9a6264da9334xxxxxxxx

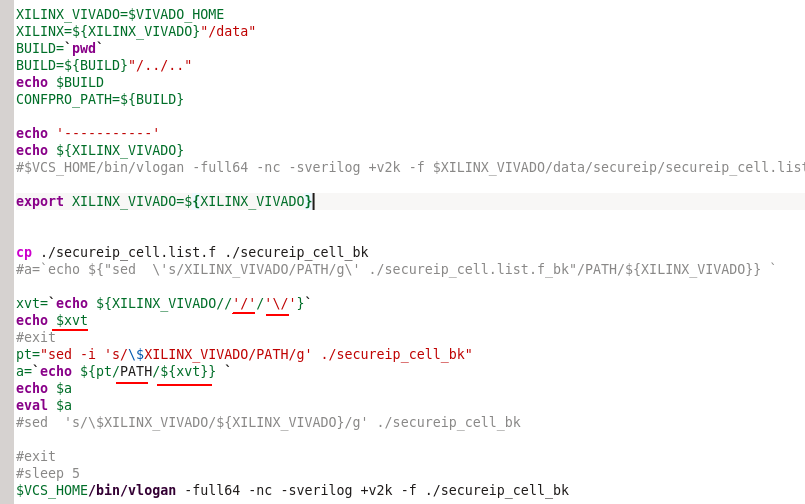

The root cause turned out to be that access to port 6443 was restricted

    [root@master2 ~]# netstat -ntlp | grep 6443
    tcp        0      0 0.0.0.0:16443           0.0.0.0:*               LISTEN      886/haproxy
    tcp6       0      0 :::6443                 :::*                    LISTEN      3006/kube-apiserver

    [root@bg7 net.d]# kubectl describe pod kube-flannel-ds-5jhm6 -n kube-system
    Name:                 kube-flannel-ds-5jhm6
    Namespace:            kube-system
    Priority:             2000001000
    Priority Class Name:  system-node-critical
    Node:                 bg7.test.com.cn/10.128.2.173
    Start Time:           Thu, 15 Jul 2021 14:17:39 +0800
    Labels:               app=flannel
                          controller-revision-hash=68c5dd74df
                          pod-template-generation=2
                          tier=node
    Annotations:          <none>
    Status:               Running
    IP:                   10.128.2.173
    IPs:
      IP:           10.128.2.173
    Controlled By:  DaemonSet/kube-flannel-ds
    Init Containers:
      install-cni:
        Container ID:  docker://f04fdac1c8d9d0f98bd11159aebb42f9870709fd6fa2bb96739f8d255967033a
        Image:         quay.io/coreos/flannel:v0.14.0
        Image ID:      docker-pullable://quay.io/coreos/flannel@sha256:4a330b2f2e74046e493b2edc30d61fdebbdddaaedcb32d62736f25be8d3c64d5
        Port:          <none>
        Host Port:     <none>
        Command:
          cp
        Args:
          -f
          /etc/kube-flannel/cni-conf.json
          /etc/cni/net.d/10-flannel.conflist
        State:          Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Thu, 15 Jul 2021 14:45:18 +0800
          Finished:     Thu, 15 Jul 2021 14:45:18 +0800
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /etc/cni/net.d from cni (rw)
          /etc/kube-flannel/ from flannel-cfg (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from flannel-token-wc2lq (ro)
    Containers:
      kube-flannel:
        Container ID:  docker://8ab52d4dc3c29d13d7453a33293a8696391f31826afdc1981a1df9c7eafd6994
        Image:         quay.io/coreos/flannel:v0.14.0
        Image ID:      docker-pullable://quay.io/coreos/flannel@sha256:4a330b2f2e74046e493b2edc30d61fdebbdddaaedcb32d62736f25be8d3c64d5
        Port:          <none>
        Host Port:     <none>
        Command:
          /opt/bin/flanneld
        Args:
          --ip-masq
          --kube-subnet-mgr
        State:          Waiting
          Reason:       CrashLoopBackOff
        Last State:     Terminated
          Reason:       Error
          Exit Code:    1
          Started:      Thu, 15 Jul 2021 15:27:58 +0800
          Finished:     Thu, 15 Jul 2021 15:28:29 +0800
        Ready:          False
        Restart Count:  12
        Limits:
          cpu:     100m
          memory:  50Mi
        Requests:
          cpu:     100m
          memory:  50Mi
        Environment:
          POD_NAME:       kube-flannel-ds-5jhm6 (v1:metadata.name)
          POD_NAMESPACE:  kube-system (v1:metadata.namespace)
        Mounts:
          /etc/kube-flannel/ from flannel-cfg (rw)
          /run/flannel from run (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from flannel-token-wc2lq (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      run:
        Type:          HostPath (bare host directory volume)
        Path:          /run/flannel
        HostPathType:
      cni:
        Type:          HostPath (bare host directory volume)
        Path:          /etc/cni/net.d
        HostPathType:
      flannel-cfg:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      kube-flannel-cfg
        Optional:  false
      flannel-token-wc2lq:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  flannel-token-wc2lq
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     :NoSchedule
                     node.kubernetes.io/disk-pressure:NoSchedule
                     node.kubernetes.io/memory-pressure:NoSchedule
                     node.kubernetes.io/network-unavailable:NoSchedule
                     node.kubernetes.io/not-ready:NoExecute
                     node.kubernetes.io/pid-pressure:NoSchedule
                     node.kubernetes.io/unreachable:NoExecute
                     node.kubernetes.io/unschedulable:NoSchedule
    Events:
      Type     Reason   Age                   From     Message
      ----     ------   ----                  ----     -------
      Normal   Pulled   48m                   kubelet  Container image "quay.io/coreos/flannel:v0.14.0" already present on machine
      Normal   Created  48m                   kubelet  Created container install-cni
      Normal   Started  48m                   kubelet  Started container install-cni
      Normal   Created  44m (x5 over 48m)     kubelet  Created container kube-flannel
      Normal   Started  44m (x5 over 48m)     kubelet  Started container kube-flannel
      Normal   Pulled   28m (x9 over 48m)     kubelet  Container image "quay.io/coreos/flannel:v0.14.0" already present on machine
      Warning  BackOff  3m8s (x177 over 47m)  kubelet  Back-off restarting failed container

    journalctl -xeu kubelet
    "longhorn-csi-plugin-fw2ck_longhorn-system" network: open /run/flannel/subnet.env: no such file or directory

    [root@wd4 flannel]# cat subnet.env
    FLANNEL_NETWORK=10.244.0.0/16
    FLANNEL_SUBNET=10.244.3.1/24
    FLANNEL_MTU=1450
    FLANNEL_IPMASQ=true
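The kubelet error boils down to /run/flannel/subnet.env not existing on bg7, because flanneld never got far enough to write it. A small sketch for checking this on any node; `flannel_subnet` is a hypothetical name and the default path is the one from the error message above:

```shell
# Hypothetical helper: print the node's flannel subnet, or fail if subnet.env is missing.
# $1 = path to subnet.env (default: /run/flannel/subnet.env)
flannel_subnet() {
  file=${1:-/run/flannel/subnet.env}
  if [ ! -r "$file" ]; then
    echo "missing: $file (flanneld has probably not started successfully)" >&2
    return 1
  fi
  sed -n 's/^FLANNEL_SUBNET=//p' "$file"
}
```

On wd4 this should print 10.244.3.1/24, matching the cni0 address; on the broken node it should report the missing file.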

As the output below shows, 10.96.0.1 answers ping, but port 443 cannot be reached

    [root@bg7 ~]# ping 10.96.0.1
    PING 10.96.0.1 (10.96.0.1) 56(84) bytes of data.
    64 bytes from 10.96.0.1: icmp_seq=1 ttl=64 time=0.034 ms

    [root@bg7 ~]# telnet 10.96.0.1 443
    Trying 10.96.0.1...
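telnet hangs until interrupted, which is awkward in a script. A minimal sketch of a TCP probe with a timeout, assuming bash (for its /dev/tcp pseudo-device) and the coreutils timeout command are installed; `tcp_open` is my own name:

```shell
# Hypothetical helper: succeed only if a TCP connection to host:port opens in time.
# $1 = host, $2 = port, $3 = timeout in seconds (default: 3)
tcp_open() {
  # Inside `bash -c`, $0 is the host and $1 is the port passed after the script.
  timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}
```

From the affected node, `tcp_open 10.96.0.1 443 && echo open || echo blocked` would tell the service VIP apart from the real API server address, which can be probed the same way on port 6443.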

2 etcd-master1

    [root@master1 ~]# kubectl logs etcd-master1 -n kube-system
    [WARNING] Deprecated '--logger=capnslog' flag is set; use '--logger=zap' flag instead
    2021-07-14 09:56:08.703026 I | etcdmain: etcd Version: 3.4.3
    2021-07-14 09:56:08.703052 I | etcdmain: Git SHA: 3cf2f69b5
    2021-07-14 09:56:08.703055 I | etcdmain: Go Version: go1.12.12
    2021-07-14 09:56:08.703058 I | etcdmain: Go OS/Arch: linux/amd64
    2021-07-14 09:56:08.703062 I | etcdmain: setting maximum number of CPUs to 16, total number of available CPUs is 16
    2021-07-14 09:56:08.703101 N | etcdmain: the server is already initialized as member before, starting as etcd member...
    [WARNING] Deprecated '--logger=capnslog' flag is set; use '--logger=zap' flag instead
    2021-07-14 09:56:08.703131 I | embed: peerTLS: cert = /etc/kubernetes/pki/etcd/peer.crt, key = /etc/kubernetes/pki/etcd/peer.key, trusted-ca = /etc/kubernetes/pki/etcd/ca.crt, client-cert-auth = true, crl-file =
    2021-07-14 09:56:08.703235 C | etcdmain: open /etc/kubernetes/pki/etcd/peer.crt: no such file or directory

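This one looked comparatively simple: copy the etcd certificates and related files over from another master node. Since the master nodes of a k8s cluster are peers, my guess was that the copied files could be used directly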

    [root@master2 ~]# cd /etc/kubernetes/pki/etcd
    [root@master2 etcd]# ll
    total 32
    -rw-r--r-- 1 root root 1017 Mar 12 11:59 ca.crt
    -rw------- 1 root root 1675 Mar 12 11:59 ca.key
    -rw-r--r-- 1 root root 1094 Mar 12 13:47 healthcheck-client.crt
    -rw------- 1 root root 1675 Mar 12 13:47 healthcheck-client.key
    -rw-r--r-- 1 root root 1127 Mar 12 13:47 peer.crt
    -rw------- 1 root root 1675 Mar 12 13:47 peer.key
    -rw-r--r-- 1 root root 1127 Mar 12 13:47 server.crt
    -rw------- 1 root root 1675 Mar 12 13:47 server.key

    cd /etc/kubernetes/pki/etcd
    scp healthcheck-client.crt root@10.128.4.164:/etc/kubernetes/pki/etcd
    scp healthcheck-client.key peer.crt peer.key server.crt server.key root@10.128.4.164:/etc/kubernetes/pki/etcd
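Before and after copying, it helps to see exactly which files are absent on the target. A sketch that compares a directory against the eight file names in the master2 listing above; `missing_etcd_certs` is a name I invented:

```shell
# Hypothetical helper: print each expected etcd PKI file that is missing.
# $1 = PKI directory (default: /etc/kubernetes/pki/etcd)
missing_etcd_certs() {
  dir=${1:-/etc/kubernetes/pki/etcd}
  for f in ca.crt ca.key healthcheck-client.crt healthcheck-client.key \
           peer.crt peer.key server.crt server.key; do
    [ -f "$dir/$f" ] || echo "$f"
  done
}
```

File presence is only part of the picture. kubeadm normally issues the etcd server and peer certificates with the node's own hostname and IP as SANs, so certificates copied from another master may still be rejected; `openssl x509 -in peer.crt -noout -text` shows the SANs a certificate actually carries.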

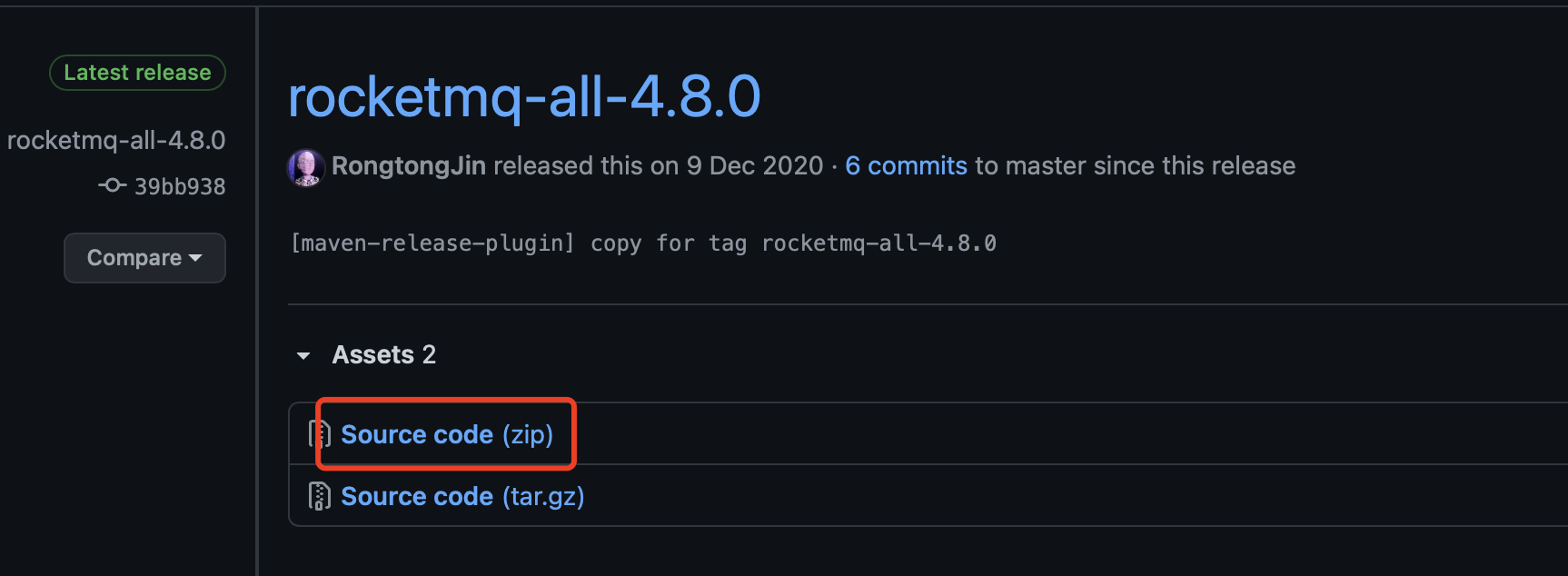

To inspect etcd, install the etcdctl command-line client with the commands below; this is the etcd access tool installed on the host itself

    wget https://github.com/etcd-io/etcd/releases/download/v3.4.14/etcd-v3.4.14-linux-amd64.tar.gz
    tar -zxf etcd-v3.4.14-linux-amd64.tar.gz
    mv etcd-v3.4.14-linux-amd64/etcdctl /usr/local/bin
    chmod +x /usr/local/bin/etcdctl

Besides the approach above, you can also exec directly into the Docker container

    docker exec -it $(docker ps -f name=etcd_etcd -q) /bin/sh
    # List the members of the etcd cluster
    # etcdctl --endpoints 127.0.0.1:2379 --cacert /etc/kubernetes/pki/etcd/ca.crt --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key member list
    63009835561e0671, started, master1, https://10.128.4.164:2380, https://10.128.4.164:2379, false
    b245d1beab861d15, started, master2, https://10.128.4.251:2380, https://10.128.4.251:2379, false
    f3f56f36d83eef49, started, master3, https://10.128.4.211:2380, https://10.128.4.211:2379, false

Check the health of the HA etcd cluster

    [root@master3 application]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out=table --endpoints=10.128.4.164:2379,10.128.4.251:2379,10.128.4.211:2379 endpoint health
    {"level":"warn","ts":"2021-07-14T19:37:51.455+0800","caller":"clientv3/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"endpoint://client-2684301f-38ba-4150-beab-ed052321a6d9/10.128.4.164:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}
    +-------------------+--------+------------+---------------------------+
    |     ENDPOINT      | HEALTH |    TOOK    |           ERROR           |
    +-------------------+--------+------------+---------------------------+
    | 10.128.4.211:2379 |   true | 8.541405ms |                           |
    | 10.128.4.251:2379 |   true | 8.922941ms |                           |
    | 10.128.4.164:2379 |  false | 5.0002425s | context deadline exceeded |
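For use in a script, the failing endpoints can be pulled out of that table. A sketch that assumes the `--write-out=table` layout shown above, with HEALTH as the second column; `unhealthy_endpoints` is a hypothetical name:

```shell
# Hypothetical helper: print endpoints whose HEALTH column is "false".
# Reads `etcdctl ... --write-out=table endpoint health` output on stdin.
unhealthy_endpoints() {
  awk -F'|' 'NF >= 5 {
    gsub(/ /, "", $2)                    # endpoint, padding removed
    gsub(/ /, "", $3)                    # health flag, padding removed
    if ($3 == "false") print $2
  }'
}
```

Border lines and the JSON warning contain no `|` separators in the right number, so they are skipped; etcdctl writes the warning to stderr anyway.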

List the members of the HA etcd cluster

    [root@master3 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out=table --endpoints=10.128.4.164:2379,10.128.4.251:2379,10.128.4.211:2379 member list
    +------------------+---------+---------+---------------------------+---------------------------+------------+
    |        ID        | STATUS  |  NAME   |        PEER ADDRS         |       CLIENT ADDRS        | IS LEARNER |
    +------------------+---------+---------+---------------------------+---------------------------+------------+
    | 63009835561e0671 | started | master1 | https://10.128.4.164:2380 | https://10.128.4.164:2379 |      false |
    | b245d1beab861d15 | started | master2 | https://10.128.4.251:2380 | https://10.128.4.251:2379 |      false |
    | f3f56f36d83eef49 | started | master3 | https://10.128.4.211:2380 | https://10.128.4.211:2379 |      false |
    +------------------+---------+---------+---------------------------+---------------------------+------------+

Find the leader of the HA etcd cluster

    [root@master3 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out=table --endpoints=10.128.4.164:2379,10.128.4.251:2379,10.128.4.211:2379 endpoint status
    {"level":"warn","ts":"2021-07-15T10:24:33.494+0800","caller":"clientv3/retry_interceptor.go:62","msg":"retrying of unary invoker failed","target":"passthrough:///10.128.4.164:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = latest balancer error: connection error: desc = \"transport: Error while dialing dial tcp 10.128.4.164:2379: connect: connection refused\""}
    Failed to get the status of endpoint 10.128.4.164:2379 (context deadline exceeded)
    +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    |     ENDPOINT      |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
    +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
    | 10.128.4.251:2379 | b245d1beab861d15 |   3.4.3 |   25 MB |     false |      false |        16 |   46888364 |           46888364 |        |
    | 10.128.4.211:2379 | f3f56f36d83eef49 |   3.4.3 |   25 MB |      true |      false |        16 |   46888364 |           46888364 |        |
    +-------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

I copied the valid certificates to master1 with the commands below, but the problem persisted

    scp /etc/kubernetes/pki/ca.* root@10.128.4.164:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.* root@10.128.4.164:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.* root@10.128.4.164:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.* root@10.128.4.164:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/admin.conf root@10.128.4.164:/etc/kubernetes/

The next idea was to remove the master node from the cluster and join it again

Reference: "Removing a master node server from a k8s cluster and rejoining it"

    # Remove the problematic master node from k8s
    kubectl drain master1
    kubectl delete node master1
    # Remove the corresponding member from etcd; the ID 12637f5ec2bd02b8 is an example, look up the real one in the etcd member list
    etcdctl --endpoints 127.0.0.1:2379 --cacert /etc/kubernetes/pki/etcd/ca.crt --cert /etc/kubernetes/pki/etcd/server.crt --key /etc/kubernetes/pki/etcd/server.key member remove 12637f5ec2bd02b8
    # Note: run the following on a healthy master node
    mkdir -p /etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/pki/ca.* root@10.128.4.164:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/sa.* root@10.128.4.164:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/front-proxy-ca.* root@10.128.4.164:/etc/kubernetes/pki/
    scp /etc/kubernetes/pki/etcd/ca.* root@10.128.4.164:/etc/kubernetes/pki/etcd/
    scp /etc/kubernetes/admin.conf root@10.128.4.164:/etc/kubernetes/
    # Note: run the following on the problematic master node
    kubeadm reset
    # Note: this is also run on the problematic node
    kubeadm join 10.128.4.18:16443 --token xfp80m.tzbnqxoyv1p21687 --discovery-token-ca-cert-hash sha256:dee39c2f7c7484af5872018d786626c9a6264da93346acc9114ffacd0a2782d7 --control-plane
    kubectl cordon master1
    # That is all; the kube-apiserver-master1 problem was resolved at the same time
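The member ID passed to member remove has to come from the live member list, and it is easy to paste the wrong one. A small sketch that looks the ID up by node name from the comma-separated `member list` output shown earlier; `etcd_member_id` is a made-up name:

```shell
# Hypothetical helper: print the etcd member ID for a given member name.
# Reads plain (comma-separated) `etcdctl member list` output on stdin.
# $1 = member name, e.g. master1
etcd_member_id() {
  awk -F', ' -v name="$1" '$3 == name { print $1 }'
}
```

With the etcdctl flags used above, `etcdctl ... member list | etcd_member_id master1` would print the ID to hand to `member remove`.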

If you accidentally run kubeadm reset on a machine that was healthy, you get the situation below, where master3 has become NotReady

    [root@master1 pki]# kubectl get nodes
    NAME              STATUS                        ROLES    AGE     VERSION
    bg7.test.com.cn   Ready                         <none>   7d22h   v1.18.9
    master1           Ready                         master   6m6s    v1.18.9
    master2           Ready,SchedulingDisabled      master   124d    v1.18.9
    master3           NotReady,SchedulingDisabled   master   124d    v1.18.9
    wd4.test.com.cn   Ready                         <none>   124d    v1.18.9

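For a fix, see "How to recover when kubeadm reset is run by mistake on a K8S master or worker node"

The procedure below did not work for me; I again succeeded by removing the node first and then adding it back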

    scp /etc/kubernetes/admin.conf root@10.128.2.173:/etc/kubernetes/
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    kubeadm init --kubernetes-version=v1.18.9 --pod-network-cidr=10.244.0.0/16
    echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
    source ~/.bash_profile

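3 Node scheduling problem

SchedulingDisabled means the node is unschedulable, which is clearly a problem for worker nodes. Running kubectl uncordon wd9.test.com.cn makes a previously unschedulable node schedulable again

Conversely, kubectl cordon master1 marks the master node unschedulable again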

    [root@master1 pki]# kubectl get nodes
    NAME              STATUS                     ROLES    AGE    VERSION
    bg7.test.com.cn   Ready,SchedulingDisabled   <none>   7d6h   v1.18.9
    master1           Ready                      master   124d   v1.18.9
    master2           Ready                      master   124d   v1.18.9
    master3           Ready                      master   124d   v1.18.9
    wd4.test.com.cn   Ready                      <none>   124d   v1.18.9
    wd5.test.com.cn   Ready                      <none>   124d   v1.18.9
    wd6.test.com.cn   Ready,SchedulingDisabled   <none>   124d   v1.18.9
    wd7.test.com.cn   Ready                      <none>   34d    v1.18.9
    wd8.test.com.cn   Ready,SchedulingDisabled   <none>   43d    v1.18.9
    wd9.test.com.cn   Ready
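Several workers are still cordoned in that listing. A sketch that lists them so each can be passed to kubectl uncordon; `cordoned_nodes` is a hypothetical name, and it assumes STATUS is the second column of `kubectl get nodes`:

```shell
# Hypothetical helper: print the names of nodes marked SchedulingDisabled.
# Reads `kubectl get nodes` table output on stdin.
cordoned_nodes() {
  awk 'NR > 1 && $2 ~ /SchedulingDisabled/ { print $1 }'
}
```

Usage: `kubectl get nodes | cordoned_nodes`. Whether a given node should be uncordoned is still a judgment call, since master nodes are often left cordoned on purpose.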
