Fixing the "zookeeper is not a recognized option..." error when testing a Kafka consumer

Use case: Kafka as the message buffer for ELK logs

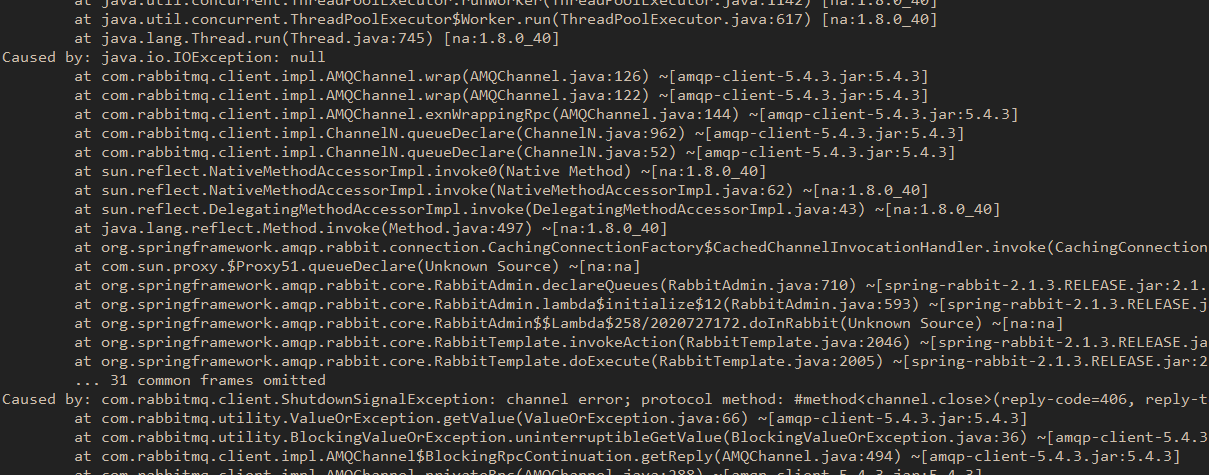

Once the setup was complete, testing with the Kafka console consumer produced the following:

    [root@filebeat opt]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.10.1:2181,192.168.10.2:2181,192.168.10.7:2181 --topic message --from-beginning
    zookeeper is not a recognized option
    Option                                   Description
    ------                                   -----------
    --bootstrap-server <String: server to    REQUIRED: The server(s) to connect to.
      connect to>
    --consumer-property <String:             A mechanism to pass user-defined
      consumer_prop>                           properties in the form key=value to
                                               the consumer.
    --consumer.config <String: config file>  Consumer config properties file. Note
                                               that [consumer-property] takes
                                               precedence over this config.
    --enable-systest-events                  Log lifecycle events of the consumer
                                               in addition to logging consumed
                                               messages. (This is specific for
                                               system tests.)
    --formatter <String: class>              The name of a class to use for
                                               formatting kafka messages for
                                               display. (default: kafka.tools.
                                               DefaultMessageFormatter)
    --from-beginning                         If the consumer does not already have
                                               an established offset to consume
                                               from, start with the earliest
                                               message present in the log rather
                                               than the latest message.
    --group <String: consumer group id>      The consumer group id of the consumer.
    --help                                   Print usage information.
    --isolation-level <String>               Set to read_committed in order to
                                               filter out transactional messages
                                               which are not committed. Set to
                                               read_uncommitted to read all
                                               messages. (default: read_uncommitted)
    --key-deserializer <String:
      deserializer for key>
    --max-messages <Integer: num_messages>   The maximum number of messages to
                                               consume before exiting. If not set,
                                               consumption is continual.
    --offset <String: consume offset>        The offset id to consume from (a non-
                                               negative number), or 'earliest'
                                               which means from beginning, or
                                               'latest' which means from end
                                               (default: latest)
    --partition <Integer: partition>         The partition to consume from.
                                               Consumption starts from the end of
                                               the partition unless '--offset' is
                                               specified.
    --property <String: prop>                The properties to initialize the
                                               message formatter. Default
                                               properties include:
                                              print.timestamp=true|false
                                              print.key=true|false
                                              print.value=true|false
                                              key.separator=<key.separator>
                                              line.separator=<line.separator>
                                              key.deserializer=<key.deserializer>
                                              value.deserializer=<value.deserializer>
                                             Users can also pass in customized
                                               properties for their formatter; more
                                               specifically, users can pass in
                                               properties keyed with 'key.
                                               deserializer.' and 'value.
                                               deserializer.' prefixes to configure
                                               their deserializers.
    --skip-message-on-error                  If there is an error when processing a
                                               message, skip it instead of halt.
    --timeout-ms <Integer: timeout_ms>       If specified, exit if no message is
                                               available for consumption for the
                                               specified interval.
    --topic <String: topic>                  The topic id to consume on.
    --value-deserializer <String:
      deserializer for values>
    --version                                Display Kafka version.
    --whitelist <String: whitelist>          Regular expression specifying
                                               whitelist of topics to include for
                                               consumption.

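Anyone with some experience can tell from this output that the command syntax is wrong: the tool does not recognize the --zookeeper option and prints its usage text instead.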

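After some research, this turned out to be a version issue. Older versions accept the syntax above; newer versions use the following form, where the broker address and the topic name are placeholders:

    /opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server <IP of the broker under test>:9092 --topic <topic name> --from-beginning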

    [root@filebeat opt]# /opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server 192.168.10.7:9092 --topic message --from-beginning
    hellohilalalala
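To avoid retyping the new-style command, it can be assembled from a topic name and one or more broker hosts. The following is only a minimal sketch: the function name consumer_cmd and the KAFKA_HOME and KAFKA_PORT variables are made up for this illustration, and the defaults (/opt/kafka and 9092) are simply the values used in this post. It prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of Kafka): print the new-style
# console-consumer command for a topic and a list of broker hosts.
# Usage: consumer_cmd TOPIC BROKER_HOST [BROKER_HOST...]
# The body runs in a subshell so its variables do not leak.
consumer_cmd() (
    topic="$1"
    shift
    # Assumed defaults, taken from the paths and port used in this post.
    home="${KAFKA_HOME:-/opt/kafka}"
    port="${KAFKA_PORT:-9092}"
    servers=""
    for host in "$@"; do
        # Join host:port pairs with commas.
        servers="${servers:+$servers,}${host}:${port}"
    done
    printf '%s --bootstrap-server %s --topic %s --from-beginning\n' \
        "$home/bin/kafka-console-consumer.sh" "$servers" "$topic"
)
```

For example, consumer_cmd message 192.168.10.7 prints the same command that was run above.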

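I am not sure exactly where the line between old and new versions falls. The Kafka package I used is kafka_2.12-2.5.0.tgz, and it only accepts the second form.

As for your version, just try both forms and see which one it supports.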
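Instead of trial and error, one option is to check whether a given flag shows up in the usage text the tool prints. This is a rough sketch, not an official method: supports_option is a name invented here, and it assumes option names appear as the first word of a line in the usage output, as they do in the 2.5.0 output shown earlier. The commented-out invocation assumes --help prints that same option list.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit 0) if the usage text read from
# stdin has a line whose first whitespace-separated field is exactly
# the option name given as $1; fail (exit 1) otherwise.
supports_option() {
    awk -v opt="$1" '$1 == opt { found = 1 } END { exit !found }'
}

# Example (needs a local Kafka install, so it is left commented out):
# /opt/kafka/bin/kafka-console-consumer.sh --help 2>&1 | supports_option --zookeeper \
#     && echo "old --zookeeper syntax is accepted" \
#     || echo "use --bootstrap-server instead"
```

Redirecting stderr into the pipe (2>&1) is a precaution, since the usage text may not be written to stdout.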
