Clustering Algorithms in Machine Learning: Visualizing Clustering Evaluation

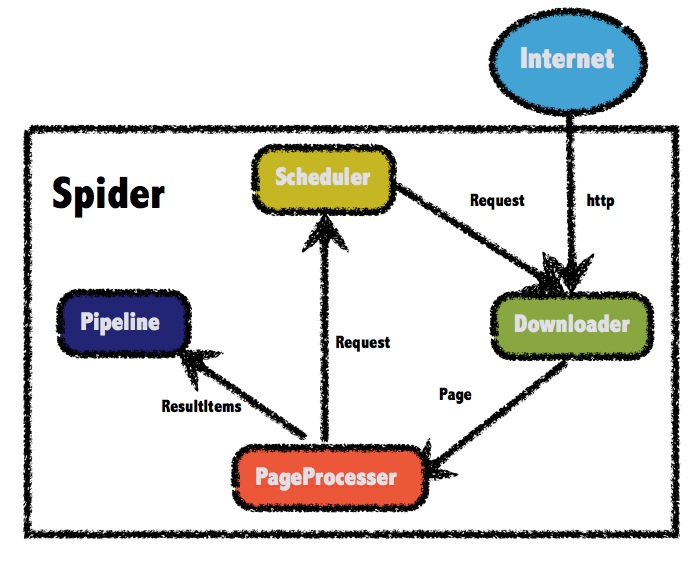

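In my earlier post 机器学习之聚类算法应用篇 (on applying clustering algorithms), I mentioned that the silhouette coefficient is commonly used to evaluate clustering results. A larger silhouette coefficient is not always better, however, as the two figures below show. The red dashed line in each figure marks the average silhouette score: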

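Setting the number of clusters to 2 gives the highest silhouette coefficient, 0.705, but that is not necessarily the best clustering: this test set clearly contains 4 clusters. To show the silhouette coefficients of the individual clusters visually and help choose clustering parameters, 【机器学习】菜菜的sklearn课堂06 - 聚类算法与KMeans provides code that draws the plots above. The fully commented code is at the end of this post.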
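The effect can also be checked numerically. The following is a minimal sketch, assuming the figures come from the same `make_blobs` data used in the commented plotting code at the end of this post; `n_init=10` is my own choice and is not set in the original code.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic data: 500 two-dimensional points drawn around 4 centers
X, _ = make_blobs(n_samples=500, n_features=2, centers=4, random_state=1)

scores = {}
for k in range(2, 8):
    # n_init=10 is an assumption; the original code relies on the default
    labels = KMeans(n_clusters=k, n_init=10, random_state=10).fit_predict(X)
    scores[k] = silhouette_score(X, labels)
    print(f"n_clusters={k}: average silhouette = {scores[k]:.3f}")

# The k with the highest average score is not necessarily the true cluster count
best_k = max(scores, key=scores.get)
print("highest average silhouette at n_clusters =", best_k)
```

The average score alone hides how the individual clusters behave, which is why the per-cluster silhouette plot is worth drawing.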

I have also wrapped the two clustering-visualization scripts into functions so that they are easier to tune and call.

cluster_algos.py

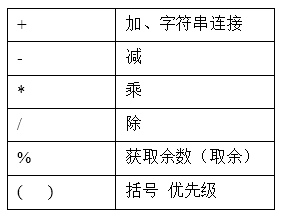

The first script, cluster_algos.py, compares the results of several clustering algorithms:

    # Compare the results of different clustering algorithms
    import functools
    import time

    import matplotlib.pyplot as plt
    import pandas as pd
    from sklearn.cluster import (KMeans, DBSCAN, SpectralClustering, Birch,
                                 MeanShift, AgglomerativeClustering)
    from sklearn.decomposition import PCA
    from sklearn.metrics import silhouette_score


    def time_cost(func):
        """Wrap func so that it returns (result, elapsed_seconds)."""
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            t0 = time.time()
            # Call func exactly once so the timing matches the returned result
            result = func(*args, **kwargs)
            t1 = time.time()
            print(args[0], ':%.2fs' % (t1 - t0))
            return result, t1 - t0
        return wrapper


    def load_data(file):
        assert file != ''
        df = pd.read_csv(file)
        x = df.values
        # The 2D PCA projection is used for plotting only;
        # clustering runs on the full feature matrix x
        pca = PCA(n_components=2)
        pca_result = pca.fit_transform(x)
        return x, pca_result


    @time_cost
    def cluster_function(model_name, model, data):
        y_pred = model.fit_predict(data)
        return y_pred


    if __name__ == '__main__':
        model_list = {
            "AgglomerativeClustering": AgglomerativeClustering(n_clusters=4),
            "KMeans": KMeans(n_clusters=4, random_state=10),
            "DBSCAN": DBSCAN(eps=0.1),
            "Birch": Birch(n_clusters=4),
            "SpectralClustering": SpectralClustering(n_clusters=4, random_state=10),
            "MeanShift": MeanShift(),
        }
        x, pca_result = load_data('./data.csv')
        fig = plt.figure(figsize=(15, 10))
        # One subplot per algorithm, laid out on a 2 x 3 grid
        for i, (name, model) in enumerate(model_list.items(), start=1):
            fig.add_subplot(2, 3, i)
            labels, elapsed = cluster_function(name, model, x)
            plt.scatter(pca_result[:, 0], pca_result[:, 1], marker='.', c=labels)
            # Title: algorithm name and its average silhouette score
            plt.title("{}({})".format(name, silhouette_score(x, labels)))
            # Running time in the lower-right corner of the subplot
            plt.text(.99, .01, ('%.2fs' % elapsed).lstrip('0'),
                     transform=plt.gca().transAxes, horizontalalignment='right')
        plt.show()
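One thing to watch when comparing algorithms this way: `silhouette_score` raises a `ValueError` when the labels contain fewer than two distinct values, which can happen with DBSCAN (every point marked as noise, label -1) or MeanShift (a single cluster). A minimal sketch of a guard follows. `safe_silhouette` is a hypothetical helper of mine, not part of scikit-learn, and the `make_blobs` data and `eps` values are illustrative only.

```python
from sklearn.cluster import DBSCAN
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score


def safe_silhouette(data, labels):
    """Hypothetical helper: return NaN where the silhouette score is undefined."""
    n_labels = len(set(labels))
    # silhouette_score requires 2 <= n_labels <= n_samples - 1
    if n_labels < 2 or n_labels > len(labels) - 1:
        return float("nan")
    return silhouette_score(data, labels)


# Illustrative data, not the data.csv used in the script above
X, _ = make_blobs(n_samples=200, centers=3, random_state=0)

# With a tiny eps no point has enough neighbours, so every label is -1
noise_only = DBSCAN(eps=1e-6).fit_predict(X)
print(safe_silhouette(X, noise_only))  # NaN instead of a ValueError

# A larger eps may or may not yield two or more labels; the guard covers both
usable = DBSCAN(eps=1.0).fit_predict(X)
print(safe_silhouette(X, usable))
```

Note that when DBSCAN returns a mix of clusters and noise, `silhouette_score` treats the noise label -1 as a cluster of its own, so scores are not strictly comparable across algorithms.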

The figure generated by cluster_algos.py is shown below:

clusters_test

The second script tests how the choice of the number of clusters affects the result for a given clustering algorithm:

    # Compare clustering results for different numbers of clusters
    import functools
    import time

    import matplotlib.cm as cm
    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    from sklearn.cluster import (KMeans, DBSCAN, SpectralClustering, Birch,
                                 MeanShift, AgglomerativeClustering)
    from sklearn.decomposition import PCA
    from sklearn.metrics import silhouette_score, silhouette_samples


    def time_cost(func):
        """Wrap func so that it returns (result, elapsed_seconds)."""
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            t0 = time.time()
            # Call func exactly once so the model is fitted only once
            result = func(*args, **kwargs)
            t1 = time.time()
            return result, t1 - t0
        return wrapper


    @time_cost
    def cluster_function(model_name, model, data):
        model = model.fit(data)
        return model


    def load_data(file):
        assert file != ''
        df = pd.read_csv(file)
        x = df.values
        pca = PCA(n_components=2)
        pca_result = pca.fit_transform(x)
        # Also return the fitted PCA so cluster centers can be projected later
        return x, pca_result, pca


    def cluster_test(model_name, model, X, clusters_list=(2, 3, 4, 5, 6, 7)):
        # Uses the module-level pca_result and pca created by load_data below
        for n_clusters in clusters_list:
            if hasattr(model, "n_clusters"):
                model.set_params(n_clusters=n_clusters)
            elif len(clusters_list) >= 2 and n_clusters == clusters_list[1]:
                # Models without n_clusters are plotted once, then we stop
                print("{} does not have parameter 'n_clusters', returning.".format(model_name))
                return

            fig, (ax1, ax2) = plt.subplots(1, 2)
            fig.set_size_inches(18, 7)
            ax1.set_xlim([-0.1, 1])
            ax1.set_ylim([0, X.shape[0] + (n_clusters + 1) * 10])

            clusterer, t = cluster_function(model_name, model, X)
            cluster_labels = clusterer.labels_
            silhouette_avg = silhouette_score(X, cluster_labels)
            print("For n_clusters = ", n_clusters,
                  " the average silhouette_score is ", silhouette_avg)
            sample_silhouette_values = silhouette_samples(X, cluster_labels)

            # Left plot: one sorted silhouette band per cluster
            y_lower = 10
            for i in range(n_clusters):
                ith_cluster_silhouette_values = sample_silhouette_values[cluster_labels == i]
                ith_cluster_silhouette_values.sort()
                size_cluster_i = ith_cluster_silhouette_values.shape[0]
                y_upper = y_lower + size_cluster_i
                color = cm.nipy_spectral(float(i) / n_clusters)
                ax1.fill_betweenx(np.arange(y_lower, y_upper),
                                  ith_cluster_silhouette_values,
                                  facecolor=color, alpha=0.7)
                ax1.text(-0.05, y_lower + 0.5 * size_cluster_i, str(i))
                y_lower = y_upper + 10

            ax1.set_title("The silhouette plot for the various clusters")
            ax1.set_xlabel("The silhouette coefficient values")
            ax1.set_ylabel("Cluster label")
            ax1.axvline(x=silhouette_avg, color='red', linestyle="--")
            ax1.set_yticks([])
            ax1.set_xticks([-0.1, 0, 0.2, 0.4, 0.6, 0.8, 1])

            # Right plot: the samples in the 2D PCA projection
            colors = cm.nipy_spectral(cluster_labels.astype(float) / n_clusters)
            ax2.scatter(pca_result[:, 0], pca_result[:, 1], marker='o', s=8, c=colors)
            if hasattr(clusterer, 'cluster_centers_'):
                # Centers live in the original feature space; project them
                # with the same PCA so they share the scatter plot's axes
                centers = pca.transform(clusterer.cluster_centers_)
                ax2.scatter(centers[:, 0], centers[:, 1], marker='x',
                            c='red', alpha=1, s=200)
            ax2.text(.99, .01, ('%.2fs' % t).lstrip('0'),
                     transform=ax2.transAxes, size=12, horizontalalignment='right')
            ax2.set_title("The visualization of the clustered data")
            ax2.set_xlabel("Feature space for the 1st feature")
            ax2.set_ylabel("Feature space for the 2nd feature")
            plt.suptitle("Silhouette analysis for {} clustering on sample data "
                         "with n_clusters = {} ({})".format(model_name, n_clusters, silhouette_avg),
                         fontsize=14, fontweight="bold")
            plt.show()


    # Load the data
    x, pca_result, pca = load_data('data.csv')
    cluster_test("AgglomerativeClustering", AgglomerativeClustering(), x)
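A detail worth spelling out: the scatter plot uses the 2D PCA projection, while a model's `cluster_centers_` are expressed in the original feature space, so the centers need the same projection before they are drawn. A minimal sketch follows, using illustrative 5-dimensional `make_blobs` data rather than data.csv; `n_init=10` is my own choice.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA

# Illustrative 5-dimensional data (an assumption, not the post's data.csv)
X, _ = make_blobs(n_samples=300, n_features=5, centers=4, random_state=1)

# Fit the PCA once and keep it so other points can be projected later
pca = PCA(n_components=2)
points_2d = pca.fit_transform(X)

km = KMeans(n_clusters=4, n_init=10, random_state=10).fit(X)

# One row per cluster, one column per original feature
print(km.cluster_centers_.shape)  # (4, 5)

# Project the centers with the already fitted PCA
centers_2d = pca.transform(km.cluster_centers_)
print(centers_2d.shape)  # (4, 2)
```

Plotting `centers_2d` on top of `points_2d` then places each marker in the same coordinate system as the samples.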

Detailed Explanation of the Plotting Code

The original code below comes from 【机器学习】菜菜的sklearn课堂06 - 聚类算法与KMeans:

    import numpy as np
    import matplotlib.cm as cm
    import matplotlib.pyplot as plt
    from sklearn.metrics import silhouette_score, silhouette_samples
    from sklearn.datasets import make_blobs
    from sklearn.cluster import KMeans

    X, y = make_blobs(n_samples=500, n_features=2, centers=4, random_state=1)

    for n_clusters in [2, 3, 4, 5, 6, 7]:
        # Create a figure with two subplots in one row
        fig, (ax1, ax2) = plt.subplots(1, 2)
        # Figure size
        fig.set_size_inches(18, 7)
        ax1.set_xlim([-0.1, 1])
        ax1.set_ylim([0, X.shape[0] + (n_clusters + 1) * 10])

        # Cluster the data
        clusterer = KMeans(n_clusters=n_clusters, random_state=10).fit(X)
        cluster_labels = clusterer.labels_

        # Average silhouette score over all samples
        silhouette_avg = silhouette_score(X, cluster_labels)
        print("For n_clusters = ", n_clusters,
              " the average silhouette_score is ", silhouette_avg)

        # silhouette_samples returns the silhouette coefficient of every
        # sample; these values are plotted along the x axis
        sample_silhouette_values = silhouette_samples(X, cluster_labels)

        # Starting position on the y axis
        y_lower = 10

        # Loop over the clusters
        for i in range(n_clusters):
            # Extract the silhouette coefficients of the samples in cluster i
            ith_cluster_silhouette_values = sample_silhouette_values[cluster_labels == i]
            # Sort them in place
            ith_cluster_silhouette_values.sort()
            # Number of samples in this cluster
            size_cluster_i = ith_cluster_silhouette_values.shape[0]
            # Upper end of this cluster's band on the y axis
            y_upper = y_lower + size_cluster_i
            # cm.nipy_spectral maps a float in [0, 1] to a color
            color = cm.nipy_spectral(float(i) / n_clusters)
            ax1.fill_betweenx(np.arange(y_lower, y_upper),
                              ith_cluster_silhouette_values,
                              facecolor=color, alpha=0.7)
            # Label each band with its cluster number
            ax1.text(-0.05, y_lower + 0.5 * size_cluster_i, str(i))
            # Starting position on the y axis for the next cluster
            y_lower = y_upper + 10

        # Title and axis labels for the first plot
        ax1.set_title("The silhouette plot for the various clusters")
        ax1.set_xlabel("The silhouette coefficient values")
        ax1.set_ylabel("Cluster label")
        # Draw the average silhouette score of the whole data set as a dashed line
        ax1.axvline(x=silhouette_avg, color='red', linestyle="--")
        # Hide the y-axis ticks
        ax1.set_yticks([])
        # Set the x-axis ticks
        ax1.set_xticks([-0.1, 0, 0.2, 0.4, 0.6, 0.8, 1])

        # Second plot: the clustered samples
        colors = cm.nipy_spectral(cluster_labels.astype(float) / n_clusters)
        ax2.scatter(X[:, 0], X[:, 1], marker='o', s=8, c=colors)
        # Add the cluster centroids to the plot
        centers = clusterer.cluster_centers_
        ax2.scatter(centers[:, 0], centers[:, 1], marker='x', c='red', alpha=1, s=200)

        # Title and axis labels for the second plot
        ax2.set_title("The visualization of the clustered data")
        ax2.set_xlabel("Feature space for the 1st feature")
        ax2.set_ylabel("Feature space for the 2nd feature")

        # Title for the whole figure
        plt.suptitle("Silhouette analysis for KMeans clustering on sample data "
                     "with n_clusters = {}".format(n_clusters),
                     fontsize=14, fontweight="bold")
        plt.show()
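To make clear what `silhouette_samples` puts on the x axis, the per-sample value can be reproduced by hand: for sample i, a is the mean distance to the other members of its own cluster, b is the smallest mean distance to the members of any other cluster, and s(i) = (b - a) / max(a, b). The sketch below is a plain reference version under those definitions. `manual_silhouette` is a hypothetical helper of mine, it builds the full distance matrix and is only suitable for small data sets, and the `make_blobs` data is illustrative.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import pairwise_distances, silhouette_samples


def manual_silhouette(X, labels):
    """Hypothetical reference version of s(i) = (b - a) / max(a, b)."""
    dist = pairwise_distances(X)  # Euclidean distance by default
    unique = np.unique(labels)
    s = np.zeros(len(X))
    for i in range(len(X)):
        own = labels == labels[i]
        n_own = own.sum()
        if n_own == 1:
            continue  # a cluster with a single sample gets s(i) = 0
        # a: mean distance to the other members of the same cluster
        # (the distance of i to itself is 0, hence the n_own - 1)
        a = dist[i, own].sum() / (n_own - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(dist[i, labels == c].mean() for c in unique if c != labels[i])
        s[i] = (b - a) / max(a, b)
    return s


X, _ = make_blobs(n_samples=200, n_features=2, centers=3, random_state=1)
labels = KMeans(n_clusters=3, n_init=10, random_state=10).fit_predict(X)

manual = manual_silhouette(X, labels)
reference = silhouette_samples(X, labels)
print("largest difference from silhouette_samples:", np.abs(manual - reference).max())
```

Values near 1 mean a sample sits well inside its cluster, values near 0 mean it lies between two clusters, and negative values suggest it may have been assigned to the wrong cluster.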

(End)
