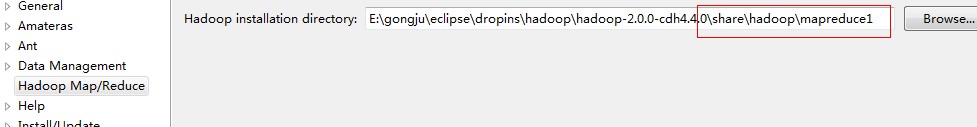

Hadoop 2.0 YARN Cloudera 4.4.0 WordCount Example

Nothing else is special; all of the required jars are shown here.

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class WordCount {

        // Mapper: splits each input line on whitespace and emits (token, 1).
        public static class TokenizerMapper
                extends Mapper<Object, Text, Text, IntWritable> {

            private final static IntWritable one = new IntWritable(1);
            private Text word = new Text();

            @Override
            public void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                StringTokenizer itr = new StringTokenizer(value.toString());
                while (itr.hasMoreTokens()) {
                    word.set(itr.nextToken());
                    context.write(word, one);
                }
            }
        }

        // Reducer (also used as the combiner): sums the counts for each token.
        public static class IntSumReducer
                extends Reducer<Text, IntWritable, Text, IntWritable> {

            private IntWritable result = new IntWritable();

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int sum = 0;
                for (IntWritable val : values) {
                    sum += val.get();
                }
                result.set(sum);
                context.write(key, result);
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Strip generic Hadoop options (-D, -fs, ...) and keep the job's own arguments.
            String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: wordcount <in> <out>");
                System.exit(2);
            }
            Job job = new Job(conf, "word count");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(TokenizerMapper.class);
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
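
To see what the mapper and reducer compute without a cluster, the same logic can be approximated in plain Java. This is only a rough sketch, not part of the original job: the class name `LocalWordCount` and the sample text are made up for illustration, and a `TreeMap` stands in for the sorted reducer output (the ordering should match for ASCII tokens, which is an assumption here).

```java
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical helper, not part of the Hadoop job: runs the same
// tokenize-and-sum logic in a single process.
public class LocalWordCount {

    // Splits the text on whitespace (like TokenizerMapper) and sums the
    // occurrences of each token (like IntSumReducer).
    public static Map<String, Integer> count(String text) {
        // TreeMap keeps tokens in sorted key order.
        Map<String, Integer> counts = new TreeMap<String, Integer>();
        StringTokenizer itr = new StringTokenizer(text);
        while (itr.hasMoreTokens()) {
            String token = itr.nextToken();
            Integer previous = counts.get(token);
            counts.put(token, previous == null ? 1 : previous + 1);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample in the style of a shell profile.
        String sample = "if [ -d /etc/profile.d ]; then\n"
                + "  umask 022\n"
                + "fi\n"
                + "if [ -f /etc/bash.bashrc ]; then";
        for (Map.Entry<String, Integer> e : count(sample).entrySet()) {
            // Token, tab, count: the same layout the job's text output uses.
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

Because `StringTokenizer` splits only on whitespace, punctuation stays attached to the tokens, which is why strings such as `];` are counted as words of their own.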

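Export the project as a JAR and upload it to the cluster. (I could not figure out how to run the job directly from Eclipse with this version, so uploading was the only option.)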

./hadoop jar ../etc/hadoop/WordCount.jar /user/hadoop/input /user/hadoop/output

The input directory contains the etc/profile file from yard02.

Result:

    !=	1
    "$BASH"	2
    "$PS1"	1
    "/bin/sh"	1
    "`id	1
    #	6
    $i	2
    &&	1
    '	3
    (bash(1),	1
    (sh(1))	1
    -d	1
    -eq	1
    -f	1
    -r	1
    -u`"	1
    .	2
    ...).	1
    .profile	1
    /etc/bash.bashrc	2
    /etc/login.defs.	1
    /etc/profile.d	1
    /etc/profile.d/*.sh;	1
    /etc/profile:	1
    0	1
    Bourne	2
    CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH	1
    JAVA_HOME=/usr/local/jdk1.7.0_21	1
    JRE_HOME=/usr/local/jdk1.7.0_21/jre	1
    PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH	1
    PS1.	1
    PS1='#	1
    PS1='$	1
    PS1='\h:\w\$	1
    See	1
    The	2
    [	7
    ]	1
    ];	6
    already	1
    and	2
    ash(1),	1
    bash.bashrc	1
    by	1
    compatible	1
    default	2
    do	1
    done	1
    else	2
    export	4
    fi	6
    file	2
    for	2
    handled	1
    i	2
    if	6
    in	1
    is	1
    ksh(1),	1
    now	1
    pam_umask(8)	1
    pam_umask.	1
    sets	1
    shell	1
    shells	1
    system-wide	1
    the	2
    then	6
    umask	1
    unset	1
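
The job's text output stores one token and its count per line, separated by a tab, sorted by token rather than by frequency. To pick out the most frequent tokens, a small helper along these lines might do. It is only a sketch: the class `TopWords` and its methods are hypothetical names, and the sample lines are copied from the result above instead of being read from the real output file.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

// Hypothetical post-processing helper, not part of the original post.
public class TopWords {

    /** One (token, count) pair parsed from an output line. */
    public static final class Entry {
        public final String word;
        public final int count;

        Entry(String word, int count) {
            this.word = word;
            this.count = count;
        }
    }

    // Parses "token<TAB>count" lines; lines without a tab are skipped.
    public static List<Entry> parse(List<String> lines) {
        List<Entry> entries = new ArrayList<Entry>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue;
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            entries.add(new Entry(word, count));
        }
        return entries;
    }

    // Returns up to n entries with the highest counts. The sort is stable,
    // so ties keep their original (token-sorted) order.
    public static List<Entry> top(List<Entry> entries, int n) {
        List<Entry> sorted = new ArrayList<Entry>(entries);
        Collections.sort(sorted, new Comparator<Entry>() {
            @Override
            public int compare(Entry a, Entry b) {
                return Integer.compare(b.count, a.count);
            }
        });
        return sorted.subList(0, Math.min(n, sorted.size()));
    }

    public static void main(String[] args) {
        // A few lines taken from the result above.
        List<String> lines = Arrays.asList(
                "#\t6", "[\t7", "export\t4", "fi\t6", "umask\t1");
        for (Entry e : top(parse(lines), 3)) {
            System.out.println(e.word + "\t" + e.count);
        }
    }
}
```

In practice the lines would come from the job's output file after copying it out of HDFS; the file name depends on the job configuration, so it is left out here.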
